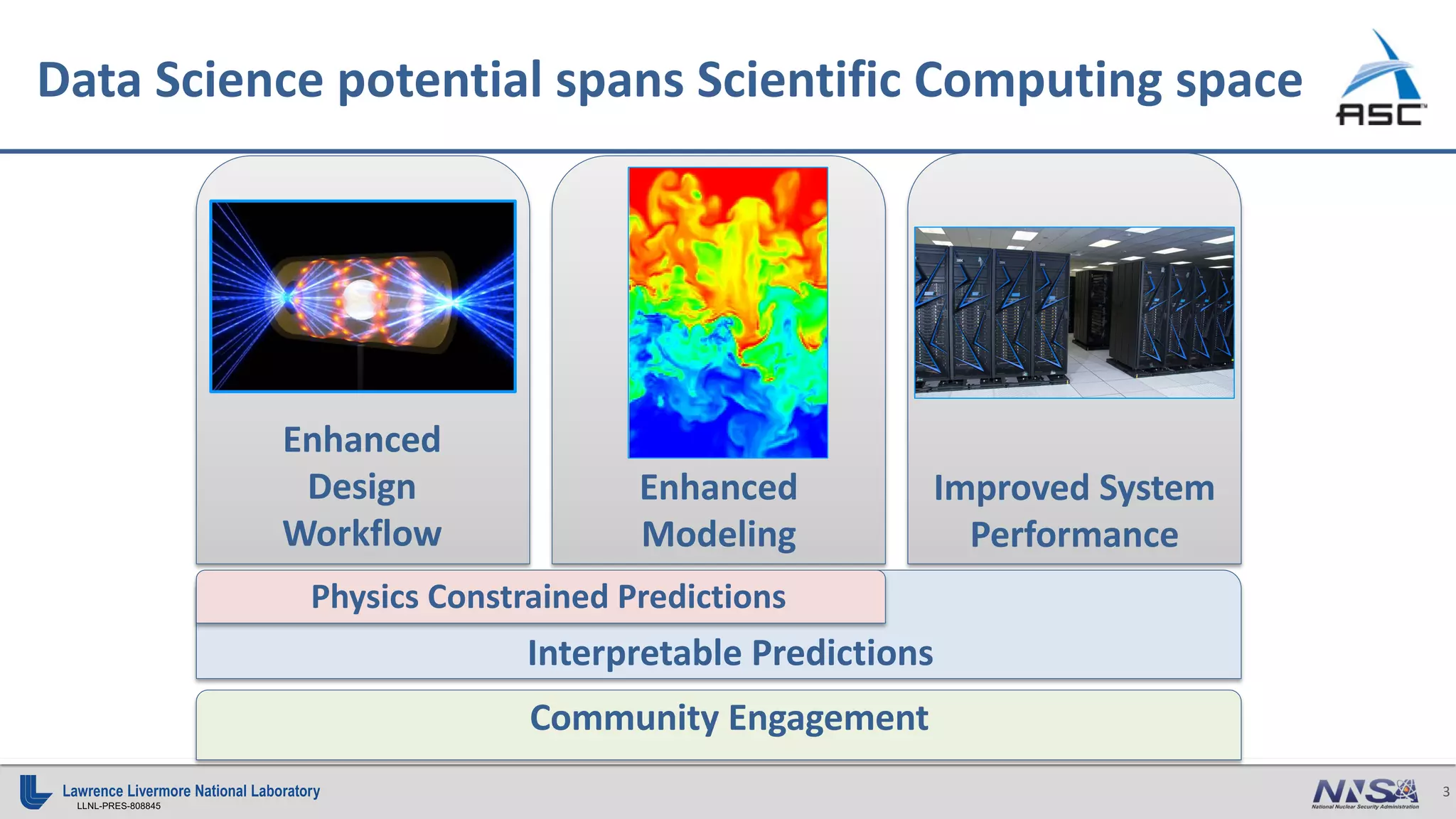

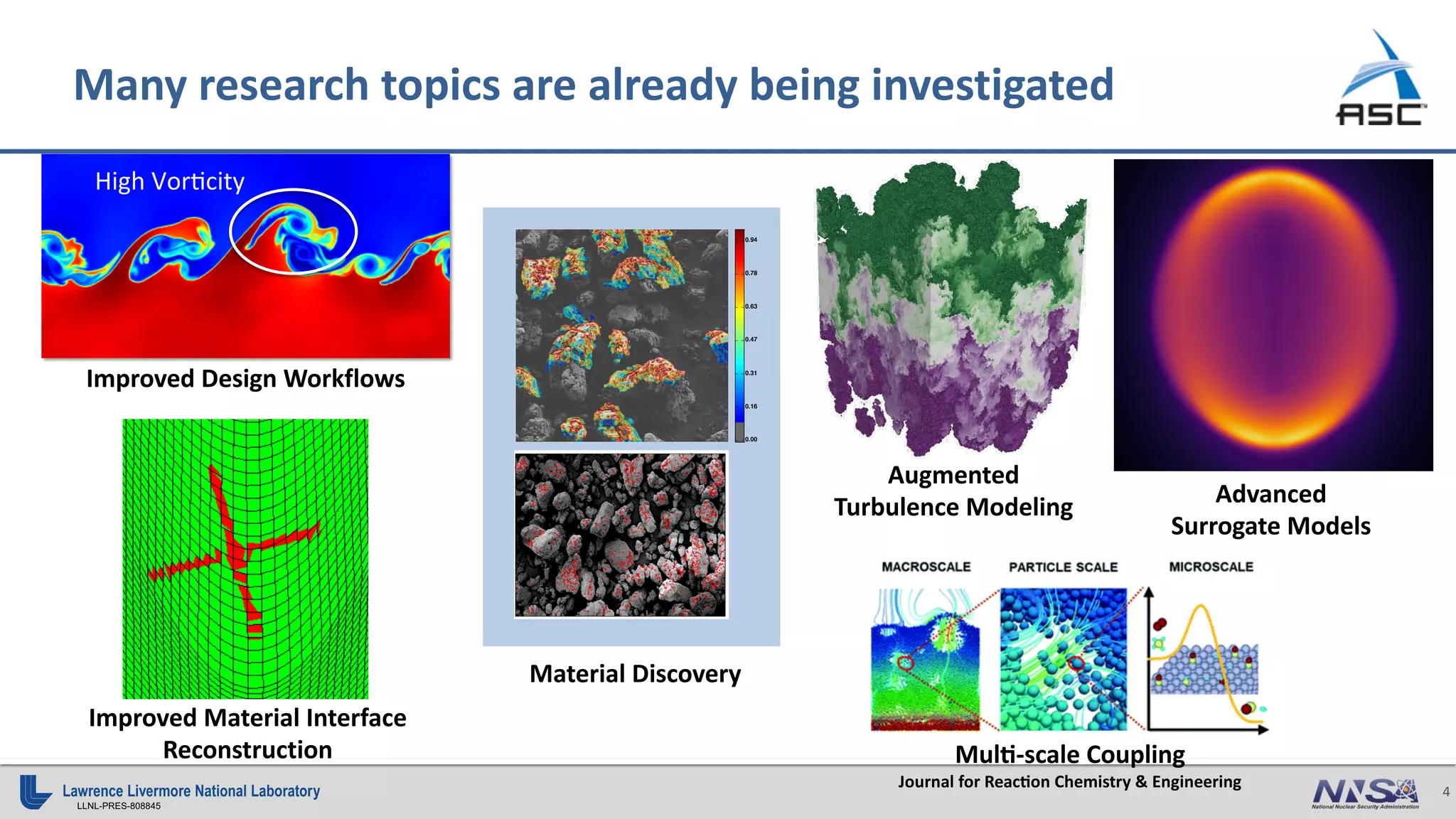

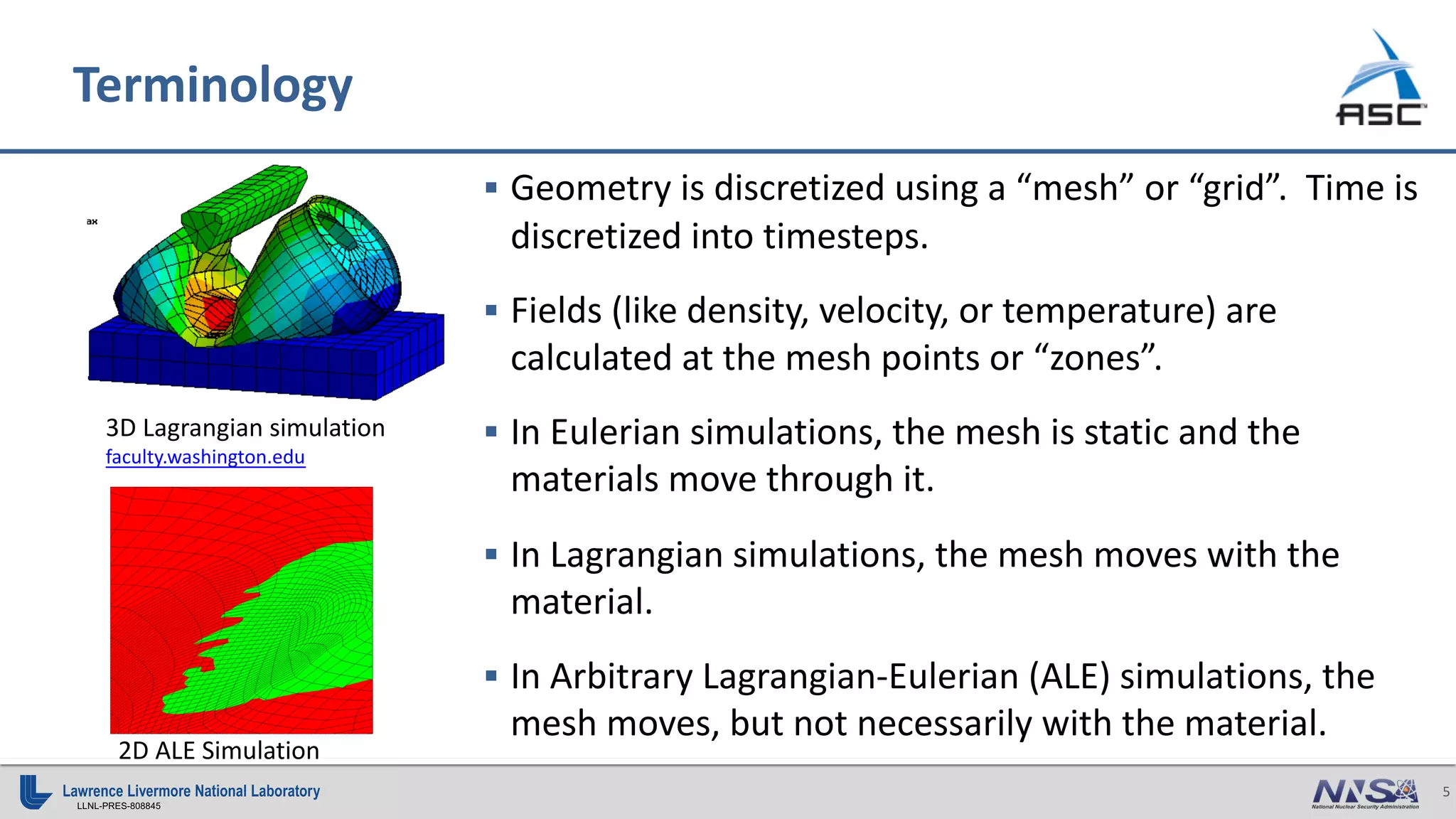

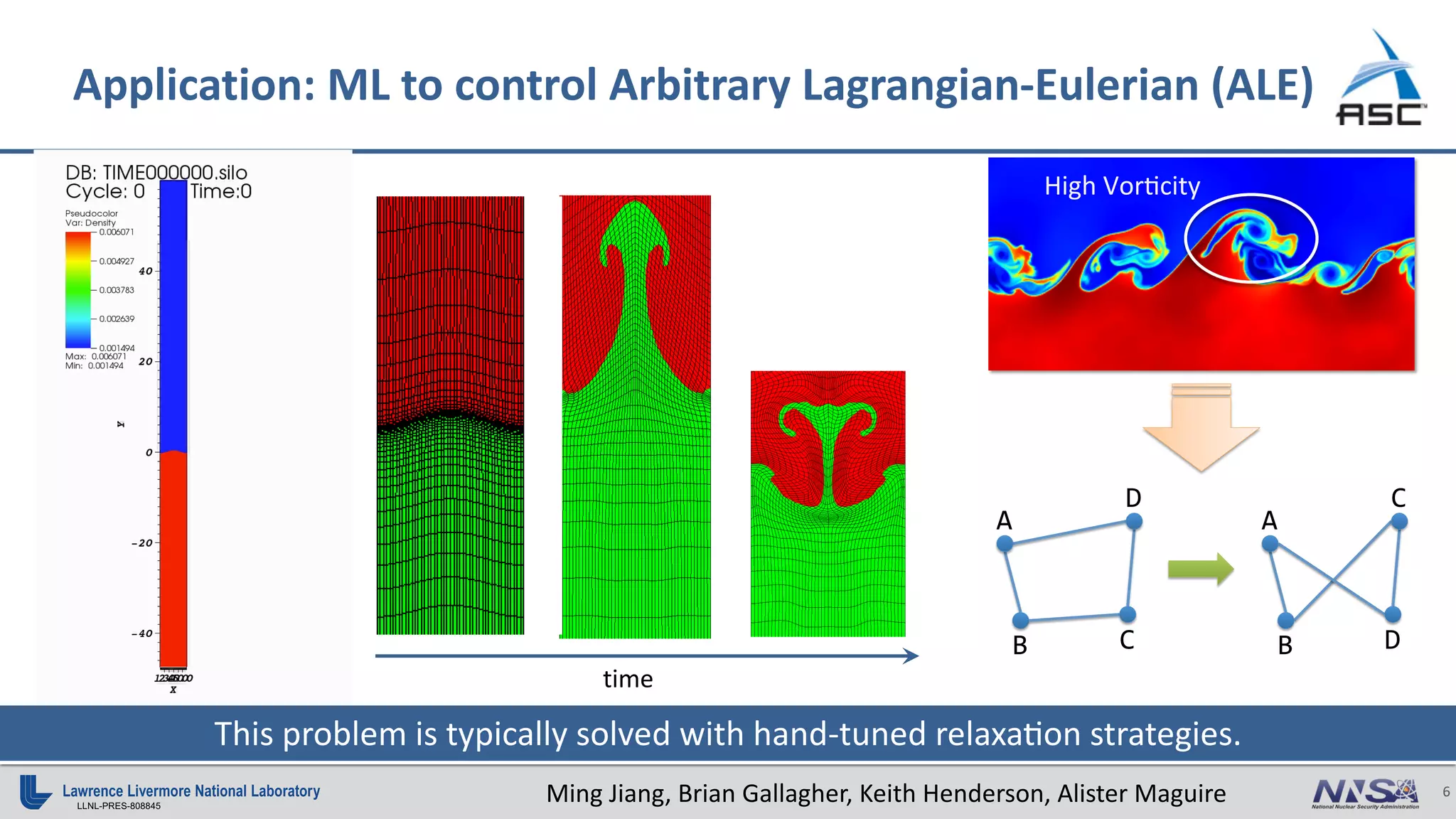

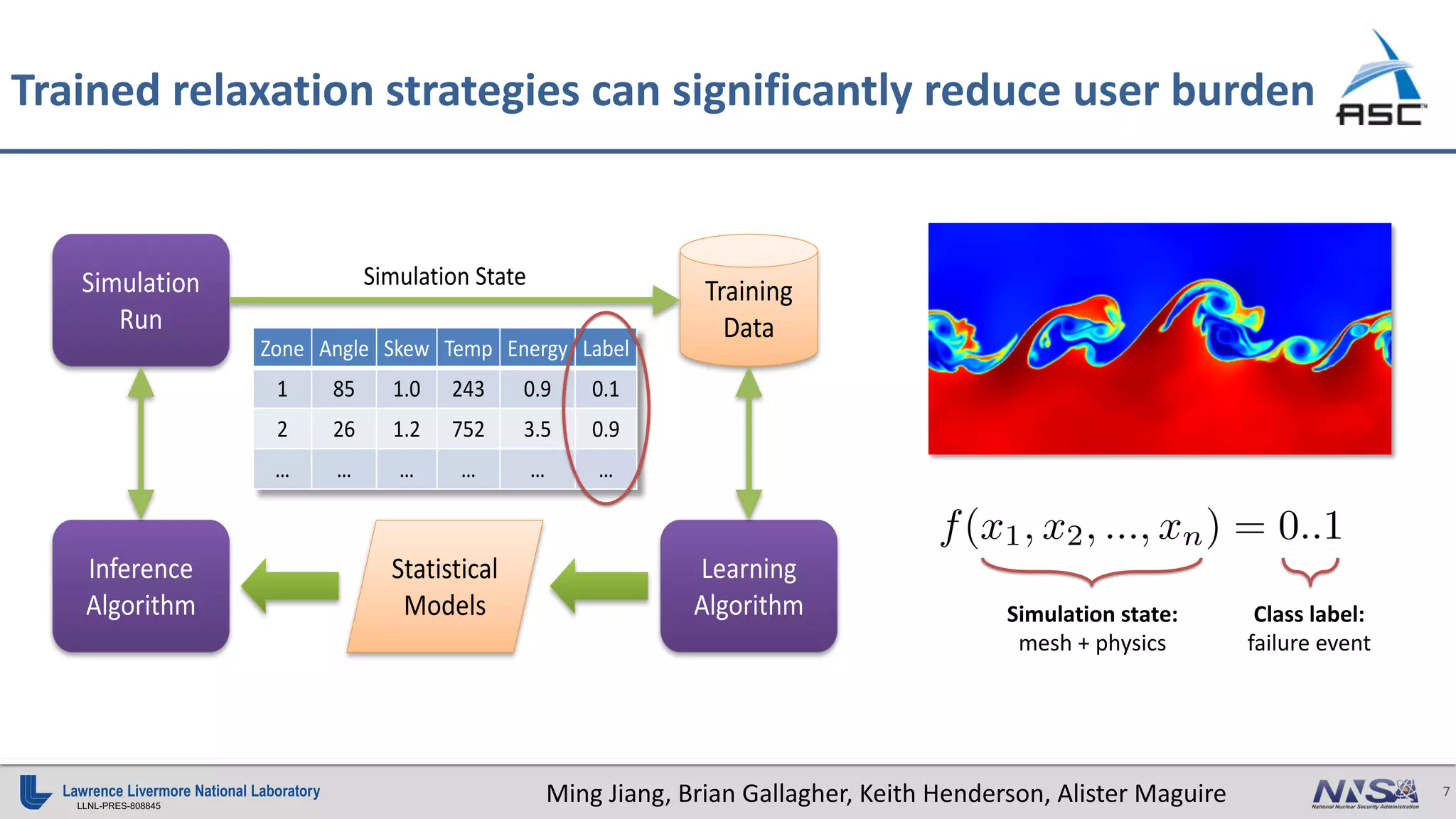

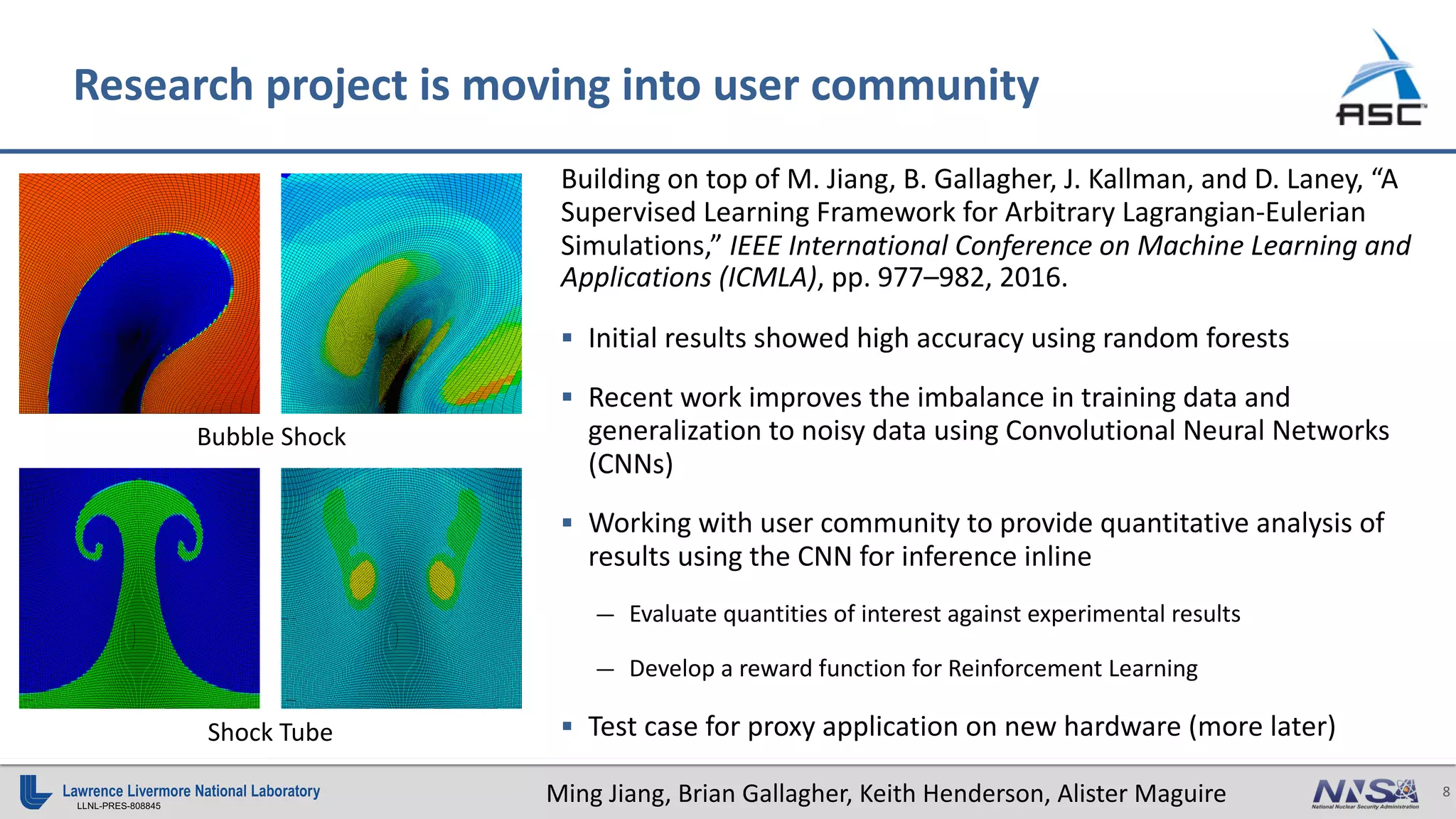

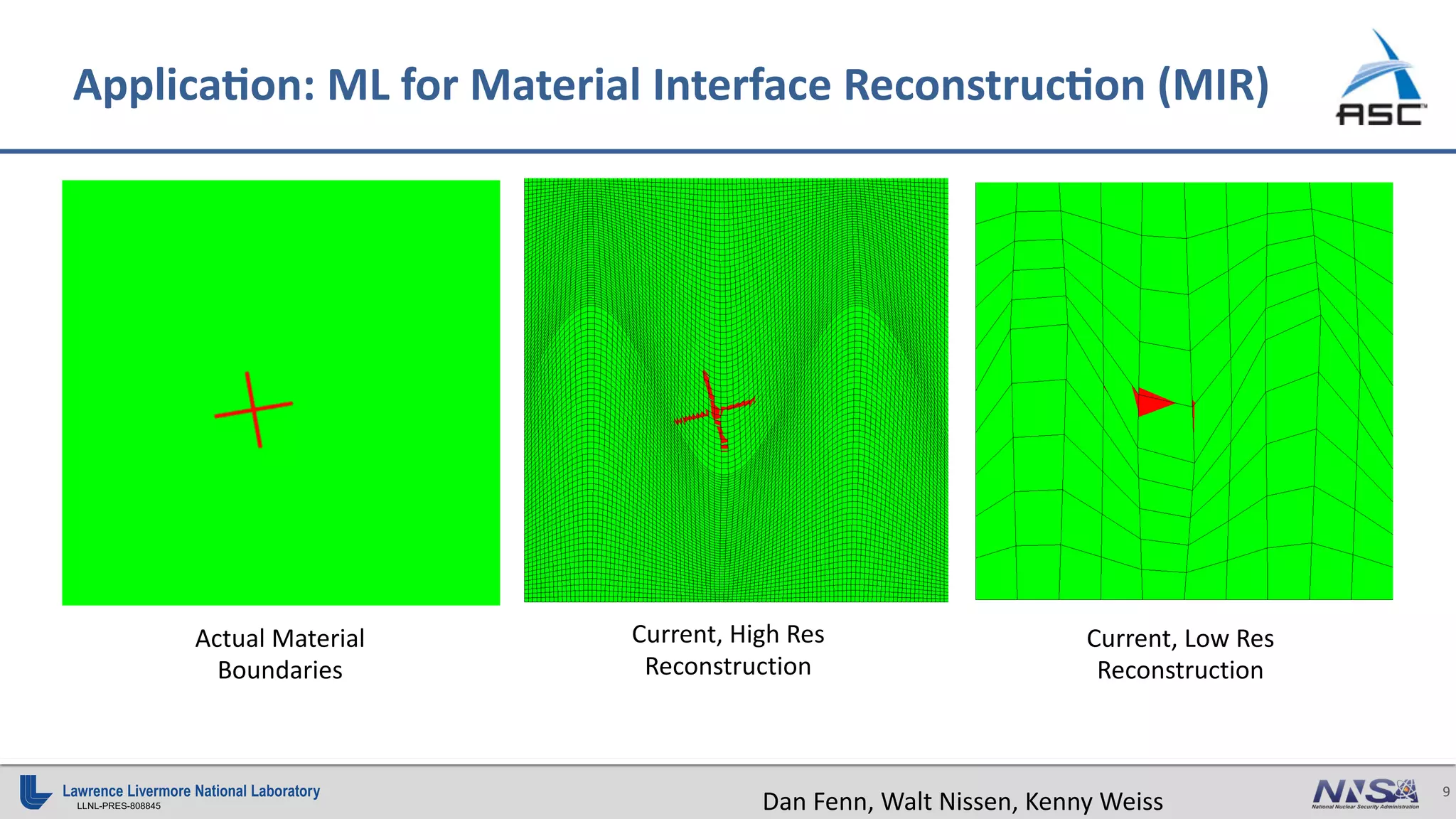

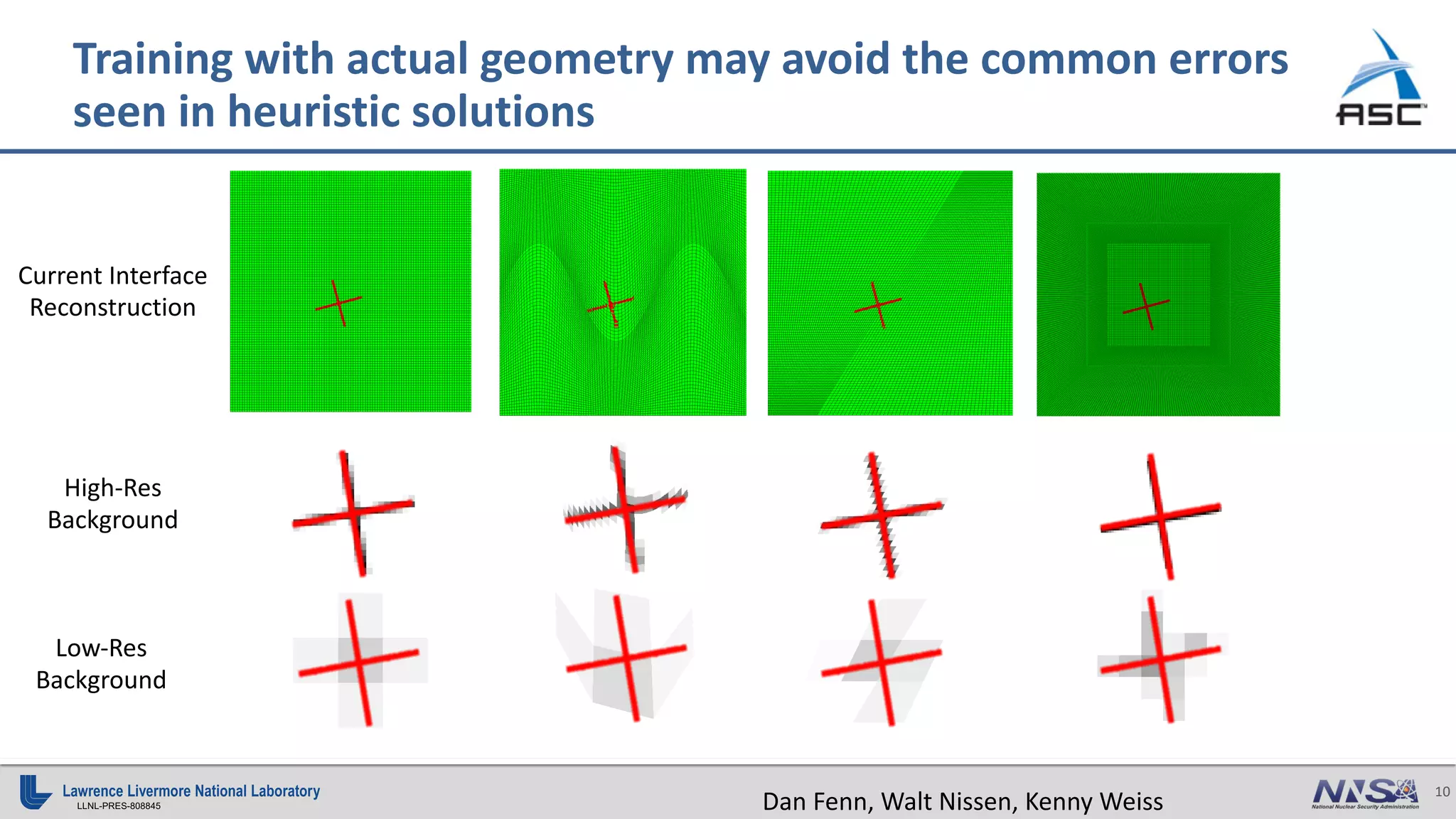

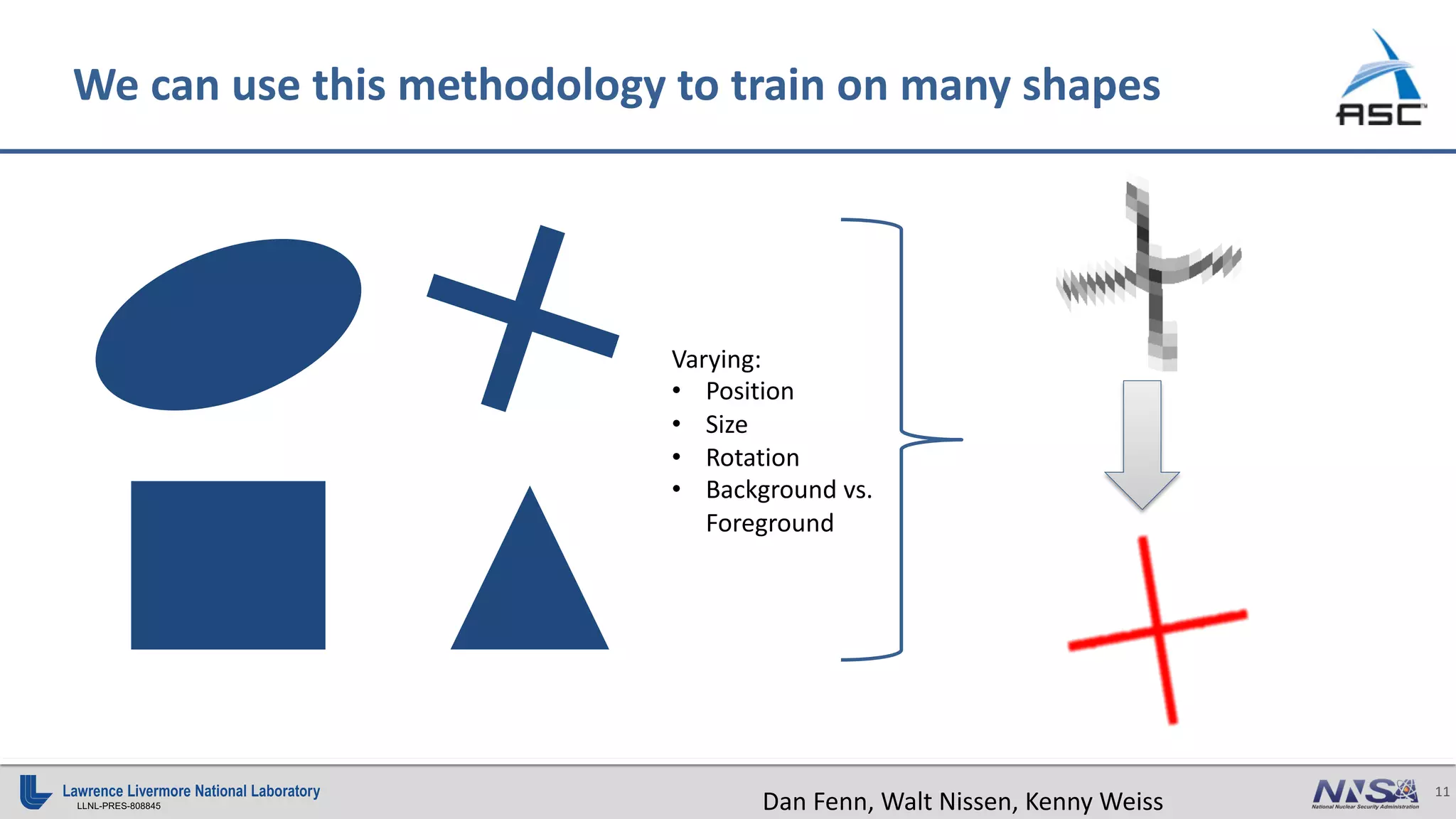

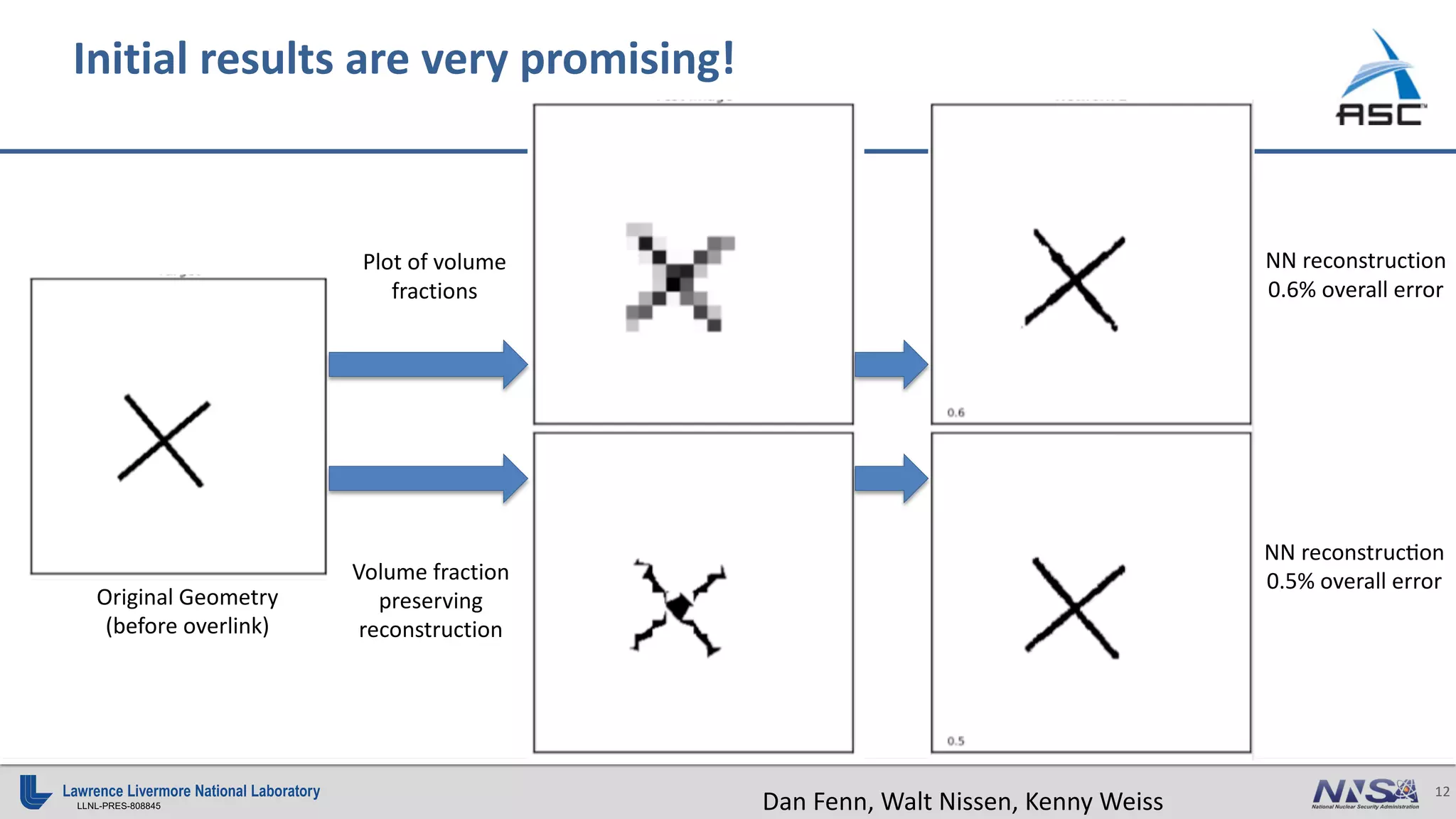

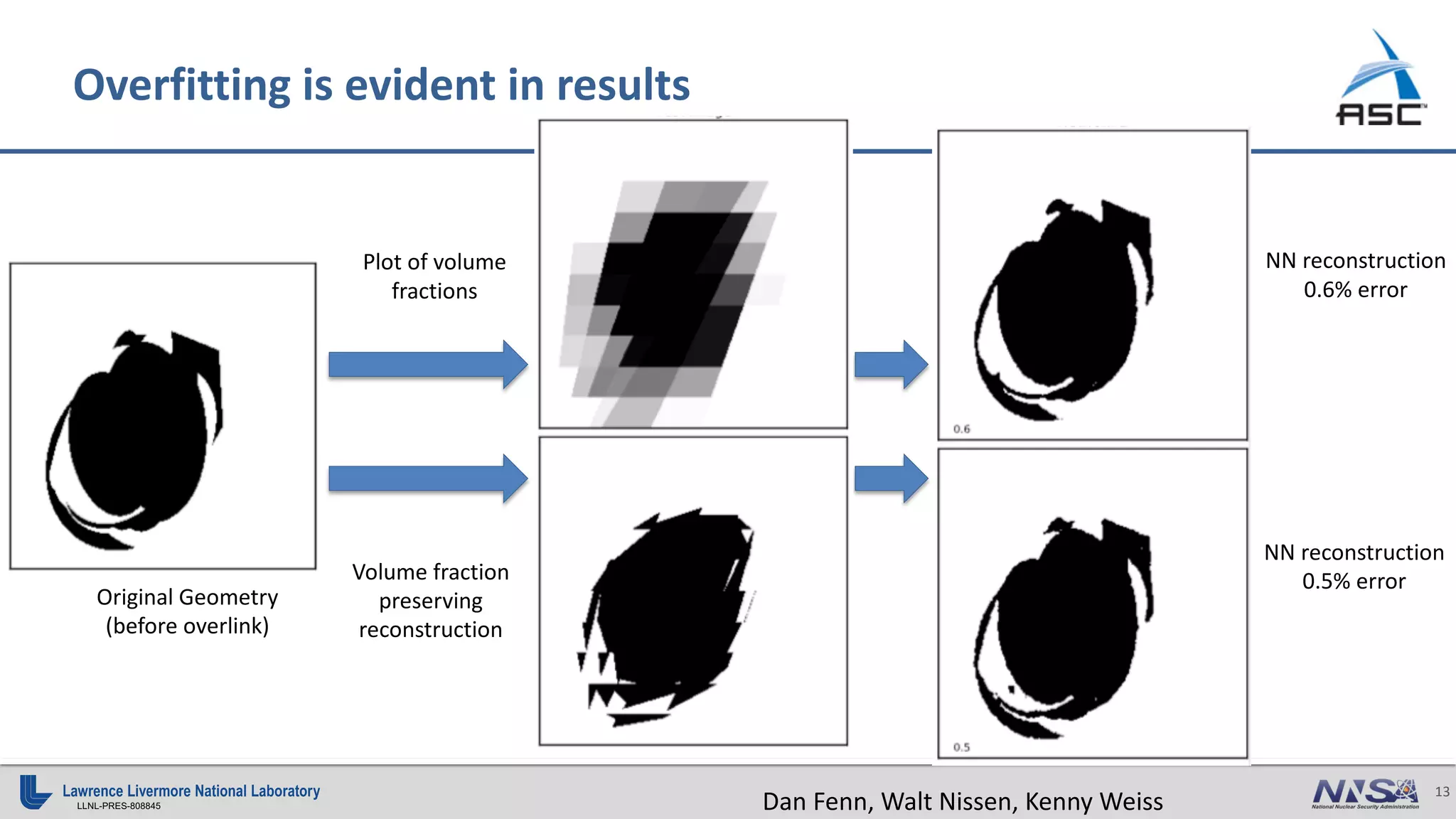

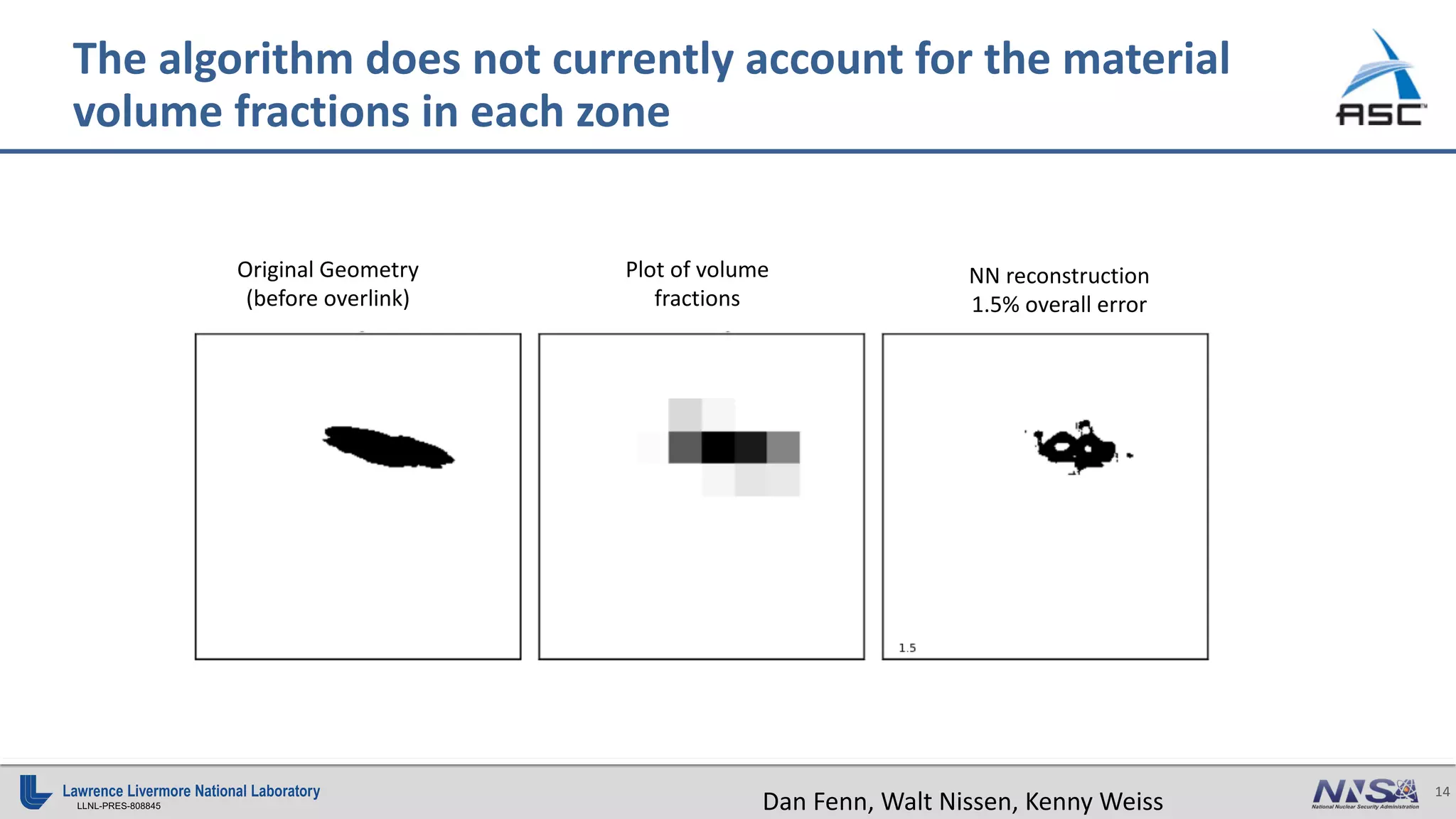

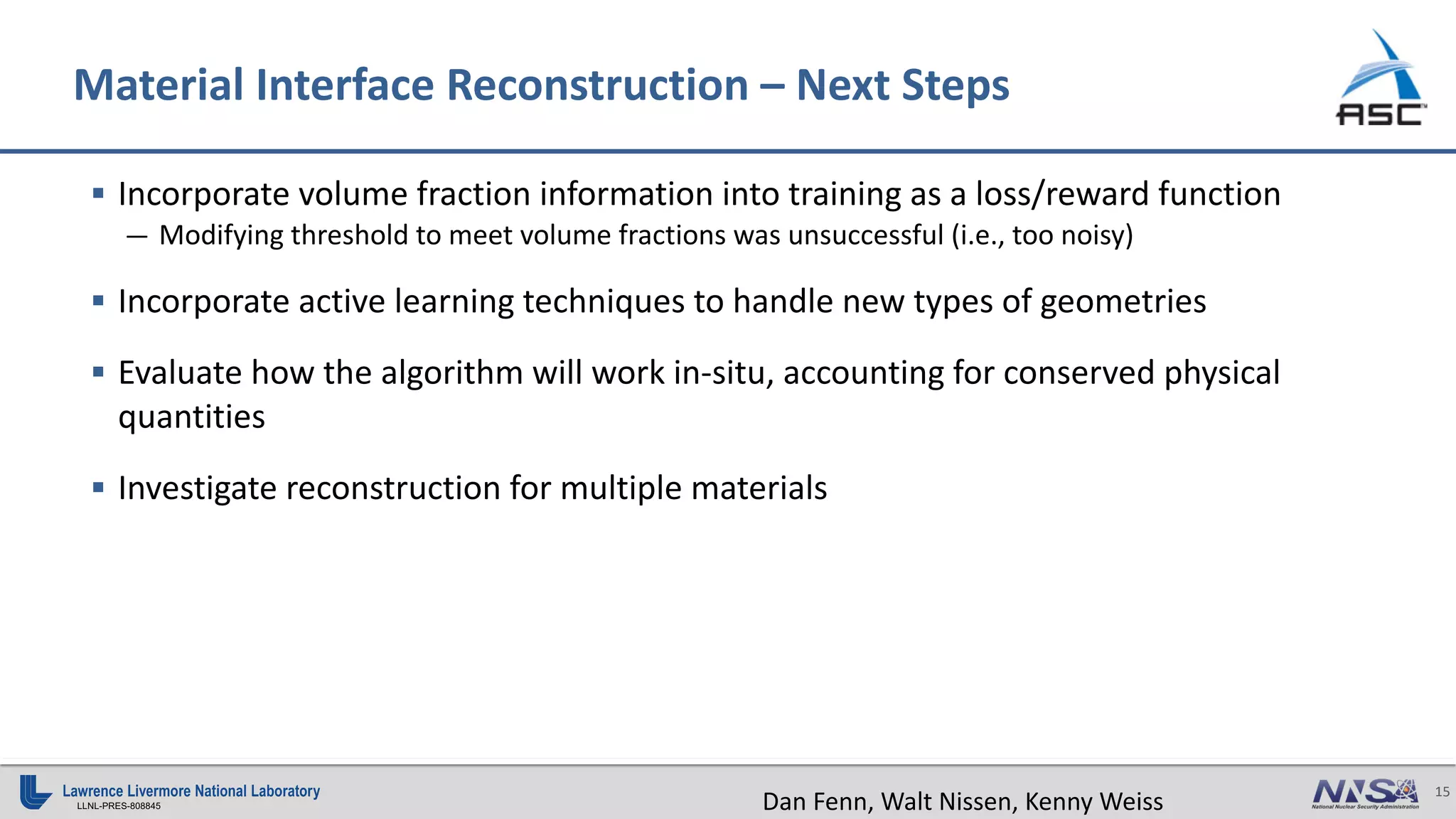

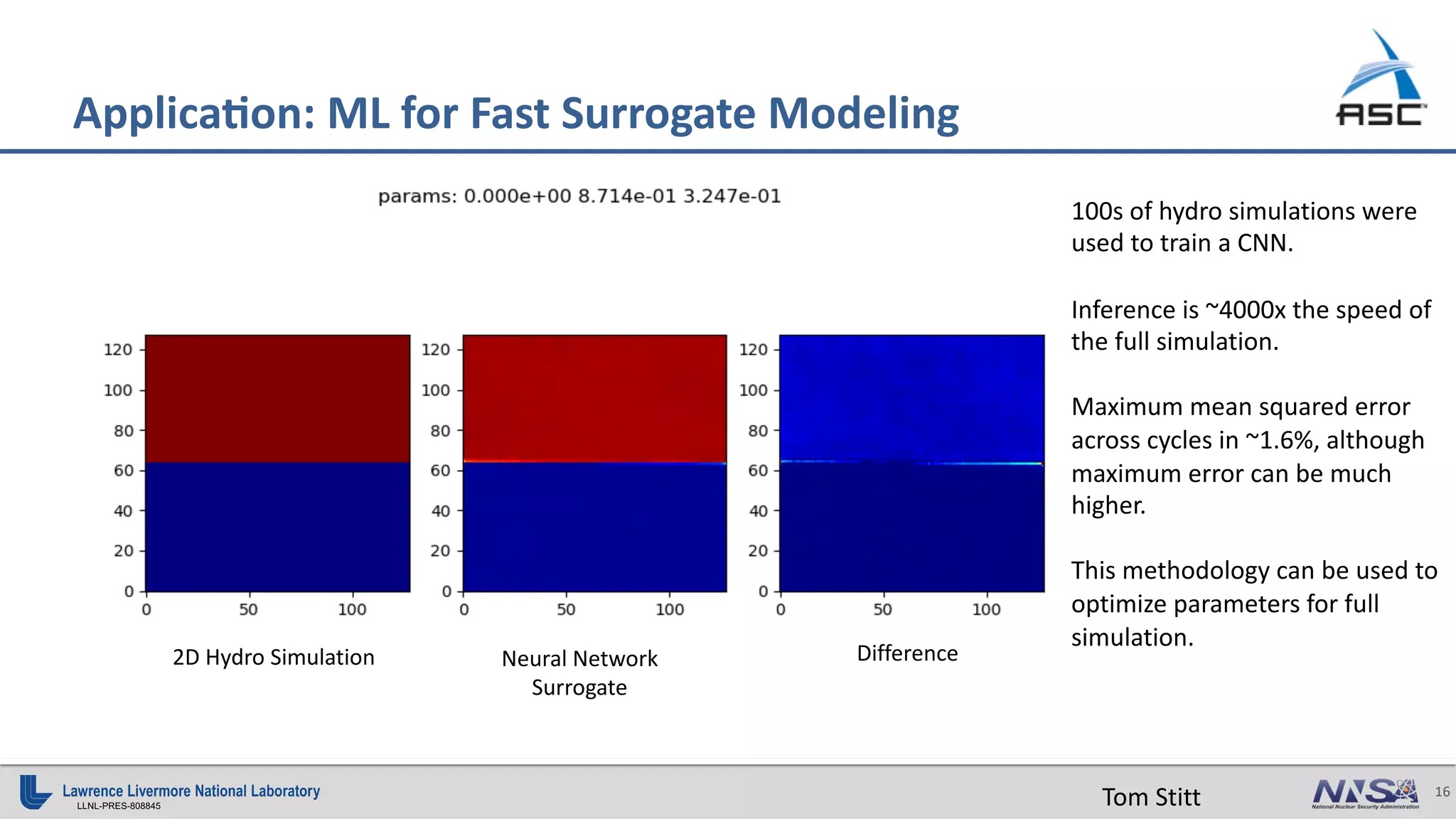

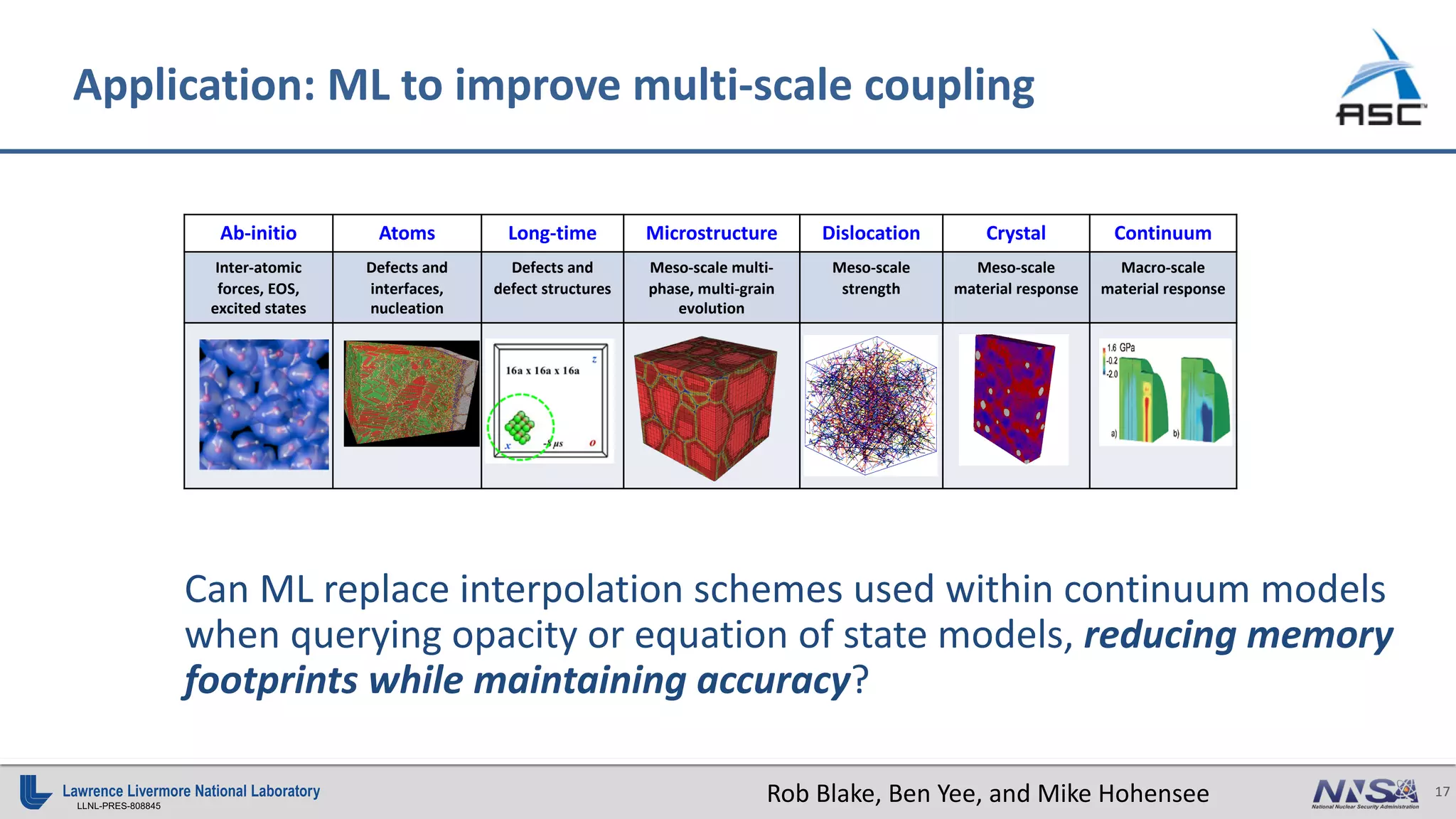

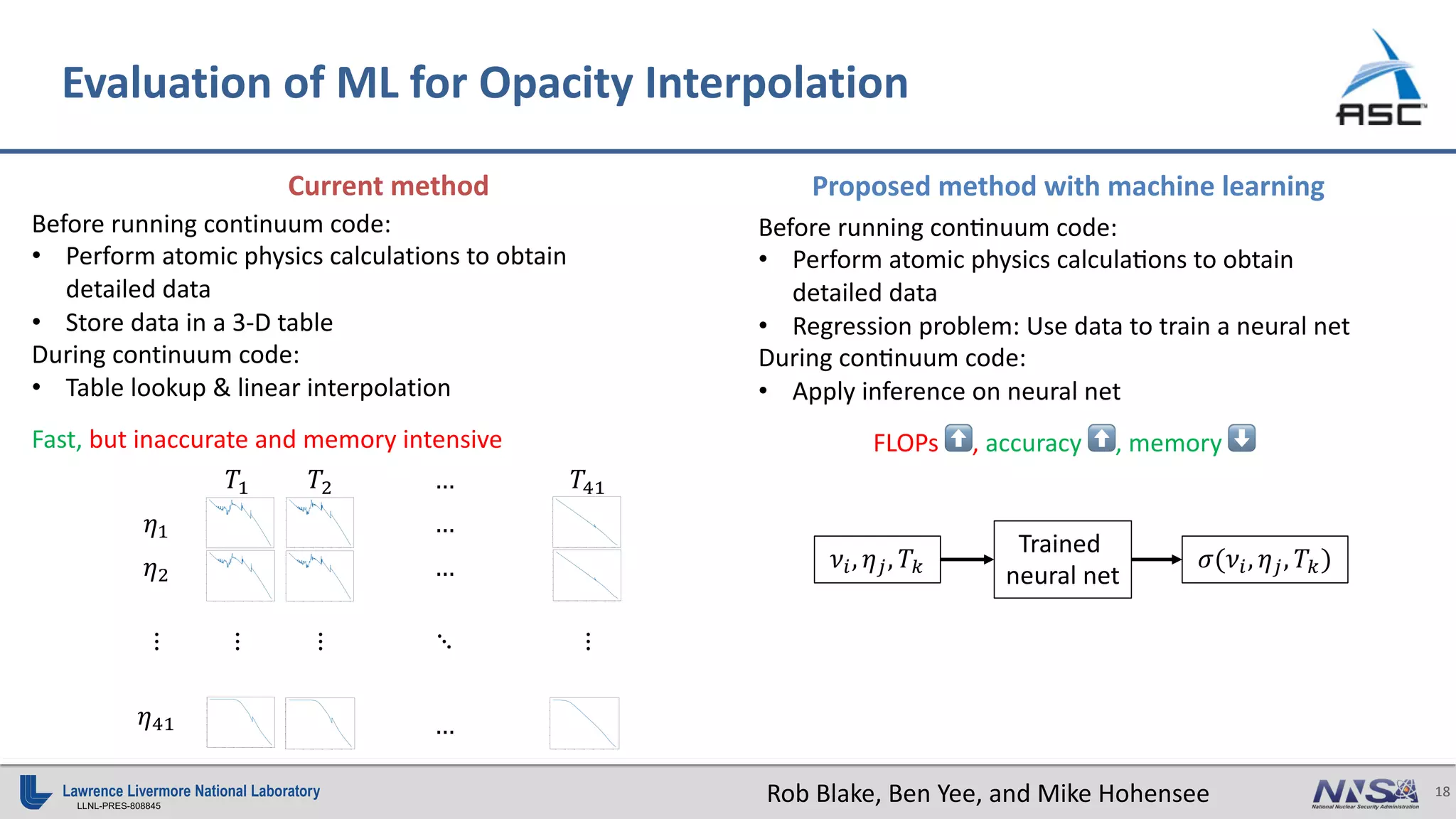

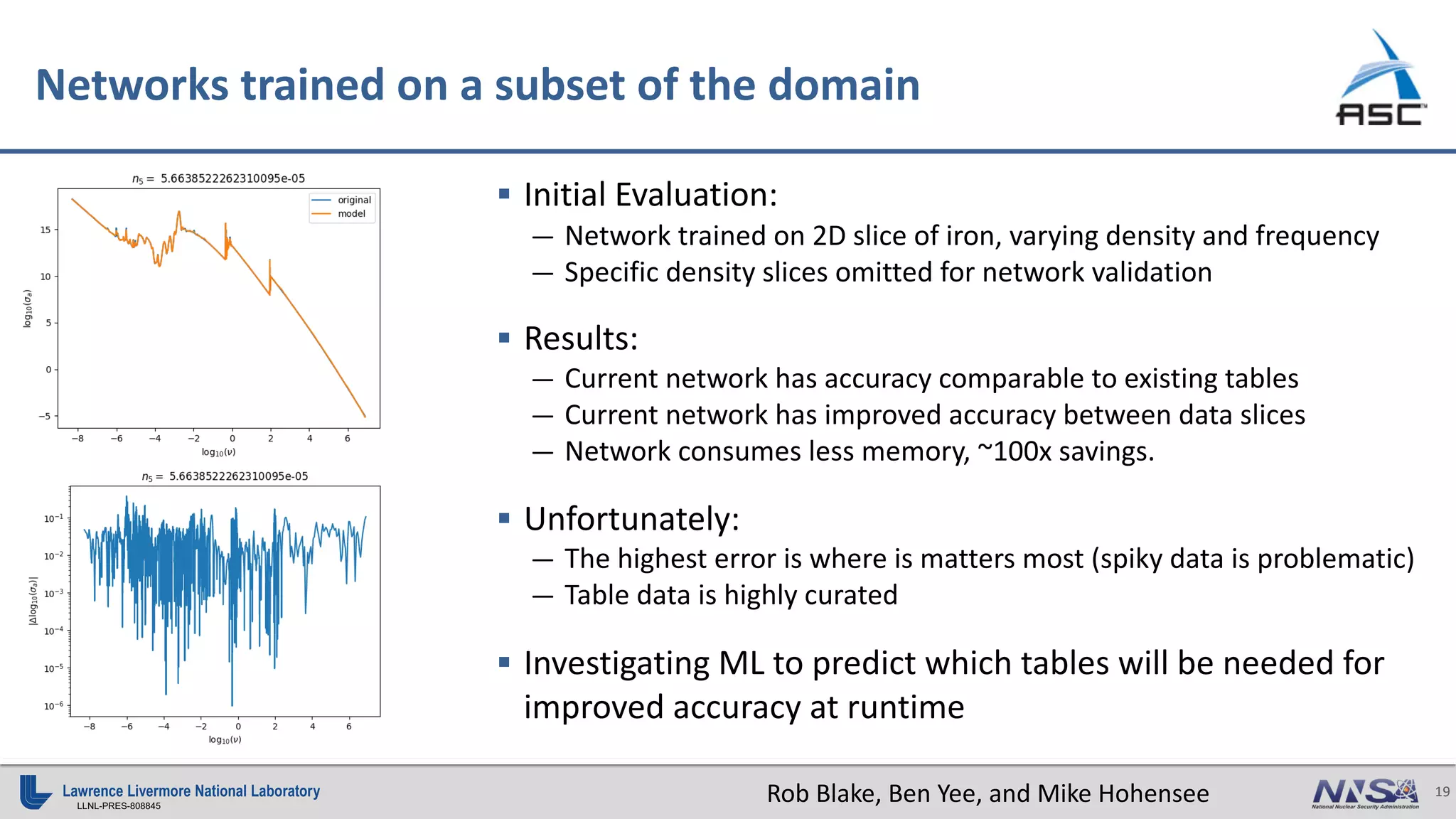

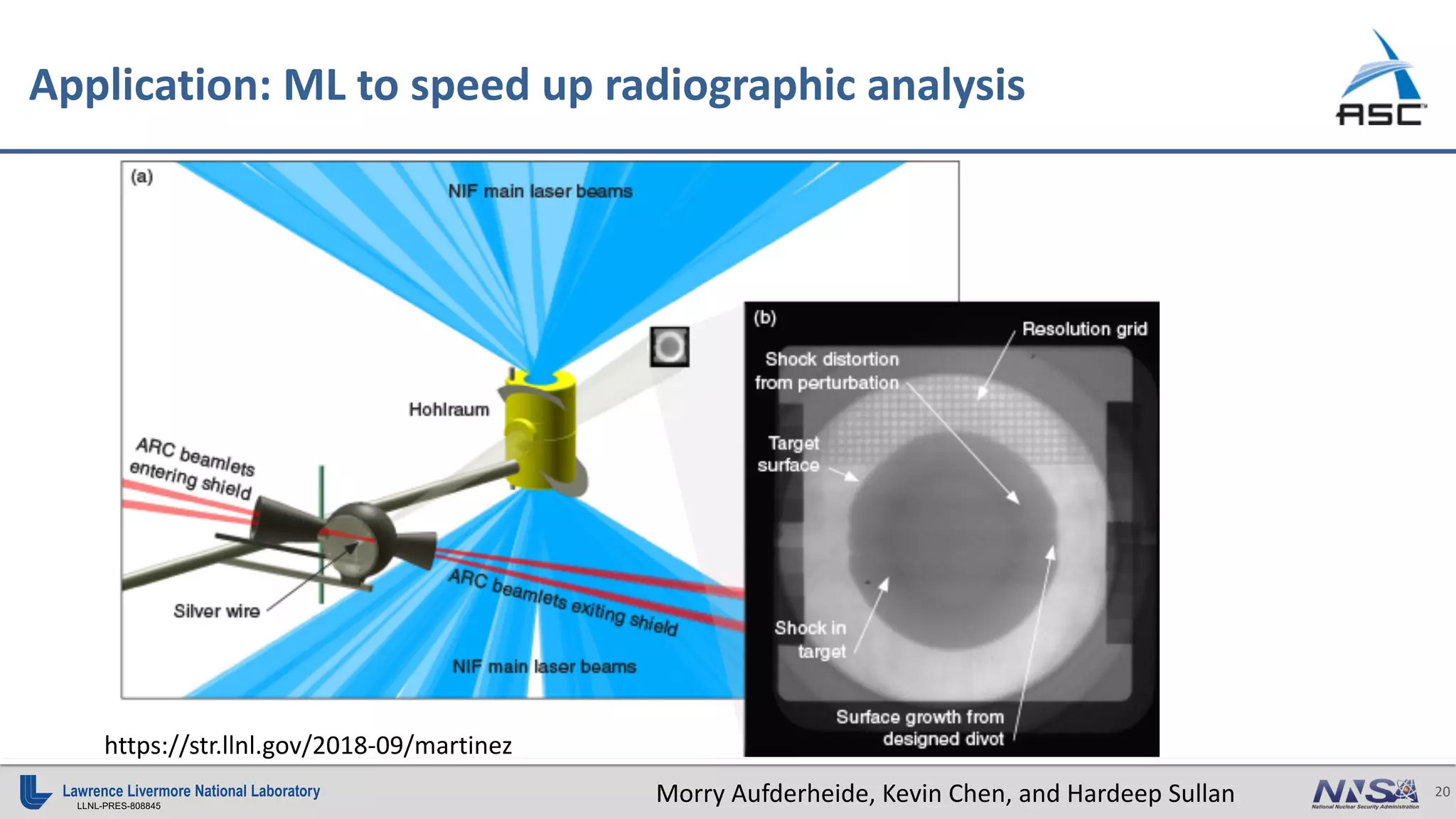

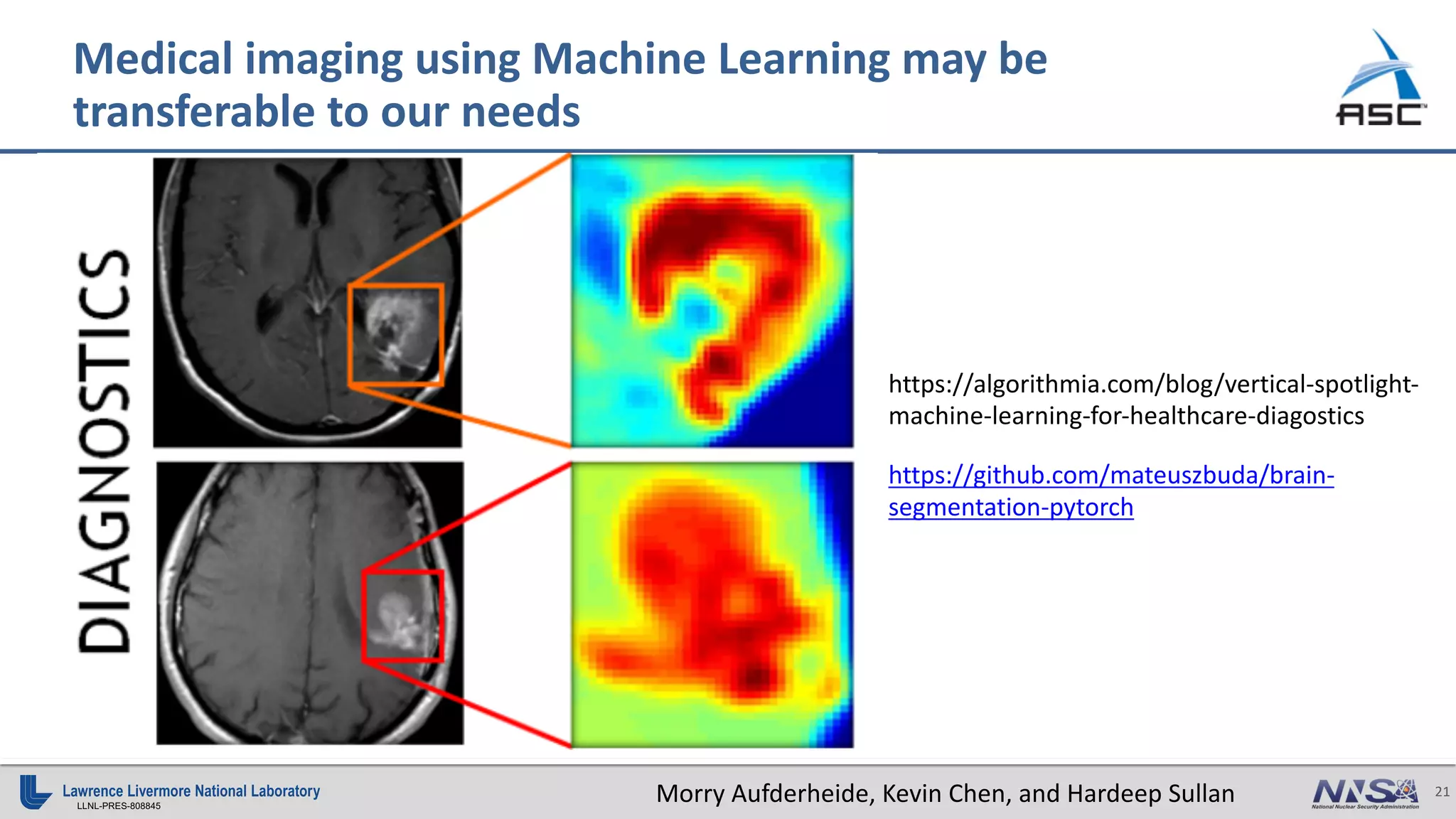

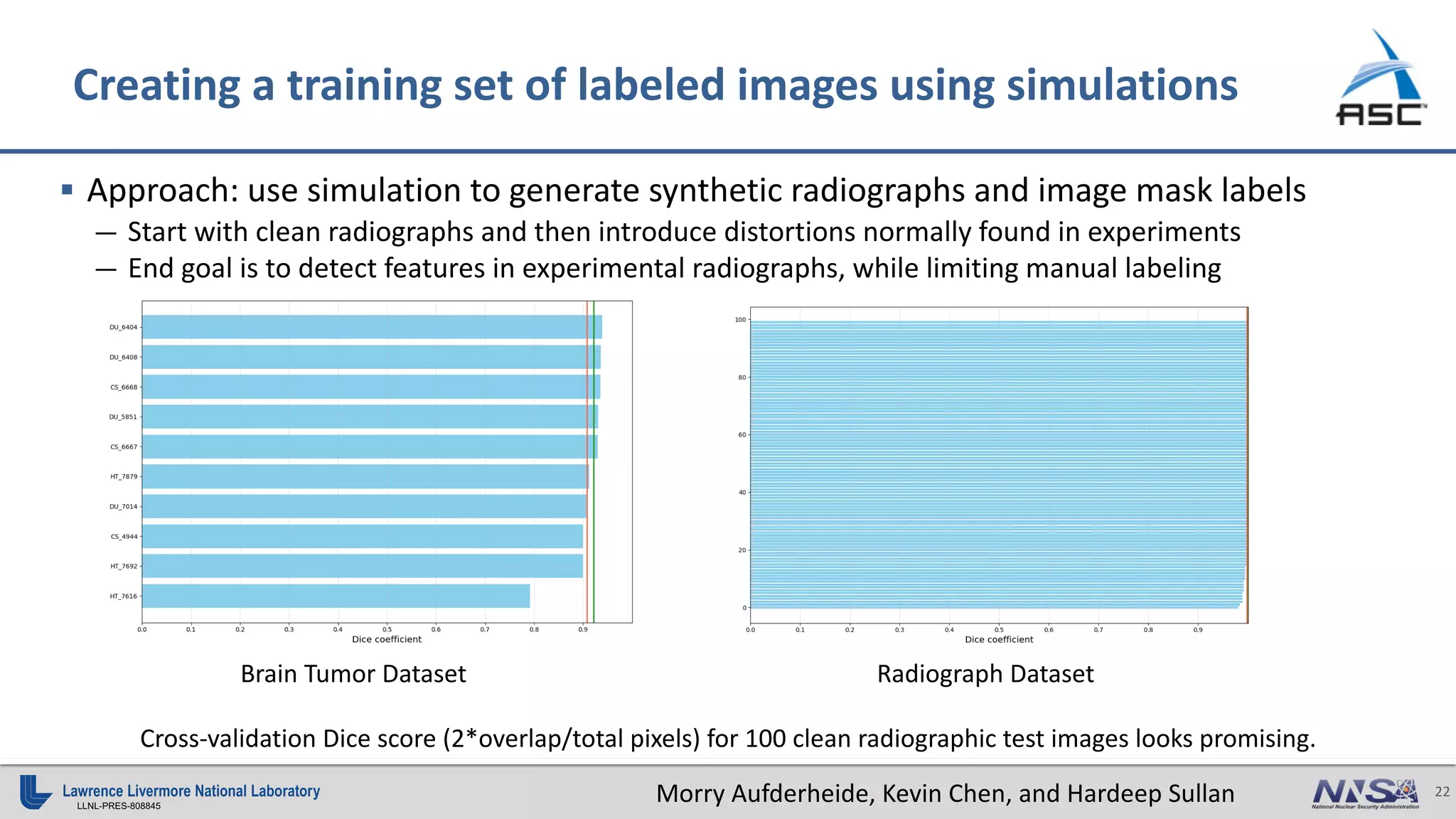

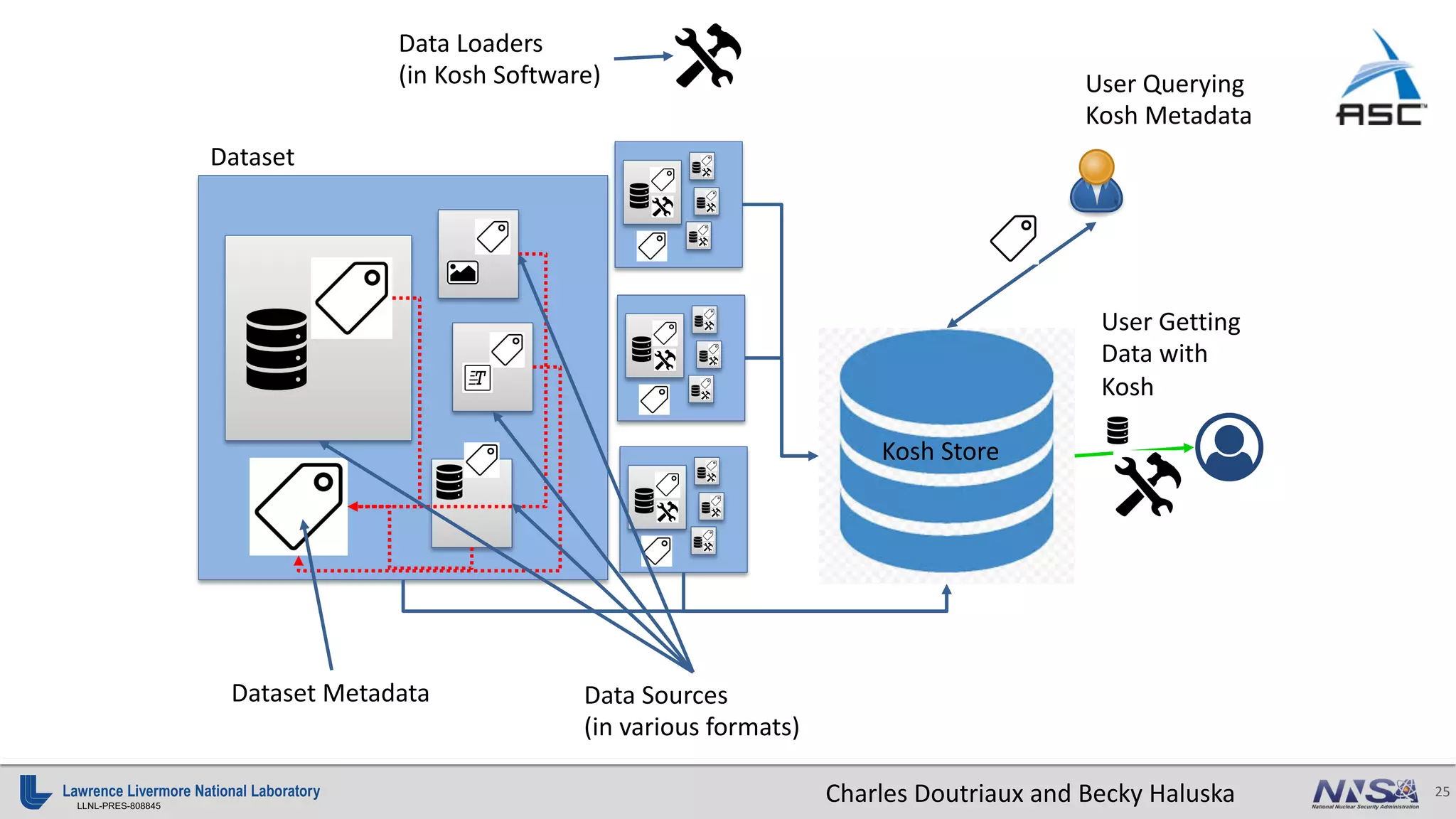

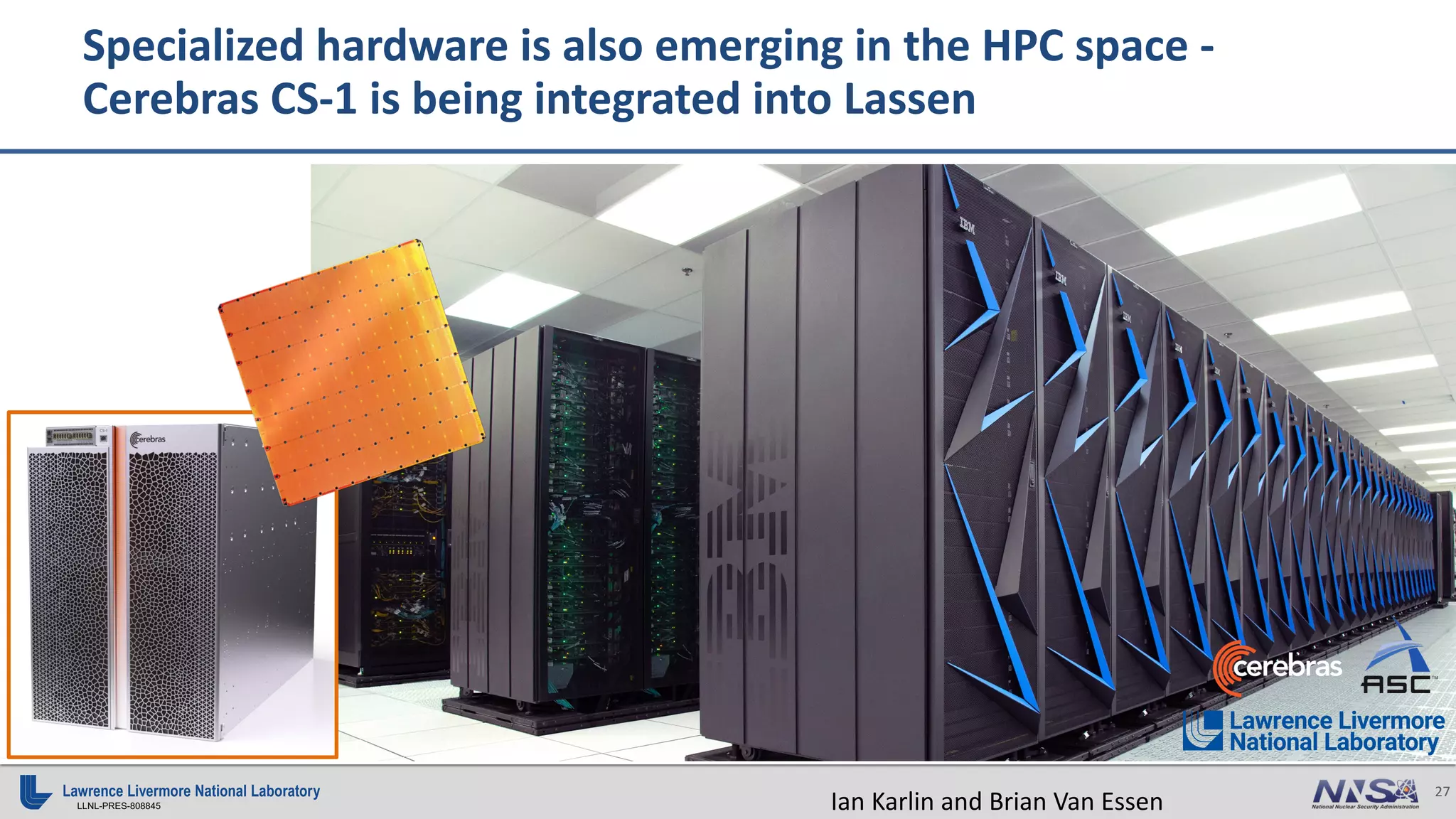

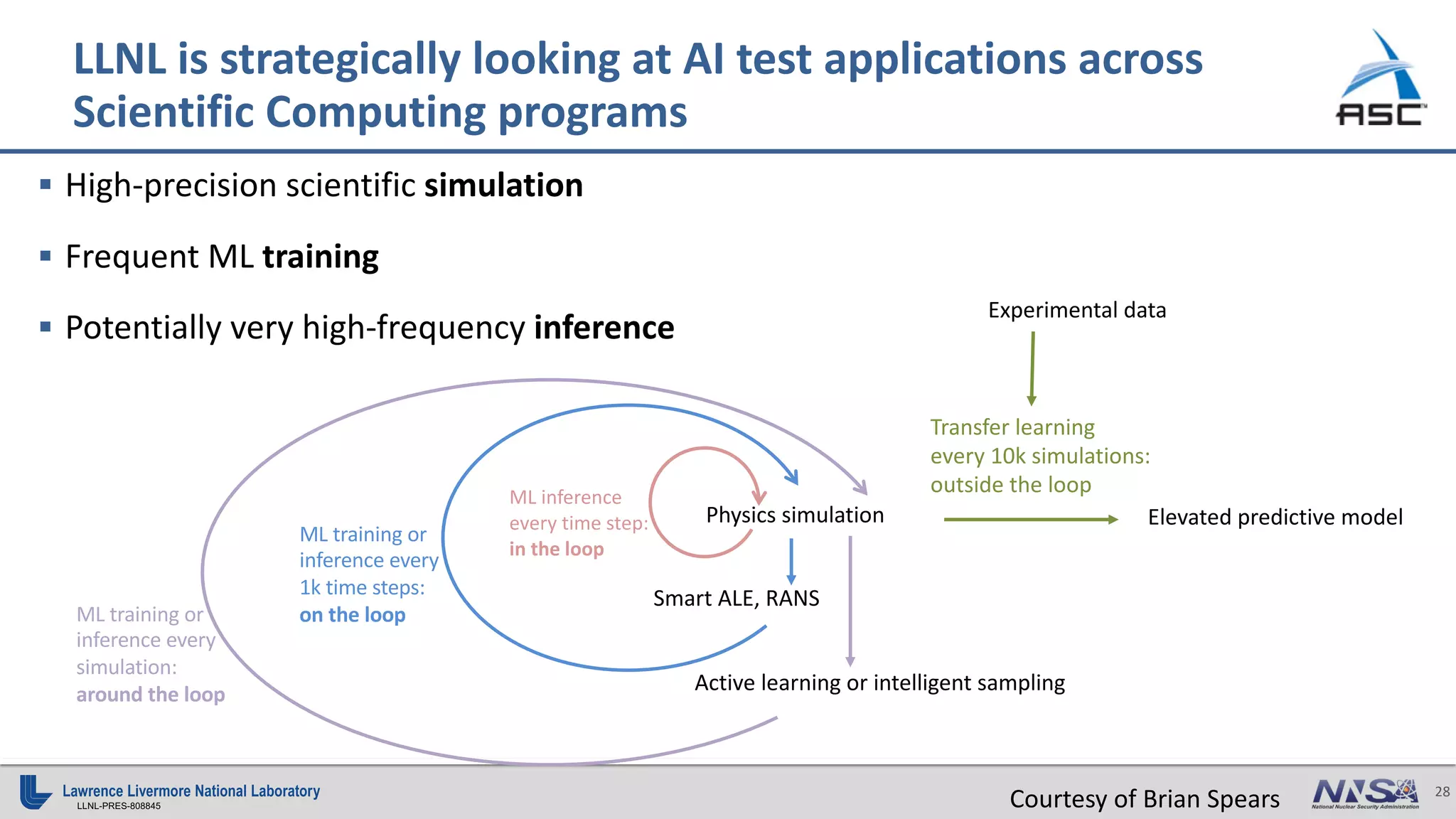

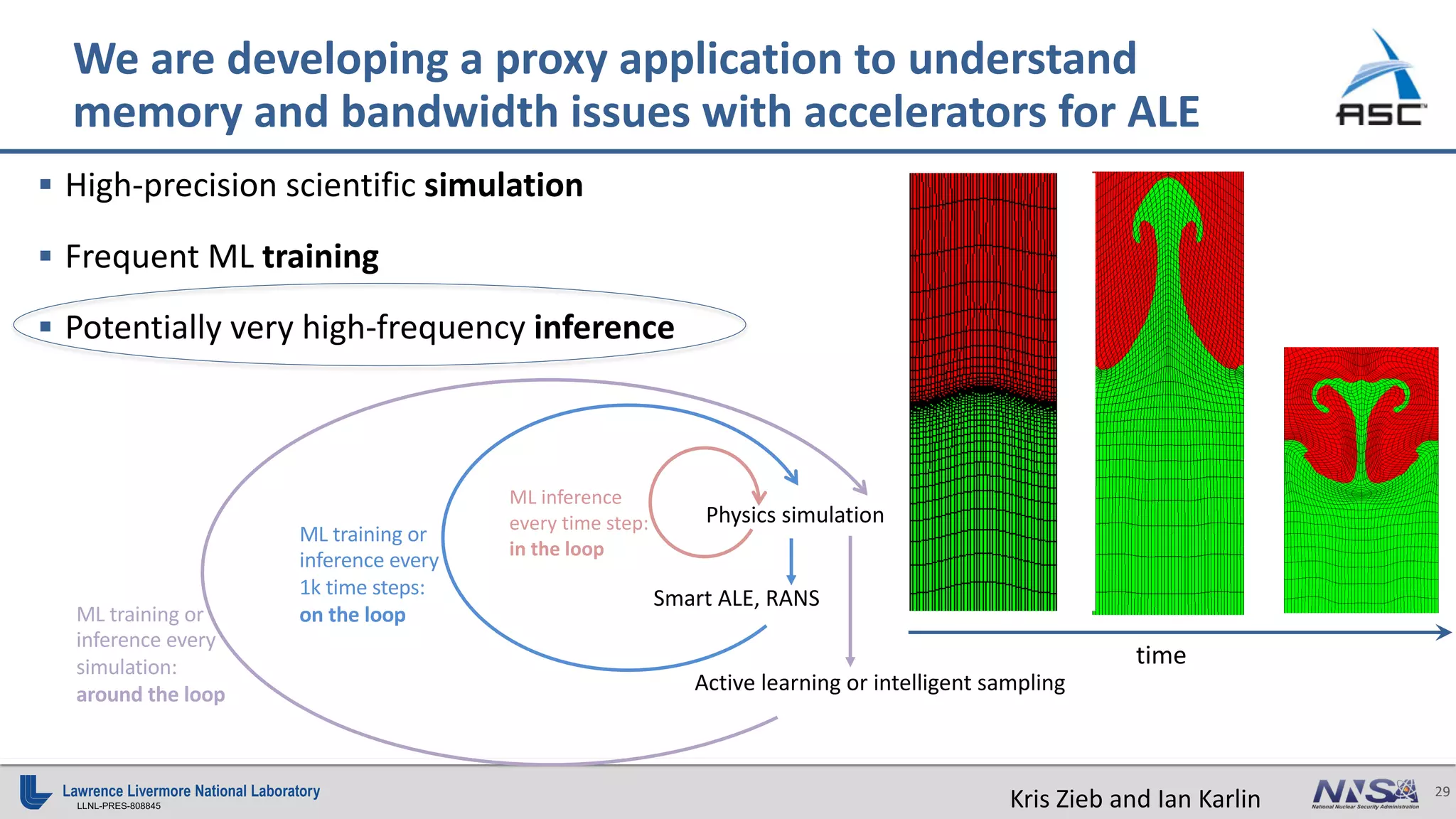

The document outlines how Lawrence Livermore National Laboratory (LLNL) integrates machine learning into scientific simulations to improve predictive accuracy, streamline workflows, and support national security. It covers several applications, including material interface reconstruction and fast surrogate modeling, and stresses that effective machine learning depends on solid data infrastructure. It also touches on ongoing research and development, including specialized hardware and community engagement around machine learning.