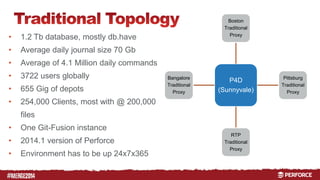

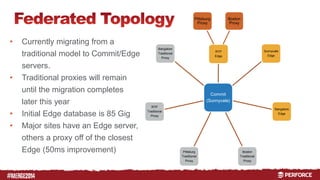

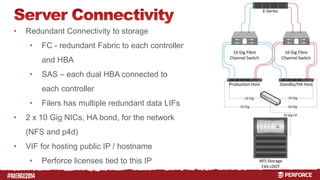

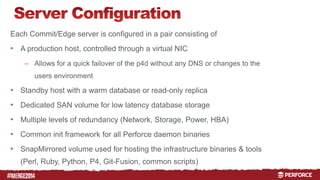

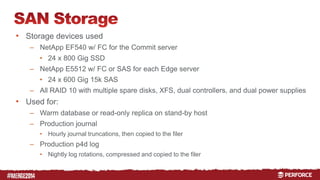

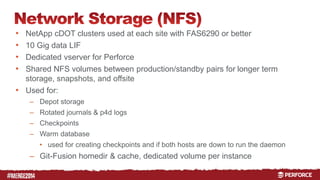

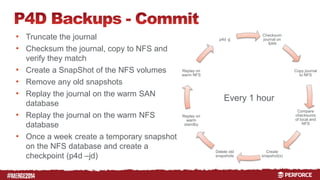

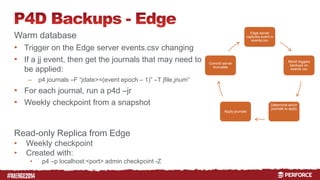

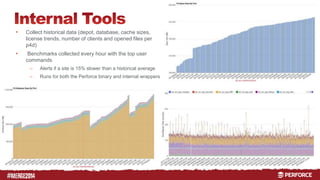

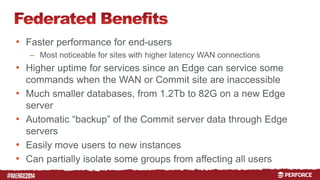

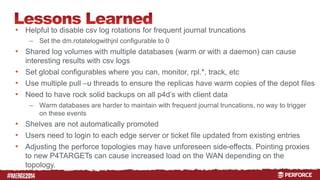

The document outlines the infrastructure and backup strategies for a global Perforce environment that handles over 4 million commands per day for 3,722 users. It details the migration to a commit/edge server architecture, high-performance storage, and redundant systems that ensure uptime and support disaster recovery. It also describes the monitoring tools and processes used to maintain performance and data integrity across the system.