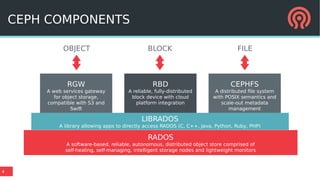

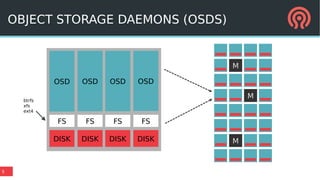

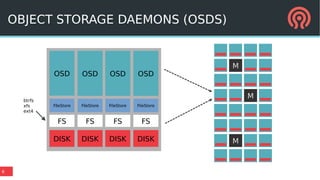

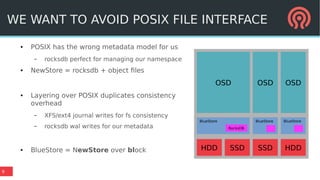

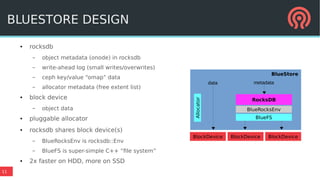

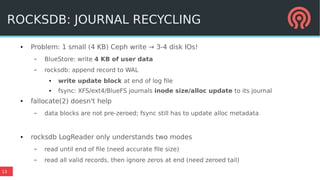

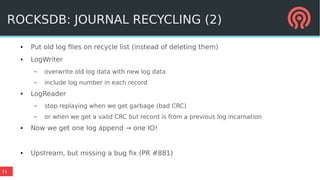

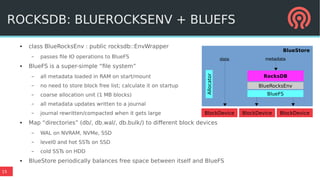

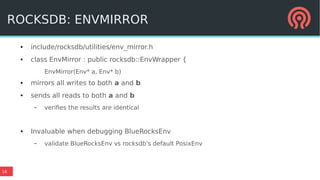

The document discusses the Ceph storage system, which provides object, block, and file storage, and describes the shortcomings of traditional POSIX storage. It introduces the new BlueStore backend, which uses RocksDB for efficient metadata management and offers journal recycling and better performance. It also argues for avoiding POSIX interfaces, which are limited in how effectively they handle distributed data.