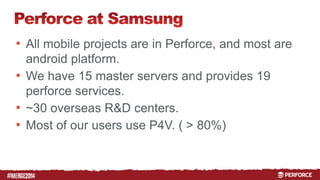

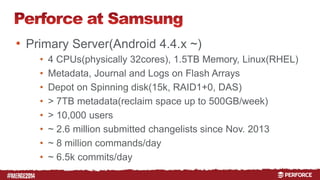

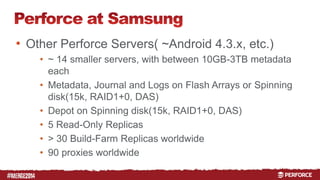

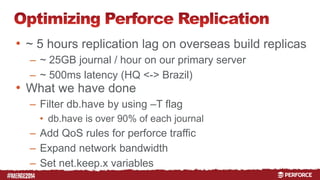

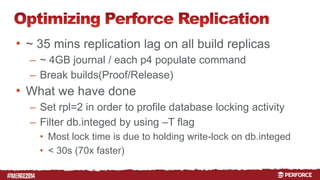

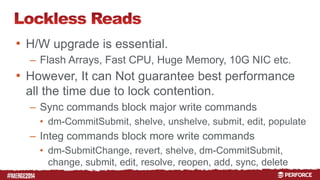

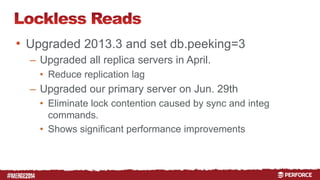

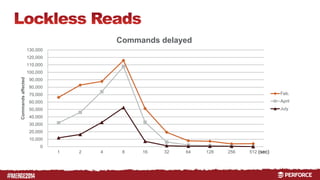

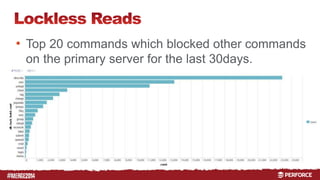

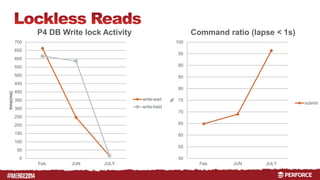

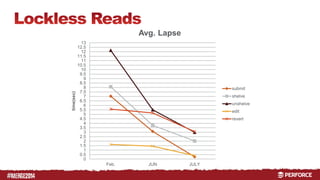

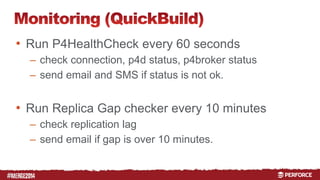

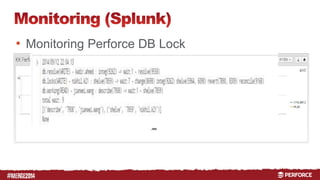

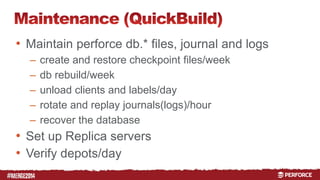

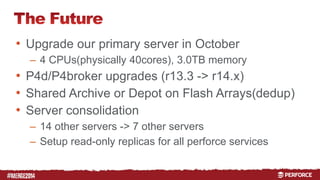

Kanggil Lee is a Senior Software Engineer at Samsung Electronics, where he manages the Perforce servers for mobile communications. He oversees the deployment and optimization of Perforce across Samsung's global R&D centers, which involves supporting a large number of users and addressing replication challenges. The servers have been upgraded to improve performance and reduce replication lag, and extensive monitoring and maintenance strategies have been put in place.