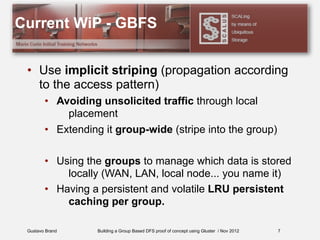

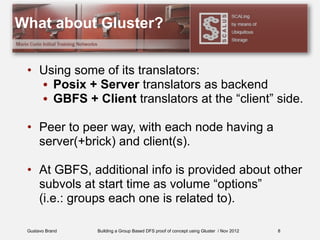

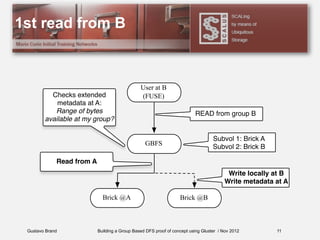

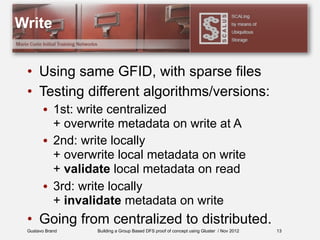

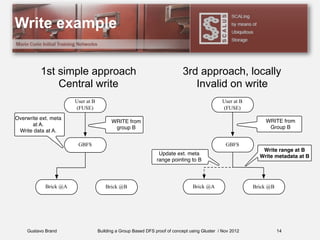

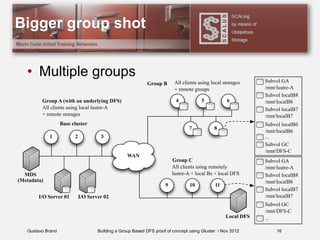

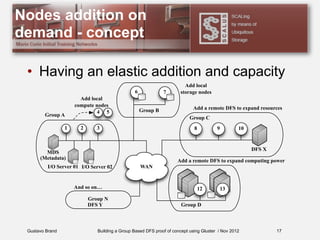

This presentation discusses using GlusterFS to build a proof of concept for a group-based distributed file system (GBFS). It describes experiments with HDFS and Lustre that analyze the impact of metadata and data traffic over wide area networks (WANs). The findings show that accessing data on local nodes often outperforms accessing it over WAN links. The presentation then outlines initial plans for GBFS to leverage GlusterFS for implicit striping, local placement, and caching across groups. It closes with potential future scenarios involving dynamic node addition and multiple cooperative storage and computing groups.