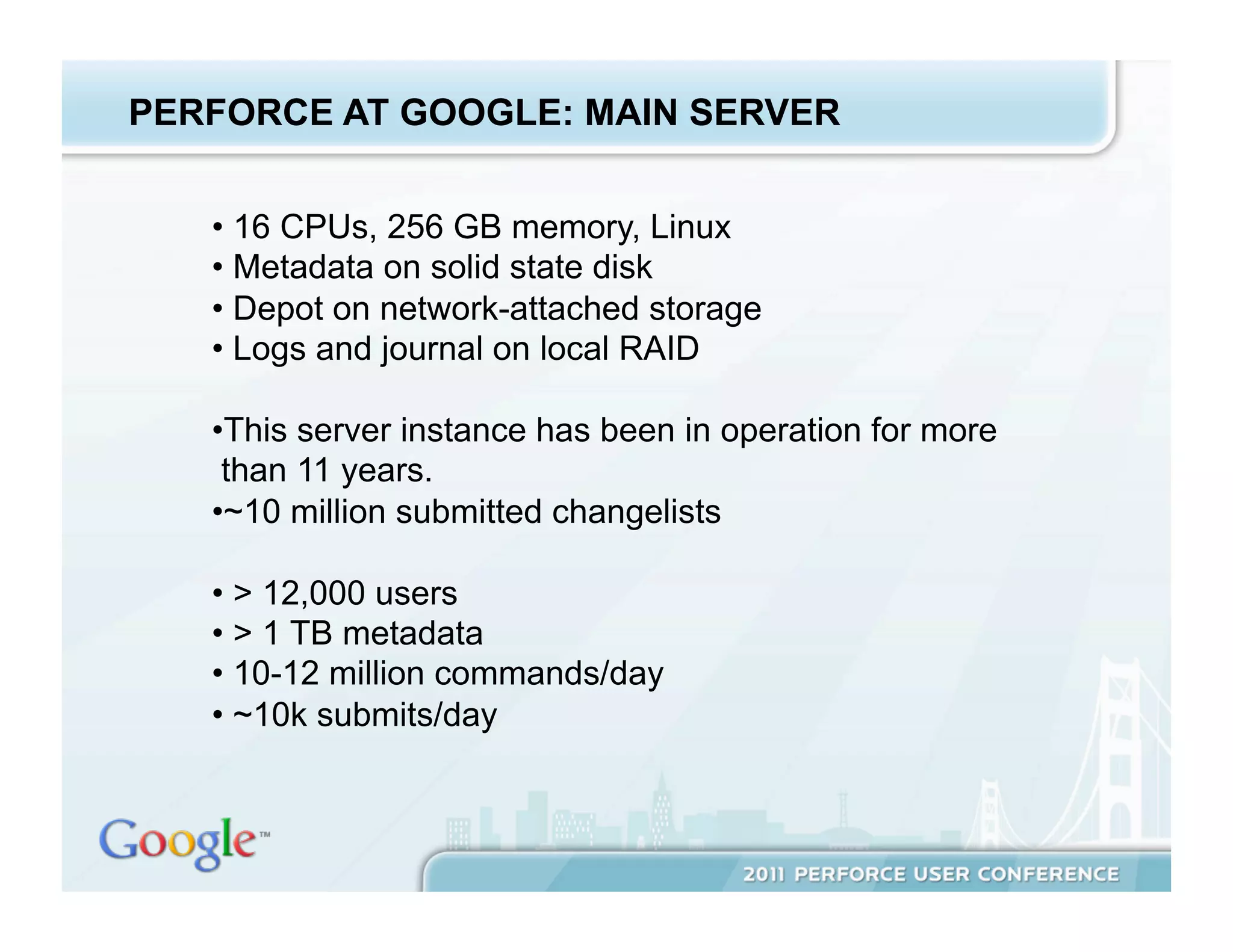

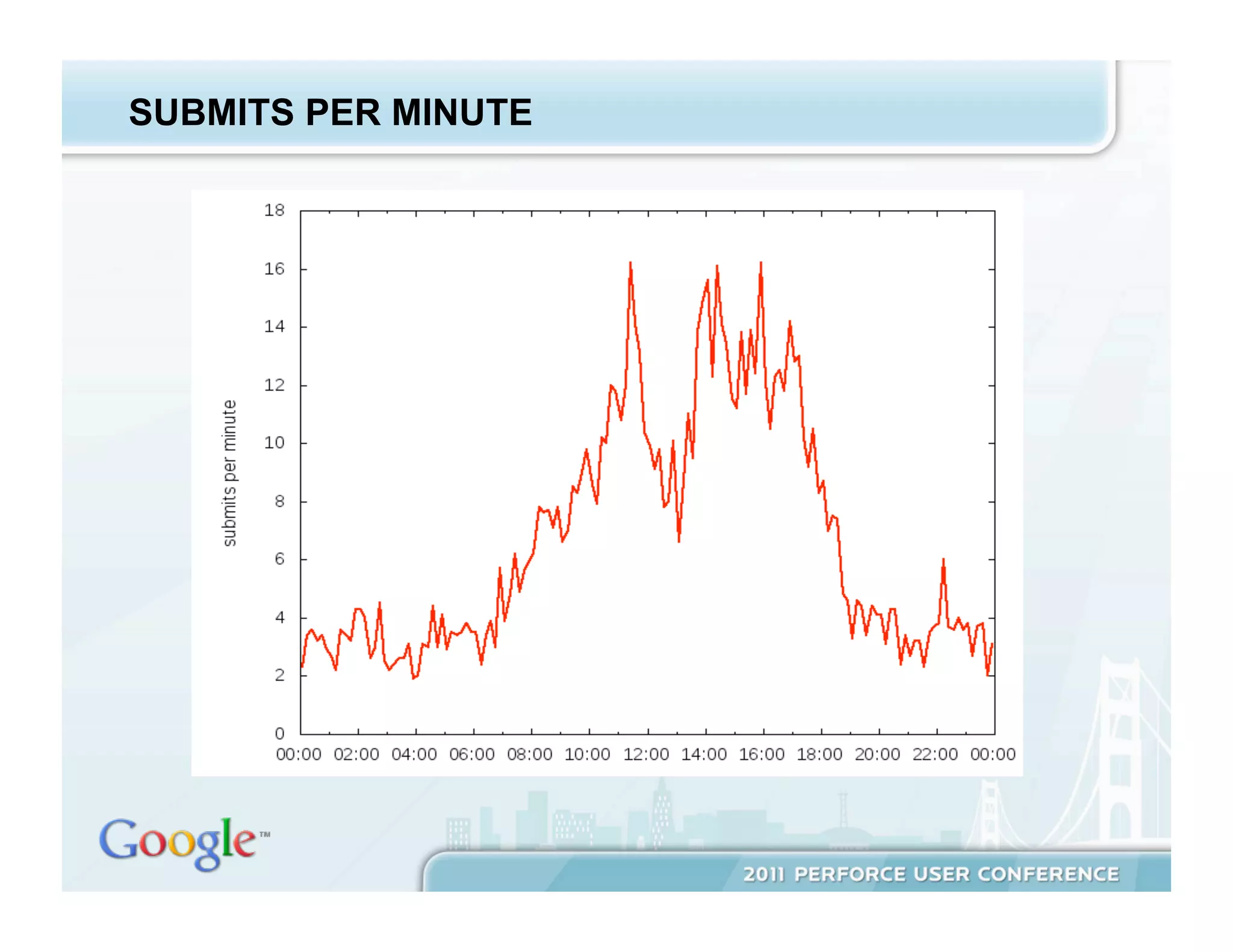

This document describes Google's large-scale use of Perforce for version control, covering the server architecture, performance management, and maintenance practices used over 11 years of operation. It details the server's configuration, strategies for improving performance, and measures for administrative efficiency, and it emphasizes the importance of monitoring and of reducing metadata. It also shares lessons learned from managing a large-scale system, highlighting the need for continuous improvement and tailored solutions.