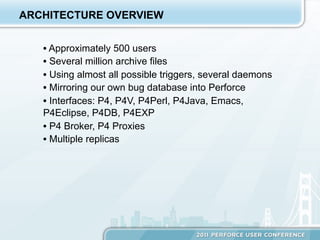

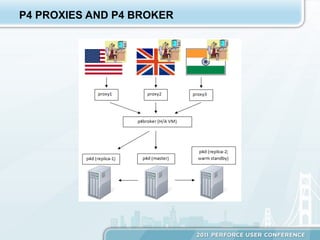

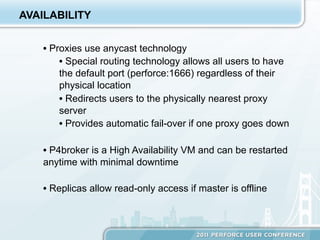

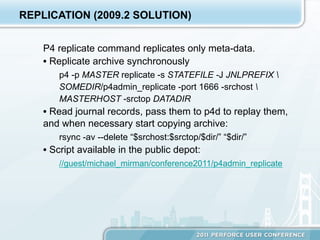

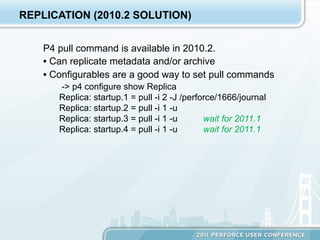

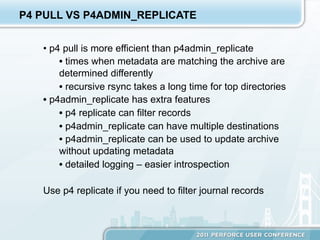

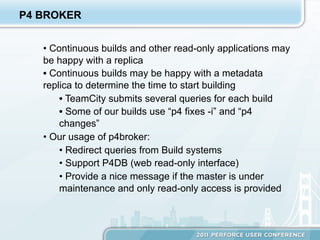

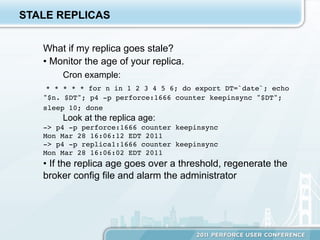

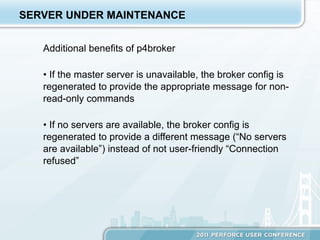

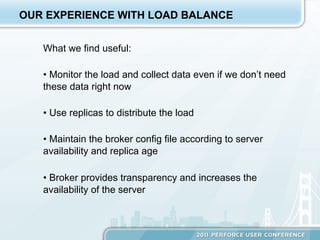

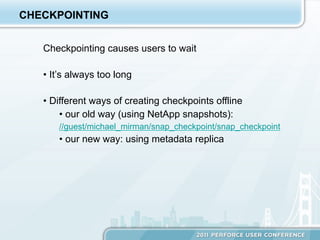

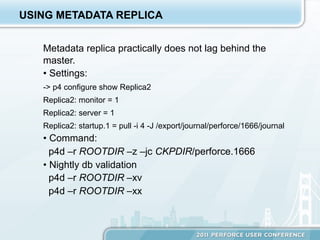

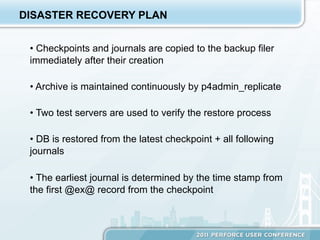

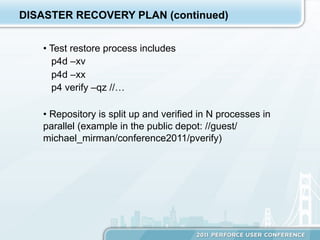

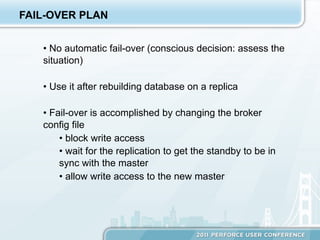

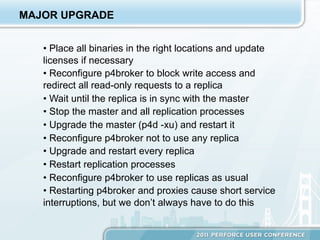

The document discusses the optimization and administration of a Perforce repository, with a focus on scalability, availability, and reliability. Key elements include proxies reached through anycast so that users are redirected automatically, data replication with the `p4 replicate` and `p4 pull` commands, and a disaster recovery plan in which checkpoints and journals are backed up immediately. The author shares their experience improving load balancing and ensuring high availability through automated processes and system configuration.
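The disaster recovery step of backing up checkpoints and journals right away can be illustrated with a minimal sketch. It is not the author's script: the function name `backup_new_files`, the directory arguments, and the `checkpoint.` / `journal.` filename prefixes are assumptions chosen for illustration, and a real deployment may use a different prefix, compression, or an off-site transfer instead of a local copy.

```python
import shutil
from pathlib import Path


def backup_new_files(source_dir, backup_dir, prefixes=("checkpoint.", "journal.")):
    """Copy checkpoint and journal files that are not yet in backup_dir.

    Hypothetical helper: the prefixes are an assumed naming scheme, not
    something the source document specifies. Returns the copied file names.
    """
    source = Path(source_dir)
    backup = Path(backup_dir)
    backup.mkdir(parents=True, exist_ok=True)
    wanted = tuple(prefixes)

    copied = []
    for path in sorted(source.iterdir()):
        # Skip directories and anything that is not a checkpoint or journal.
        if not path.is_file() or not path.name.startswith(wanted):
            continue
        target = backup / path.name
        # Skip files already backed up with the same size.
        if target.exists() and target.stat().st_size == path.stat().st_size:
            continue
        shutil.copy2(path, target)  # copy2 also preserves timestamps
        copied.append(path.name)
    return copied
```

Run from a scheduler shortly after each checkpoint is taken, a helper like this keeps the window in which a new checkpoint or rotated journal exists only on the server short. Comparing sizes is a cheap way to detect an incomplete copy; a checksum comparison would be stricter.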