The document presents a seminar series on linear algebra for machine learning, focusing on linear systems. Key topics include matrices, matrix operations, special types of matrices, the inverse of matrices, determinants, and statistical applications like the correlation coefficient. It also covers solving systems of linear equations and matrix transformations, providing definitions, examples, and properties relevant to these concepts.

![Matrices

An m × n matrix

A =

a11 a12 a13 . . . a1n

a21 a22 a23 . . . a2n

...

...

...

...

...

am1 am2 am3 . . . amn

= [aij ]

The i th row of A is

A = ai1 ai2 ai3 . . . ain , (1 ≤ i ≤ m)

The j th column of A is

A =

a1j

a2j

...

amj

, (1 ≤ j ≤ n)

Dr. Ceni Babaoglu cenibabaoglu.com

Linear Algebra for Machine Learning: Linear Systems](https://image.slidesharecdn.com/linearalgebraformachinelearninglinearsystems-181015001657/85/1-Linear-Algebra-for-Machine-Learning-Linear-Systems-3-320.jpg)

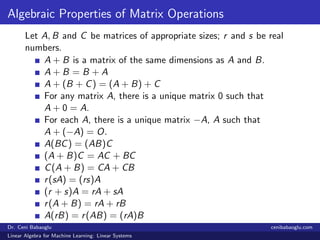

![Matrix Operations

Matrix Addition

A + B = [aij ] + [bij ] , C = [cij ]

cij = aij + bij , i = 1, 2, · · · , m, j = 1, 2, · · · , n.

Scalar Multiplication

rA = r [aij ] , C = [cij ]

cij = r aij , i = 1, 2, · · · , m, j = 1, 2, · · · , n.

Transpose of a Matrix

AT

= aT

ij , aT

ij = aji

Dr. Ceni Babaoglu cenibabaoglu.com

Linear Algebra for Machine Learning: Linear Systems](https://image.slidesharecdn.com/linearalgebraformachinelearninglinearsystems-181015001657/85/1-Linear-Algebra-for-Machine-Learning-Linear-Systems-4-320.jpg)

![Special Types of Matrices

Diagonal Matrix

An n × n matrix A = [aij ] is called a diagonal matrix if aij = 0

for i = j

a 0 . . . 0

0 1 . . . 0

...

...

...

...

0 0 . . . 1

Identity Matrix

The scalar matrix In = [dij ], where dii = 1 and dij = 0 for

i = j, is called the n × n identity matrix

1 0 . . . 0

0 1 . . . 0

...

...

...

...

0 0 . . . 1

Dr. Ceni Babaoglu cenibabaoglu.com

Linear Algebra for Machine Learning: Linear Systems](https://image.slidesharecdn.com/linearalgebraformachinelearninglinearsystems-181015001657/85/1-Linear-Algebra-for-Machine-Learning-Linear-Systems-5-320.jpg)

![Special Types of Matrices

Upper Triangular Matrix

An n × n matrix A = [aij ] is called upper triangular if aij = 0

for i > j

2 b c

0 3 0

0 0 1

Lower Triangular Matrix

An n × n matrix A = [aij ] is called lower triangular if aij = 0

for i < j

2 0 0

0 3 0

a b 1

Dr. Ceni Babaoglu cenibabaoglu.com

Linear Algebra for Machine Learning: Linear Systems](https://image.slidesharecdn.com/linearalgebraformachinelearninglinearsystems-181015001657/85/1-Linear-Algebra-for-Machine-Learning-Linear-Systems-6-320.jpg)

![A statistical application: Correlation Coefficient

Sample means of two attributes

¯x =

1

n

n

i=1

x, ¯y =

1

n

n

i=1

y

Centered form

xc = [x1 − ¯x x2 − ¯x · · · xn − ¯x]T

yc = [y1 − ¯y y2 − ¯y · · · yn − ¯y]T

Correlation coefficient

Cor(xc, yc) =

xc · yc

xc yc

r =

n

i=1(xi − ¯x)(yi − ¯y)

n

i=1(xi − ¯x)2 n

i=1(yi − ¯y)2

Dr. Ceni Babaoglu cenibabaoglu.com

Linear Algebra for Machine Learning: Linear Systems](https://image.slidesharecdn.com/linearalgebraformachinelearninglinearsystems-181015001657/85/1-Linear-Algebra-for-Machine-Learning-Linear-Systems-16-320.jpg)