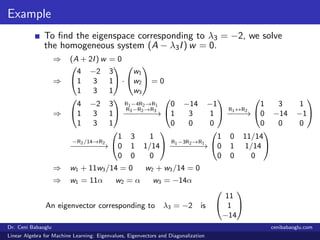

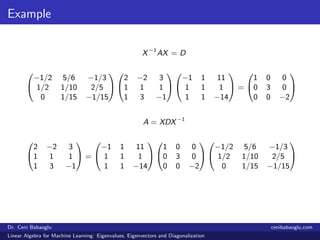

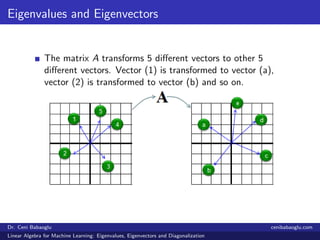

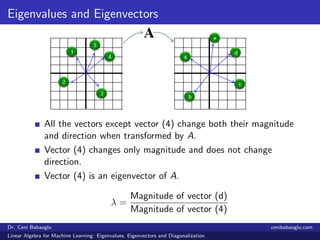

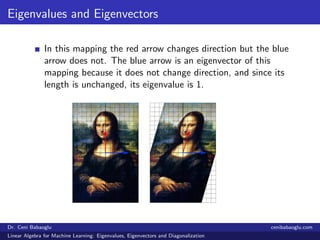

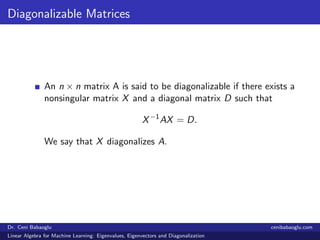

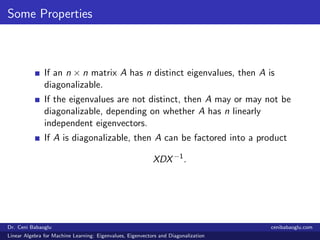

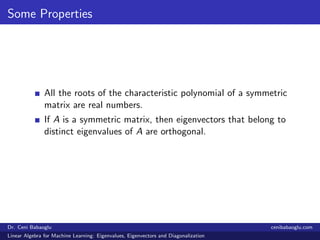

This document discusses eigenvalues, eigenvectors, and diagonalization in the context of linear algebra for machine learning. It defines eigenvectors and their associated eigenvalues, presents properties of eigenvectors and diagonalizable matrices, and provides examples illustrating how to diagonalize a given matrix. The document also includes references for further reading on linear algebra topics.

![Example

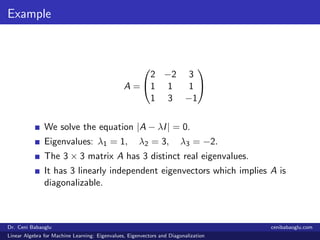

Diagonalize the matrix A =

2 −2 3

1 1 1

1 3 −1

We should solve the equation |A − λI| = 0.

|A − λI| =

2 − λ −2 3

1 1 − λ 1

1 3 −1 − λ

R2−R3→R2

−−−−−−−→

2 − λ −2 3

0 −2 − λ 2 + λ

1 3 −1 − λ

= (2 + λ)

2 − λ −2 3

0 −1 1

1 3 −1 − λ

C2+C3→C2

−−−−−−−→ (2 + λ)

2 − λ 1 3

0 0 1

1 2 − λ −1 − λ

= −(2 + λ)

2 − λ 1

1 2 − λ

= −(2 + λ)[(2 − λ)2

− 1] = −(2 + λ)(λ2

− 4λ + 3) = 0.

Eigenvalues: λ1 = 1, λ2 = 3, λ3 = −2.

Dr. Ceni Babaoglu cenibabaoglu.com

Linear Algebra for Machine Learning: Eigenvalues, Eigenvectors and Diagonalization](https://image.slidesharecdn.com/4-190324194701/85/4-Linear-Algebra-for-Machine-Learning-Eigenvalues-Eigenvectors-and-Diagonalization-14-320.jpg)
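The hand factorization of the characteristic polynomial can be double-checked with exact rational arithmetic. The sketch below is a minimal check, not part of the original slides: the helper names `det3`, `char_det`, and `factored` are hypothetical, and it uses only Python's standard `fractions` module. It evaluates $|A - \lambda I|$ by cofactor expansion and compares it with $-(2+\lambda)(\lambda^2 - 4\lambda + 3)$ at several points. Because two cubics that agree at more than three points are identical, this confirms the factorization.

```python
from fractions import Fraction

# Matrix A from the example.
A = [[2, -2, 3],
     [1, 1, 1],
     [1, 3, -1]]


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def char_det(lam):
    """Evaluate |A - lam*I| exactly; lam may be an int or a Fraction."""
    shifted = [[A[i][j] - (lam if i == j else 0) for j in range(3)]
               for i in range(3)]
    return det3(shifted)


def factored(lam):
    """Factored form from the hand derivation: -(2 + lam)(lam^2 - 4*lam + 3)."""
    return -(2 + lam) * (lam ** 2 - 4 * lam + 3)


# Two cubic polynomials that agree at more than three points are identical,
# so agreement at these ten sample points confirms the factorization.
samples = [Fraction(k, 2) for k in range(-4, 6)]
assert all(char_det(x) == factored(x) for x in samples)

# The roots of the characteristic polynomial are the eigenvalues:
# the determinant printed next to each one is 0.
for lam in (1, 3, -2):
    print(lam, char_det(lam))
```

Using `Fraction` samples rather than floats keeps every comparison exact, so the equality test cannot be fooled by rounding.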
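The example's goal is diagonalization, which also needs one eigenvector per eigenvalue. The following is a hedged sketch of how that step could be completed; the eigenvectors are computed here and do not come from the slides. It assumes each $A - \lambda I$ has rank 2 with linearly independent first two rows, which holds for this $A$. Under that assumption the cross product of those two rows spans the null space. The helper names (`cross`, `eigenvector`, `inverse3`, and so on) are hypothetical. The eigenvector columns of $P$ are left unnormalized, since any nonzero multiple works. The code checks $A = P D P^{-1}$ exactly.

```python
from fractions import Fraction

A = [[2, -2, 3],
     [1, 1, 1],
     [1, 3, -1]]
EIGENVALUES = [1, 3, -2]  # roots of -(2 + lam)(lam^2 - 4*lam + 3)


def cross(u, v):
    """Cross product of two 3-vectors (orthogonal to both inputs)."""
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def matvec(m, x):
    return [sum(m[i][j] * x[j] for j in range(3)) for i in range(3)]


def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def inverse3(m):
    """Exact inverse via the adjugate: inv[i][j] = cofactor[j][i] / det."""
    d = Fraction(det3(m))
    # For 3x3 matrices the cyclic index pattern yields signed cofactors directly.
    cof = [[m[(i + 1) % 3][(j + 1) % 3] * m[(i + 2) % 3][(j + 2) % 3]
            - m[(i + 1) % 3][(j + 2) % 3] * m[(i + 2) % 3][(j + 1) % 3]
            for j in range(3)] for i in range(3)]
    return [[cof[j][i] / d for j in range(3)] for i in range(3)]


def eigenvector(lam):
    """Null vector of A - lam*I, assuming rank 2 with independent first two rows."""
    rows = [[A[i][j] - (lam if i == j else 0) for j in range(3)] for i in range(3)]
    v = cross(rows[0], rows[1])
    assert any(v), "first two rows are dependent; pick another pair"
    assert matvec(A, v) == [lam * x for x in v]  # A v = lam v
    return v


vecs = [eigenvector(lam) for lam in EIGENVALUES]
P = [[v[i] for v in vecs] for i in range(3)]  # eigenvectors as columns
D = [[EIGENVALUES[i] if i == j else 0 for j in range(3)] for i in range(3)]

assert det3(P) != 0                                # P is invertible
assert matmul(matmul(P, D), inverse3(P)) == A      # A = P D P^{-1}
print(P)  # [[-2, 4, -11], [2, 4, -1], [2, 4, 14]]
```

Because the three eigenvalues are distinct, their eigenvectors are automatically linearly independent, which is why $\det P \neq 0$ here.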