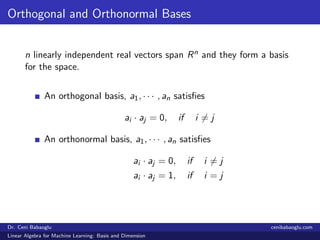

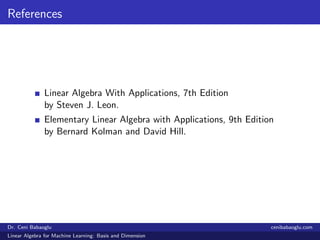

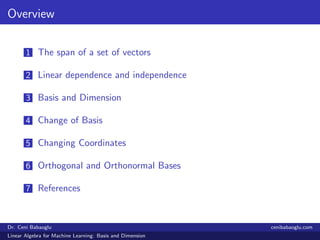

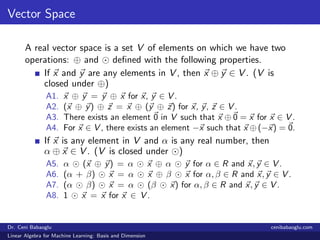

This document covers concepts from linear algebra that are relevant to machine learning, with a focus on basis and dimension: the span of a set of vectors, linear dependence and independence, and change of basis. It defines a vector space, shows how to form a basis, and explains what the dimension of a vector space means. It also briefly covers orthogonal and orthonormal bases and provides worked examples and references for further reading.

![Example

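$\mathbb{R}^n$ is $n$-dimensional. Let $x = [x_1\ x_2\ x_3\ \cdots\ x_n]^T \in \mathbb{R}^n$. Then

$$
\begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}
= x_1 \begin{bmatrix} 1 \\ 0 \\ 0 \\ \vdots \\ 0 \end{bmatrix}
+ x_2 \begin{bmatrix} 0 \\ 1 \\ 0 \\ \vdots \\ 0 \end{bmatrix}
+ \cdots
+ x_n \begin{bmatrix} 0 \\ 0 \\ 0 \\ \vdots \\ 1 \end{bmatrix}
\equiv x_1 i_1 + x_2 i_2 + \cdots + x_n i_n.
$$

Therefore $\operatorname{span}\{i_1, i_2, \ldots, i_n\} = \mathbb{R}^n$.

The vectors $i_1, i_2, \ldots, i_n$ are linearly independent.

They therefore form a basis, which implies that the dimension of $\mathbb{R}^n$ is $n$.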

Dr. Ceni Babaoglu cenibabaoglu.com

Linear Algebra for Machine Learning: Basis and Dimension](https://image.slidesharecdn.com/2-181124151325/85/2-Linear-Algebra-for-Machine-Learning-Basis-and-Dimension-8-320.jpg)
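
The decomposition above can be checked directly. This is a minimal sketch, assuming vectors are plain Python lists; `standard_basis` and `combine` are hypothetical helper names introduced here for illustration. It builds $i_1, \ldots, i_n$ and confirms that $x_1 i_1 + \cdots + x_n i_n$ reproduces $x$.

```python
def standard_basis(n):
    """Return i_1, ..., i_n: i_k has a 1 in position k and 0 elsewhere."""
    return [[1 if row == col else 0 for row in range(n)] for col in range(n)]


def combine(coeffs, vectors):
    """Return the linear combination coeffs[0]*vectors[0] + ... + coeffs[-1]*vectors[-1]."""
    dim = len(vectors[0])
    result = [0] * dim
    for c, v in zip(coeffs, vectors):
        for j in range(dim):
            result[j] += c * v[j]
    return result


x = [3, -2, 7]
basis = standard_basis(len(x))
print(basis)  # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# The entries of x are exactly its coordinates in the standard basis.
assert combine(x, basis) == x
```

The vectors $i_1, \ldots, i_n$ are the columns of the $n \times n$ identity matrix, which is why the coefficients of the combination are simply the entries of $x$.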

![Change of Basis

The standard basis for $\mathbb{R}^2$ is $\{e_1, e_2\}$. Any vector $x \in \mathbb{R}^2$ can be expressed as a linear combination

$$x = x_1 e_1 + x_2 e_2.$$

The scalars $x_1$ and $x_2$ can be thought of as the coordinates of $x$ with respect to the standard basis.

For any basis $\{y, z\}$ for $\mathbb{R}^2$, a given vector $x$ can be represented uniquely as a linear combination,

$$x = \alpha y + \beta z.$$

$[y, z]$: ordered basis

$(\alpha, \beta)^T$: the coordinate vector of $x$ with respect to $[y, z]$

If we reverse the order of the basis vectors and take $[z, y]$, then we must also reorder the coordinate vector and take $(\beta, \alpha)^T$.

](https://image.slidesharecdn.com/2-181124151325/85/2-Linear-Algebra-for-Machine-Learning-Basis-and-Dimension-10-320.jpg)
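
To make "coordinates with respect to an ordered basis" concrete, here is a minimal sketch for $\mathbb{R}^2$ that solves $\alpha y + \beta z = x$ with Cramer's rule (a technique chosen here for brevity, not taken from the slides). The function name `coordinates_2d` is hypothetical, and entries are assumed to be integers or `Fraction`s so the arithmetic stays exact. Swapping the order of the basis swaps the returned coordinates, as stated above.

```python
from fractions import Fraction


def coordinates_2d(basis, x):
    """Coordinates (alpha, beta) of x with respect to the ordered basis [y, z] of R^2.

    Solves alpha*y + beta*z = x with Cramer's rule. Assumes integer or
    Fraction entries so the result is exact.
    """
    (y1, y2), (z1, z2) = basis
    det = y1 * z2 - z1 * y2
    if det == 0:
        raise ValueError("y and z are linearly dependent, so they are not a basis")
    alpha = Fraction(x[0] * z2 - z1 * x[1], det)
    beta = Fraction(y1 * x[1] - x[0] * y2, det)
    return alpha, beta


y, z = (1, 1), (1, -1)   # example basis, chosen for illustration
x = (3, 1)
print(coordinates_2d([y, z], x))  # (Fraction(2, 1), Fraction(1, 1))
print(coordinates_2d([z, y], x))  # (Fraction(1, 1), Fraction(2, 1))
```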

![Example

$y = (2, 1)^T$ and $z = (1, 4)^T$ are linearly independent and form a basis for $\mathbb{R}^2$.

$x = (7, 7)^T$ can be written as the linear combination

$$x = 3y + z.$$

The coordinate vector of $x$ with respect to $[y, z]$ is $(3, 1)^T$.

Geometrically, the coordinate vector specifies how to get from the origin $O(0, 0)$ to the point $P(7, 7)$: move first by $3y$ (three times the vector $y$) and then by $z$.

](https://image.slidesharecdn.com/2-181124151325/85/2-Linear-Algebra-for-Machine-Learning-Basis-and-Dimension-11-320.jpg)
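
The path from $O$ to $P$ can be traced step by step. In this sketch (plain Python tuples; `add` and `scale` are hypothetical helper names), walking $3y$ from the origin lands at $(6, 3)$, and adding $z$ then arrives at $P(7, 7)$.

```python
def add(u, v):
    """Componentwise sum of two vectors."""
    return tuple(a + b for a, b in zip(u, v))


def scale(c, v):
    """Multiply every component of v by the scalar c."""
    return tuple(c * a for a in v)


y, z = (2, 1), (1, 4)
alpha, beta = 3, 1   # coordinate vector of x with respect to [y, z]

origin = (0, 0)
after_y = add(origin, scale(alpha, y))   # first move by 3y
point_p = add(after_y, scale(beta, z))   # then move by z
print(after_y, point_p)  # (6, 3) (7, 7)
```

Because vector addition is commutative, moving along $z$ first and then $3y$ reaches the same endpoint; the coordinate vector fixes the amounts, not the route.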

![Changing Coordinates

Suppose, for example, instead of using $[e_1, e_2]$ for $\mathbb{R}^2$, we wish to use a different basis, say

$$u_1 = \begin{bmatrix} 3 \\ 2 \end{bmatrix}, \quad u_2 = \begin{bmatrix} 1 \\ 1 \end{bmatrix}.$$

I. Given a vector $c_1 u_1 + c_2 u_2$, let's find its coordinates with respect to $e_1$ and $e_2$.

II. Given a vector $x = (x_1, x_2)^T$, let's find its coordinates with respect to $u_1$ and $u_2$.

](https://image.slidesharecdn.com/2-181124151325/85/2-Linear-Algebra-for-Machine-Learning-Basis-and-Dimension-12-320.jpg)

![Changing Coordinates

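I. Given a vector $c_1 u_1 + c_2 u_2$, let's find its coordinates with respect to $e_1$ and $e_2$.

$$u_1 = 3e_1 + 2e_2, \quad u_2 = e_1 + e_2$$

$$
\begin{aligned}
c_1 u_1 + c_2 u_2 &= (3c_1 e_1 + 2c_1 e_2) + (c_2 e_1 + c_2 e_2) \\
&= (3c_1 + c_2) e_1 + (2c_1 + c_2) e_2
\end{aligned}
$$

$$
x = \begin{bmatrix} 3c_1 + c_2 \\ 2c_1 + c_2 \end{bmatrix}
= \begin{bmatrix} 3 & 1 \\ 2 & 1 \end{bmatrix} \begin{bmatrix} c_1 \\ c_2 \end{bmatrix}
$$

$$U = (u_1, u_2) = \begin{bmatrix} 3 & 1 \\ 2 & 1 \end{bmatrix}$$

$U$: the transition matrix from $[u_1, u_2]$ to $[e_1, e_2]$

Given any coordinate vector $c$ with respect to $[u_1, u_2]$, the corresponding coordinate vector $x$ with respect to $[e_1, e_2]$ is given by

$$x = Uc.$$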

](https://image.slidesharecdn.com/2-181124151325/85/2-Linear-Algebra-for-Machine-Learning-Basis-and-Dimension-13-320.jpg)
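
The change of coordinates $x = Uc$ can be checked numerically. The sketch below is plain Python; `mat_vec` is a hypothetical helper, and $c = (1, 1)^T$ is an example value not taken from the slides. The matrix $U$ is built with $u_1$ and $u_2$ as its columns, exactly as above.

```python
def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a column vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


u1, u2 = [3, 2], [1, 1]
# U has u1 and u2 as its columns, so its rows are (3, 1) and (2, 1).
U = [[u1[0], u2[0]],
     [u1[1], u2[1]]]

c = [1, 1]          # coordinates with respect to [u1, u2] (example values)
x = mat_vec(U, c)   # coordinates with respect to [e1, e2]
print(x)  # [4, 3]
```

As a cross-check, $1 \cdot u_1 + 1 \cdot u_2 = (3 + 1, 2 + 1)^T = (4, 3)^T$, matching $Uc$.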

![Changing Coordinates

II. Given a vector $x = (x_1, x_2)^T$, let's find its coordinates with respect to $u_1$ and $u_2$.

We have to find the transition matrix from $[e_1, e_2]$ to $[u_1, u_2]$.

The matrix $U$ is nonsingular, since its column vectors $u_1$ and $u_2$ are linearly independent. Multiplying both sides of $x = Uc$ by $U^{-1}$ gives

$$c = U^{-1}x.$$

Given the vector

$$x = (x_1, x_2)^T = x_1 e_1 + x_2 e_2,$$

we multiply by $U^{-1}$ to find its coordinate vector with respect to $[u_1, u_2]$.

$U^{-1}$: the transition matrix from $[e_1, e_2]$ to $[u_1, u_2]$

](https://image.slidesharecdn.com/2-181124151325/85/2-Linear-Algebra-for-Machine-Learning-Basis-and-Dimension-14-320.jpg)
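
Going the other way requires $U^{-1}$. For a $2 \times 2$ matrix the adjugate formula gives the inverse directly: here $\det U = 3 \cdot 1 - 1 \cdot 2 = 1$, so $U^{-1} = \begin{bmatrix} 1 & -1 \\ -2 & 3 \end{bmatrix}$. The sketch below uses hypothetical helper names, exact `Fraction` arithmetic, and an example vector $x = (7, 7)^T$ chosen for illustration; it computes $c = U^{-1}x$ and checks the round trip back to $x$.

```python
from fractions import Fraction


def inverse_2x2(m):
    """Inverse of a 2x2 matrix [[a, b], [c, d]] via the adjugate formula."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]


def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a column vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


U = [[3, 1], [2, 1]]    # columns u1 = (3, 2)^T and u2 = (1, 1)^T
U_inv = inverse_2x2(U)  # transition matrix from [e1, e2] to [u1, u2]
print(U_inv)  # [[Fraction(1, 1), Fraction(-1, 1)], [Fraction(-2, 1), Fraction(3, 1)]]

x = [7, 7]              # example vector in standard coordinates
c = mat_vec(U_inv, x)   # coordinates of x with respect to [u1, u2]
print(c)  # [Fraction(0, 1), Fraction(7, 1)]

# Round trip: U maps the new coordinates back to standard coordinates.
assert mat_vec(U, c) == x
```

With NumPy one would typically call `numpy.linalg.solve(U, x)` rather than forming the inverse explicitly, since solving the system is cheaper and numerically more stable than computing $U^{-1}$.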

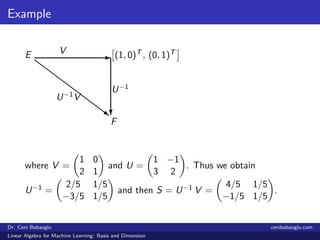

![Example

Let $E = [(1, 2)^T, (0, 1)^T]$ and $F = [(1, 3)^T, (-1, 2)^T]$ be ordered bases for $\mathbb{R}^2$.

(i) Find the transition matrix $S$ from the basis $E$ to the basis $F$.

(ii) If the vector $x \in \mathbb{R}^2$ has the coordinate vector $[x]_E = (5, 0)^T$ with respect to the ordered basis $E$, determine the coordinate vector $[x]_F$ with respect to the ordered basis $F$.

](https://image.slidesharecdn.com/2-181124151325/85/2-Linear-Algebra-for-Machine-Learning-Basis-and-Dimension-15-320.jpg)
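
A general relation may help as a cross-check for part (i); it is a standard identity, not the method used on the slides. Writing $E$ and $F$ also for the matrices whose columns are the basis vectors (a notational assumption introduced here), both coordinate vectors describe the same $x$, and $F$ is invertible because its columns form a basis:

$$
x = E\,[x]_E = F\,[x]_F
\quad\Longrightarrow\quad
[x]_F = F^{-1}E\,[x]_E,
\qquad
S = F^{-1}E
= \begin{bmatrix} 1 & -1 \\ 3 & 2 \end{bmatrix}^{-1}
\begin{bmatrix} 1 & 0 \\ 2 & 1 \end{bmatrix}
= \frac{1}{5}\begin{bmatrix} 2 & 1 \\ -3 & 1 \end{bmatrix}
\begin{bmatrix} 1 & 0 \\ 2 & 1 \end{bmatrix}
= \frac{1}{5}\begin{bmatrix} 4 & 1 \\ -1 & 1 \end{bmatrix}.
$$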

![Example

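(i) We should find the matrix $S = \begin{bmatrix} a & c \\ b & d \end{bmatrix}$ such that $[x]_F = S\,[x]_E$ for $x \in \mathbb{R}^2$. We solve the following system:

$$
\begin{bmatrix} 1 \\ 2 \end{bmatrix} = a \begin{bmatrix} 1 \\ 3 \end{bmatrix} + b \begin{bmatrix} -1 \\ 2 \end{bmatrix},
\qquad
\begin{bmatrix} 0 \\ 1 \end{bmatrix} = c \begin{bmatrix} 1 \\ 3 \end{bmatrix} + d \begin{bmatrix} -1 \\ 2 \end{bmatrix}
$$

$$\Rightarrow \quad 1 = a - b, \quad 2 = 3a + 2b; \qquad 0 = c - d, \quad 1 = 3c + 2d$$

$$\Rightarrow \quad a = \tfrac{4}{5}, \quad b = -\tfrac{1}{5}, \quad c = d = \tfrac{1}{5}$$

$$\Rightarrow \quad S = \begin{bmatrix} 4/5 & 1/5 \\ -1/5 & 1/5 \end{bmatrix}$$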

](https://image.slidesharecdn.com/2-181124151325/85/2-Linear-Algebra-for-Machine-Learning-Basis-and-Dimension-16-320.jpg)
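
The two systems in part (i) can be solved mechanically: the first column $(a, b)^T$ of $S$ holds the $F$-coordinates of $(1, 2)^T$, and the second column $(c, d)^T$ holds those of $(0, 1)^T$. A minimal sketch in plain Python with exact fractions; `solve_2x2` is a hypothetical helper that applies Cramer's rule to the matrix whose columns are the vectors of $F$.

```python
from fractions import Fraction


def solve_2x2(m, rhs):
    """Solve m s = rhs for a 2x2 matrix m (list of rows) using Cramer's rule."""
    (m11, m12), (m21, m22) = m
    det = m11 * m22 - m12 * m21
    if det == 0:
        raise ValueError("matrix is singular")
    return [Fraction(rhs[0] * m22 - m12 * rhs[1], det),
            Fraction(m11 * rhs[1] - m21 * rhs[0], det)]


e_vectors = [(1, 2), (0, 1)]   # ordered basis E
F_matrix = [[1, -1],           # columns are the vectors of F:
            [3, 2]]            # (1, 3)^T and (-1, 2)^T

# Column k of S is the F-coordinate vector of the k-th vector of E.
columns = [solve_2x2(F_matrix, e) for e in e_vectors]
S = [[columns[0][0], columns[1][0]],
     [columns[0][1], columns[1][1]]]
print(S)  # [[Fraction(4, 5), Fraction(1, 5)], [Fraction(-1, 5), Fraction(1, 5)]]
```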

![Example

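(ii) Since $[x]_E = (5, 0)^T$,

$$
[x]_F = S\,[x]_E
= \begin{bmatrix} 4/5 & 1/5 \\ -1/5 & 1/5 \end{bmatrix}
\begin{bmatrix} 5 \\ 0 \end{bmatrix}
= \begin{bmatrix} 4 \\ -1 \end{bmatrix}.
$$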

](https://image.slidesharecdn.com/2-181124151325/85/2-Linear-Algebra-for-Machine-Learning-Basis-and-Dimension-18-320.jpg)
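
A quick numerical check of part (ii): multiply $S$ by $[x]_E$, then confirm that both coordinate vectors name the same point of $\mathbb{R}^2$, since $5 \cdot (1, 2)^T + 0 \cdot (0, 1)^T = (5, 10)^T$ and $4 \cdot (1, 3)^T - 1 \cdot (-1, 2)^T = (5, 10)^T$. This is a sketch in plain Python; `mat_vec` is a hypothetical helper and $S$ is copied from part (i).

```python
from fractions import Fraction


def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a column vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


S = [[Fraction(4, 5), Fraction(1, 5)],
     [Fraction(-1, 5), Fraction(1, 5)]]   # transition matrix from E to F, part (i)
x_E = [5, 0]
x_F = mat_vec(S, x_E)
print(x_F)  # [Fraction(4, 1), Fraction(-1, 1)]

# Both coordinate vectors should describe the same point in standard coordinates.
E = [[1, 0], [2, 1]]    # columns are the vectors of E
F = [[1, -1], [3, 2]]   # columns are the vectors of F
assert mat_vec(E, x_E) == mat_vec(F, x_F) == [5, 10]
```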