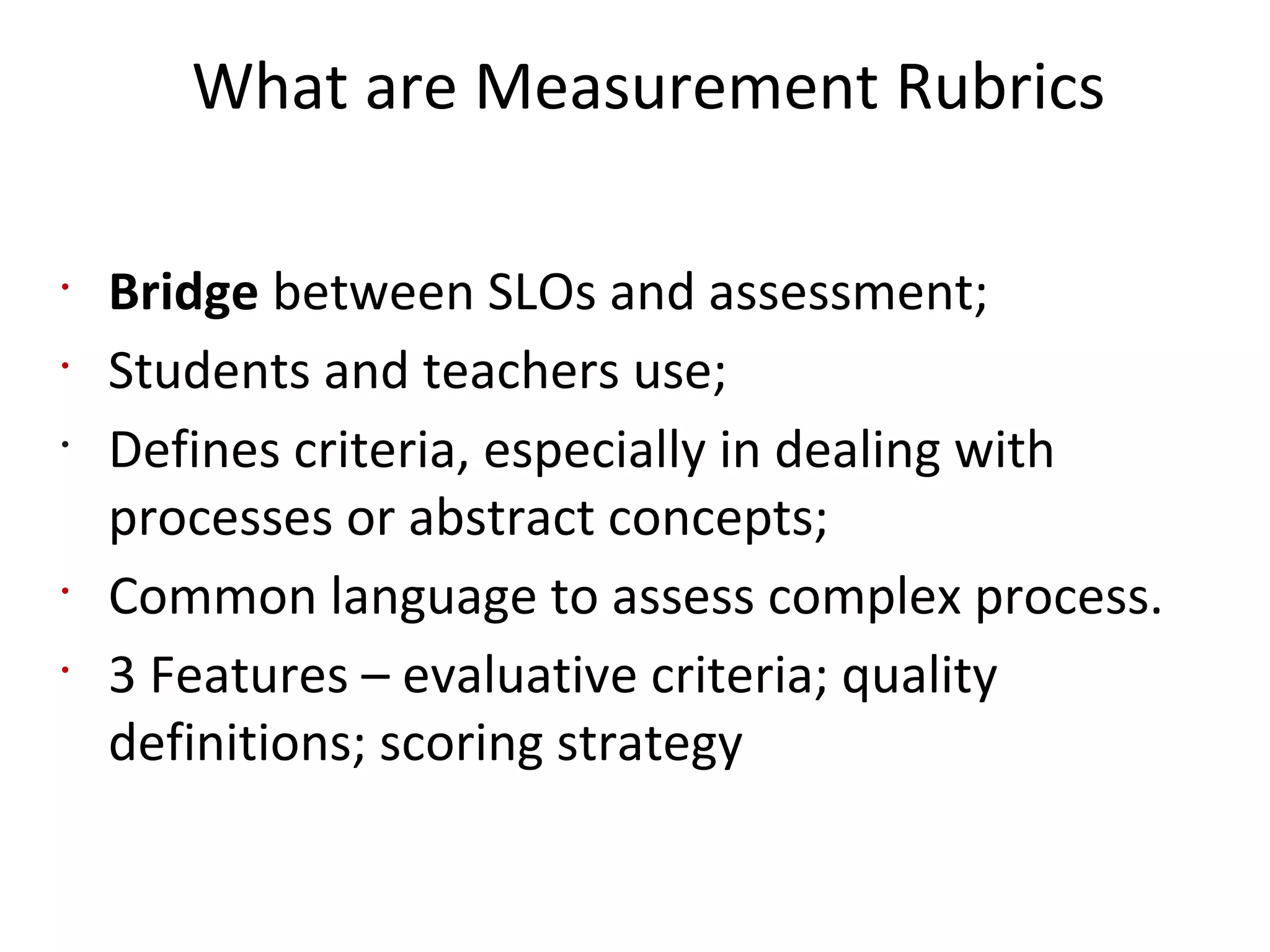

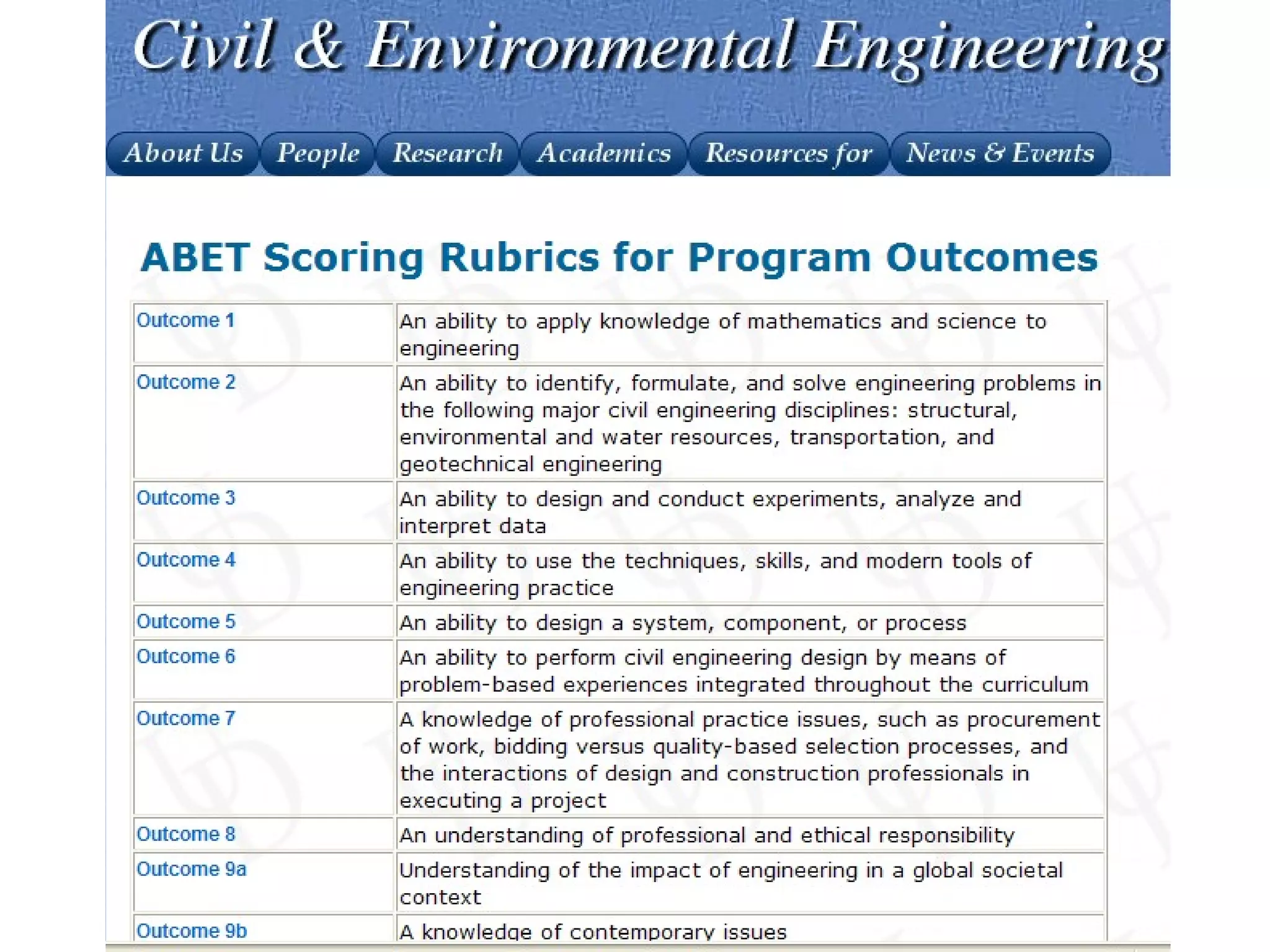

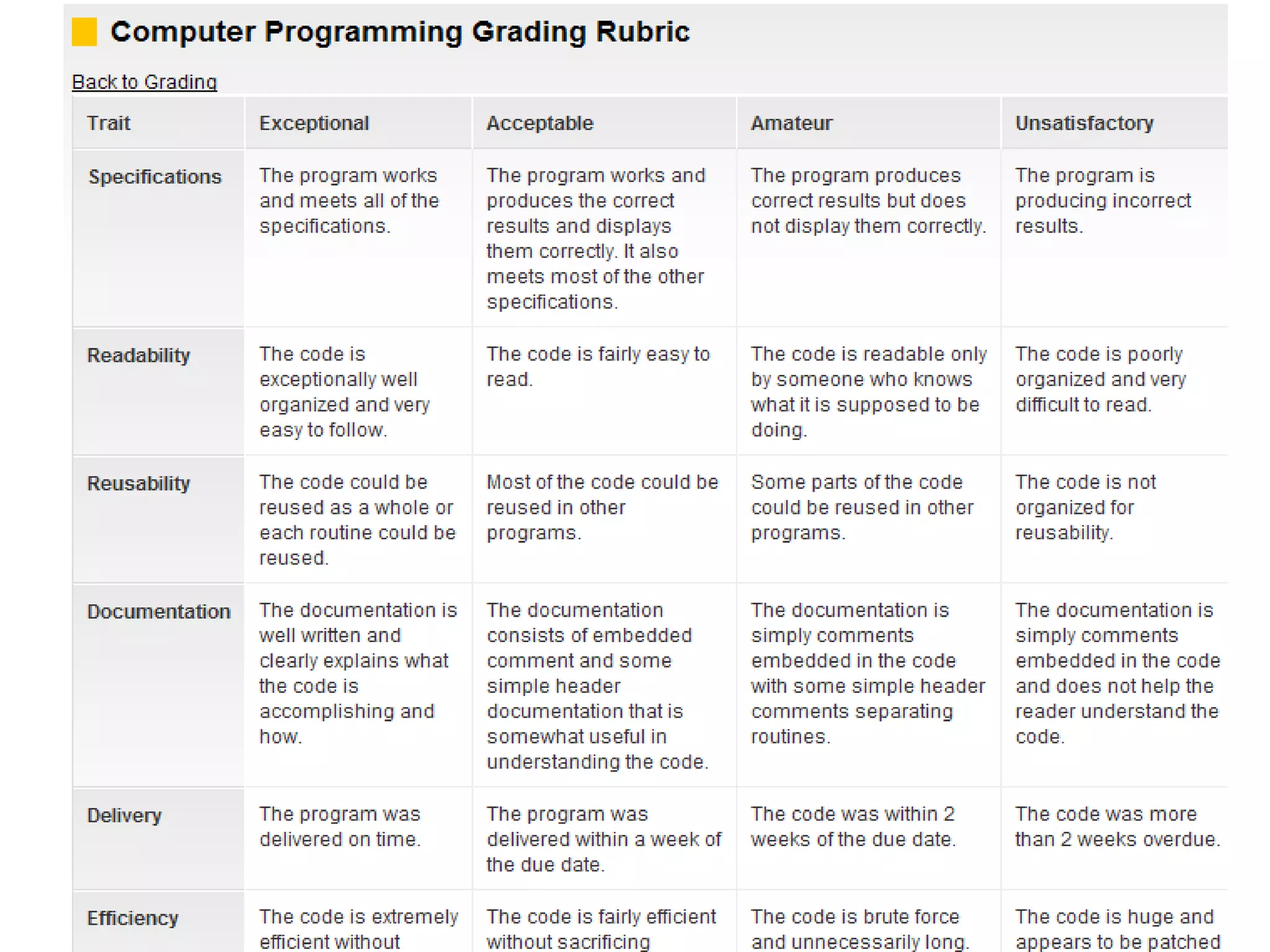

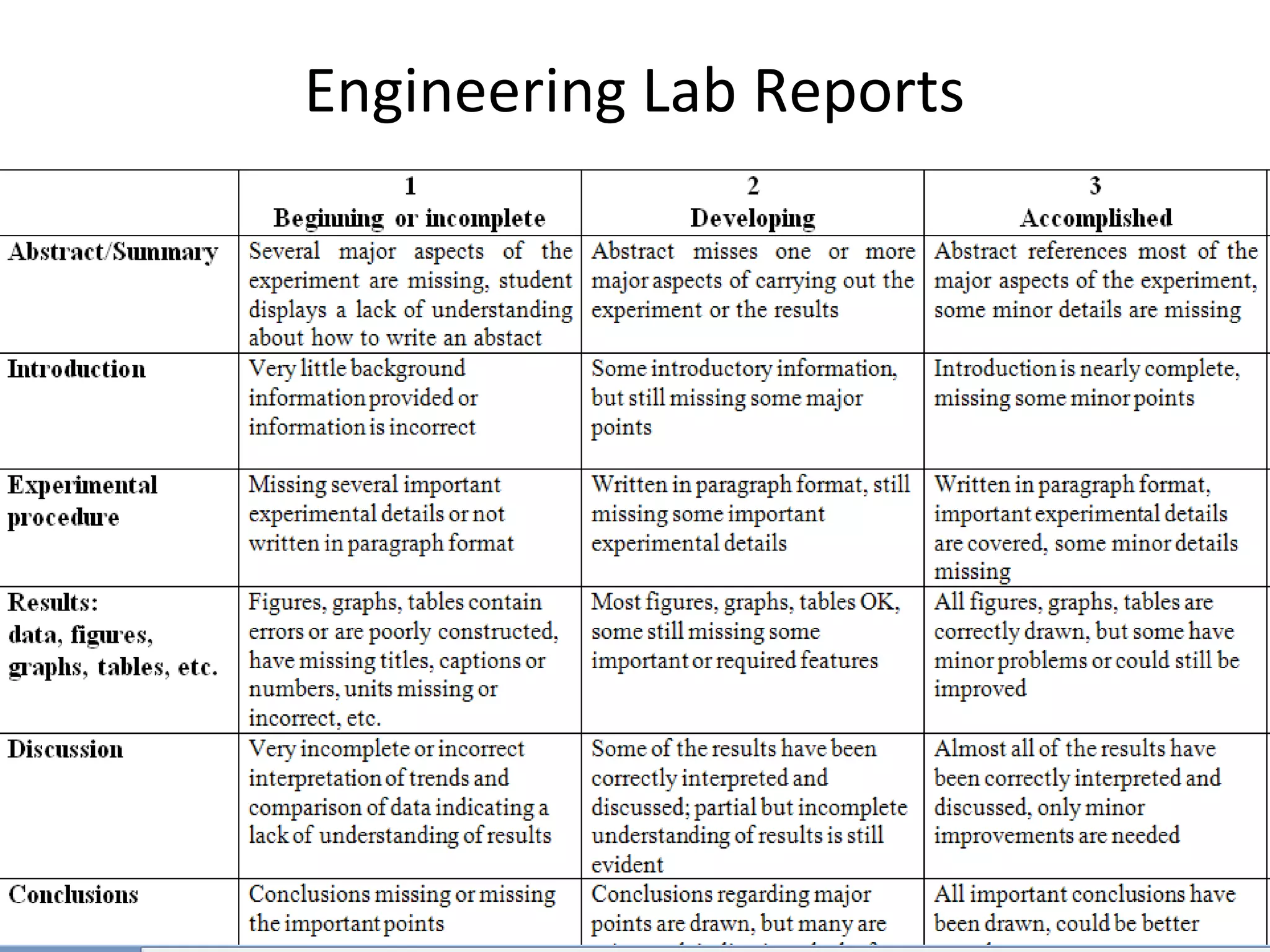

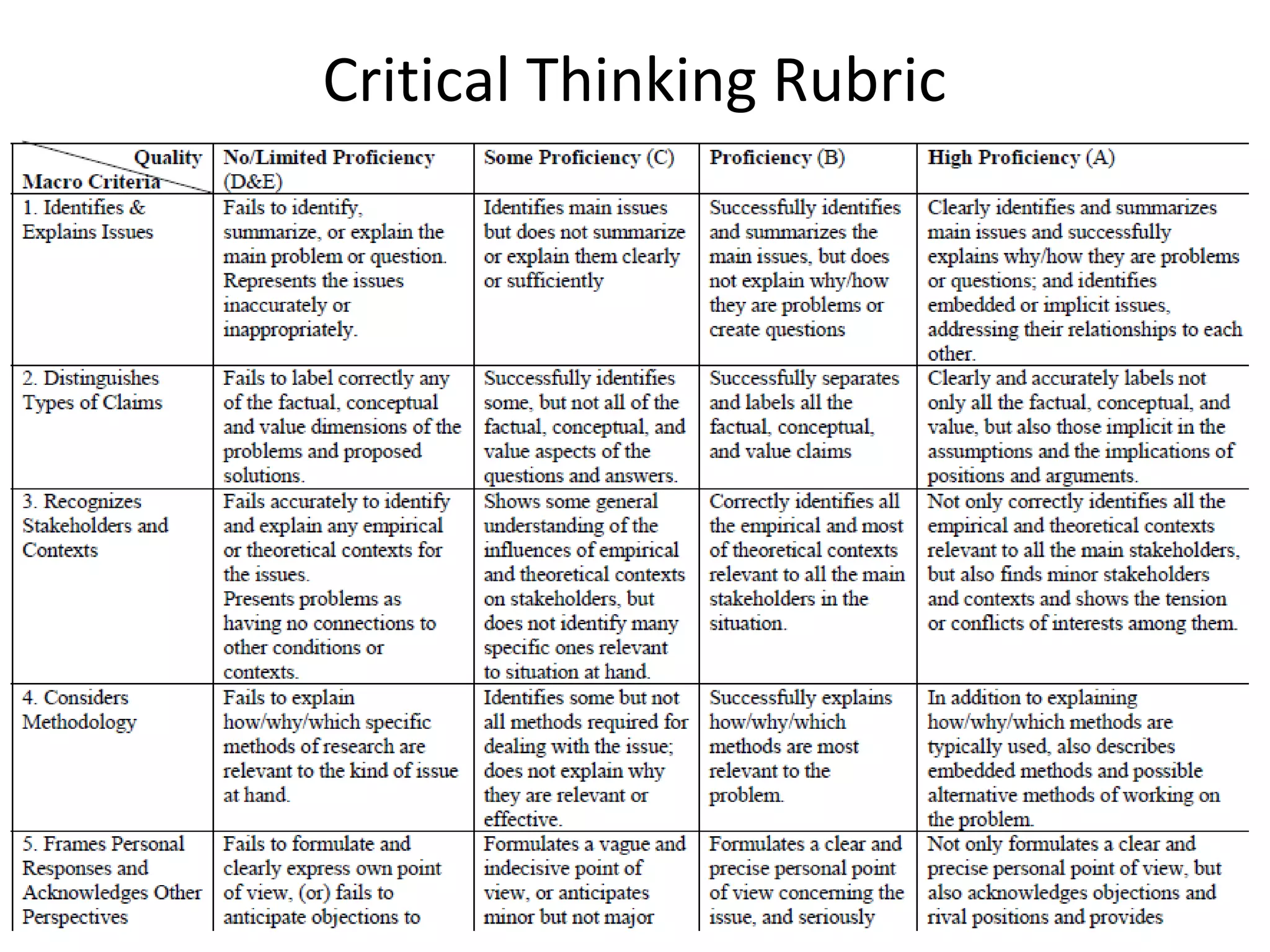

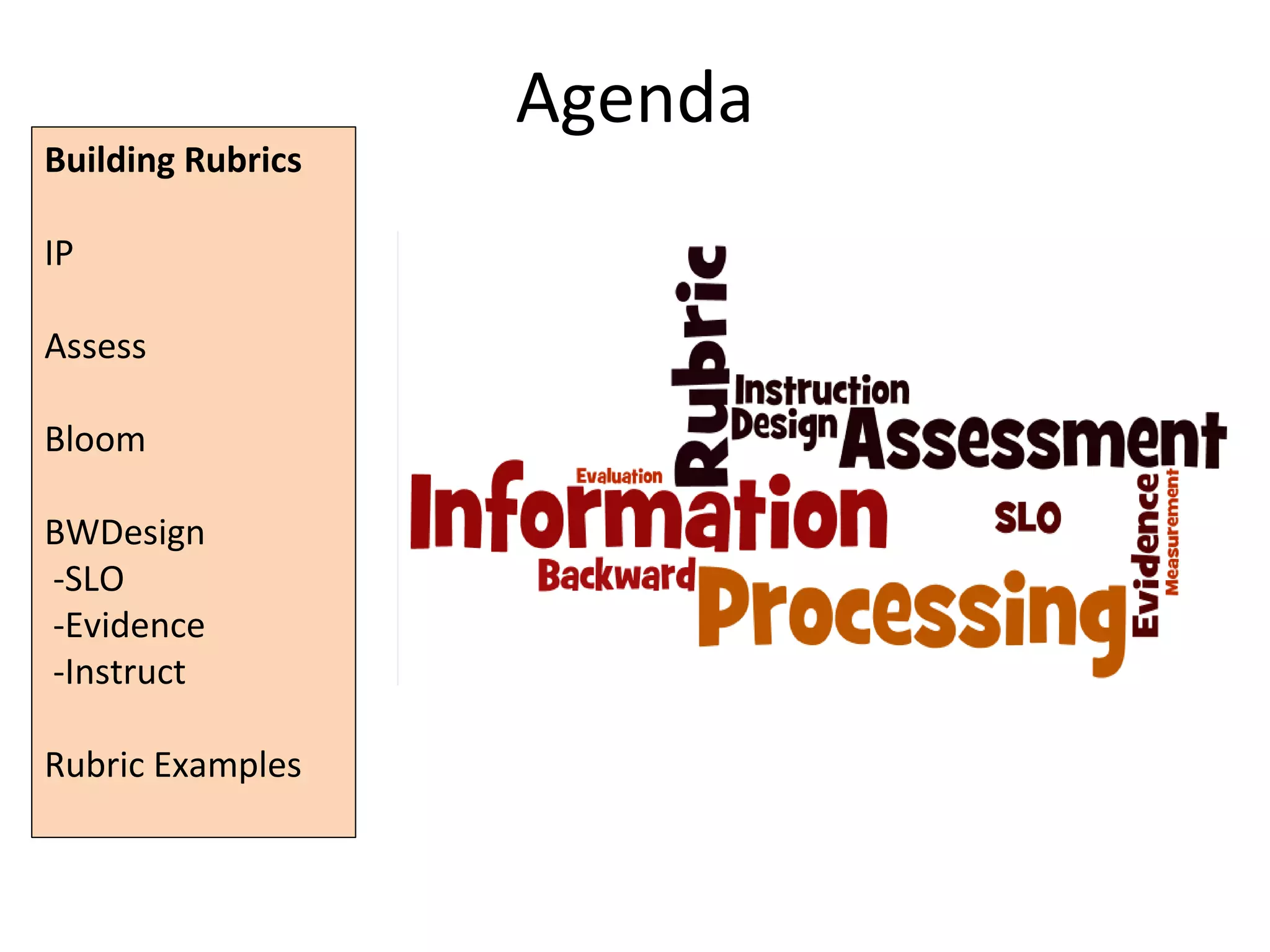

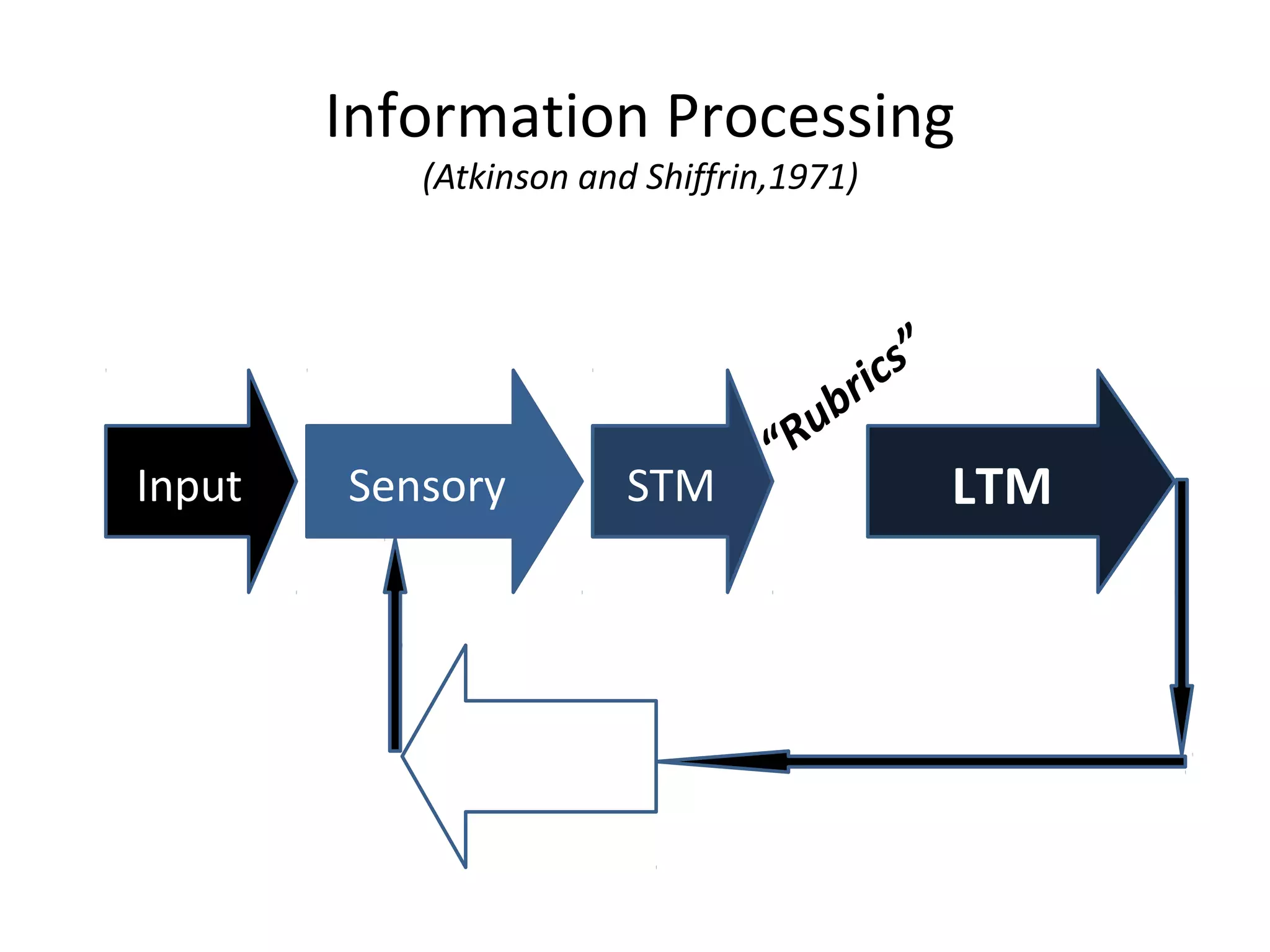

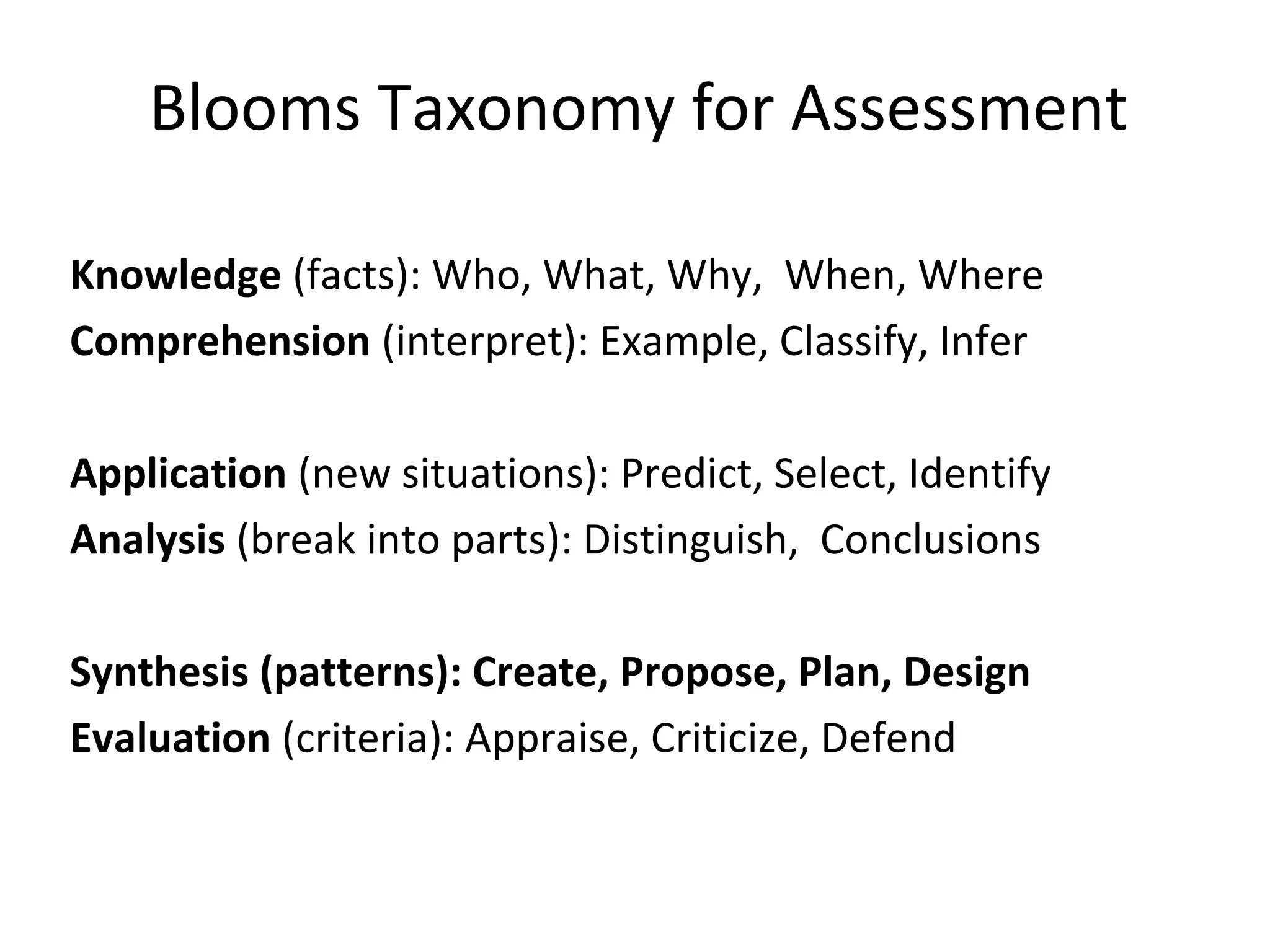

The document outlines strategies for creating rubrics in STEM higher education, focusing on integrating formative assessment and backward design principles. Key components include measuring student learning outcomes with defined criteria and ensuring rubrics are reliable and valid. It also provides resources and examples to aid educators in developing effective assessment tools.

![Course [re]Design: Understanding by

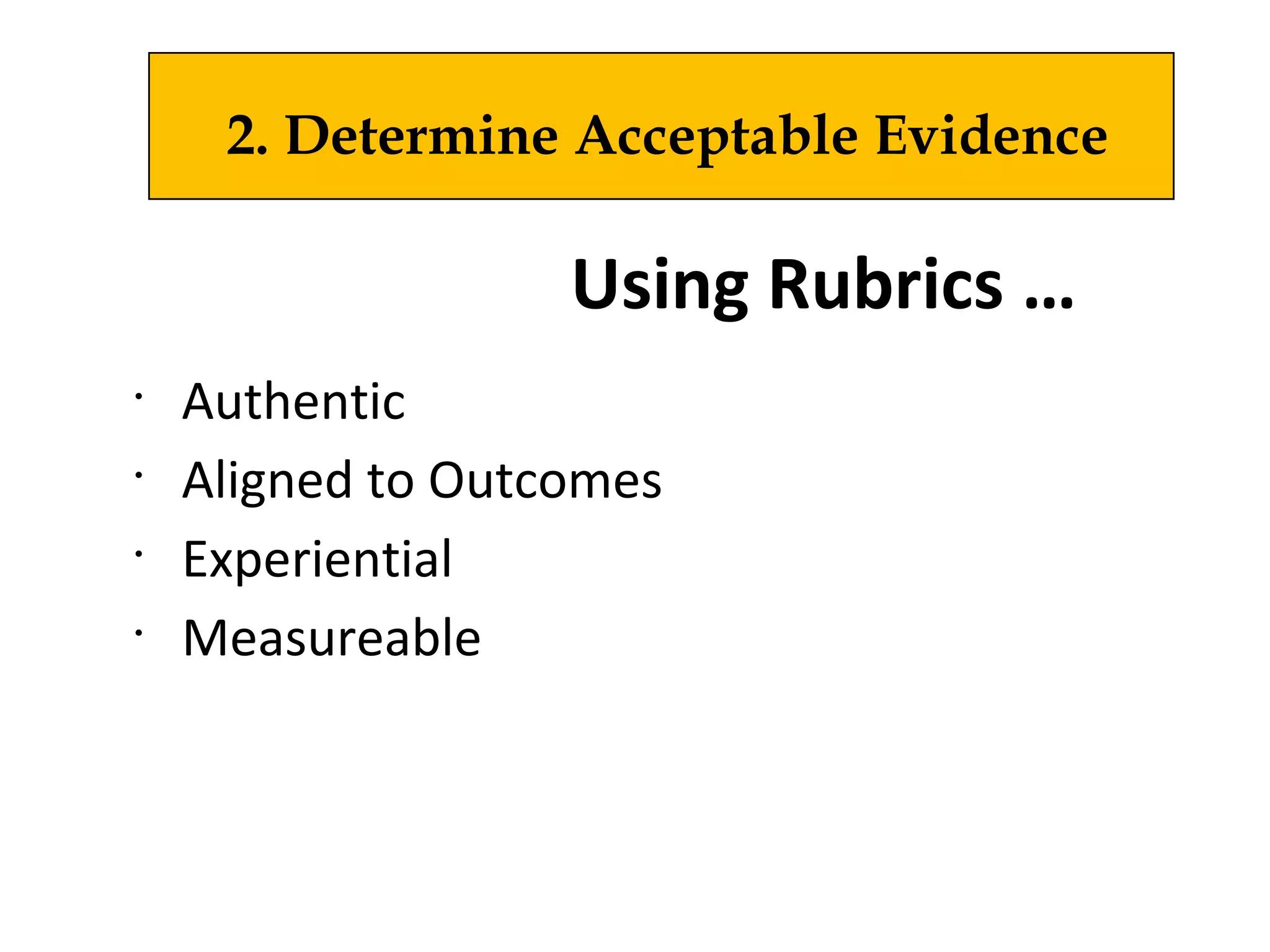

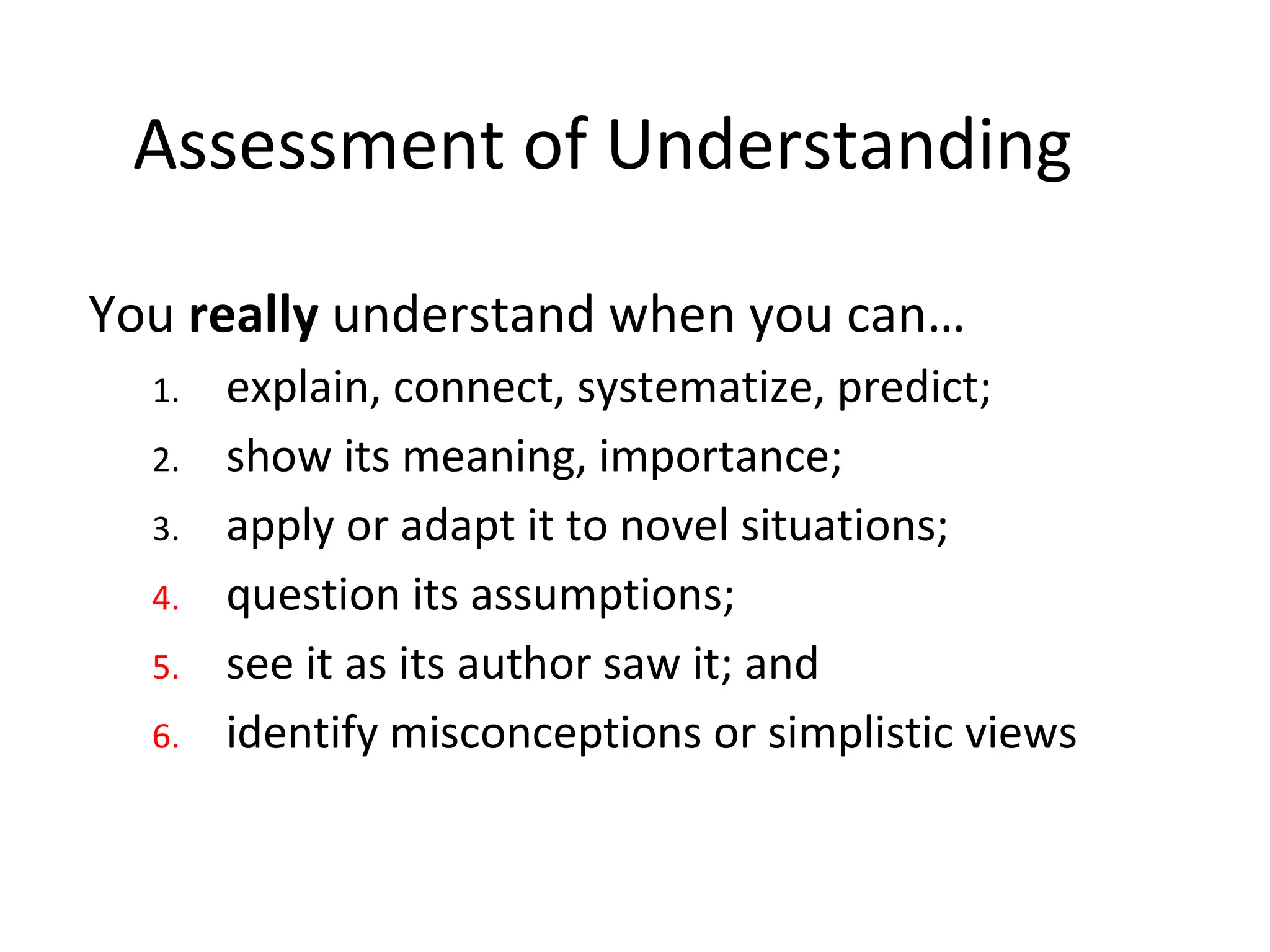

Backward Design

Wiggins &

McTighe (1998)

1. Identify desired results

2. Determine acceptable evidence

3. Plan learning experiences

& instruction](https://image.slidesharecdn.com/jacehargisrubricforstem-150201125623-conversion-gate02/75/Jace-Hargis-Rubrics-for-STEM-9-2048.jpg)

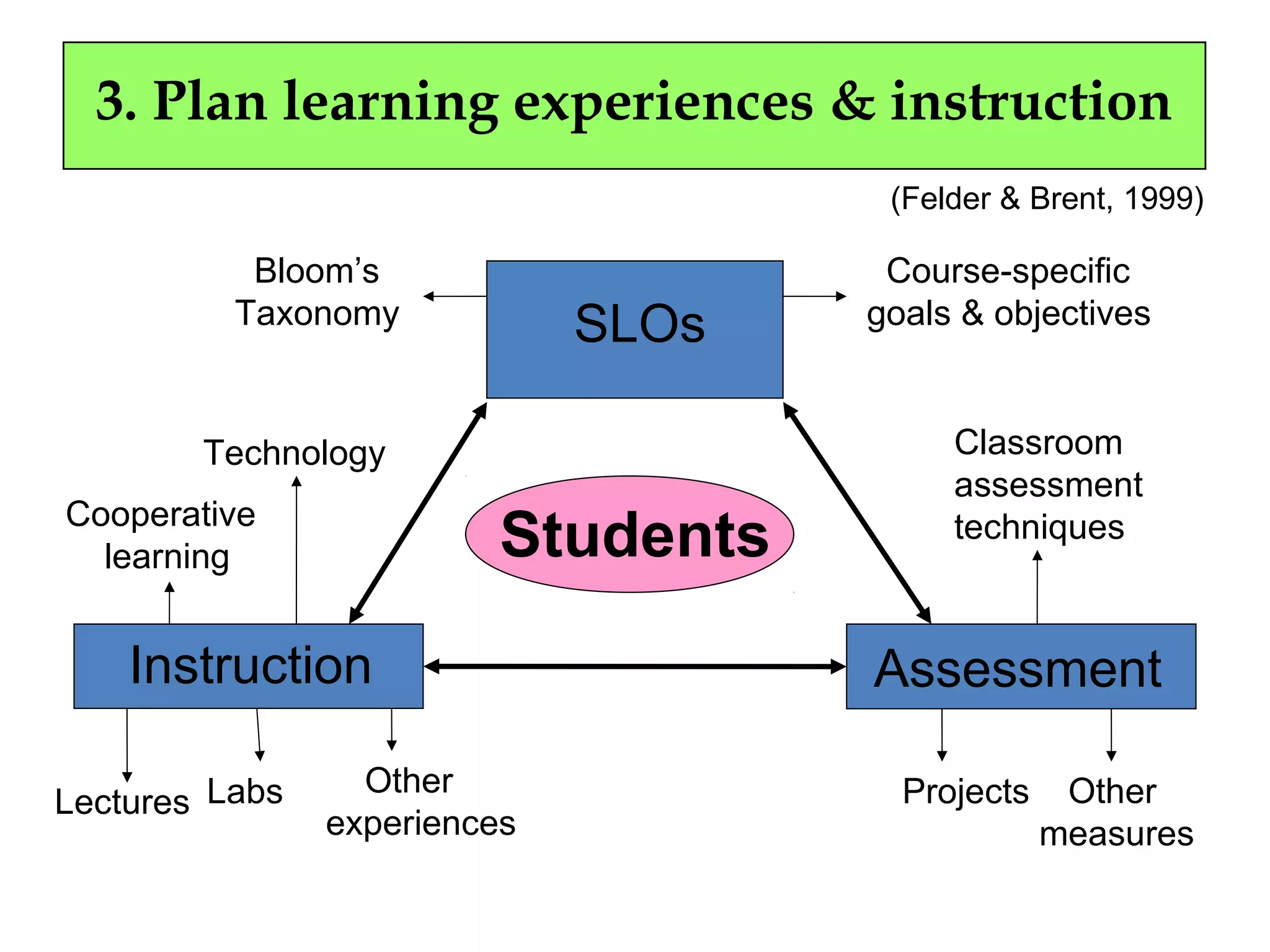

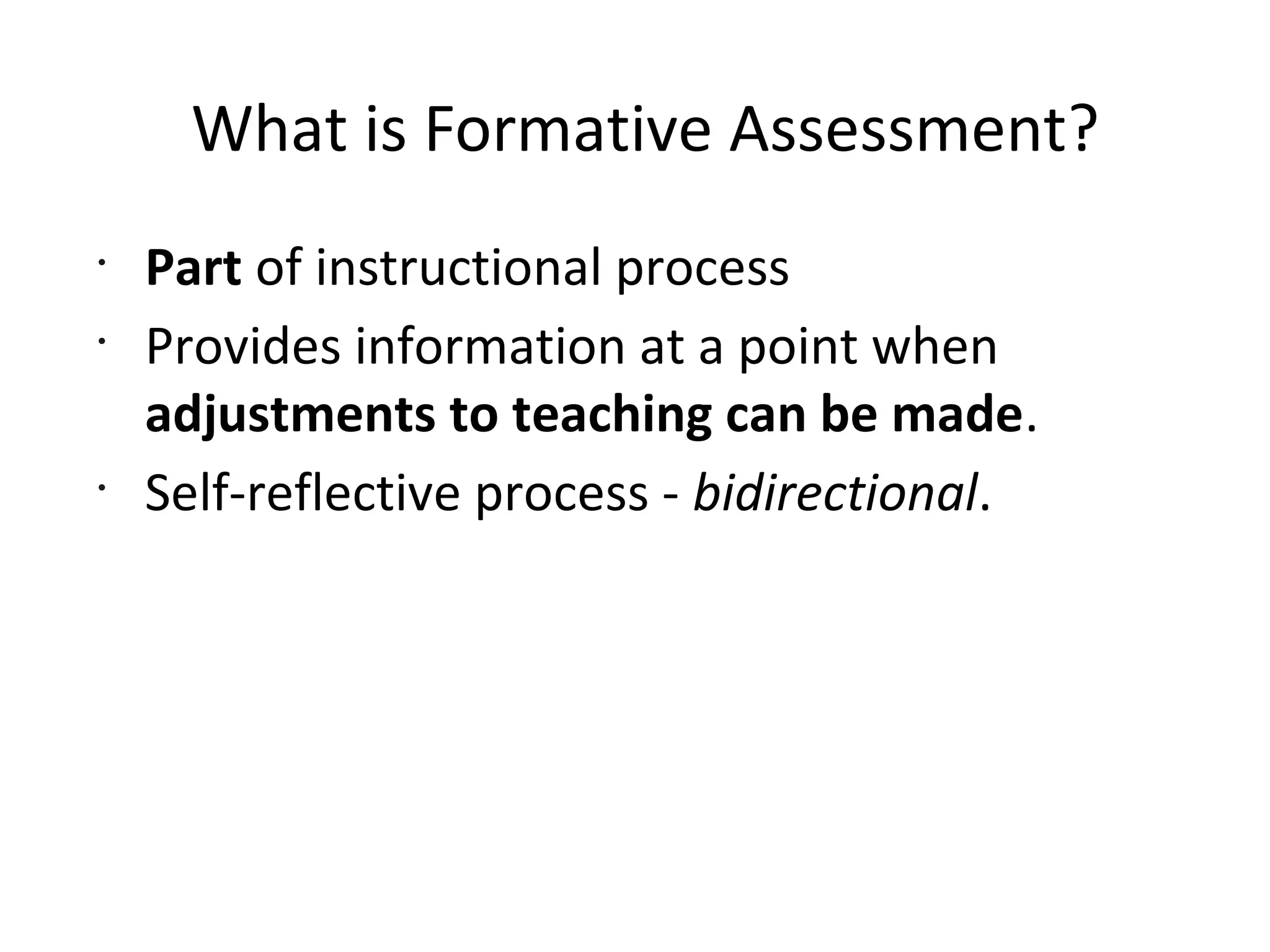

![Student Learning Outcome (SLO)](https://image.slidesharecdn.com/jacehargisrubricforstem-150201125623-conversion-gate02/75/Jace-Hargis-Rubrics-for-STEM-10-2048.jpg)

1. Identify Desired Results

Student Learning Outcome (SLO)

- Knowledge, Skills & Dispositions
  - active, high level
  - specifically under certain conditions;
  - to what degree they will be measured.
  - Substance (subject);
  - Form (action the learner performs) [analyze, demonstrate, derive, integrate, interpret, propose]