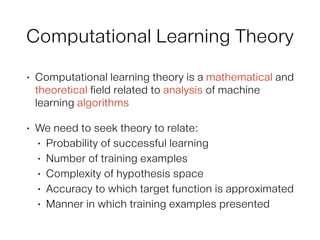

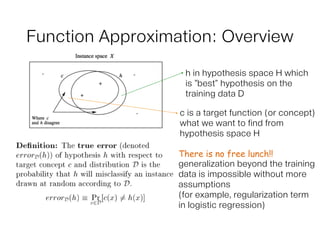

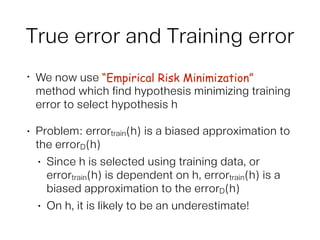

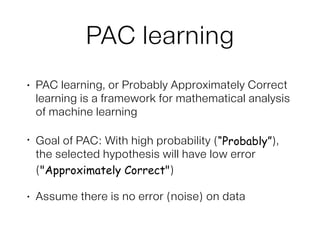

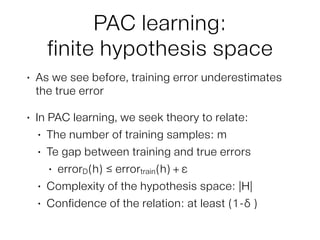

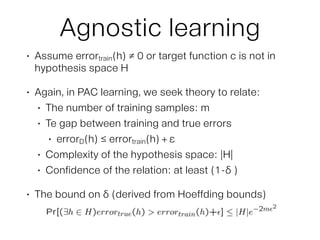

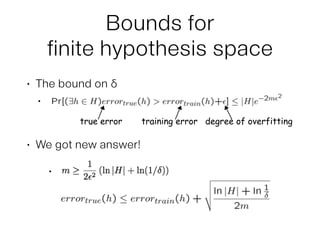

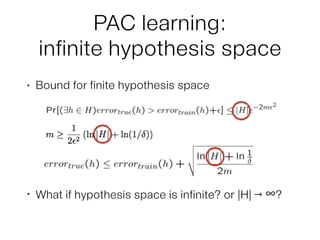

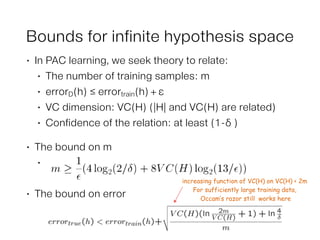

This document provides an overview of PAC (Probably Approximately Correct) learning theory. It discusses how PAC learning relates the probability of successful learning to the number of training examples, the complexity of the hypothesis space, and the accuracy with which the learned hypothesis approximates the target function. Key concepts include training error versus true error, overfitting, and the VC dimension as a measure of hypothesis space complexity. It also covers how PAC bounds are derived for finite hypothesis spaces, in terms of the training set size and the size of the hypothesis space, and for infinite hypothesis spaces, in terms of the training set size and the VC dimension.
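The summary above names the sample-complexity bounds without stating them. As a rough illustration, the sketch below computes the two forms commonly given in textbook treatments, on the assumption that these are the bounds the document has in mind: for a consistent learner over a finite hypothesis space, m ≥ (1/ε)(ln|H| + ln(1/δ)), and for an infinite hypothesis space, m ≥ (1/ε)(4 log₂(2/δ) + 8·VC(H)·log₂(13/ε)). The function names `finite_h_sample_bound` and `vc_sample_bound` are hypothetical, chosen only for this example.

```python
import math


def finite_h_sample_bound(h_size: int, epsilon: float, delta: float) -> int:
    """Examples sufficient for a consistent learner over a finite hypothesis space.

    Assumed textbook form: m >= (1/epsilon) * (ln|H| + ln(1/delta)).
    """
    if h_size < 1 or not (0 < epsilon < 1) or not (0 < delta < 1):
        raise ValueError("need |H| >= 1 and 0 < epsilon, delta < 1")
    return math.ceil((math.log(h_size) + math.log(1 / delta)) / epsilon)


def vc_sample_bound(vc_dim: int, epsilon: float, delta: float) -> int:
    """Examples sufficient when complexity is measured by the VC dimension.

    Assumed textbook form:
    m >= (1/epsilon) * (4*log2(2/delta) + 8*VC(H)*log2(13/epsilon)).
    """
    if vc_dim < 1 or not (0 < epsilon < 1) or not (0 < delta < 1):
        raise ValueError("need VC(H) >= 1 and 0 < epsilon, delta < 1")
    return math.ceil(
        (4 * math.log2(2 / delta) + 8 * vc_dim * math.log2(13 / epsilon)) / epsilon
    )


if __name__ == "__main__":
    # Conjunctions over 10 Boolean features: each feature appears positively,
    # negatively, or not at all, so |H| = 3**10.
    print(finite_h_sample_bound(3**10, epsilon=0.1, delta=0.05))  # 140
    # Illustrative VC dimension of 3 (e.g. linear separators in the plane).
    print(vc_sample_bound(3, epsilon=0.1, delta=0.05))
```

Both bounds grow only logarithmically in 1/δ but roughly linearly in 1/ε, so tightening the accuracy requirement costs far more examples than tightening the confidence requirement. These are sufficient sample sizes, not necessary ones, and are often loose in practice.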