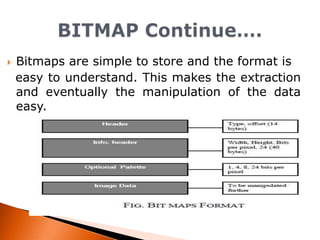

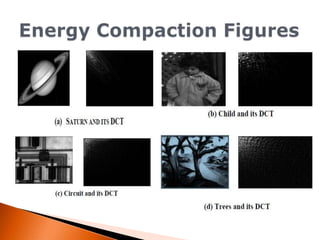

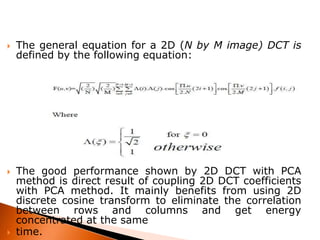

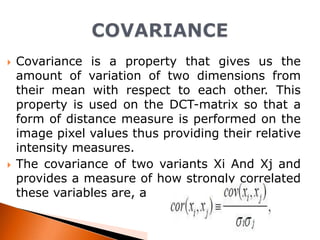

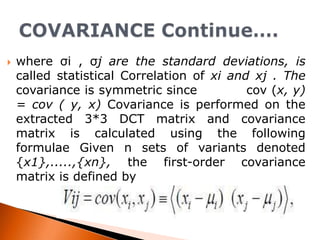

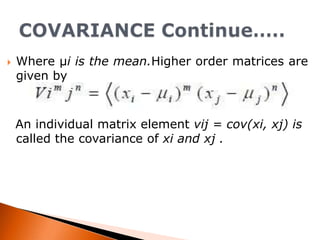

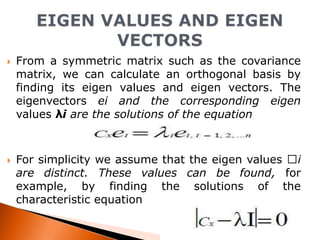

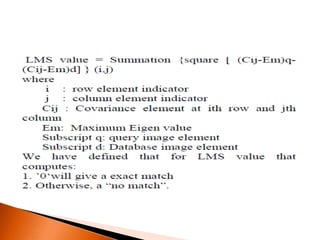

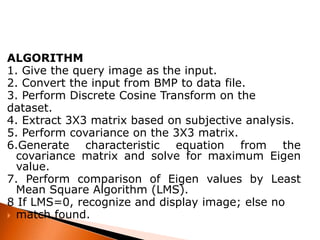

This document discusses using principal component analysis (PCA) and the discrete cosine transform (DCT) to recognize images from a database. It explains how bitmaps store image data, how the DCT compacts image energy into a few coefficients, and how PCA reduces dimensionality by taking the eigenvectors of the covariance matrix as principal components. The proposed algorithm applies the DCT, runs PCA on a 3x3 block, solves the characteristic equation to find the eigenvector of the largest eigenvalue, and uses a least mean square comparison to match a query against the database images.
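The pipeline summarized above can be illustrated with a minimal pure-Python sketch. It is not the document's own implementation, and several details are assumptions: the 3x3 block is taken to be the low-frequency (top-left) corner of the DCT coefficients, the dominant eigenvector is found by power iteration rather than by solving the characteristic equation, and the least mean square comparison is read as picking the database entry with the smallest mean squared difference between feature vectors. The function names (`dct2`, `feature`, `recognize`) are hypothetical, and images are assumed to be square grayscale lists of lists of size at least 3x3.

```python
import math

def dct2(image):
    """Orthonormal 2-D DCT-II of a square image given as a list of lists."""
    n = len(image)
    def alpha(k):
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            s = 0.0
            for x in range(n):
                for y in range(n):
                    s += (image[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            out[u][v] = alpha(u) * alpha(v) * s
    return out

def covariance(rows):
    """Sample covariance of the columns (each row is one observation)."""
    m, k = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / m for j in range(k)]
    return [[sum((r[i] - means[i]) * (r[j] - means[j]) for r in rows) / (m - 1)
             for j in range(k)] for i in range(k)]

def dominant_eigenvector(a, iters=200):
    """Power iteration: an assumed stand-in for the characteristic equation."""
    k = len(a)
    v = [1.0 / (2 ** i) for i in range(k)]  # asymmetric start vector
    for _ in range(iters):
        w = [sum(a[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return [0.0] * k  # zero matrix: no dominant direction
        v = [x / norm for x in w]
    # Fix the sign so that features from different images are comparable.
    if max(v, key=abs) < 0:
        v = [-x for x in v]
    return v

def feature(image):
    """DCT -> 3x3 low-frequency block -> dominant principal direction."""
    coeffs = dct2(image)
    block = [row[:3] for row in coeffs[:3]]
    return dominant_eigenvector(covariance(block))

def mse(a, b):
    """Mean squared difference between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def recognize(query, database):
    """Return the name of the database image closest to the query."""
    q = feature(query)
    return min(database, key=lambda name: mse(q, feature(database[name])))

# Toy database of two 4x4 grayscale images (made-up values).
database = {
    "ramp": [[0, 1, 2, 3], [1, 2, 3, 4], [2, 3, 4, 5], [3, 4, 5, 6]],
    "checker": [[9, 0, 9, 0], [0, 9, 0, 9], [9, 0, 9, 0], [0, 9, 0, 9]],
}
print(recognize(database["ramp"], database))
```

Because an eigenvector carries direction but not scale, this feature ignores overall brightness and contrast; a fuller implementation would likely keep more components or the eigenvalues as well. The direct four-loop DCT is only practical for small images.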