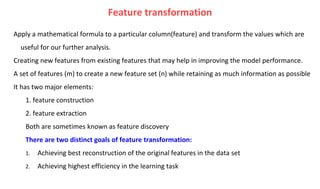

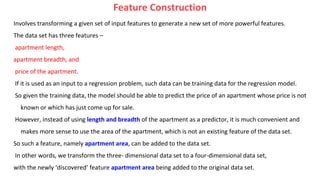

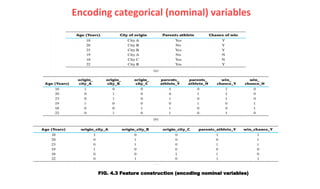

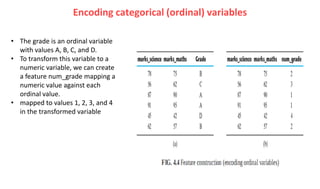

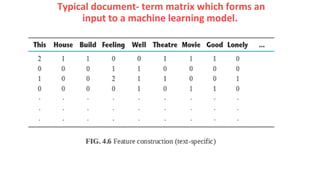

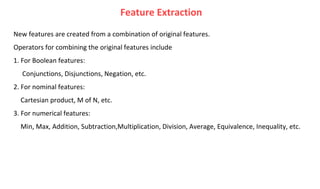

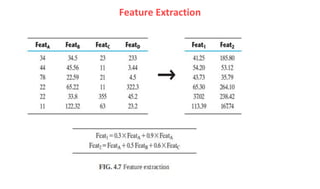

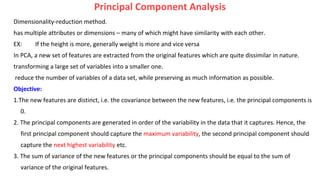

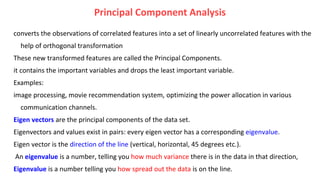

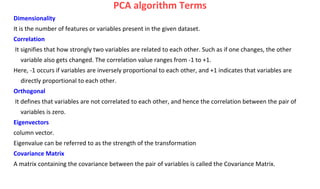

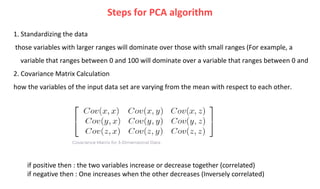

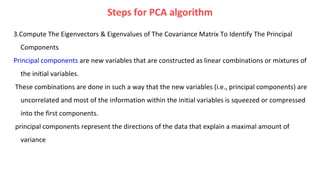

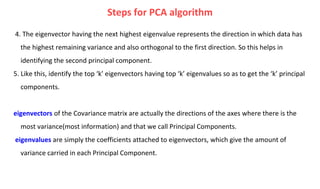

The document discusses feature engineering for machine learning. It defines feature engineering as the process of transforming raw data into features that better represent the data and improve machine learning performance. Some key techniques discussed include feature selection, construction, transformation, and extraction. Feature construction involves generating new features from existing ones, such as calculating apartment area from length and breadth. Feature extraction techniques discussed are principal component analysis, which transforms correlated features into linearly uncorrelated components capturing maximum variance. The document provides examples and steps for principal component analysis.

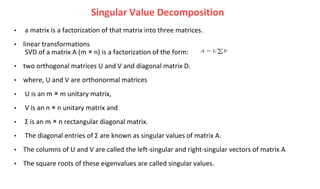

![1. Patterns in the attributes are captured by the right-singular vectors, i.e.the columns of V.

2. Patterns among the instances are captured by the left-singular, i.e. the

columns of U.

3. Larger a singular value, larger is the part of the matrix A that it accounts for and its

associated vectors.

4. New data matrix with k’ attributes is obtained using the equation

D = D × [v , v , … , v ]

Thus, the dimensionality gets reduced to k

SVD of a data matrix : properties](https://image.slidesharecdn.com/featureengineering-230302092121-5b4ecc49/85/Feature-Engineering-in-Machine-Learning-23-320.jpg)