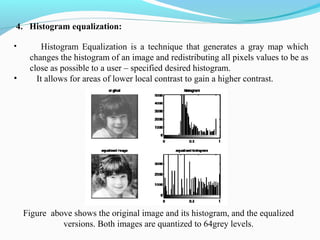

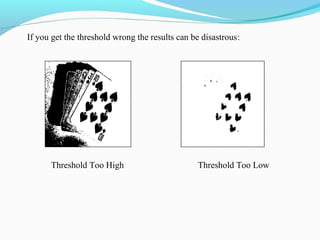

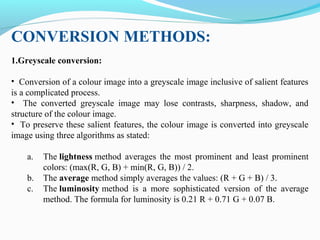

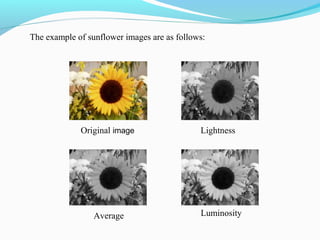

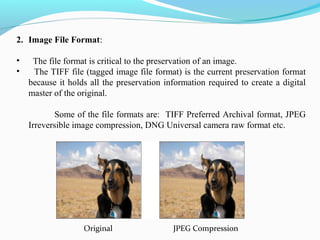

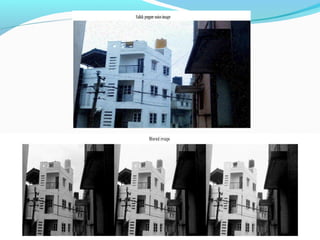

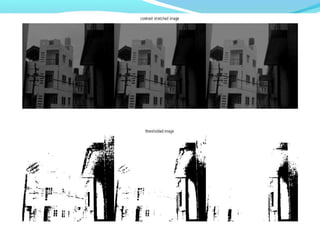

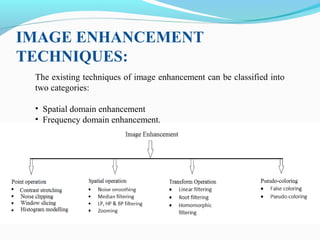

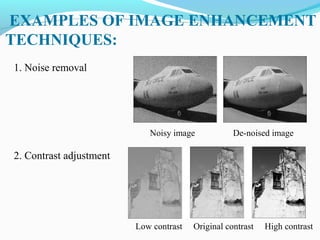

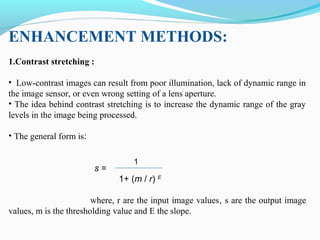

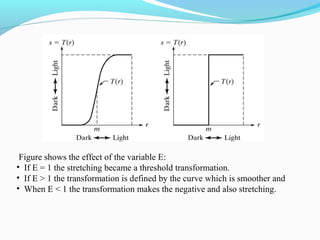

This document discusses techniques for image enhancement in the spatial domain. It defines image enhancement as improving the visual quality of an image or converting it into a form better suited to analysis. Key techniques covered include noise removal, contrast adjustment, intensity adjustment, histogram equalization, thresholding, gray level slicing, and image rotation. Conversion methods, such as conversion to grayscale and between file formats, are also summarized. Experimental results and applications in fields like medicine, astronomy, and security are mentioned.

![SPATIAL DOMAIN ENHANCEMENT:

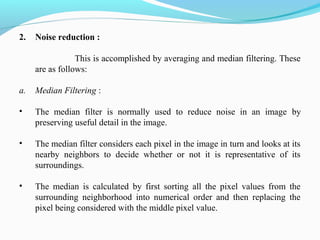

x

• Spatial domain techniques are performed to

the image plane itself and they are based on

direct manipulation of pixels in an image.

• The operation can be formulated as

g(x,y)=T[f(x,y)], where g is the output, f is the

input image and T is an operation on f defined

over some neighbourhood of (x,y).

• According to the operations on the image

pixels, it can be further divided into 2

categories:

oPoint operations and

oSpatial operations (including linear and non-

linear operations).](https://image.slidesharecdn.com/imageenhancementppt-nal2-140606021627-phpapp01/85/Image-enhancement-ppt-nal2-8-320.jpg)
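As a minimal sketch of the formulation g(x,y) = T[f(x,y)], the Python below (plain lists, no libraries) contrasts a point operation with a linear and a non-linear spatial operation. The function names, the 3x3 neighbourhood size, and the choice to leave border pixels unchanged are assumptions made for illustration; they do not come from the slides.

```python
# Tiny 8-bit grayscale "image" stored as a list of rows (illustrative data).
f = [
    [10, 10, 10],
    [10, 100, 10],
    [10, 10, 10],
]


def point_negative(image, max_level=255):
    """Point operation: each output pixel depends only on the same input pixel."""
    return [[max_level - p for p in row] for row in image]


def neighbourhood_3x3(image, x, y):
    """Collect the 9 pixel values centred on column x, row y."""
    return [image[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def spatial_filter_3x3(image, reduce_fn):
    """Spatial operation: each interior output pixel is computed from its
    3x3 neighbourhood. Border pixels are copied unchanged (assumed policy)."""
    h, w = len(image), len(image[0])
    g = [row[:] for row in image]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            g[y][x] = reduce_fn(neighbourhood_3x3(image, x, y))
    return g


def mean(values):
    """Linear reduction: integer average of the neighbourhood."""
    return sum(values) // len(values)


def median(values):
    """Non-linear reduction: middle value of the sorted neighbourhood."""
    return sorted(values)[len(values) // 2]


negative = point_negative(f)             # T acts on a single pixel
smoothed = spatial_filter_3x3(f, mean)   # linear spatial operation
despeckled = spatial_filter_3x3(f, median)  # non-linear spatial operation
```

In this example the bright centre pixel is inverted by the point operation, averaged down by the mean filter, and replaced outright by the median filter, which is why median filtering is a common choice for isolated noise spikes.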

3. Intensity Adjustment:

• Intensity adjustment is a technique for mapping an image's intensity values to a new range.

• For example, rice.tif is a low-contrast image. Its histogram, shown in the figure below, indicates that there are no values below 40 or above 225. If you remap the data values to fill the entire intensity range [0, 255], you can increase the contrast of the image.

• You can do this kind of adjustment with the imadjust function. The general syntax of imadjust is

`J = imadjust(I,[low_in high_in],[low_out high_out])`

![3. Intensity Adjustment](https://image.slidesharecdn.com/imageenhancementppt-nal2-140606021627-phpapp01/85/Image-enhancement-ppt-nal2-14-320.jpg)
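The remapping described above can be sketched in plain Python as a linear stretch from [low_in, high_in] to [low_out, high_out]. This is not the imadjust implementation: the function name `adjust_intensity`, the clipping of out-of-range values, and the rounding rule are assumptions for illustration. The sketch also works directly on 0 to 255 gray levels, whereas imadjust, as documented, takes its limits normalized to [0, 1].

```python
def adjust_intensity(image, low_in, high_in, low_out=0, high_out=255):
    """Linearly map [low_in, high_in] onto [low_out, high_out].

    Values outside the input range are clipped to it first (assumed behaviour).
    """
    scale = (high_out - low_out) / (high_in - low_in)
    result = []
    for row in image:
        new_row = []
        for p in row:
            p = min(max(p, low_in), high_in)  # clip to the input range
            new_row.append(round(low_out + (p - low_in) * scale))
        result.append(new_row)
    return result


# Illustrative pixel values spanning the 40..225 range quoted for rice.tif.
image = [[40, 100], [180, 225]]
stretched = adjust_intensity(image, low_in=40, high_in=225)
print(stretched)  # [[0, 83], [193, 255]]
```

The darkest input level (40) lands on 0 and the brightest (225) on 255, so the output uses the full intensity range and the differences between neighbouring levels grow by a factor of 255/185.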