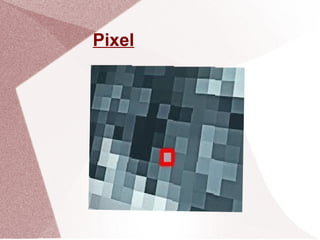

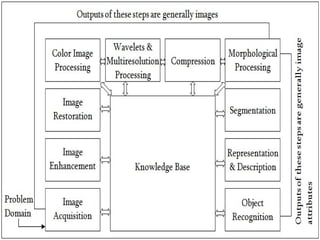

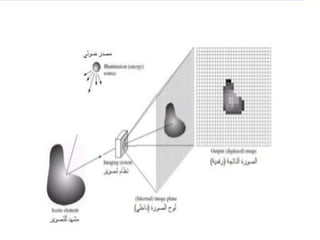

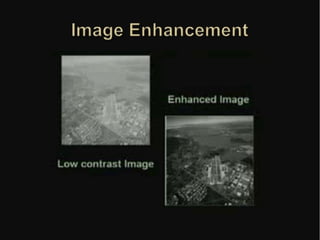

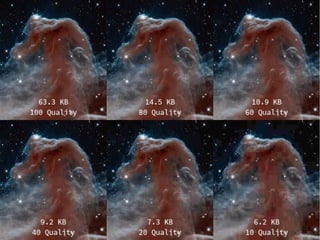

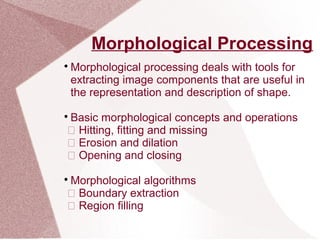

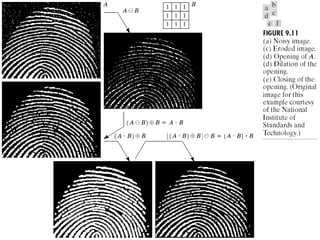

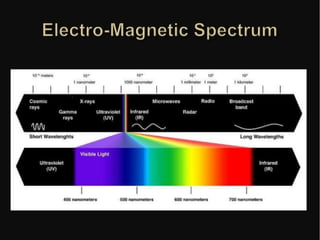

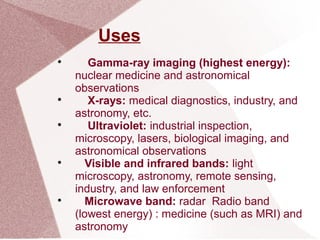

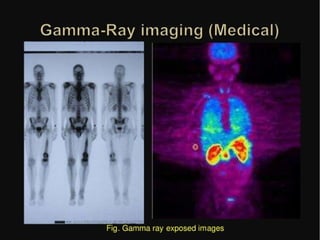

This document gives an overview of digital image processing: what digital images are composed of and how computers process them. It describes the key steps as image acquisition, enhancement, restoration, representation and description, and recognition. Each step can draw on a range of techniques, such as filtering, segmentation, morphological operations, and compression. The document also outlines common sources of digital images, including imaging across the electromagnetic spectrum, and applications such as medical imaging, astronomy, security screening, and human-computer interfaces.
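To make two of the steps named above concrete, here is a minimal sketch in plain Python of an enhancement step (a 3x3 mean filter) followed by a simple segmentation step (global thresholding). The function names, the tiny 4x4 test image, and the threshold of 80 are illustrative assumptions, not taken from the slides; real pipelines would normally use an image library and a filter chosen for the noise at hand.

```python
def mean_filter(image):
    """Smooth a grayscale image (a list of rows) with a 3x3 mean filter.

    Border pixels are averaged over only the neighbors that lie inside
    the image, so the output has the same size as the input.
    """
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            neighbors = [
                image[j][i]
                for j in range(max(0, y - 1), min(height, y + 2))
                for i in range(max(0, x - 1), min(width, x + 2))
            ]
            out[y][x] = sum(neighbors) / len(neighbors)
    return out


def threshold(image, t):
    """Segment an image into a binary mask: 1 where pixel >= t, else 0."""
    return [[1 if pixel >= t else 0 for pixel in row] for row in image]


# Hypothetical 4x4 grayscale image: a bright 2x2 object on a dark background.
image = [
    [10, 10, 10, 10],
    [10, 200, 200, 10],
    [10, 200, 200, 10],
    [10, 10, 10, 10],
]

smoothed = mean_filter(image)    # enhancement: suppress pixel-level variation
mask = threshold(smoothed, 80)   # segmentation: separate object from background

for row in mask:
    print(row)
```

The mean filter trades sharpness for noise suppression, which is why the threshold here is set well below the object's original intensity: smoothing pulls the object's pixel values toward the background.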