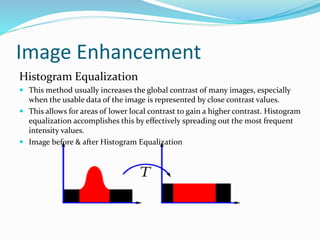

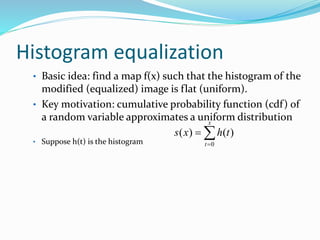

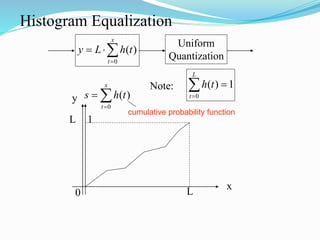

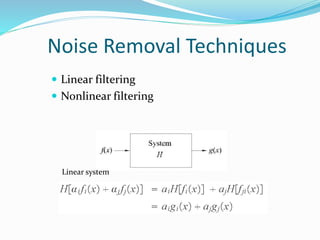

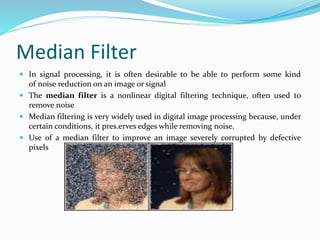

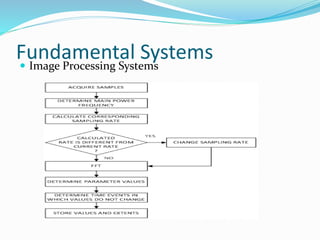

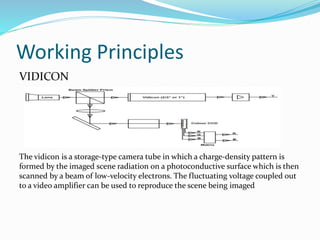

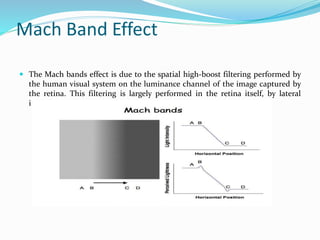

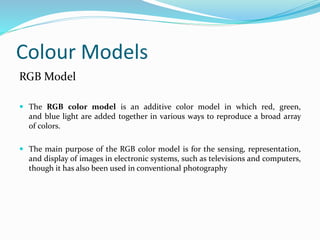

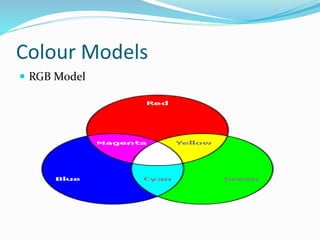

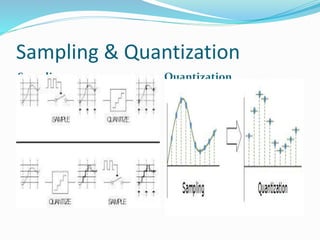

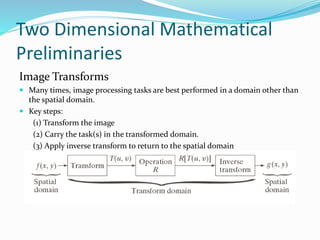

This document discusses digital image processing and various image enhancement techniques. It begins with an introduction to digital image processing and fundamental image processing systems, then covers image sampling and quantization, color models, image transforms such as the discrete Fourier transform, and noise removal techniques such as median filtering. It also summarizes histogram equalization and homomorphic filtering as image enhancement methods.

Karhunen–Loève Transform (KLT)

The KLT represents a stochastic process as an infinite linear combination of orthogonal functions, analogous to a Fourier series representation of a function on a bounded interval. In contrast to a Fourier series, where the coefficients are fixed numbers and the expansion basis consists of sinusoidal functions (that is, sine and cosine functions), the coefficients in the Karhunen–Loève theorem are random variables and the expansion basis depends on the process.

Theorem. Let $X_t$ be a zero-mean, square-integrable stochastic process defined over a probability space $(\Omega, F, P)$ and indexed over a closed and bounded interval $[a, b]$, with continuous covariance function $K_X(s, t)$. Then $K_X(s, t)$ is a Mercer kernel and, letting $e_k$ be an orthonormal basis of $L^2([a, b])$ formed by the eigenfunctions of $T_{K_X}$ with respective eigenvalues $\lambda_k$, $X_t$ admits the following representation with random coefficients $Z_k$:

$$X_t = \sum_{k=1}^{\infty} Z_k \, e_k(t)$$

where the convergence is in $L^2$, uniform in $t$ and …

![Karhunen–Loève Transform (KLT)](https://image.slidesharecdn.com/digitalimageprocessing-160907143712/85/Digital-image-processing-19-320.jpg)
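As a concrete illustration of the theorem, the sketch below uses one process for which the eigenfunctions are known in closed form: the standard Wiener process on $[0, 1]$, whose covariance is $K_X(s, t) = \min(s, t)$. This choice of process is an assumption made for the example and does not come from the slides. For it, $\lambda_k = 1 / ((k - \tfrac{1}{2})^2 \pi^2)$ and $e_k(t) = \sqrt{2} \sin((k - \tfrac{1}{2}) \pi t)$, and the coefficients $Z_k$ are independent Gaussians with variance $\lambda_k$. The function names are hypothetical, and the series is truncated to a finite number of terms, so the results are approximations.

```python
import math
import random


def eigenvalue(k):
    """lambda_k for the Wiener process on [0, 1] (k = 1, 2, ...)."""
    return 1.0 / (((k - 0.5) * math.pi) ** 2)


def eigenfunction(k, t):
    """Orthonormal eigenfunction e_k(t) of the covariance operator."""
    return math.sqrt(2.0) * math.sin((k - 0.5) * math.pi * t)


def mercer_covariance(s, t, n_terms):
    """Truncated Mercer sum: sum_k lambda_k * e_k(s) * e_k(t)."""
    return sum(
        eigenvalue(k) * eigenfunction(k, s) * eigenfunction(k, t)
        for k in range(1, n_terms + 1)
    )


def inner_product(j, k, n_grid=2000):
    """Midpoint-rule approximation of the L2([0, 1]) inner product <e_j, e_k>."""
    h = 1.0 / n_grid
    return h * sum(
        eigenfunction(j, (i + 0.5) * h) * eigenfunction(k, (i + 0.5) * h)
        for i in range(n_grid)
    )


def sample_path(times, n_terms, rng):
    """Draw one approximate path X_t = sum_k Z_k * e_k(t), truncated to n_terms.

    Z_k = sqrt(lambda_k) * xi_k with xi_k i.i.d. standard normal, so the
    coefficients are zero-mean, uncorrelated, and have variance lambda_k.
    """
    z = [math.sqrt(eigenvalue(k)) * rng.gauss(0.0, 1.0)
         for k in range(1, n_terms + 1)]
    return [
        sum(z[k - 1] * eigenfunction(k, t) for k in range(1, n_terms + 1))
        for t in times
    ]


if __name__ == "__main__":
    # The truncated Mercer sum should approach min(s, t) as n_terms grows.
    print(mercer_covariance(0.3, 0.7, 2000), "vs", min(0.3, 0.7))
    # One random path evaluated on a coarse time grid.
    rng = random.Random(0)
    print(sample_path([0.0, 0.25, 0.5, 0.75, 1.0], 200, rng))
```

Unlike a Fourier basis, the basis here is determined by the covariance of the process. For image data the same idea is usually applied in discrete form, with the eigenvectors of a sample covariance matrix taking the place of the eigenfunctions.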