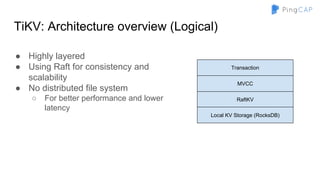

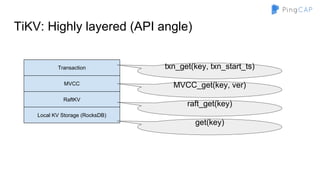

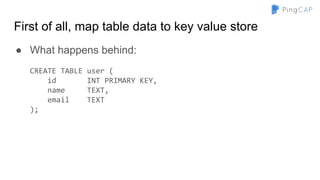

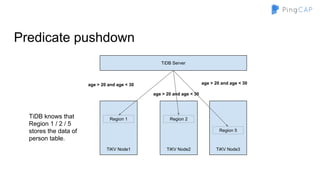

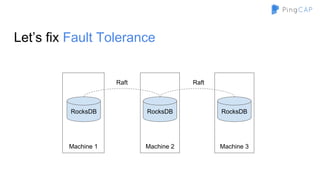

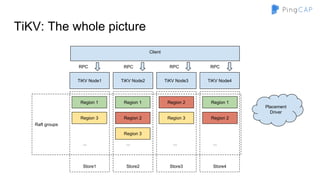

The document outlines the development of TiDB, a NewSQL database built by PingCAP, emphasizing its scalability, fault tolerance, and support for ACID transactions. It describes the architecture, which utilizes a distributed key-value store with features like data replication using Raft, horizontal scalability through partitioning, and SQL compatibility achieved through mapping relations to a key-value model. The overall goal is to create a highly available and performant database that can efficiently handle large-scale data and complex queries.

![That’s Cool, but...](https://image.slidesharecdn.com/howtobuildtidb-171130073818/85/How-to-build-TiDB-9-320.jpg)

- But what if we want to scan data?
  - How to support the API `scan(startKey, endKey, limit)`?
- So, we need a globally ordered map
  - Can’t use hash partitioning
  - Use range partitioning
    - Region 1 -> [a - d]
    - Region 2 -> [e - h]
    - …
    - Region n -> [w - z]
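To make the slide's point concrete, here is a minimal single-process sketch of range partitioning with an ordered `scan(startKey, endKey, limit)`. It is not TiDB or TiKV code: the `Cluster`, `Region`, and `KV` types, the split keys, and the use of a Go map inside each region are all assumptions made for illustration. The idea it shows is that because each region owns a contiguous key range and the regions are kept in key order, a scan can locate the first region with a binary search and then walk neighbouring regions in order, which hash partitioning would not allow.

```go
package main

import (
	"fmt"
	"sort"
)

// KV is one key-value pair returned by a scan.
type KV struct{ Key, Value string }

// Region is a hypothetical shard owning keys from Start (inclusive)
// up to the Start of the next region (exclusive).
type Region struct {
	ID    int
	Start string
	Data  map[string]string
}

// Cluster keeps its regions sorted by Start key.
type Cluster struct {
	regions []*Region
}

// NewCluster builds len(splitKeys)+1 regions. splitKeys must be sorted.
func NewCluster(splitKeys ...string) *Cluster {
	c := &Cluster{}
	start := ""
	for i, split := range splitKeys {
		c.regions = append(c.regions, &Region{ID: i + 1, Start: start, Data: map[string]string{}})
		start = split
	}
	c.regions = append(c.regions, &Region{ID: len(splitKeys) + 1, Start: start, Data: map[string]string{}})
	return c
}

// locate returns the index of the region whose range contains key.
func (c *Cluster) locate(key string) int {
	// First region whose Start is greater than key, minus one.
	return sort.Search(len(c.regions), func(i int) bool {
		return c.regions[i].Start > key
	}) - 1
}

// Put routes the write to the region that owns the key.
func (c *Cluster) Put(key, value string) {
	c.regions[c.locate(key)].Data[key] = value
}

// Scan returns up to limit pairs with startKey <= key < endKey, in key order.
func (c *Cluster) Scan(startKey, endKey string, limit int) []KV {
	var out []KV
	for i := c.locate(startKey); i < len(c.regions) && len(out) < limit; i++ {
		r := c.regions[i]
		if r.Start >= endKey {
			break // this region and all later ones lie past the scan range
		}
		// A real store would keep keys ordered on disk; here we sort per region.
		keys := make([]string, 0, len(r.Data))
		for k := range r.Data {
			if k >= startKey && k < endKey {
				keys = append(keys, k)
			}
		}
		sort.Strings(keys)
		for _, k := range keys {
			if len(out) == limit {
				break
			}
			out = append(out, KV{k, r.Data[k]})
		}
	}
	return out
}

func main() {
	c := NewCluster("e", "i", "m") // four regions split at "e", "i", "m"
	for _, k := range []string{"banana", "apple", "fig", "kiwi", "grape", "pear"} {
		c.Put(k, "v-"+k)
	}
	for _, kv := range c.Scan("b", "k", 3) {
		fmt.Println(kv.Key, kv.Value)
	}
}
```

Under these assumptions the scan touches only the first two regions and stops as soon as the limit of three results is reached, without visiting regions whose ranges start at or after `endKey`.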

![Transaction API style (Go code)](https://image.slidesharecdn.com/howtobuildtidb-171130073818/85/How-to-build-TiDB-22-320.jpg)

    txn := store.Begin() // start a transaction
    txn.Set([]byte("key1"), []byte("value1"))
    txn.Set([]byte("key2"), []byte("value2"))
    err = txn.Commit() // commit transaction
    if err != nil {
        txn.Rollback()
    }

I want to write code like this.
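As a rough illustration of what sits behind an API shaped like this, the sketch below implements `Begin`, `Set`, `Commit`, and `Rollback` for a toy in-memory store. Everything here is hypothetical: the `Store` and `Txn` types, the `Get` helper, and the `ErrTxnFinished` error are invented for the example, and a single mutex stands in for the distributed commit protocol a real system would need. It only shows the buffering idea: writes stay private to the transaction until `Commit` applies them all at once, and `Rollback` discards them.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// ErrTxnFinished is returned when a finished transaction is reused (hypothetical).
var ErrTxnFinished = errors.New("transaction already finished")

// Store is a toy single-process key-value store.
type Store struct {
	mu   sync.Mutex
	data map[string][]byte
}

func NewStore() *Store { return &Store{data: make(map[string][]byte)} }

// Txn buffers writes locally until Commit.
type Txn struct {
	store  *Store
	writes map[string][]byte
	done   bool
}

// Begin starts a transaction with an empty write buffer.
func (s *Store) Begin() *Txn {
	return &Txn{store: s, writes: make(map[string][]byte)}
}

// Get reads a committed value directly from the store.
func (s *Store) Get(key []byte) ([]byte, bool) {
	s.mu.Lock()
	defer s.mu.Unlock()
	v, ok := s.data[string(key)]
	return v, ok
}

// Set records a write in the buffer; nothing is visible to others yet.
func (t *Txn) Set(key, value []byte) error {
	if t.done {
		return ErrTxnFinished
	}
	t.writes[string(key)] = append([]byte(nil), value...) // copy the value
	return nil
}

// Commit applies all buffered writes while holding the store lock,
// so readers see either none or all of them.
func (t *Txn) Commit() error {
	if t.done {
		return ErrTxnFinished
	}
	t.done = true
	t.store.mu.Lock()
	defer t.store.mu.Unlock()
	for k, v := range t.writes {
		t.store.data[k] = v
	}
	return nil
}

// Rollback drops the buffered writes. Calling it more than once is harmless.
func (t *Txn) Rollback() {
	t.done = true
	t.writes = nil
}

func main() {
	store := NewStore()
	txn := store.Begin()
	txn.Set([]byte("key1"), []byte("value1"))
	txn.Set([]byte("key2"), []byte("value2"))
	if err := txn.Commit(); err != nil {
		txn.Rollback()
		fmt.Println("commit failed:", err)
		return
	}
	v, _ := store.Get([]byte("key1"))
	fmt.Printf("key1=%s\n", v)
}
```

The calling code in `main` mirrors the slide; the only difference is that `err` is declared with `:=` so the snippet compiles on its own.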