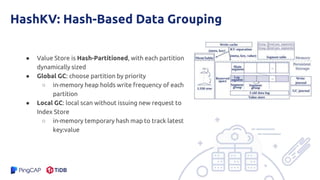

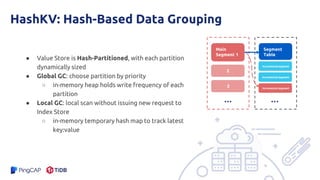

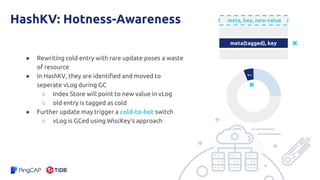

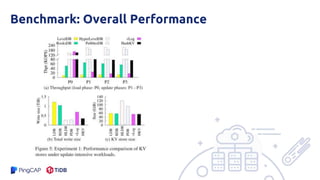

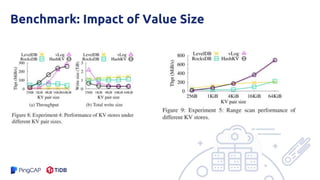

This document gives an overview of the HashKV system, which optimizes key-value storage using hash-based data grouping. It covers global and local garbage collection, hotness awareness for managing cold entries, and the benchmarks used for performance evaluation. It also highlights HashKV's tunability: settings for the key-value service and for garbage collection can be adjusted without compromising performance.
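To make the idea of hash-based data grouping more concrete, here is a minimal in-memory sketch. It is not the system's actual implementation. It assumes that each key is hashed to one fixed segment, that updates are appended to that segment's log, and that garbage collection can then work on a single segment at a time. The names `segment_of`, `SegmentedStore`, `collect`, and `NUM_SEGMENTS` are hypothetical and chosen only for illustration.

```python
import hashlib

NUM_SEGMENTS = 4  # hypothetical segment count, chosen only for this sketch


def segment_of(key: str, num_segments: int = NUM_SEGMENTS) -> int:
    """Deterministically map a key to a segment index via a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_segments


class SegmentedStore:
    """Toy append-only store that groups entries by the hash of their key."""

    def __init__(self, num_segments: int = NUM_SEGMENTS):
        # One append-only log per segment.
        self.segments = [[] for _ in range(num_segments)]

    def put(self, key: str, value: str) -> None:
        # Every version of a key lands in the same segment.
        self.segments[segment_of(key, len(self.segments))].append((key, value))

    def get(self, key: str):
        # Scan the key's segment from newest to oldest entry.
        log = self.segments[segment_of(key, len(self.segments))]
        for k, v in reversed(log):
            if k == key:
                return v
        return None

    def collect(self, seg_id: int) -> int:
        """Garbage-collect one segment: keep only the latest version of each
        key and return the number of stale entries reclaimed."""
        log = self.segments[seg_id]
        latest = {}
        for k, v in log:
            latest[k] = v  # later entries overwrite earlier ones
        reclaimed = len(log) - len(latest)
        self.segments[seg_id] = list(latest.items())
        return reclaimed
```

Because all versions of a key share a segment, collecting that segment is enough to discard the key's stale versions without scanning the rest of the store. For example, after `put("a", "1")` and `put("a", "2")`, calling `collect(segment_of("a"))` reclaims the stale entry while `get("a")` still returns the latest value.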