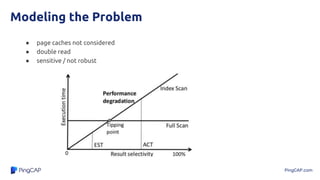

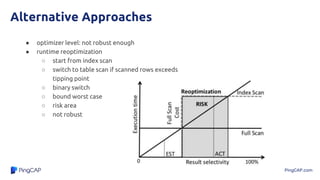

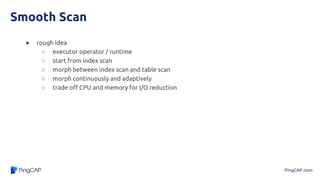

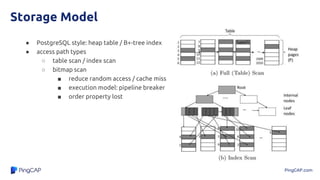

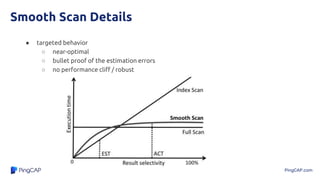

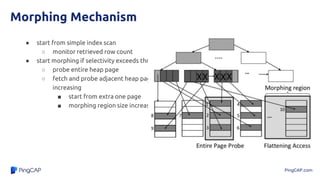

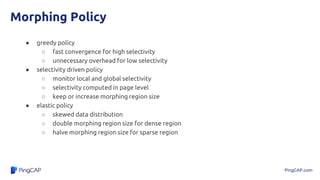

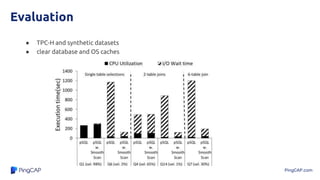

The document presents a robust access path selection method that does not rely on cardinality estimation. Classical approaches pick an access path before execution, using statistics that may be outdated and cost models that may be naive, so a wrong estimate can commit the query to a poor choice. The proposed scan mechanism instead adapts at run time, which lets it select an effective access path without extensive statistics. The document details several policies for morphing between scan methods and describes how the mechanism is implemented in PostgreSQL to improve performance across diverse data distributions.
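
To make the idea of a scan that adapts at run time more concrete, here is a minimal toy sketch in Python. It is not the document's algorithm or its PostgreSQL implementation. The function name `adaptive_scan`, the in-memory page layout, and the `morph_after` threshold are all hypothetical, chosen only for illustration. The scan starts out like an index scan, fetching one tuple per index entry. Once it has produced more results than the threshold allows, it morphs into a mode that sweeps each newly touched page in full, so every page is read at most once from then on.

```python
from bisect import bisect_left, bisect_right


def adaptive_scan(pages, index, lo, hi, morph_after=4):
    """Return (rows, stats) for all rows with lo <= key <= hi.

    pages: list of pages, each a list of (key, payload) rows.
    index: list of (key, page_no, slot) entries sorted by key.
    morph_after: hypothetical threshold; after this many results the
                 scan stops fetching single tuples and sweeps whole pages.
    """
    keys = [k for k, _, _ in index]
    start, end = bisect_left(keys, lo), bisect_right(keys, hi)

    results = []
    emitted = set()      # (page_no, slot) pairs already returned
    swept_pages = set()  # pages fully processed after morphing
    stats = {"tuple_fetches": 0, "page_sweeps": 0}
    morphed = False

    for _key, page_no, slot in index[start:end]:
        if page_no in swept_pages:
            continue  # every qualifying row on this page was already returned
        if not morphed:
            # Index-scan mode: fetch exactly the tuple the index points to.
            results.append(pages[page_no][slot])
            emitted.add((page_no, slot))
            stats["tuple_fetches"] += 1
            # No estimate is consulted; the decision uses the observed count.
            if len(results) >= morph_after:
                morphed = True
        else:
            # Morphed mode: read the whole page and filter it once.
            for s, row in enumerate(pages[page_no]):
                if lo <= row[0] <= hi and (page_no, s) not in emitted:
                    results.append(row)
                    emitted.add((page_no, s))
            swept_pages.add(page_no)
            stats["page_sweeps"] += 1

    return results, stats
```

With a selective predicate the sketch never leaves index-scan mode. With an unselective one it switches to page sweeps after `morph_after` results, without consulting any statistics beforehand. A real system would also have to weigh I/O cost, result ordering, and the specific morphing policy in use, none of which is modelled here.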