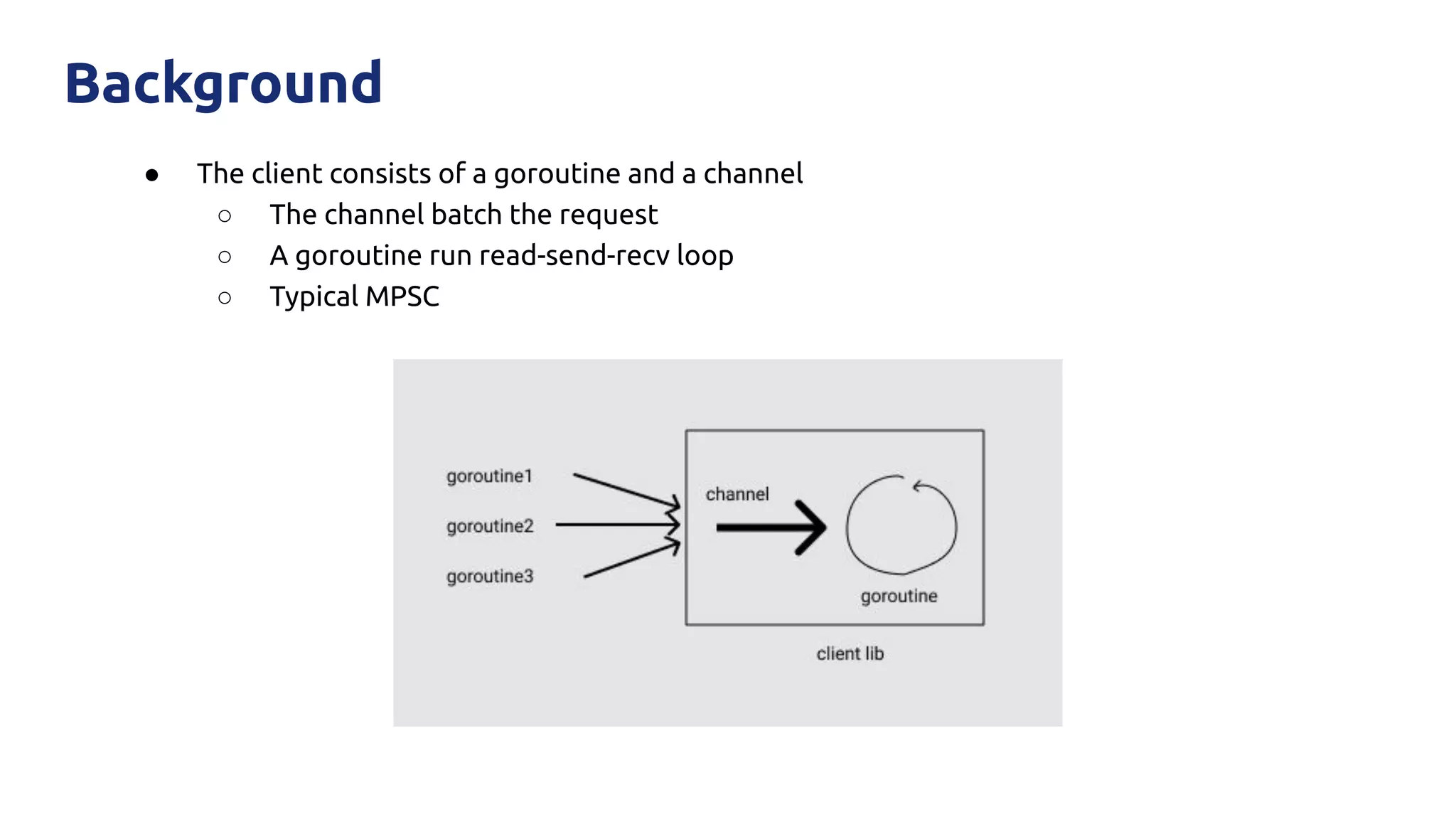

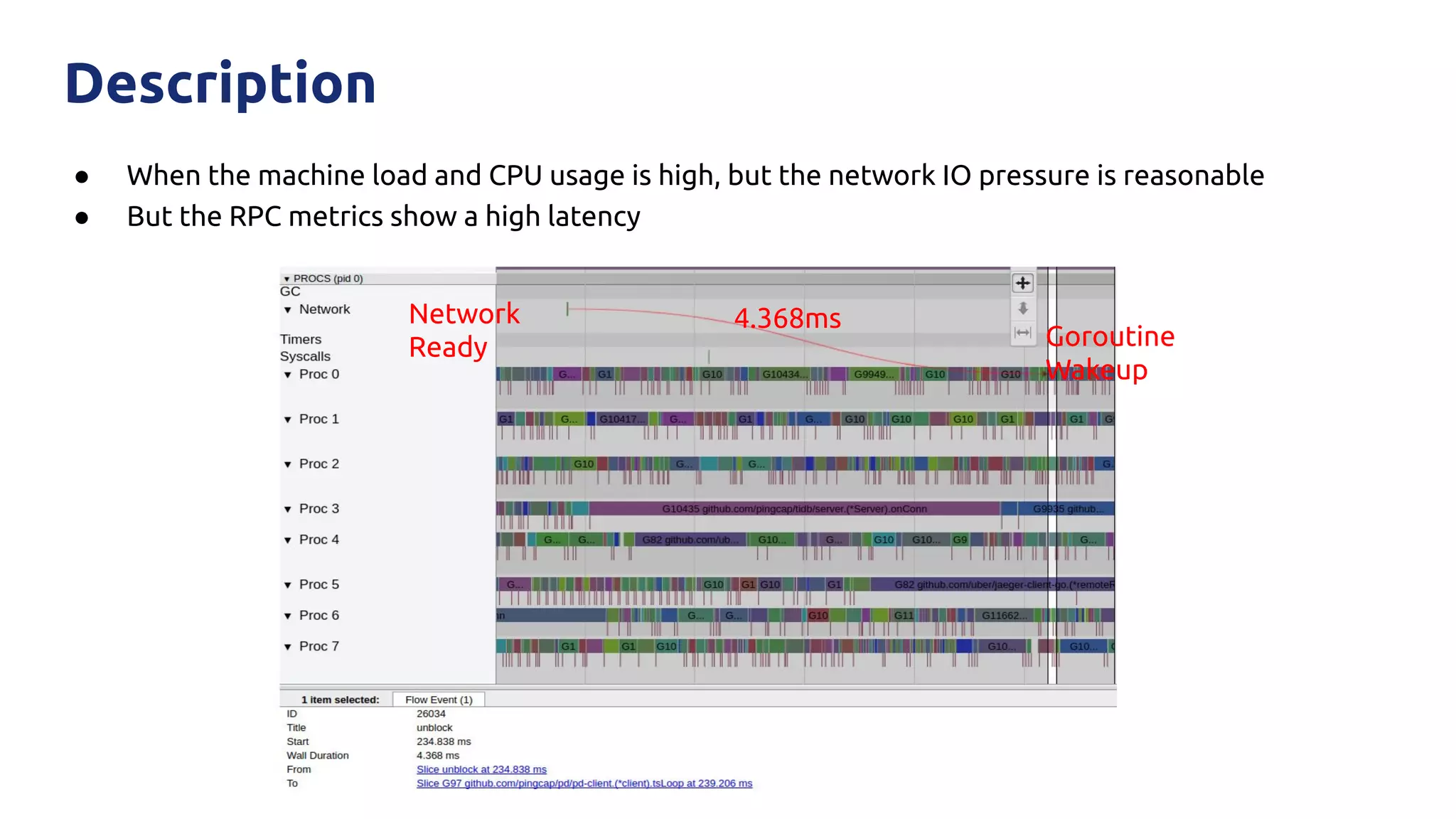

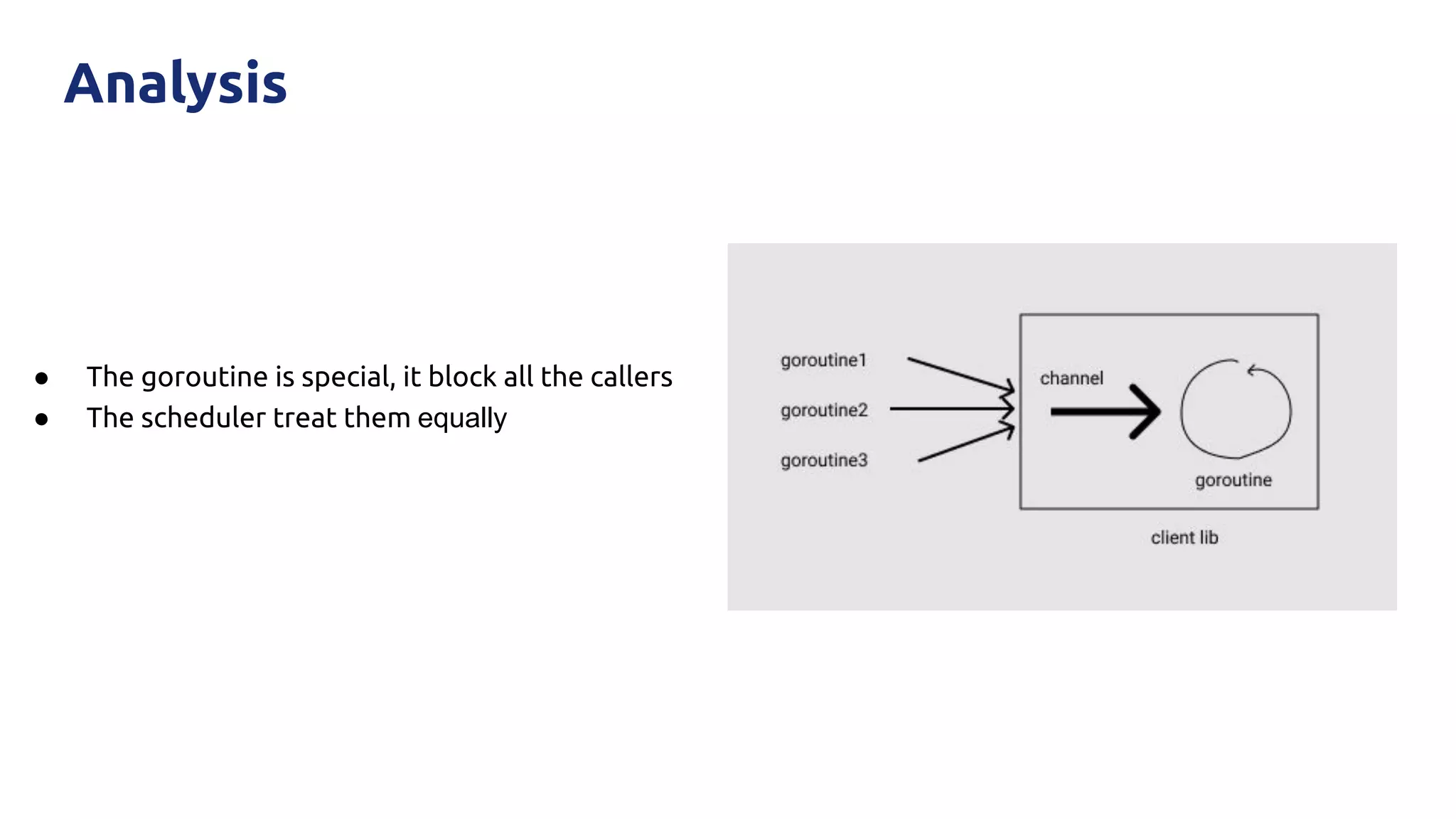

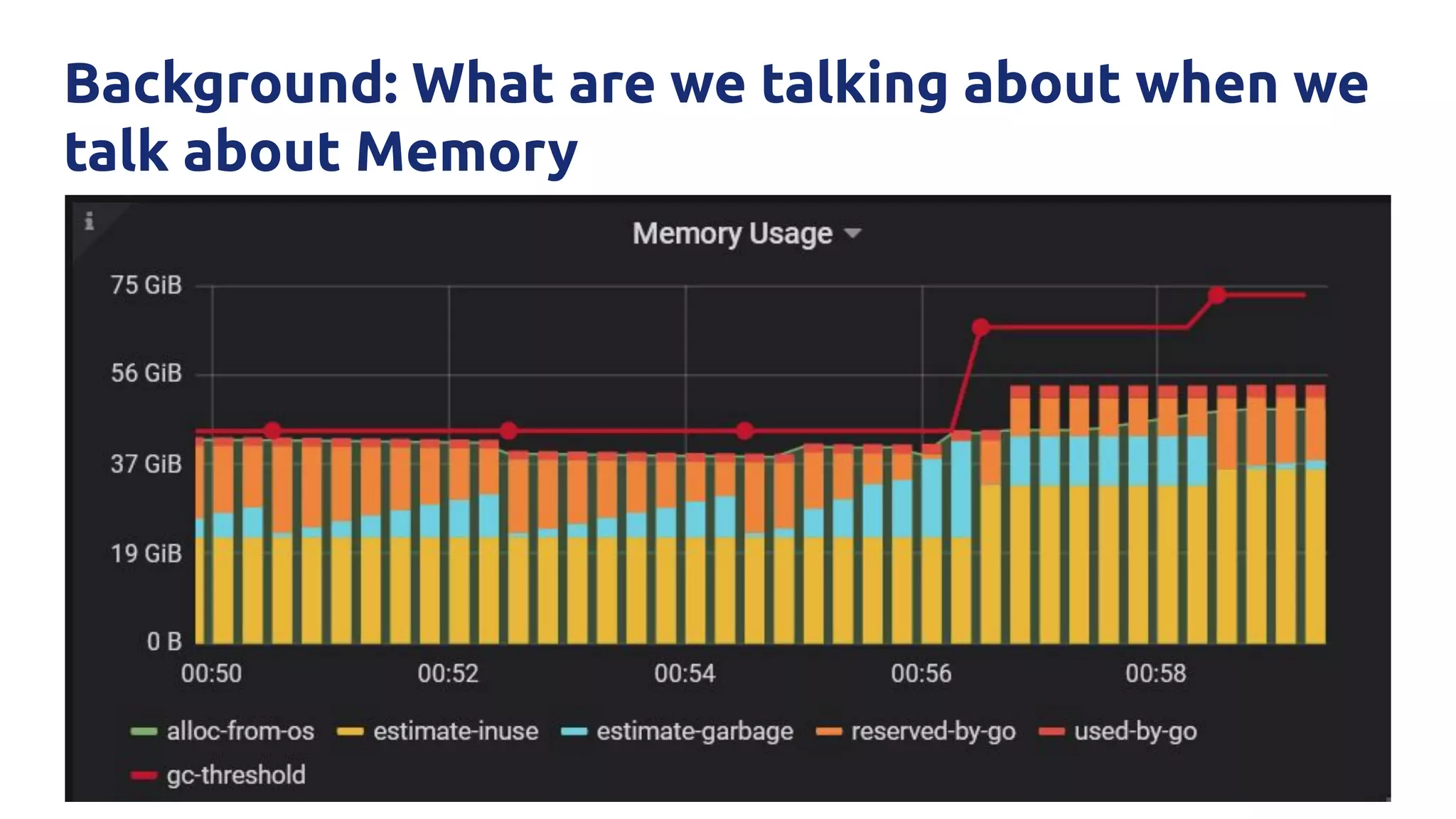

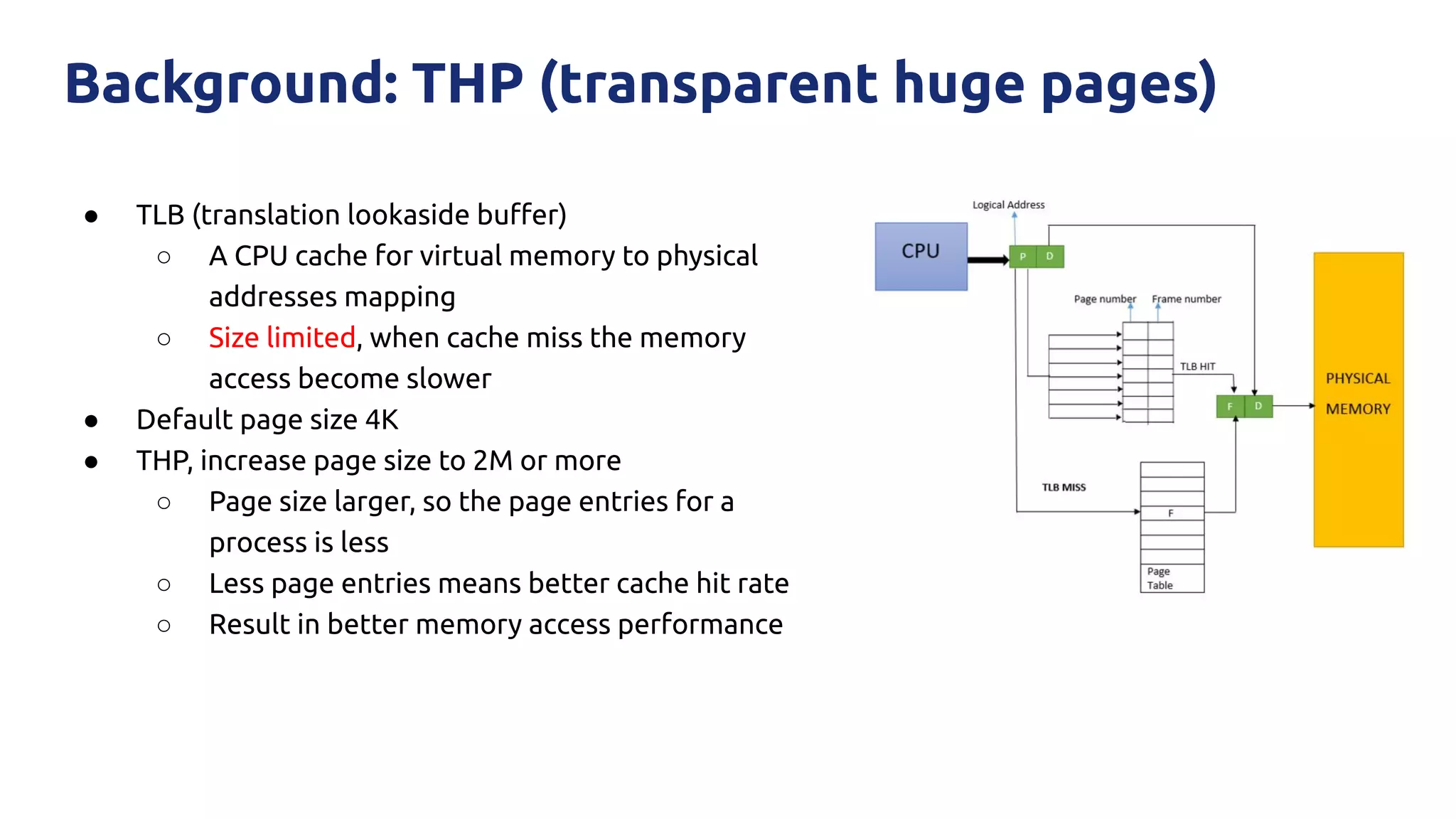

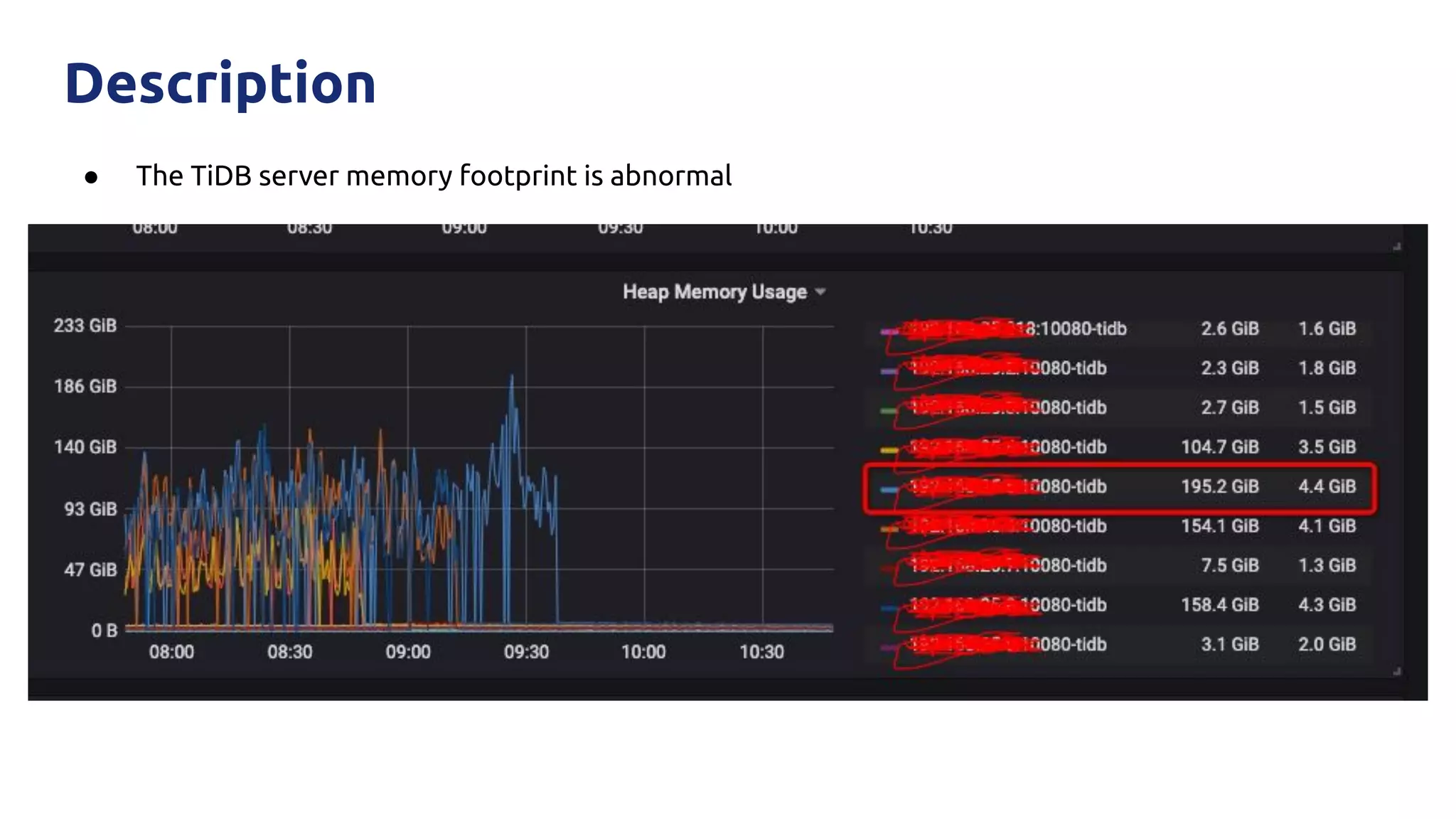

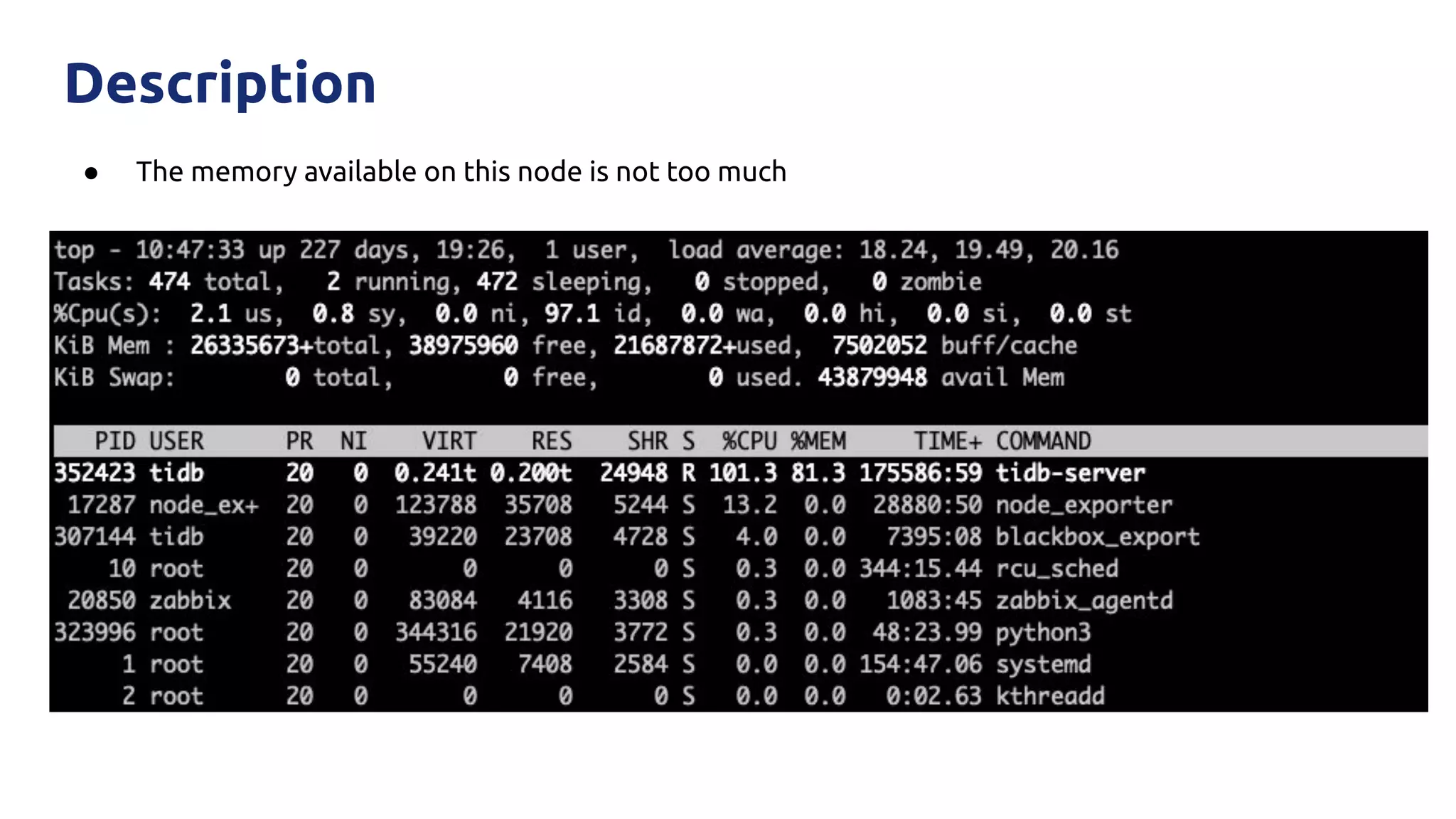

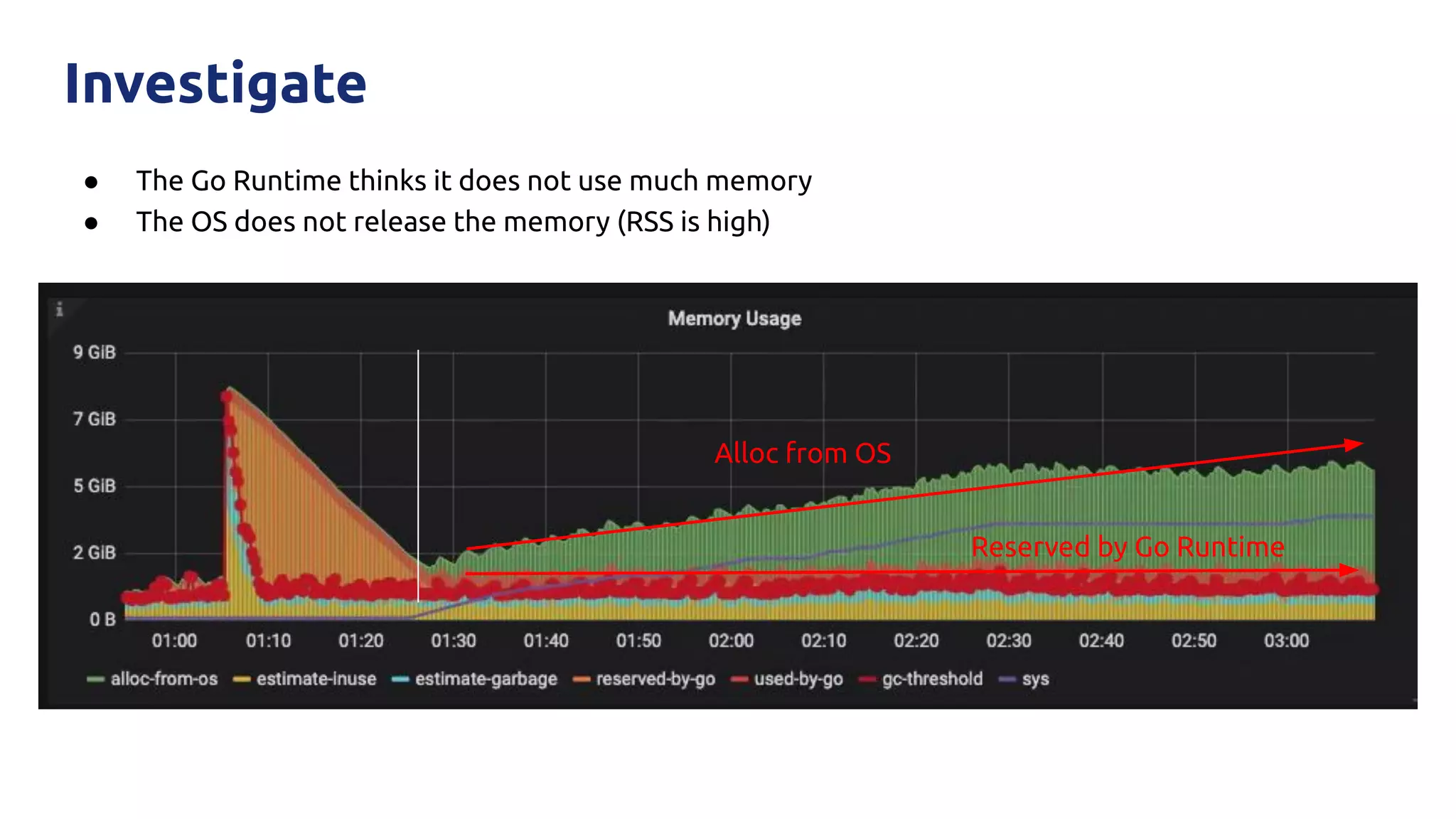

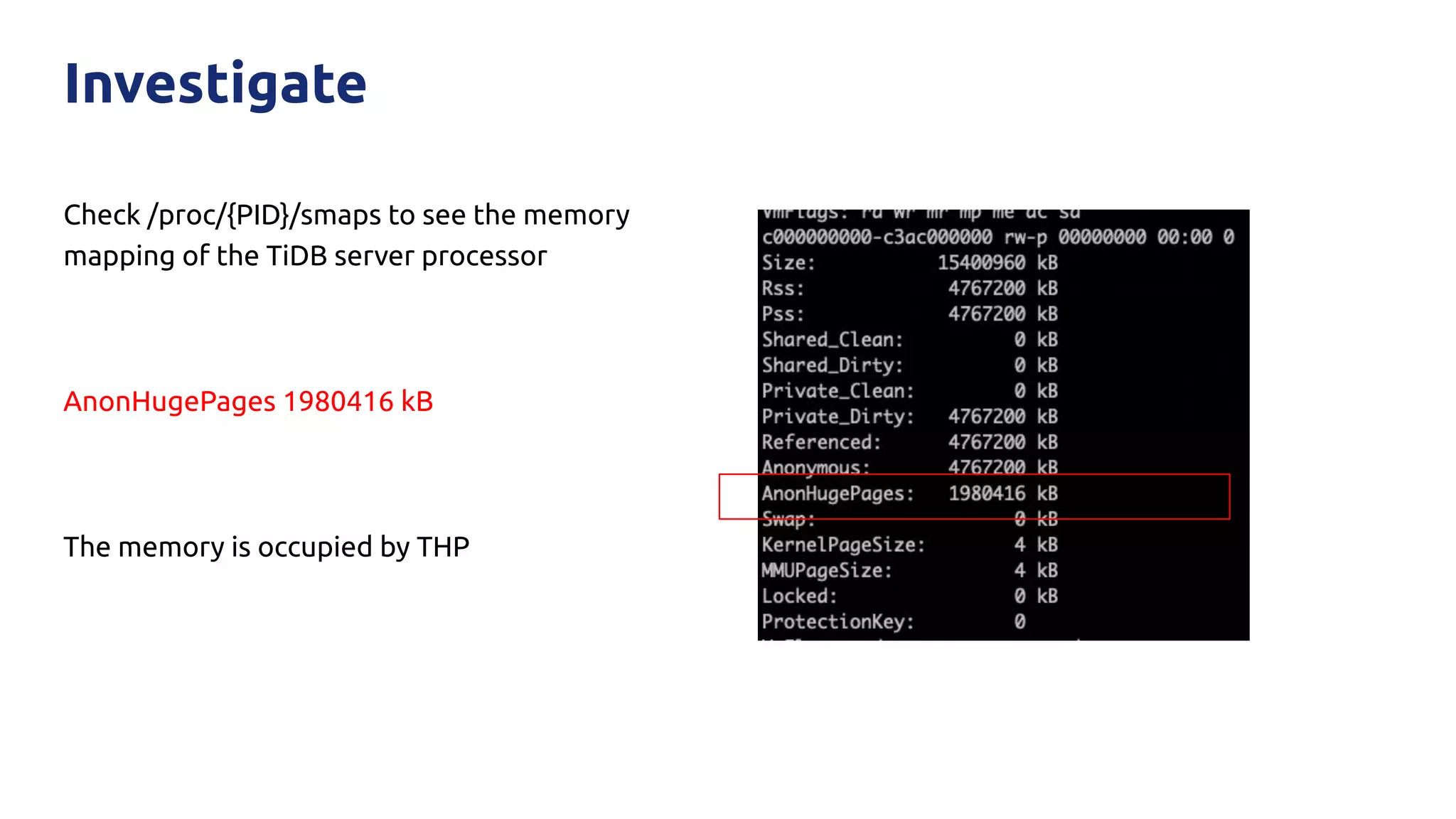

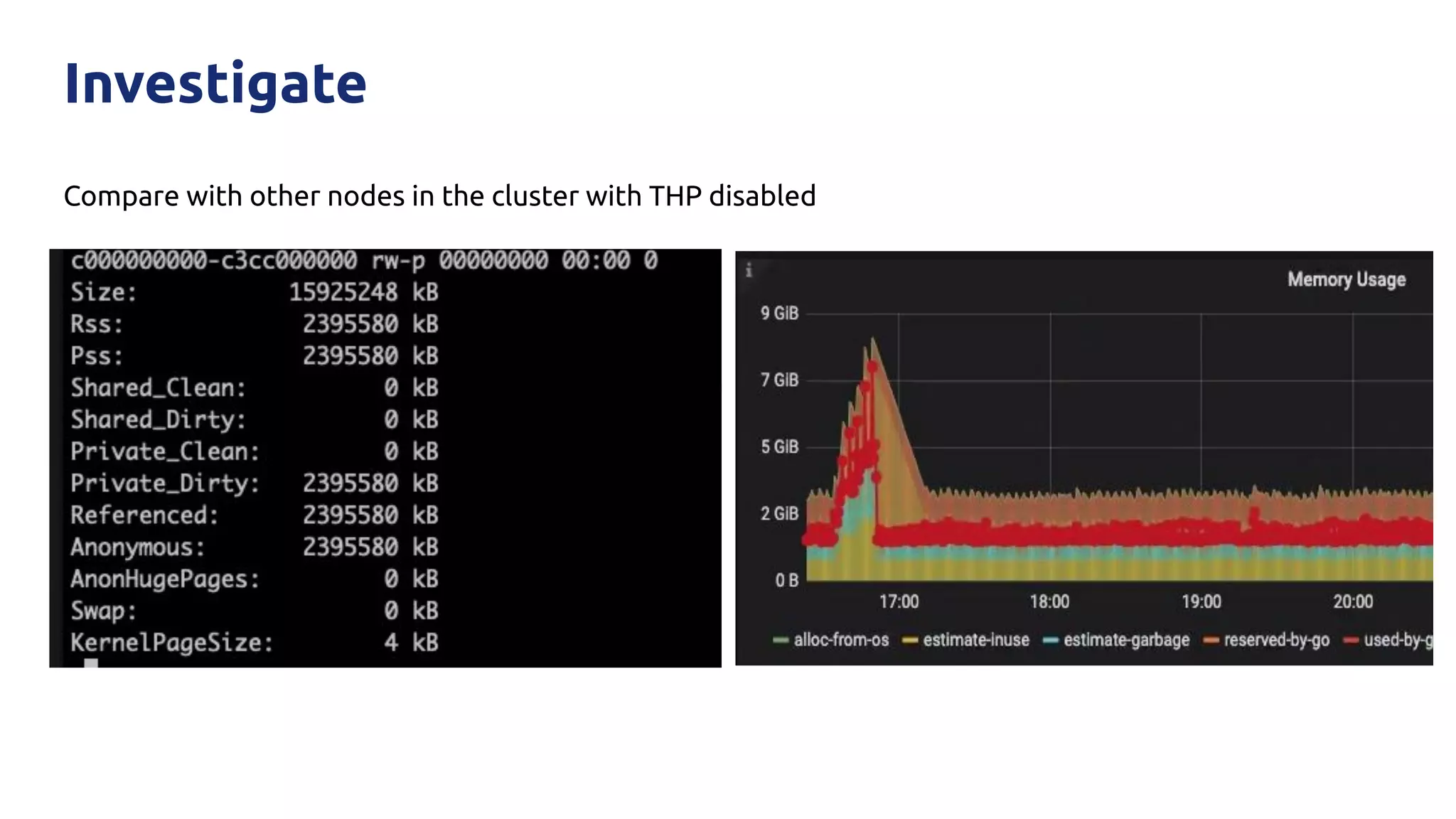

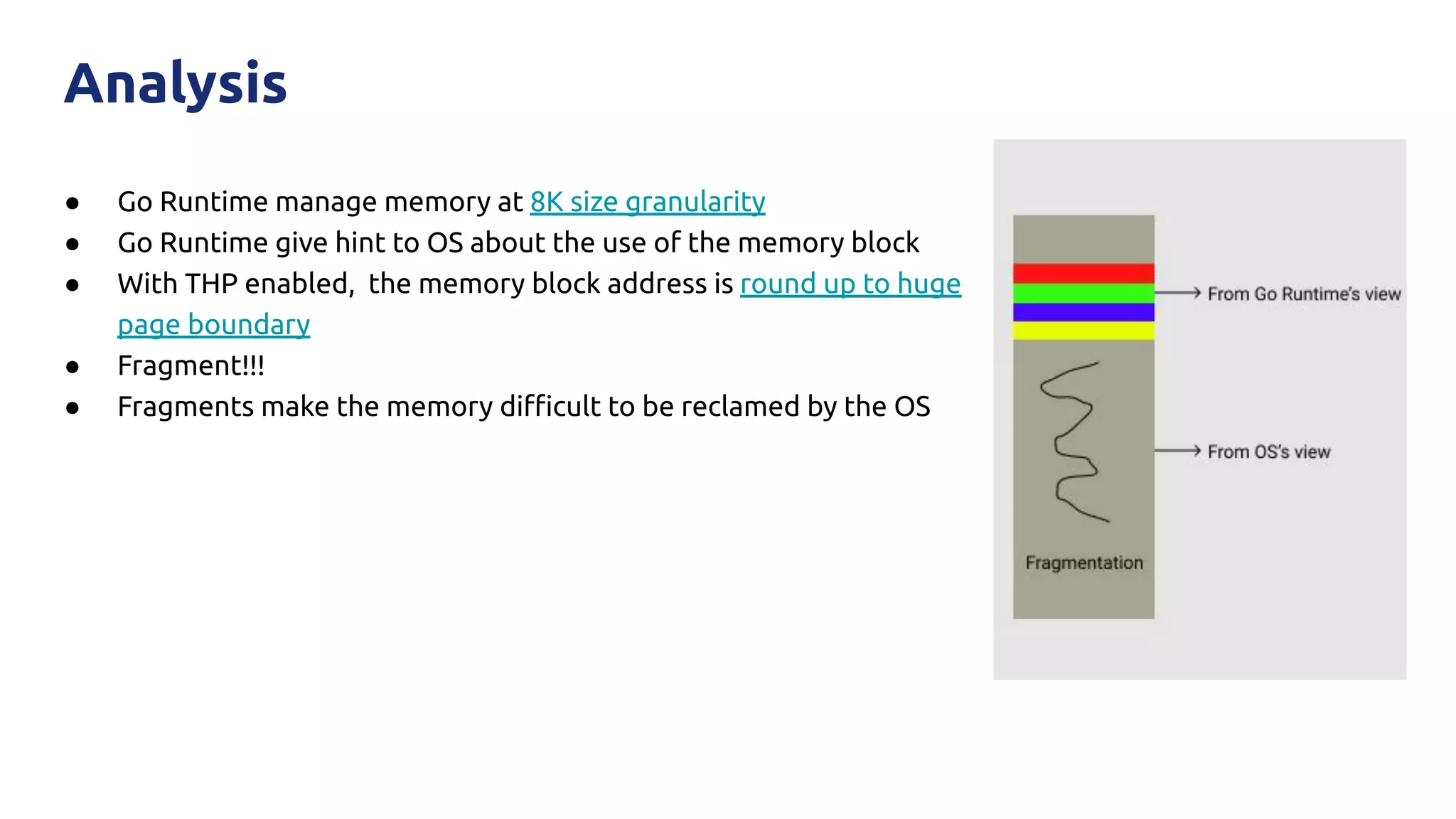

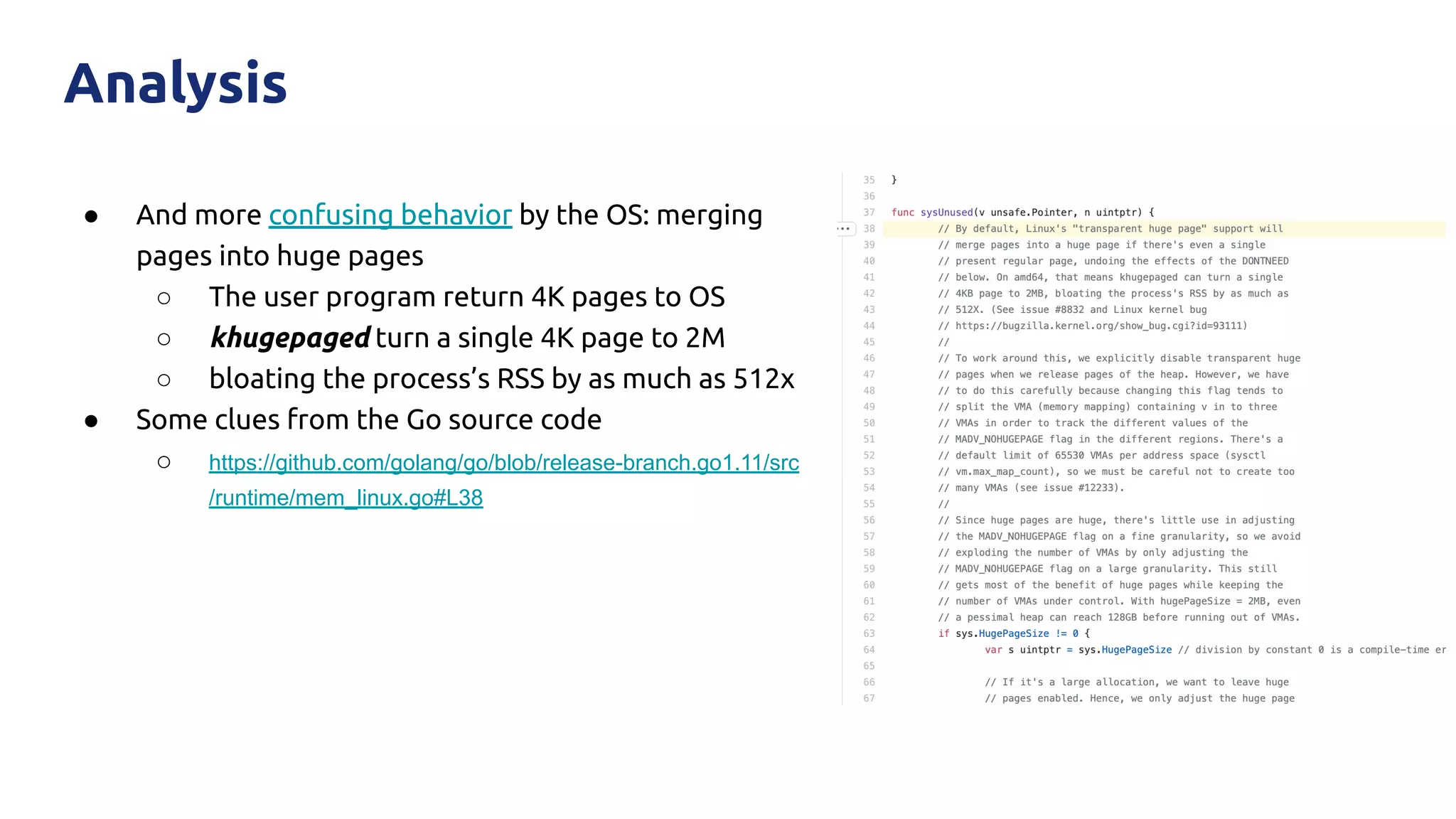

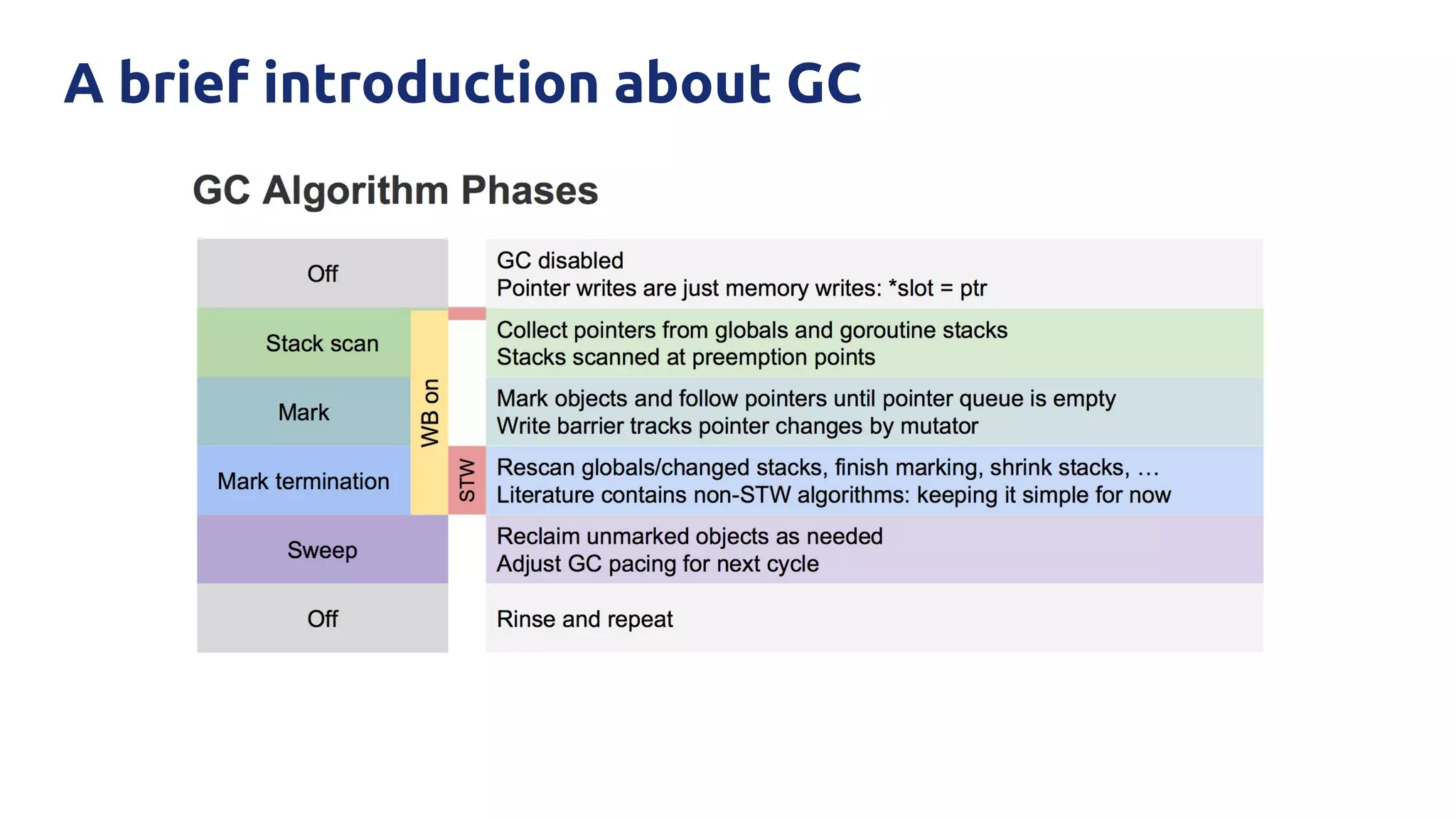

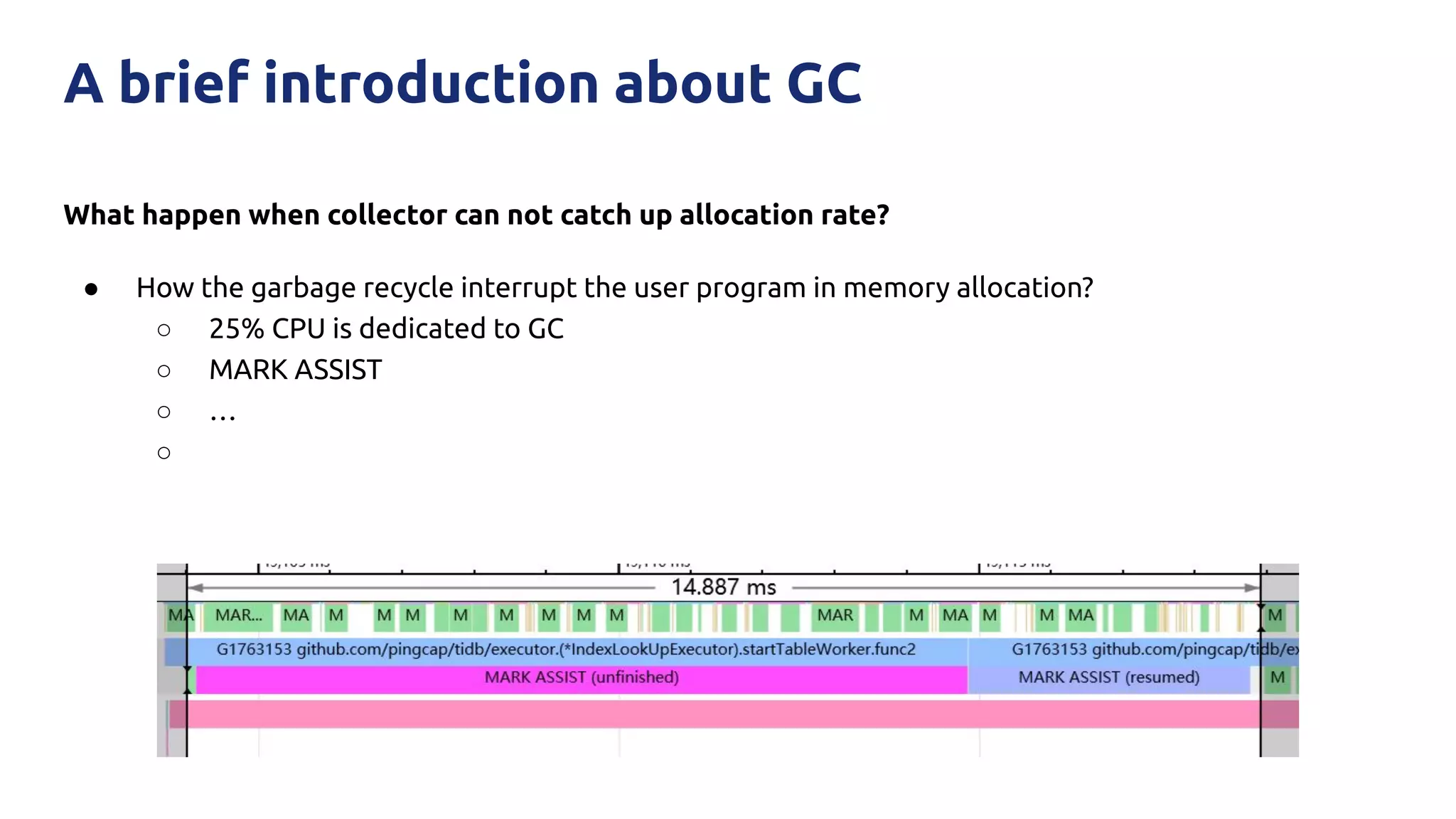

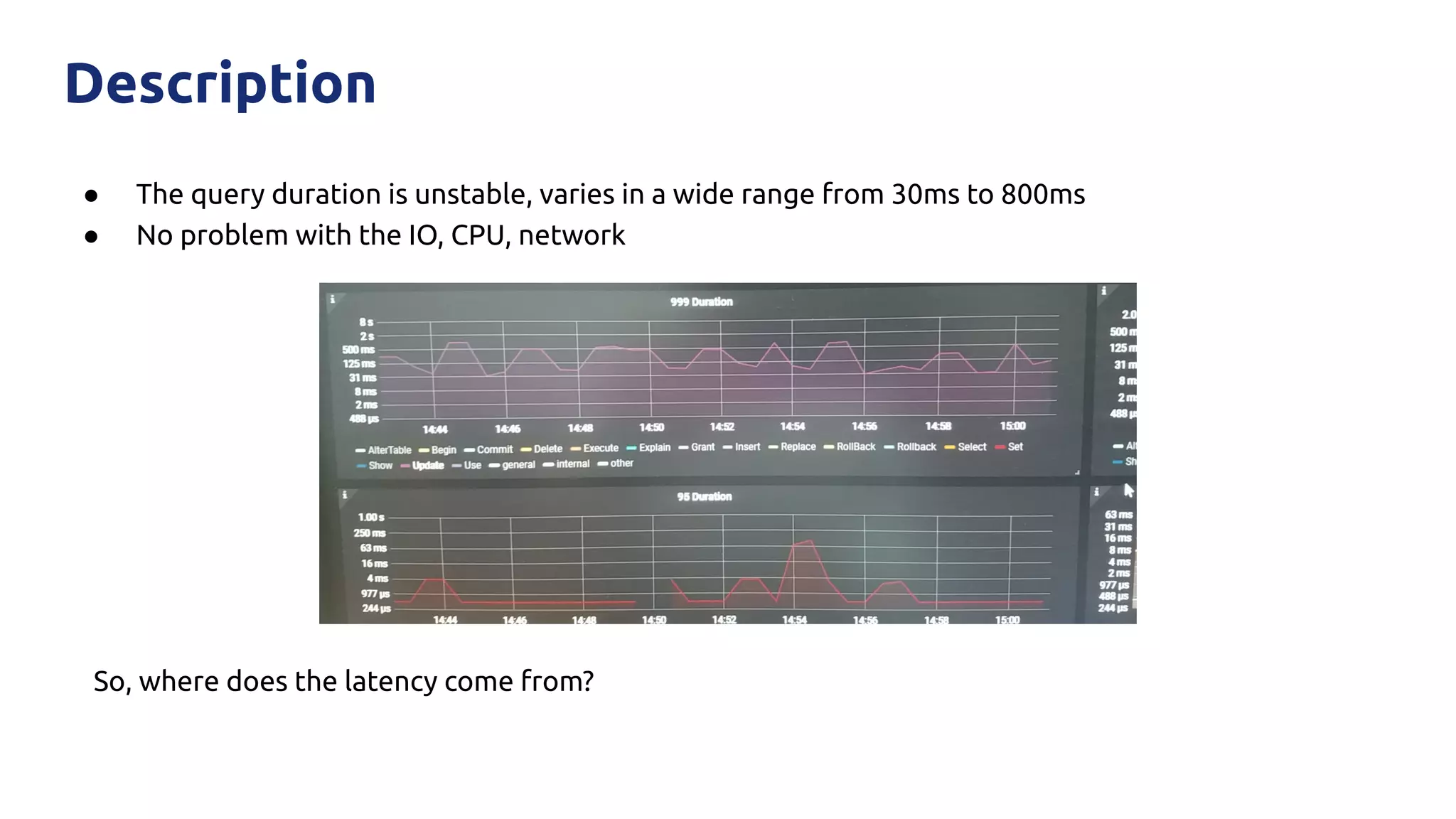

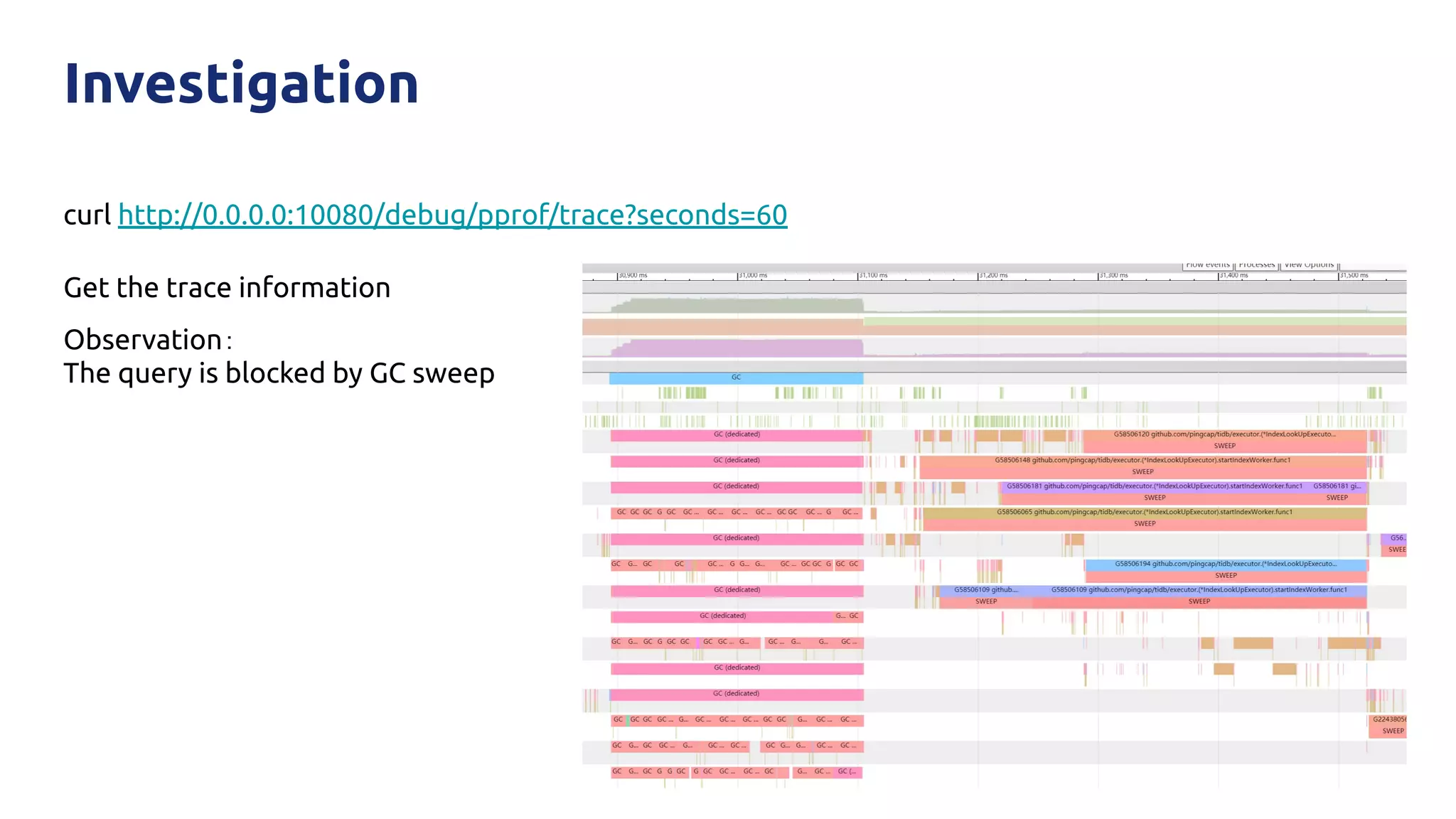

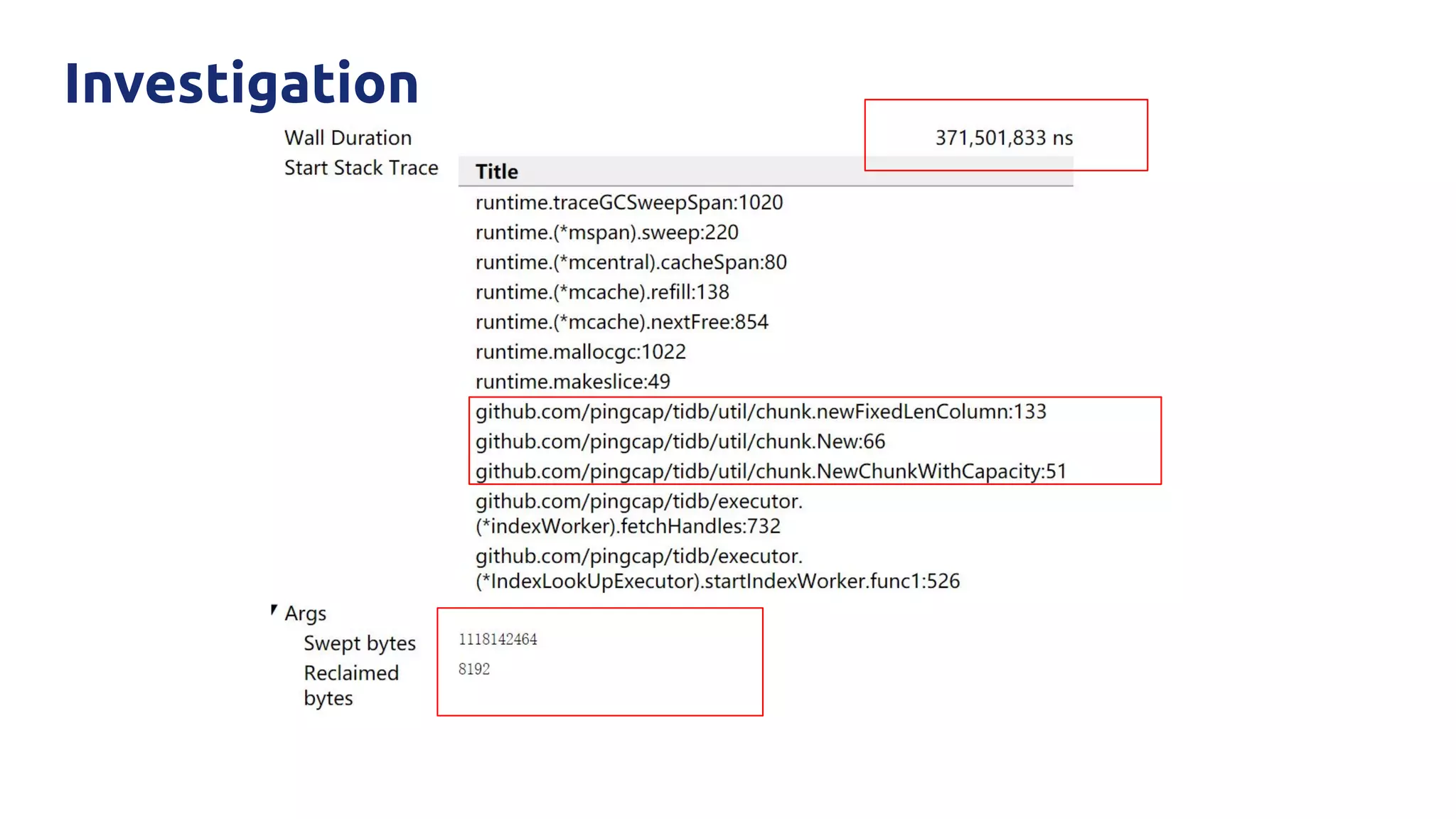

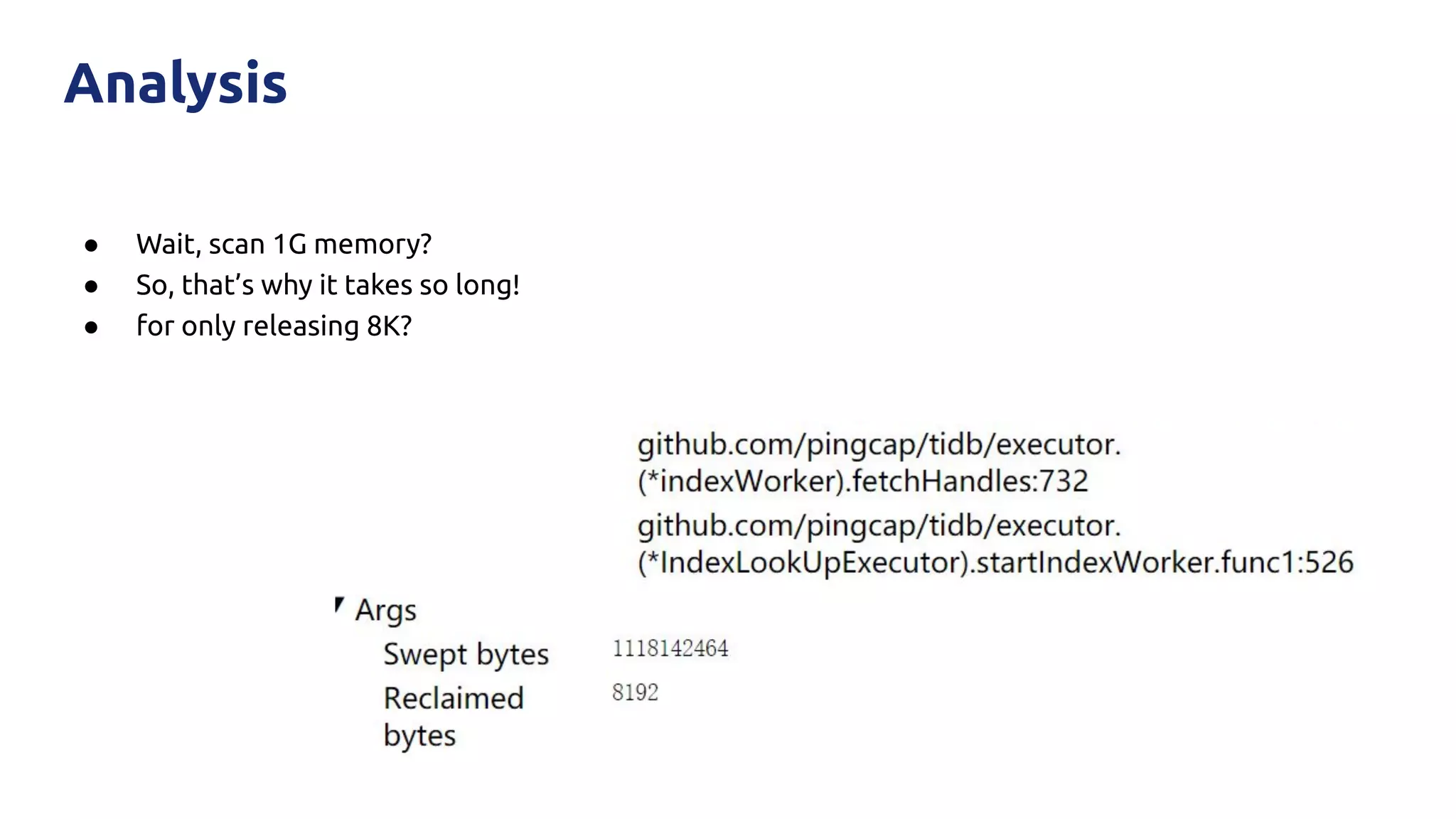

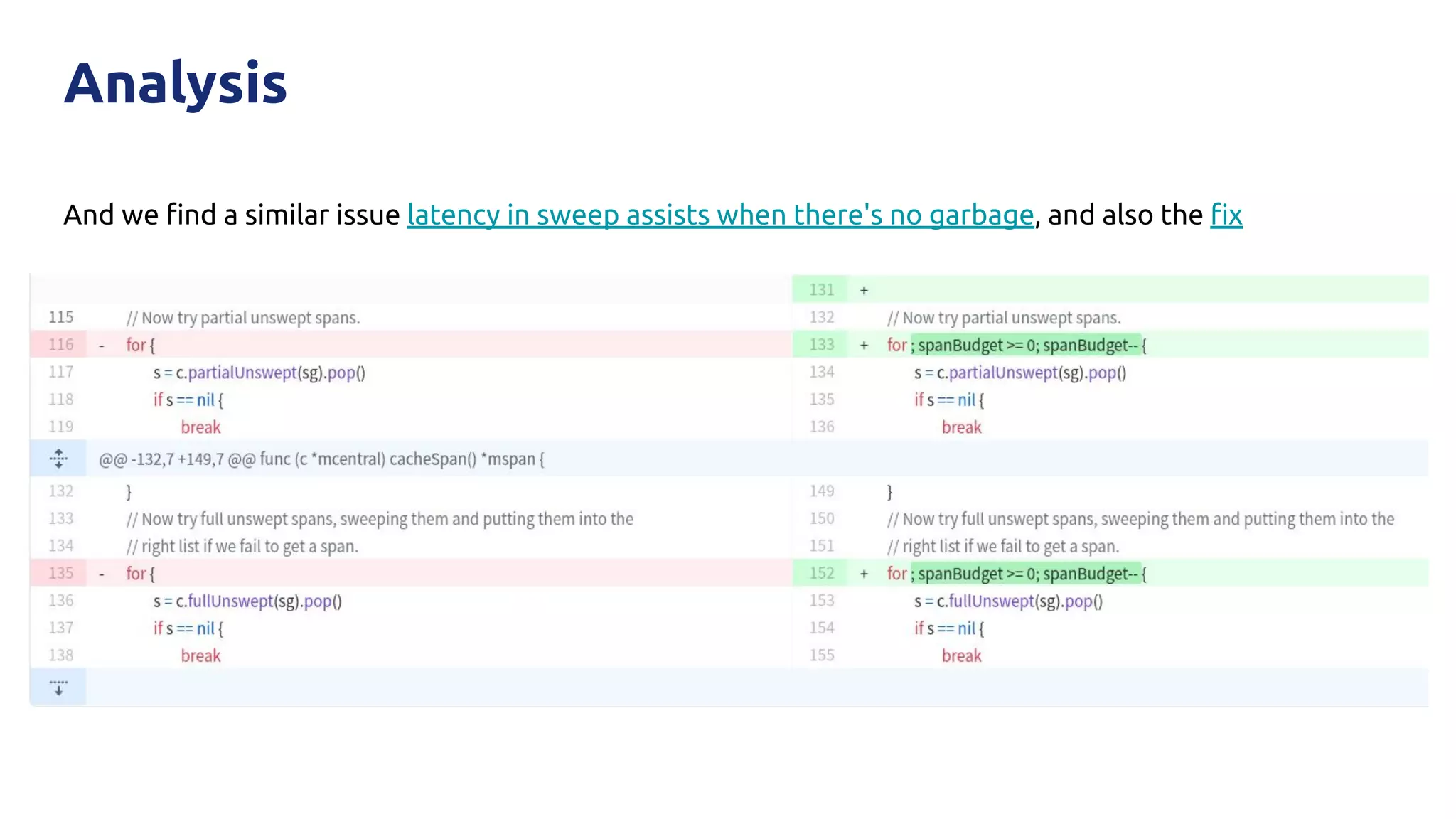

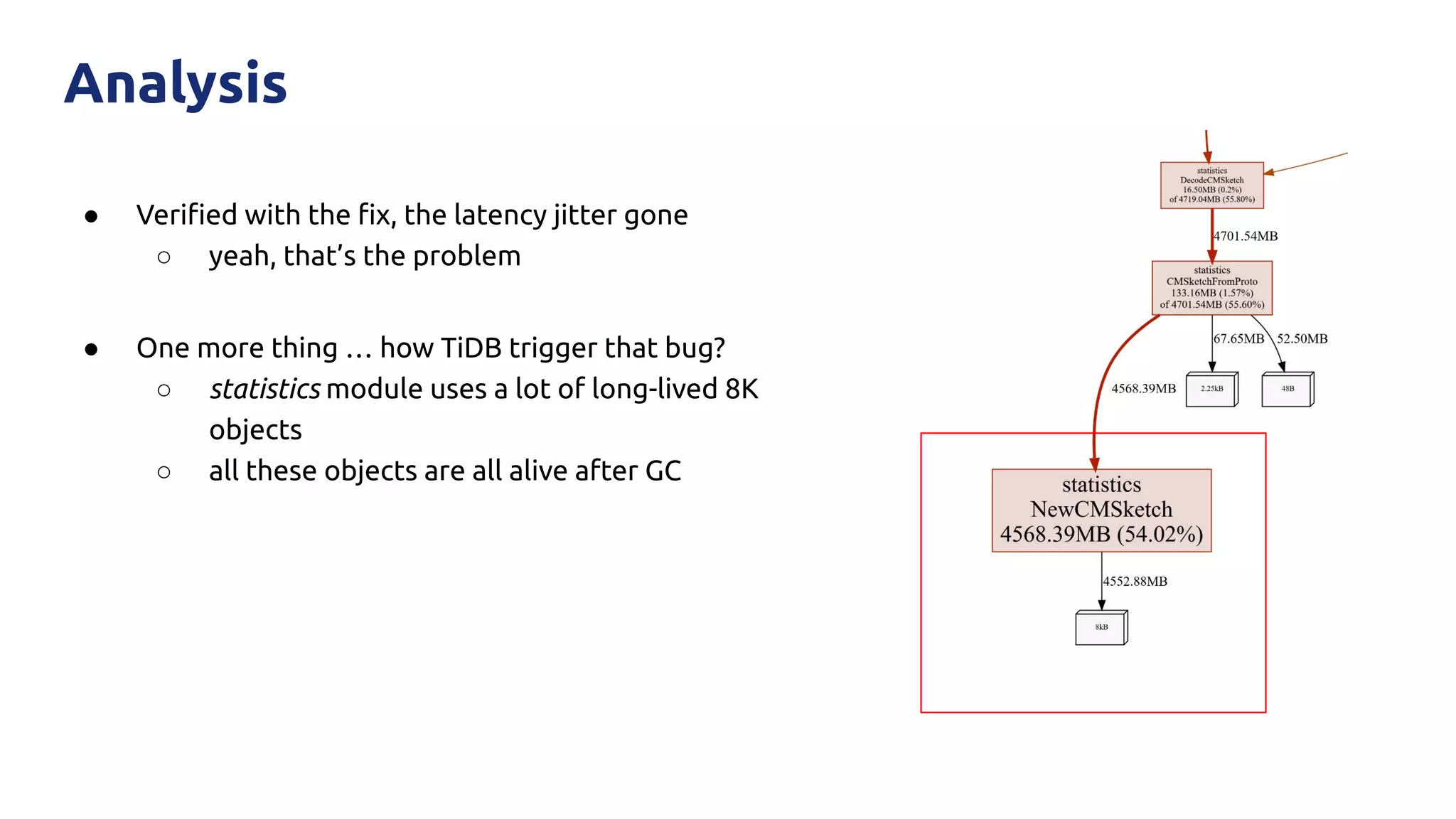

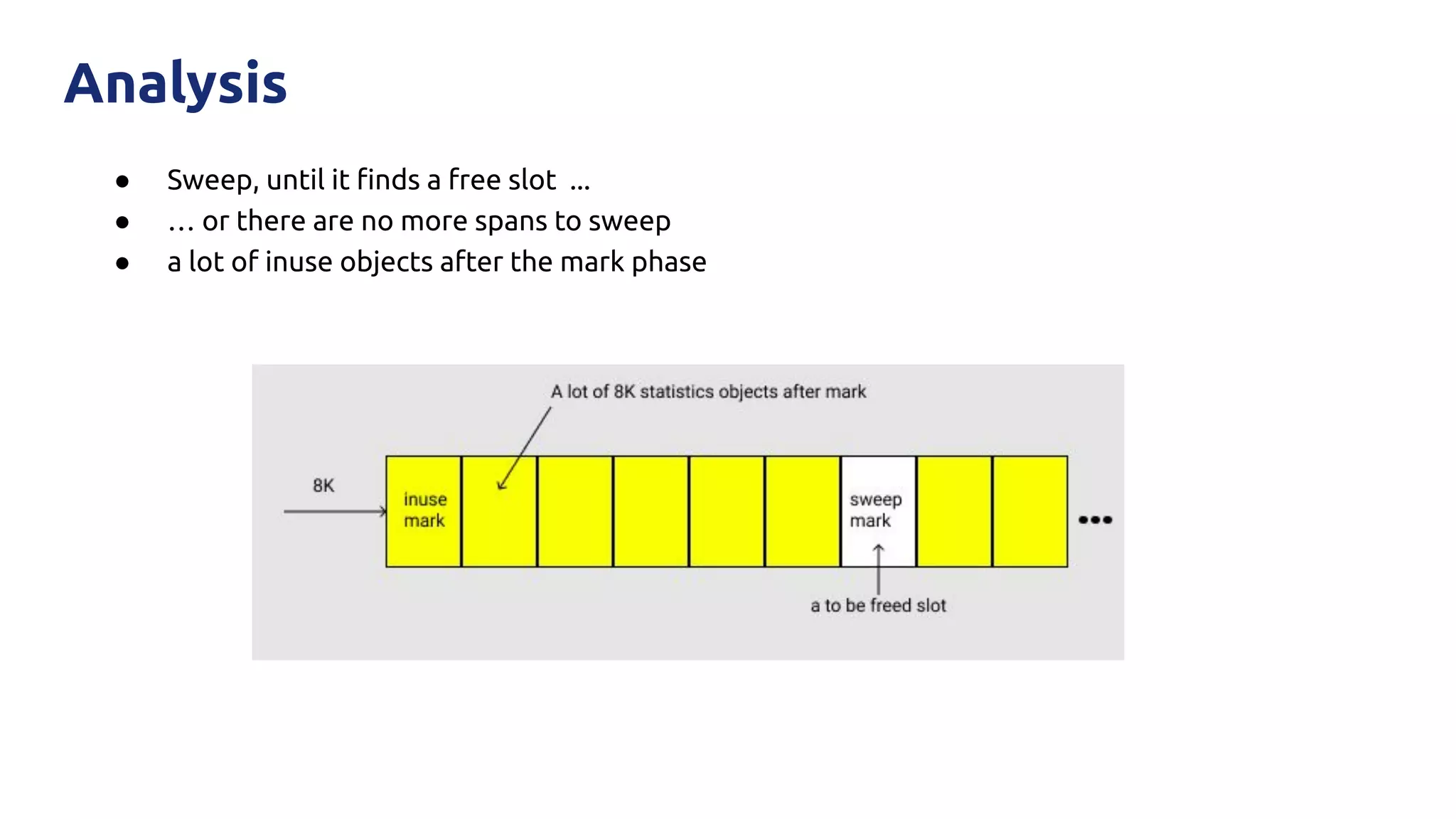

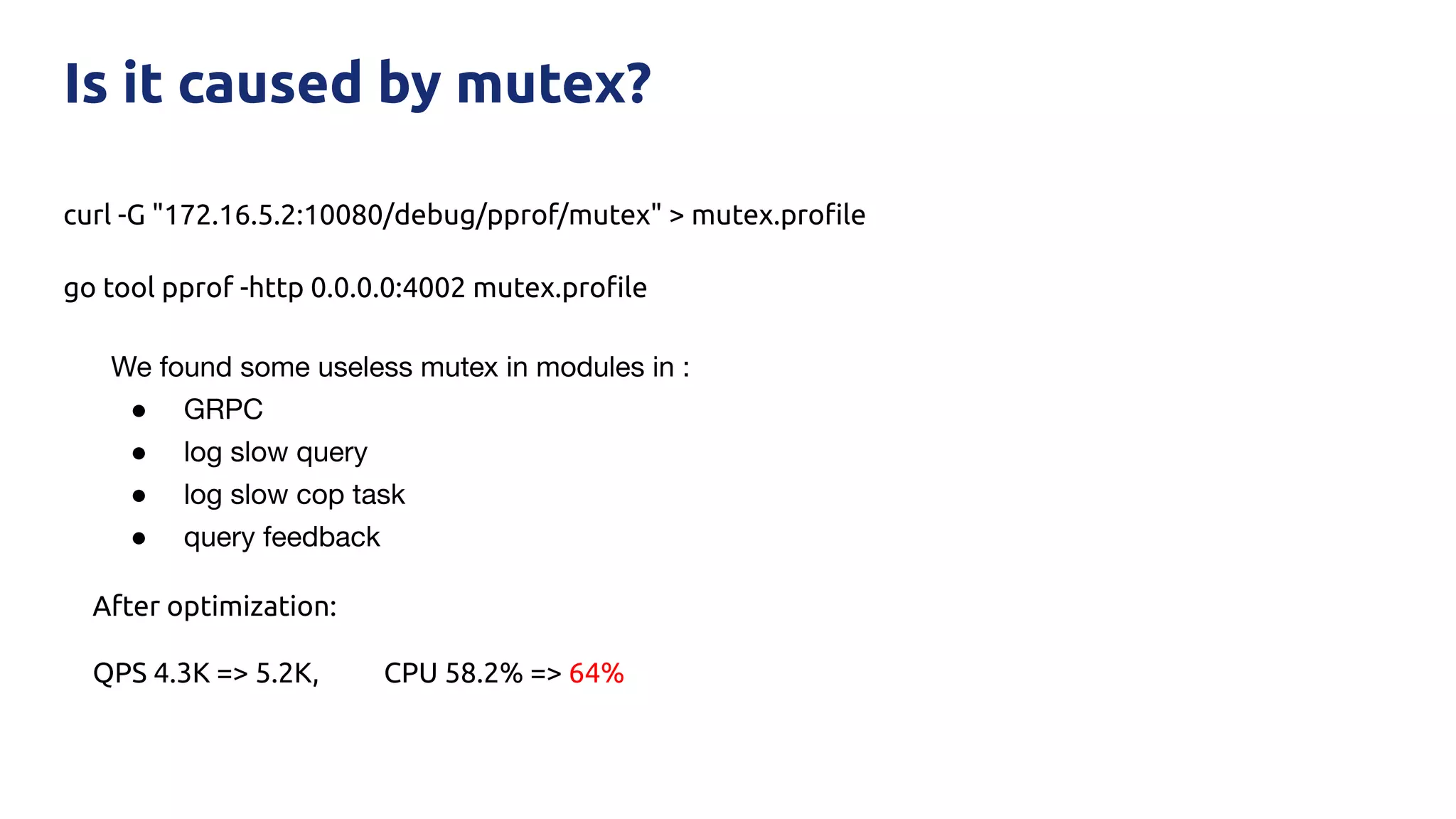

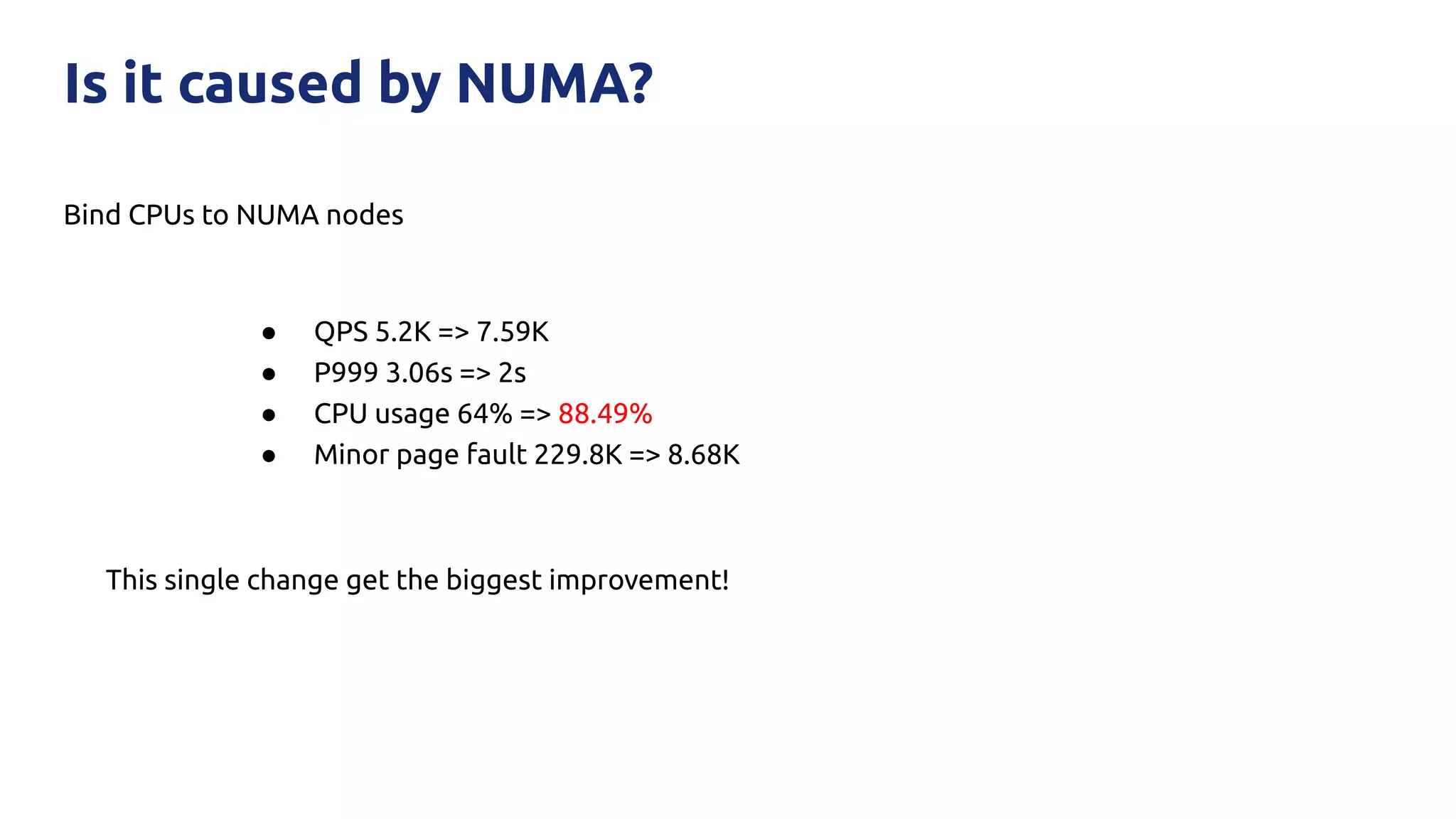

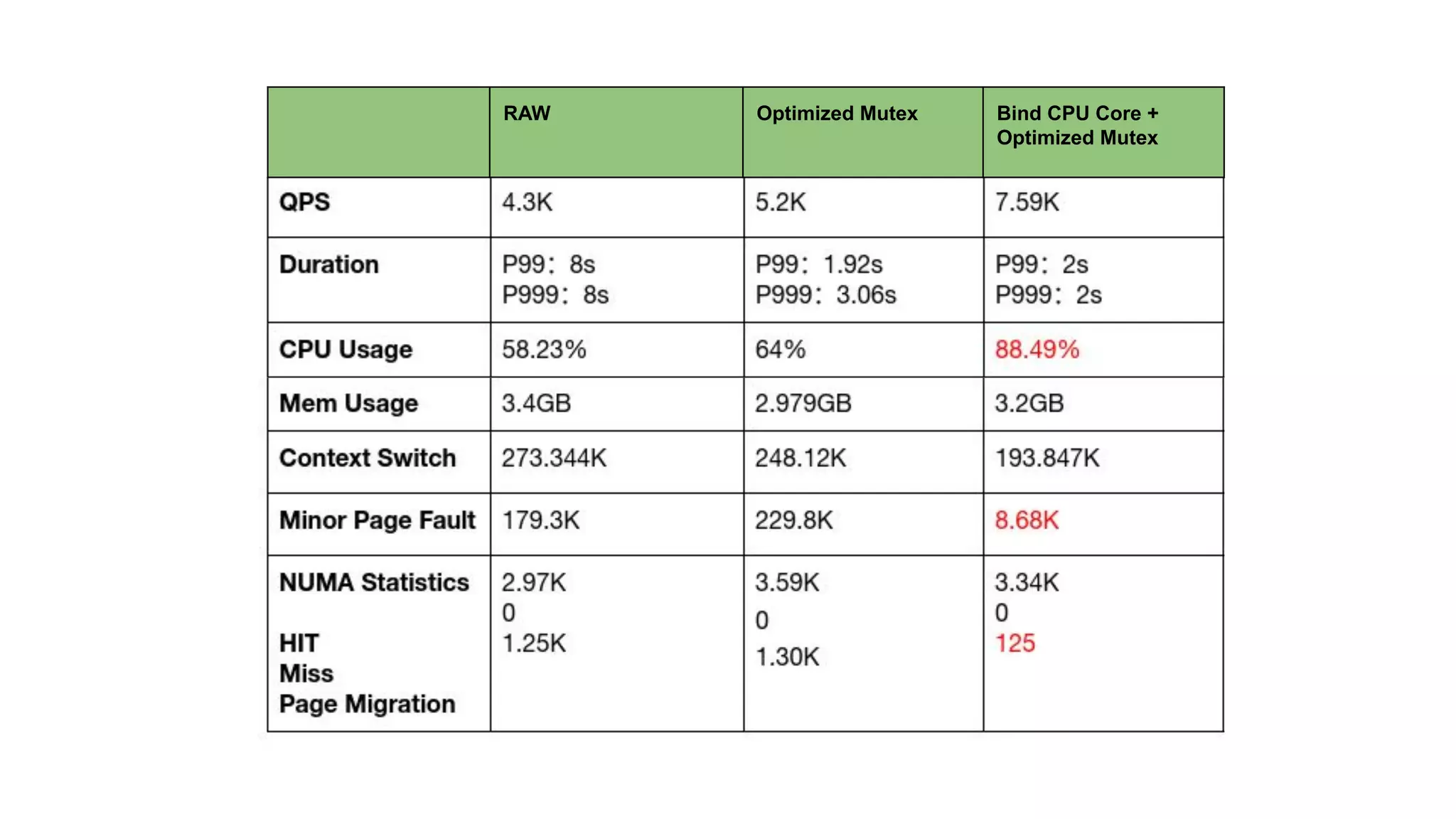

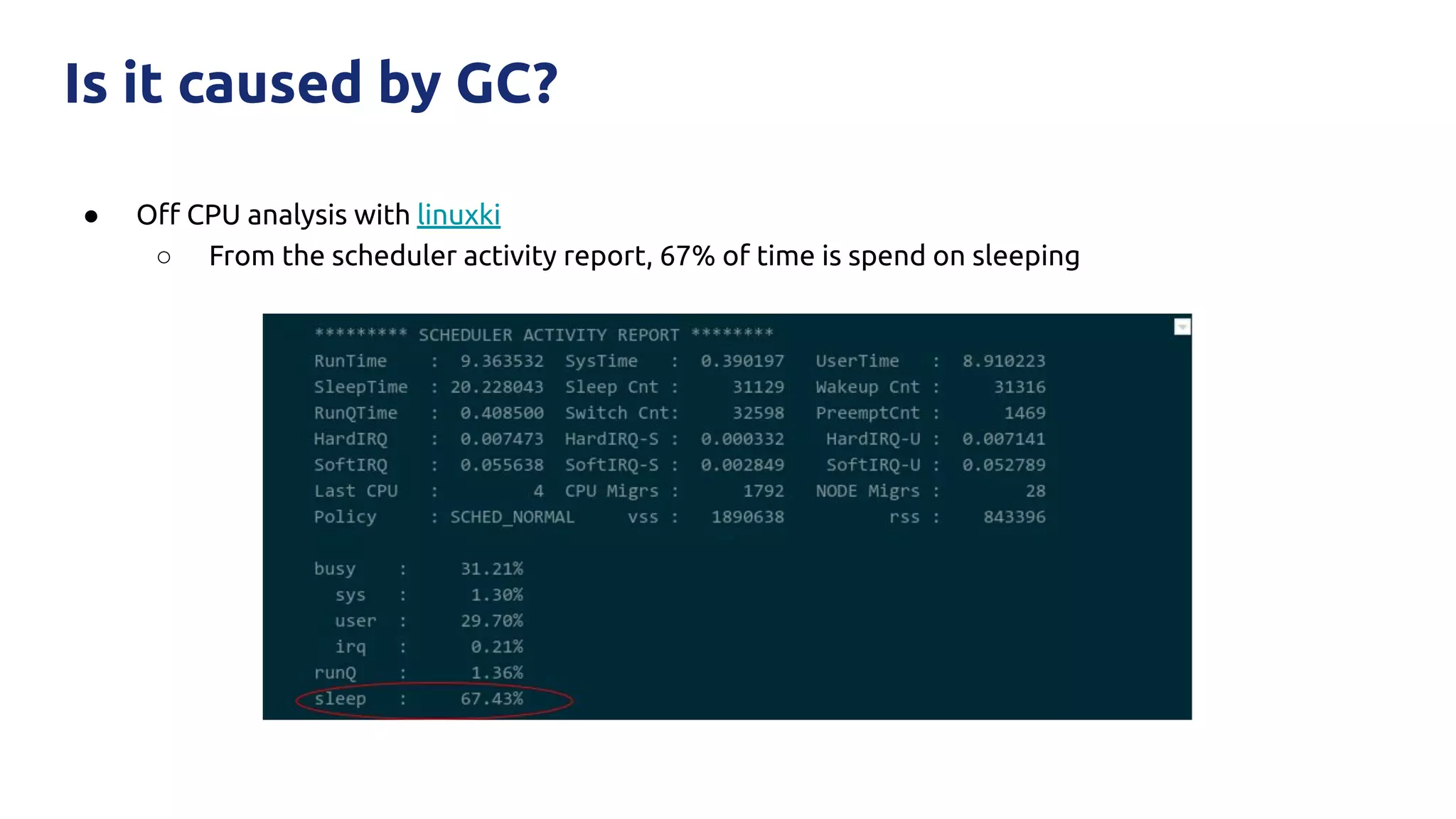

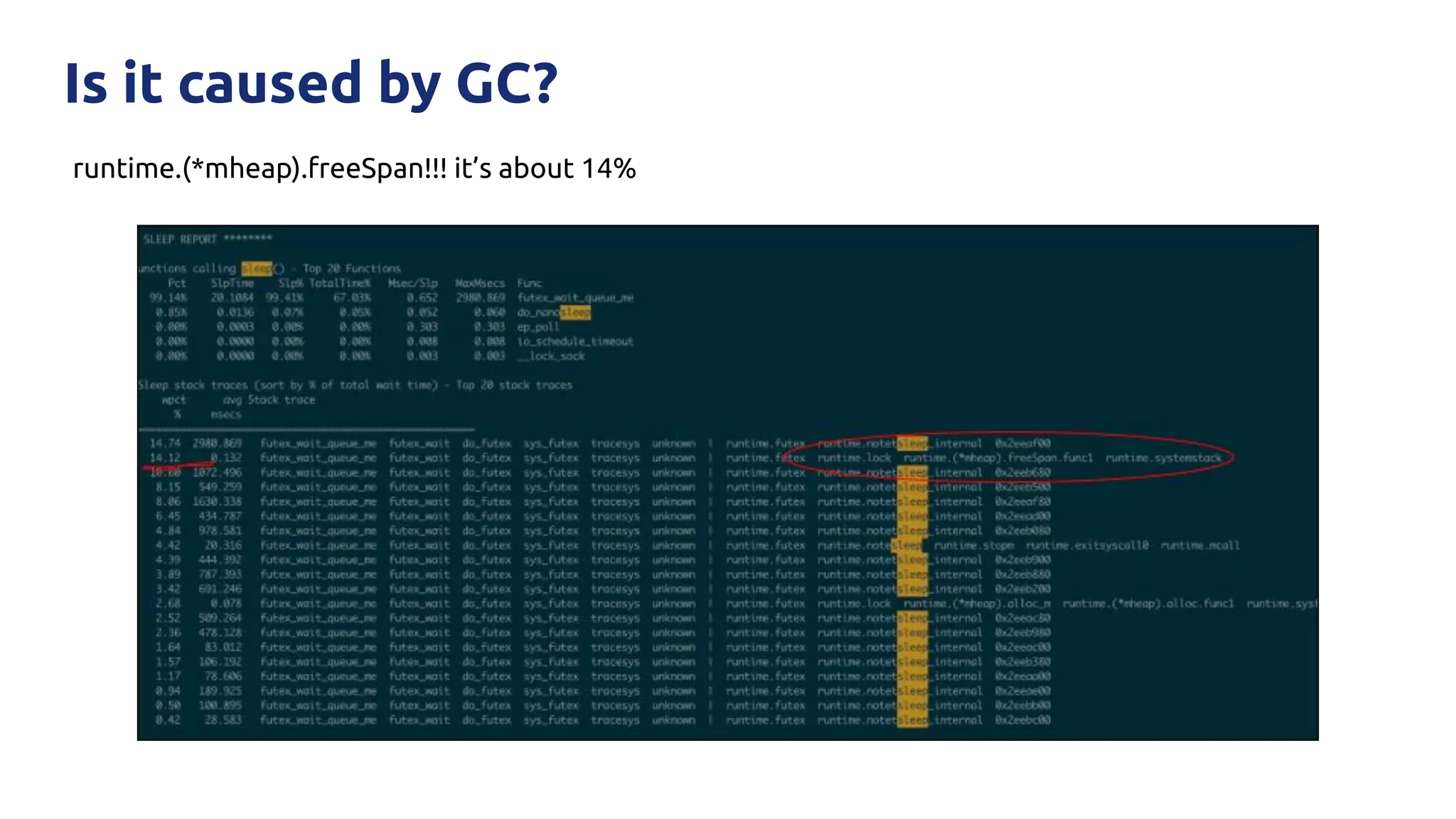

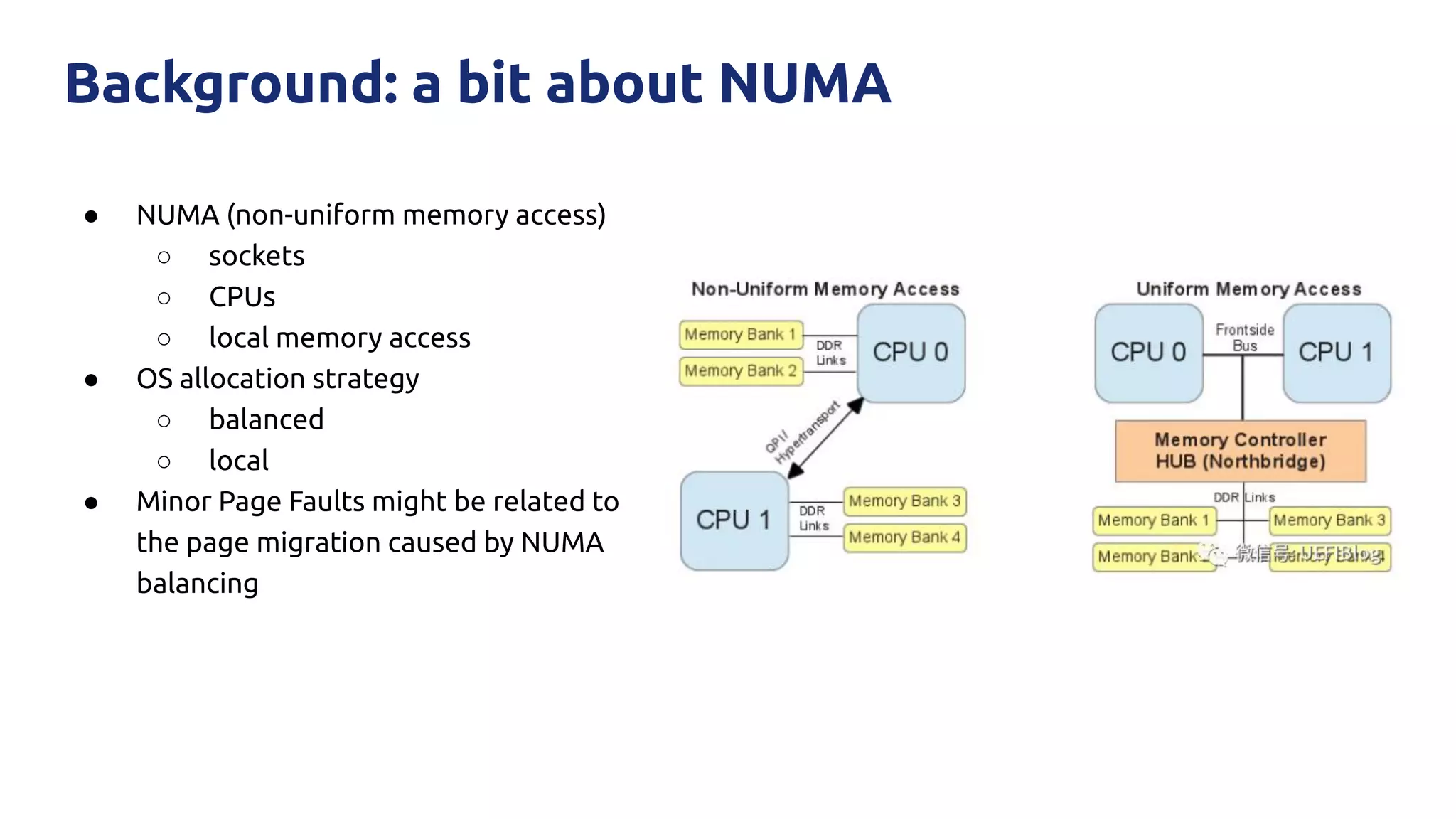

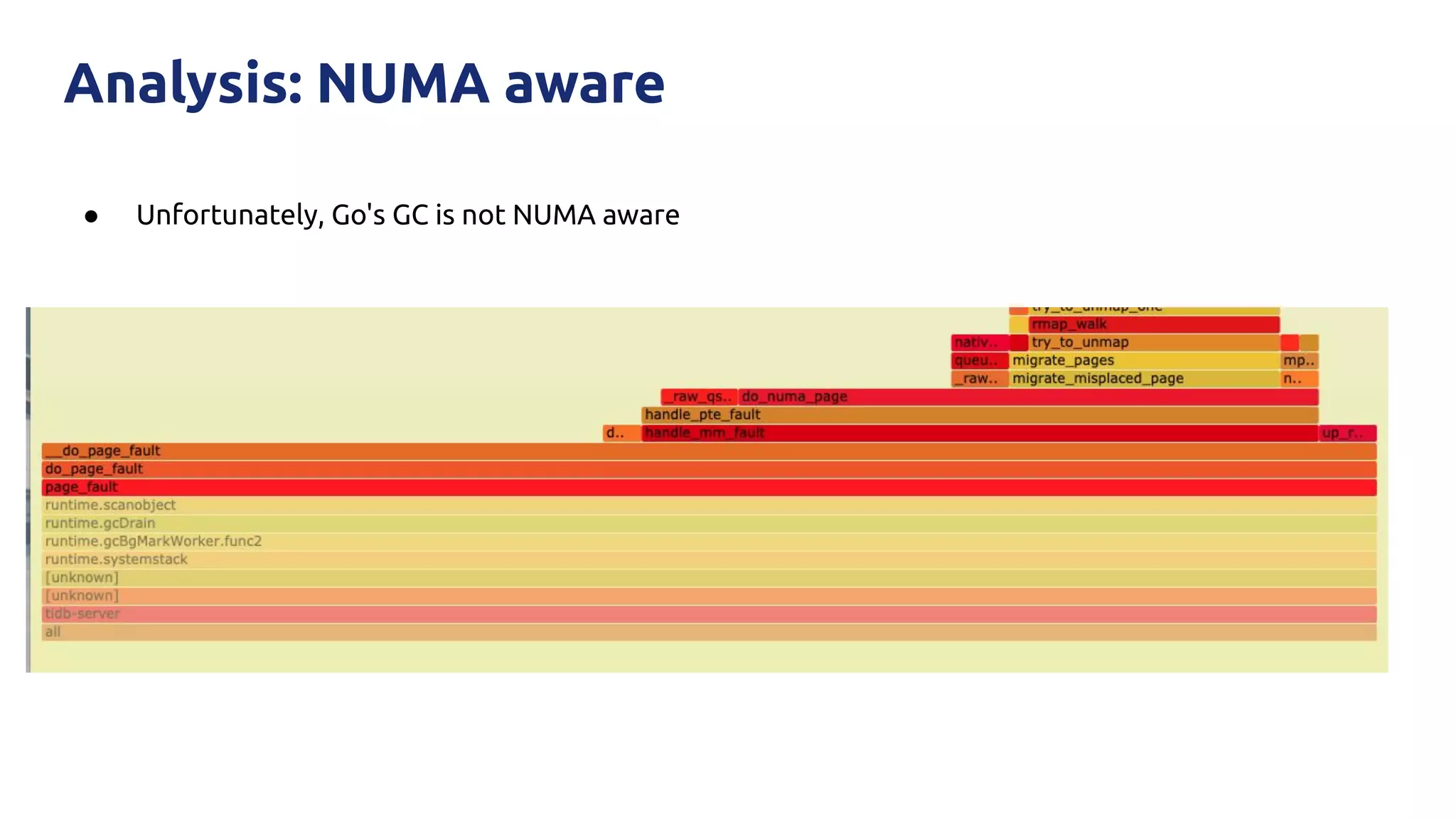

This document examines performance issues in the Go runtime as observed in TiDB, focusing on three areas: scheduler latency, memory control under Transparent Huge Pages (THP), and latency jitter caused by garbage collection (GC). Its case studies show how high load affects scheduling decisions, how THP complicates memory management, and how GC can disrupt service by introducing latency variation. It also recommends improvements, such as disabling THP and optimizing mutexes and CPU bindings.
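As a rough illustration of the THP recommendation, the sketch below checks whether THP is currently disabled on a Linux host. It assumes the standard sysfs file `/sys/kernel/mm/transparent_hugepage/enabled`, whose content lists the available modes with the active one in brackets (for example `always madvise [never]`). The helper name `activeTHPMode` is hypothetical and is not taken from TiDB or the slides.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// thpEnabledPath is the usual Linux sysfs location of the THP mode
// (assumption: the host exposes THP settings at this path).
const thpEnabledPath = "/sys/kernel/mm/transparent_hugepage/enabled"

// activeTHPMode (hypothetical helper) returns the bracketed token from
// content such as "always madvise [never]". The second result is false
// when no bracketed token is present.
func activeTHPMode(content string) (string, bool) {
	for _, field := range strings.Fields(content) {
		if len(field) > 2 && strings.HasPrefix(field, "[") && strings.HasSuffix(field, "]") {
			return field[1 : len(field)-1], true
		}
	}
	return "", false
}

func main() {
	data, err := os.ReadFile(thpEnabledPath)
	if err != nil {
		// Non-Linux hosts or restricted containers may not expose the file.
		fmt.Println("could not read THP setting:", err)
		return
	}
	mode, ok := activeTHPMode(string(data))
	if !ok {
		fmt.Println("unrecognized THP setting:", strings.TrimSpace(string(data)))
		return
	}
	fmt.Println("active THP mode:", mode)
	if mode != "never" {
		fmt.Println("THP is not disabled on this host")
	}
}
```

The parsing is kept separate from the file read so it can be exercised on any platform. Changing the mode itself usually requires root privileges and is not attempted here.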