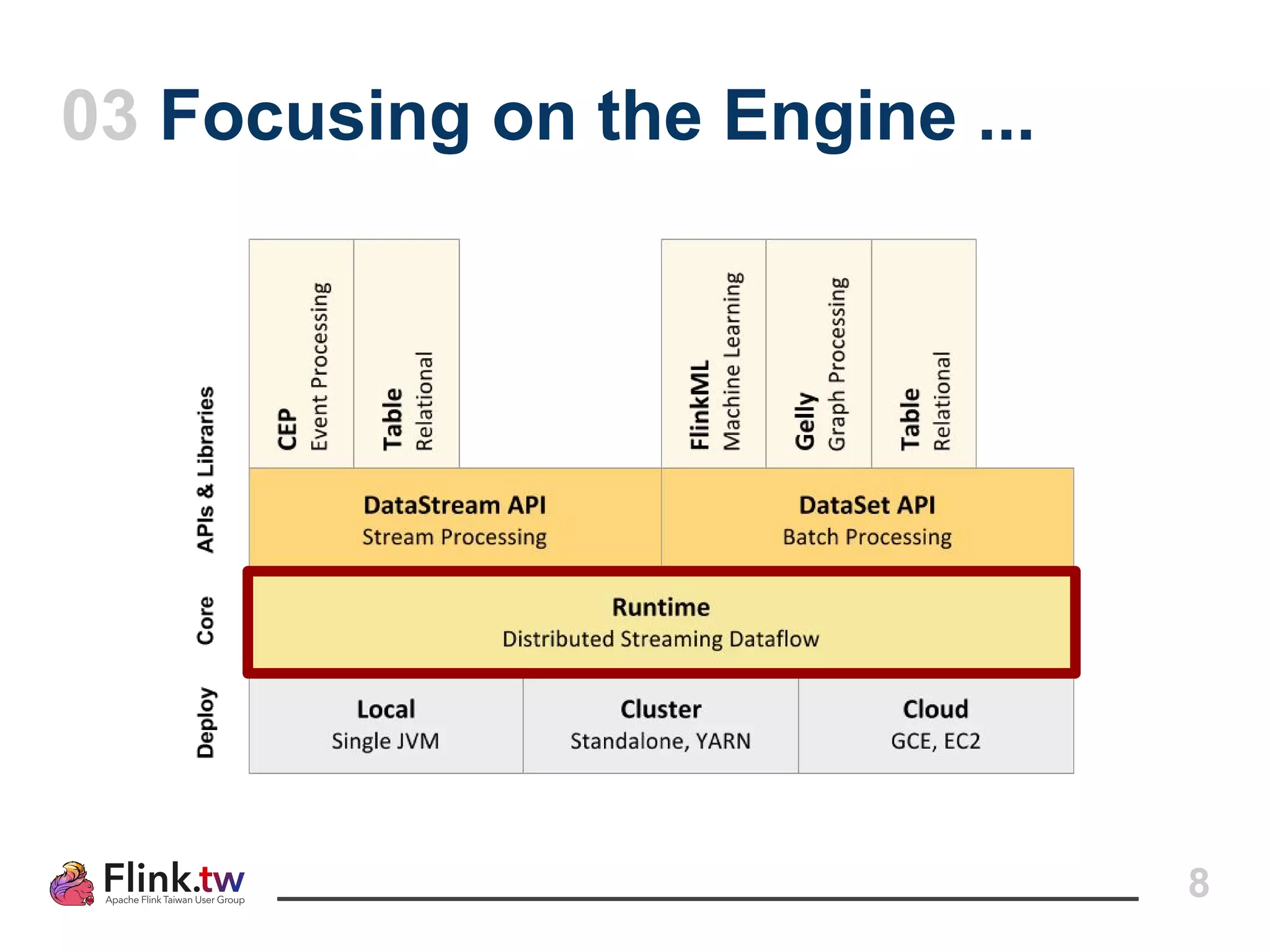

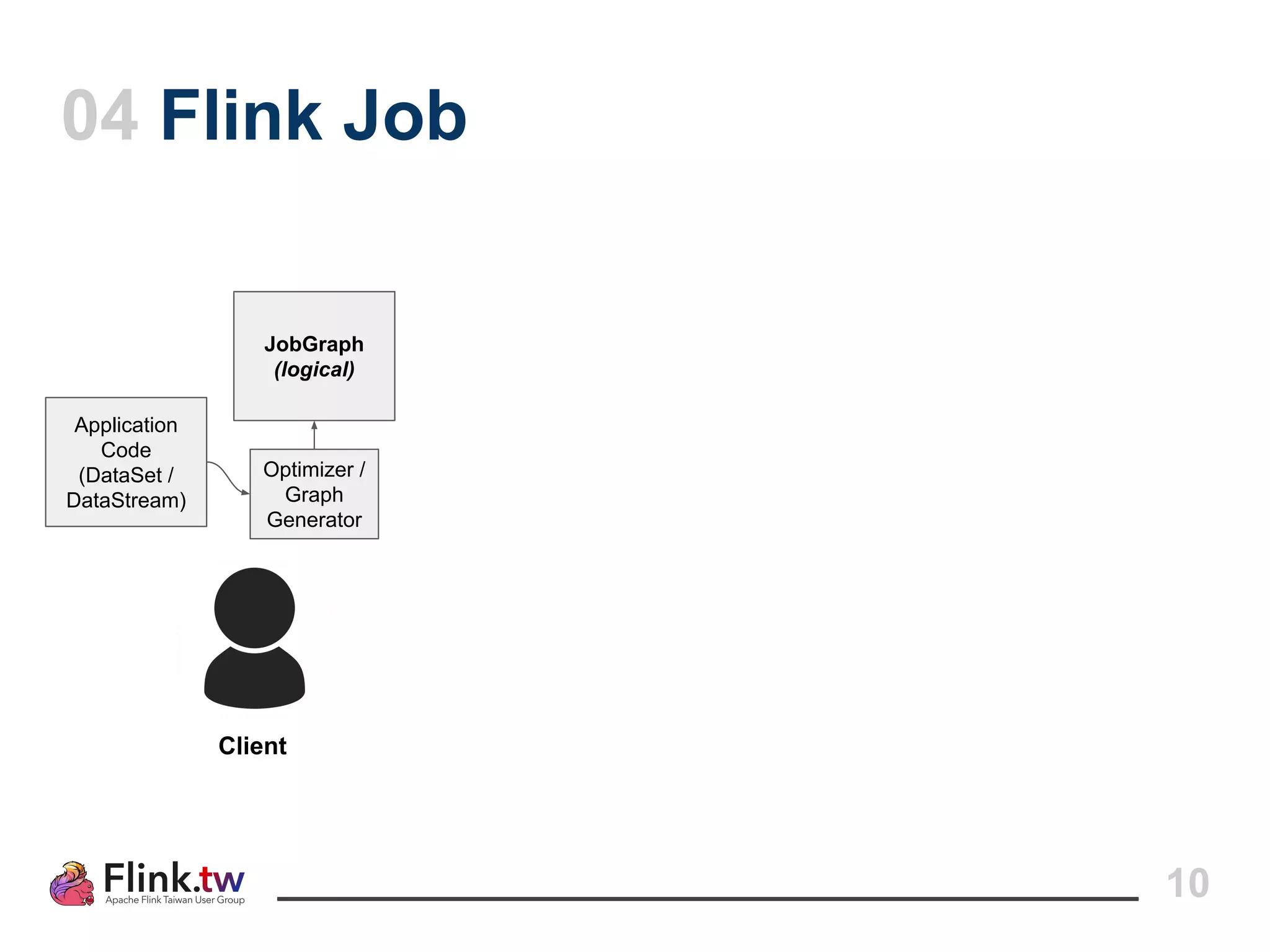

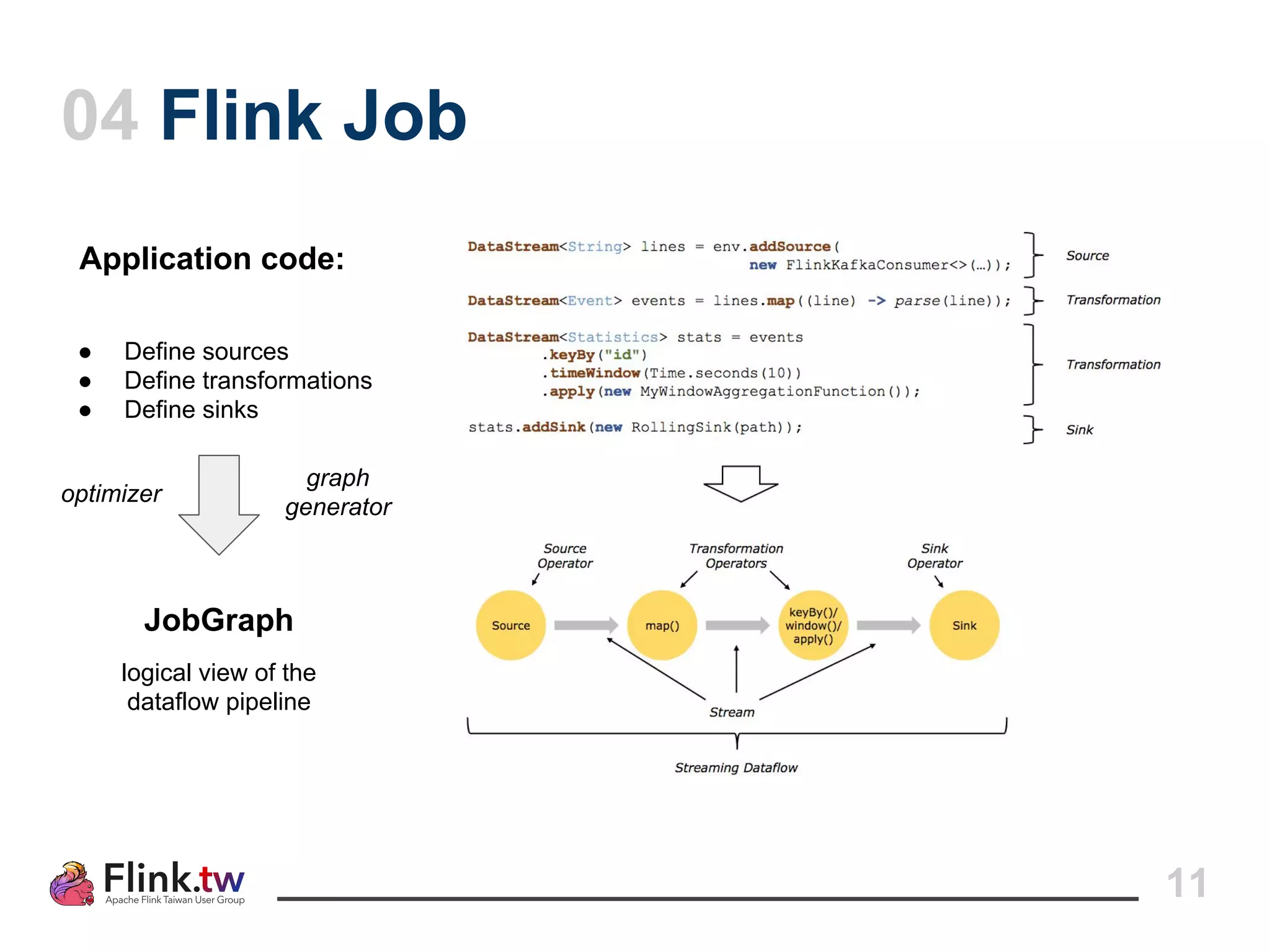

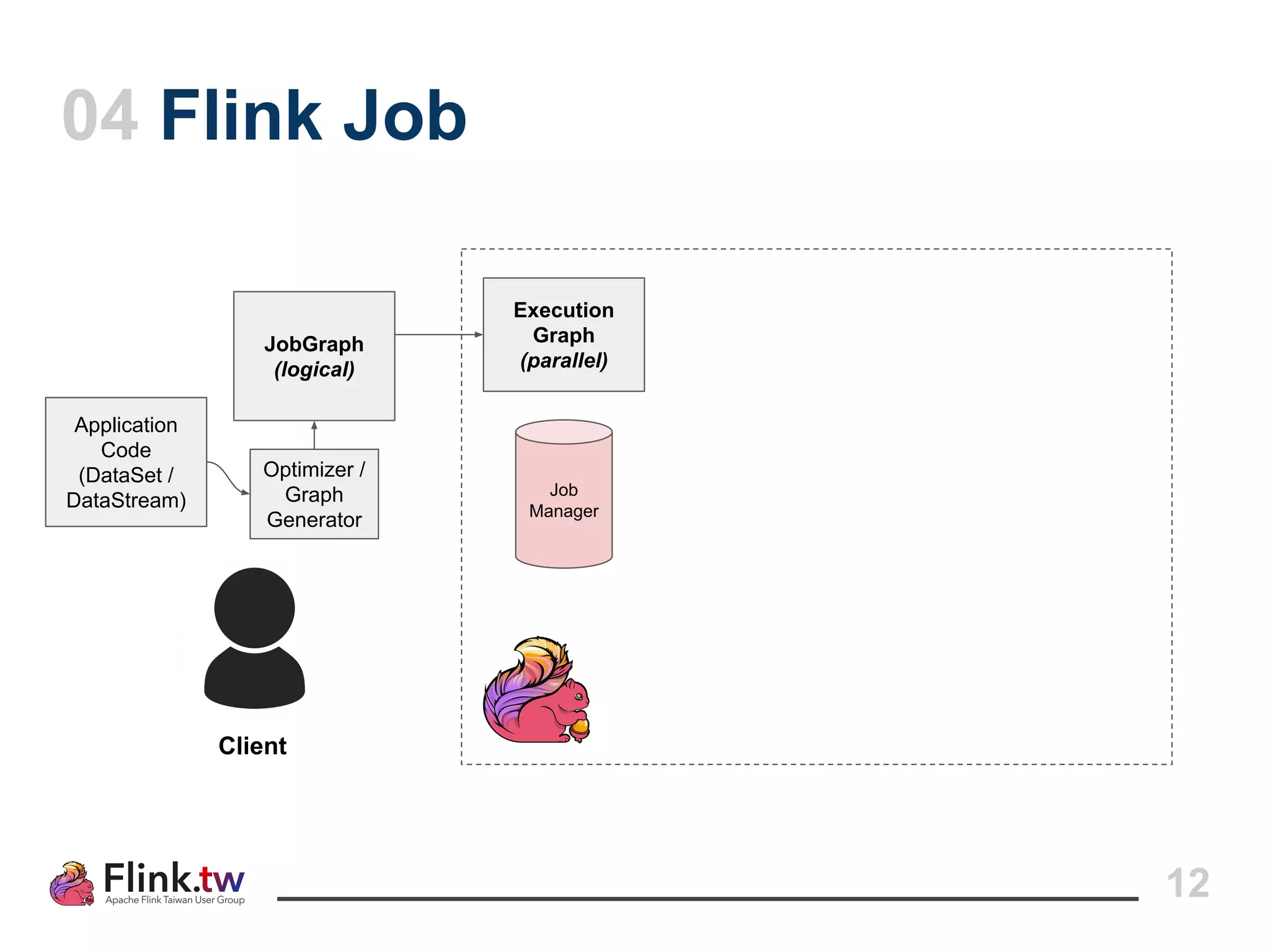

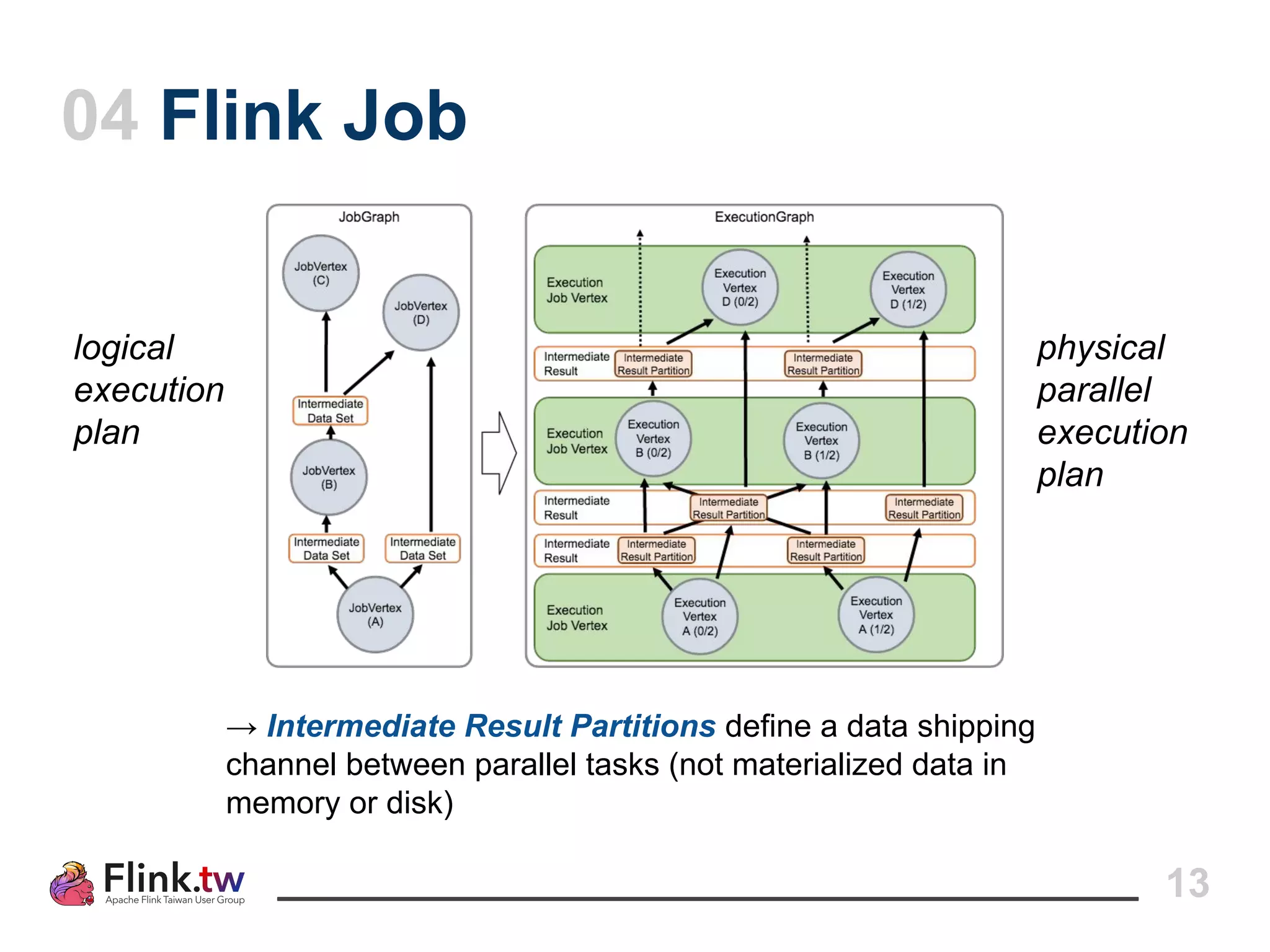

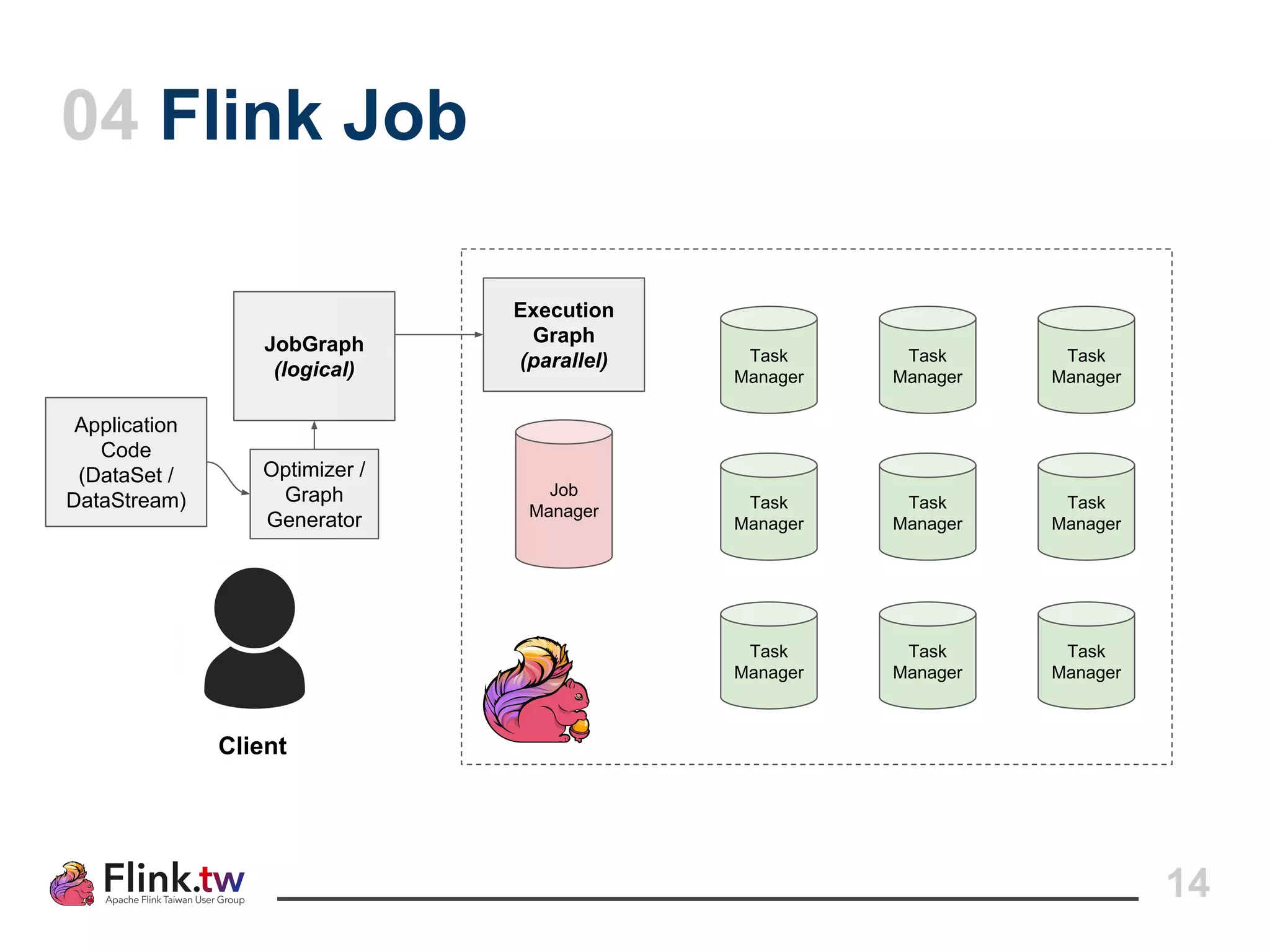

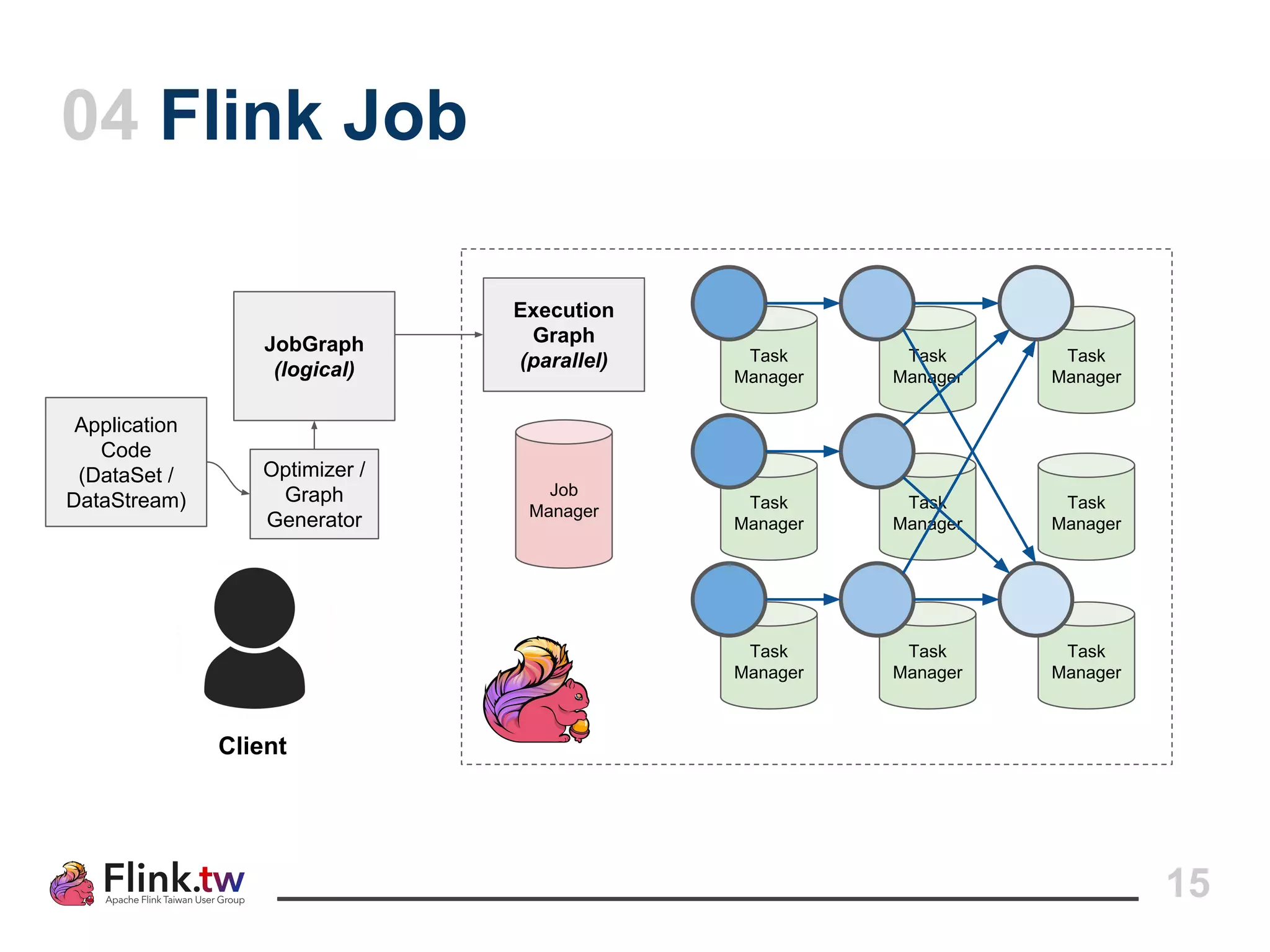

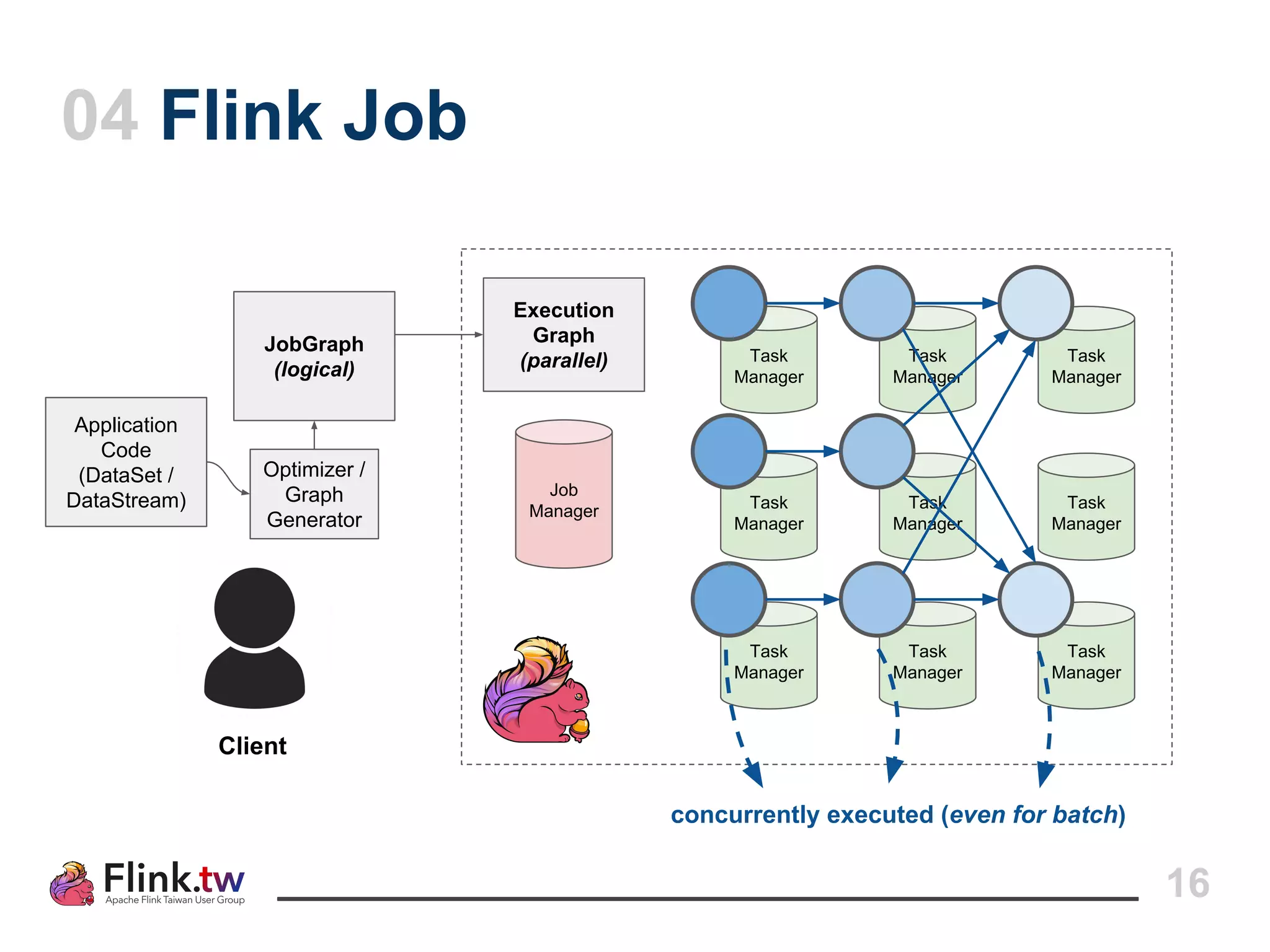

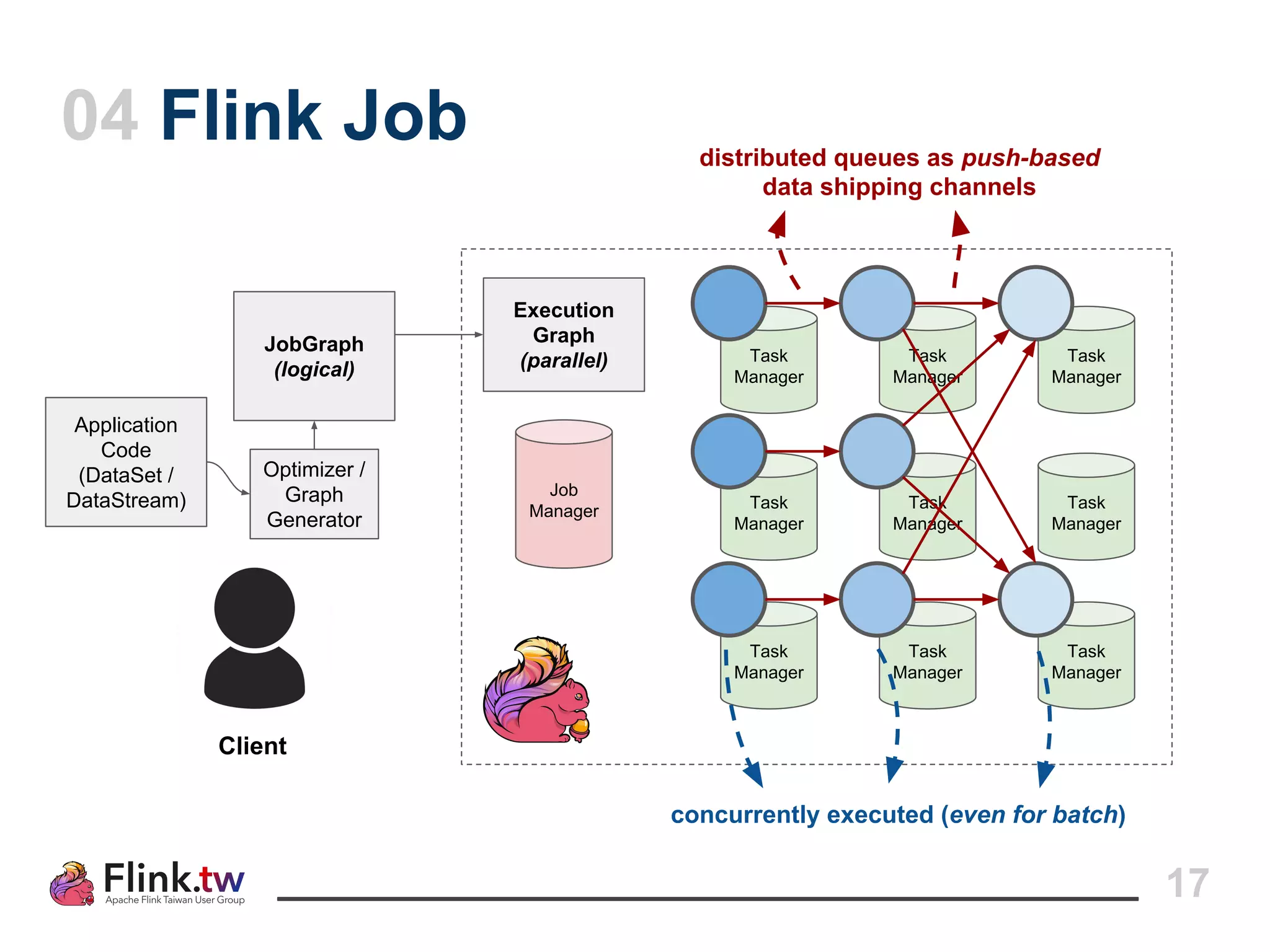

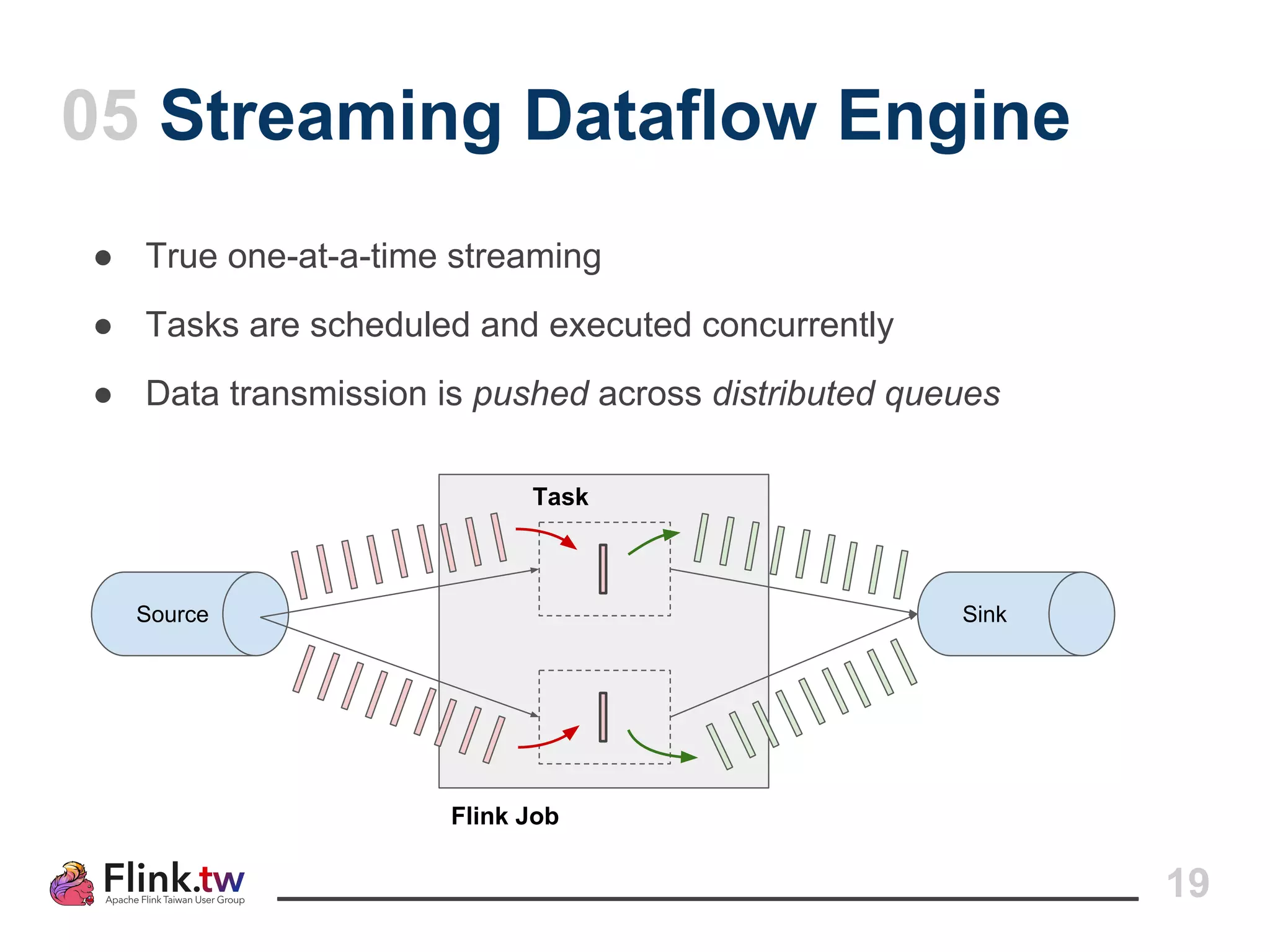

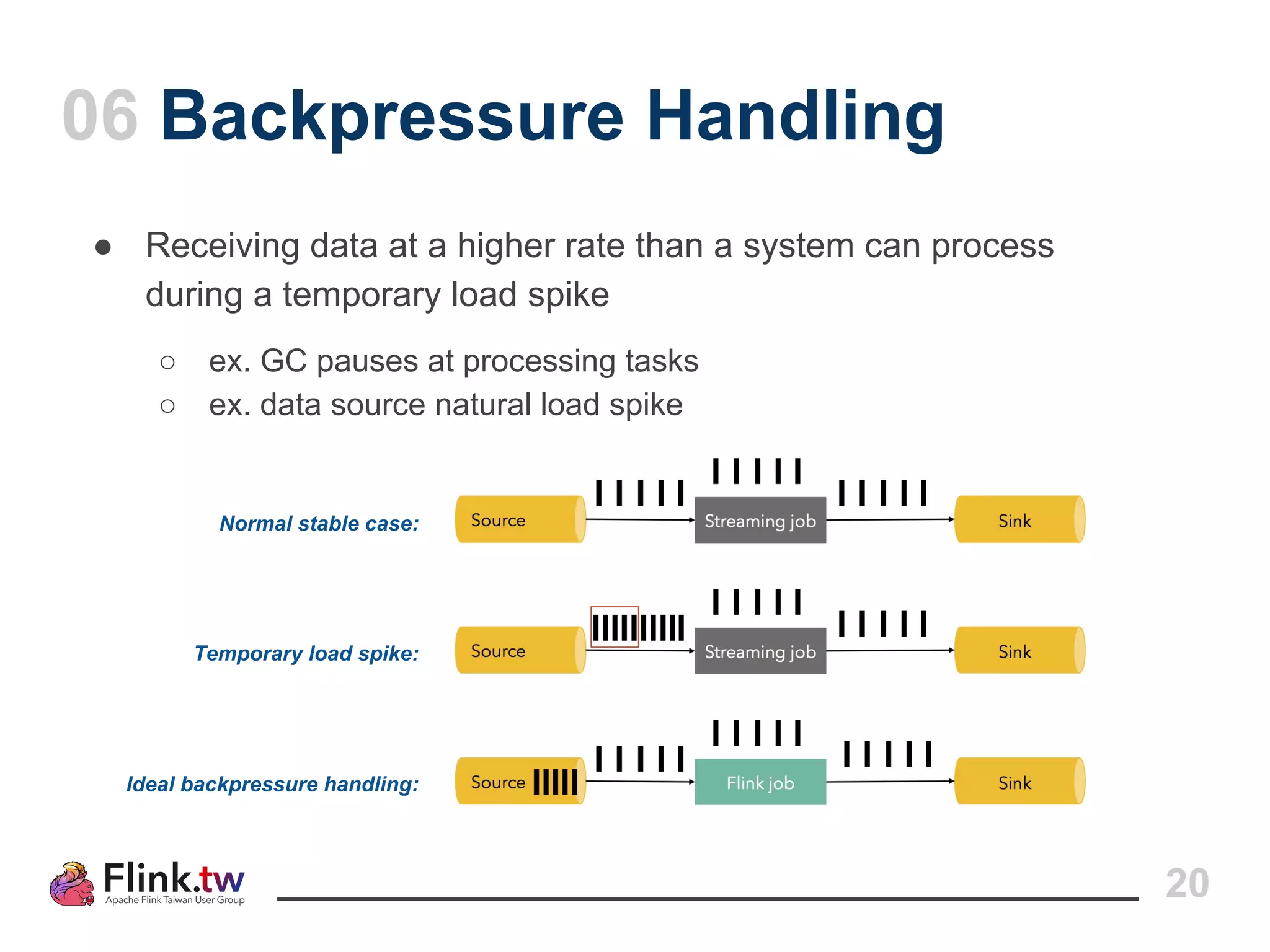

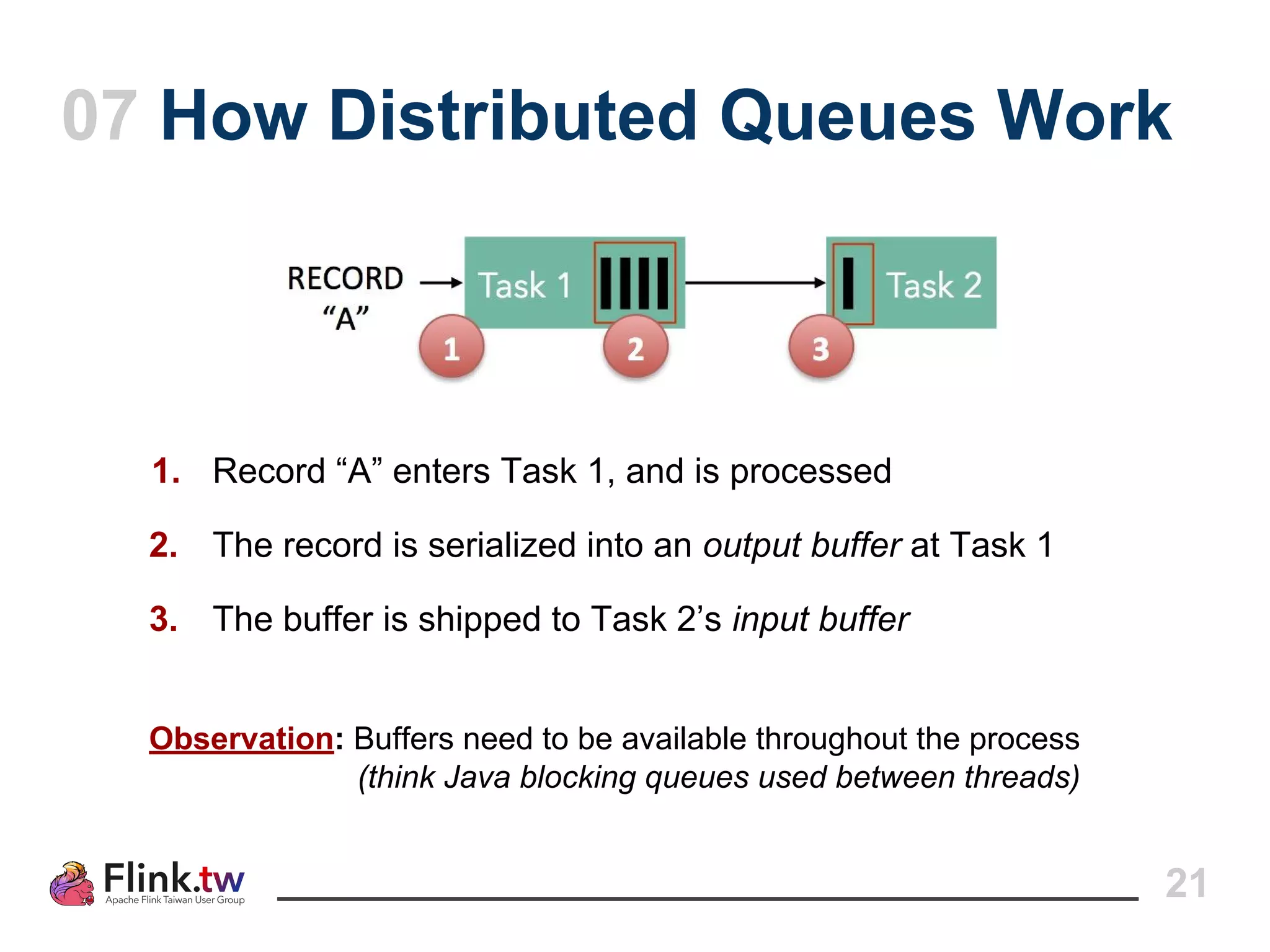

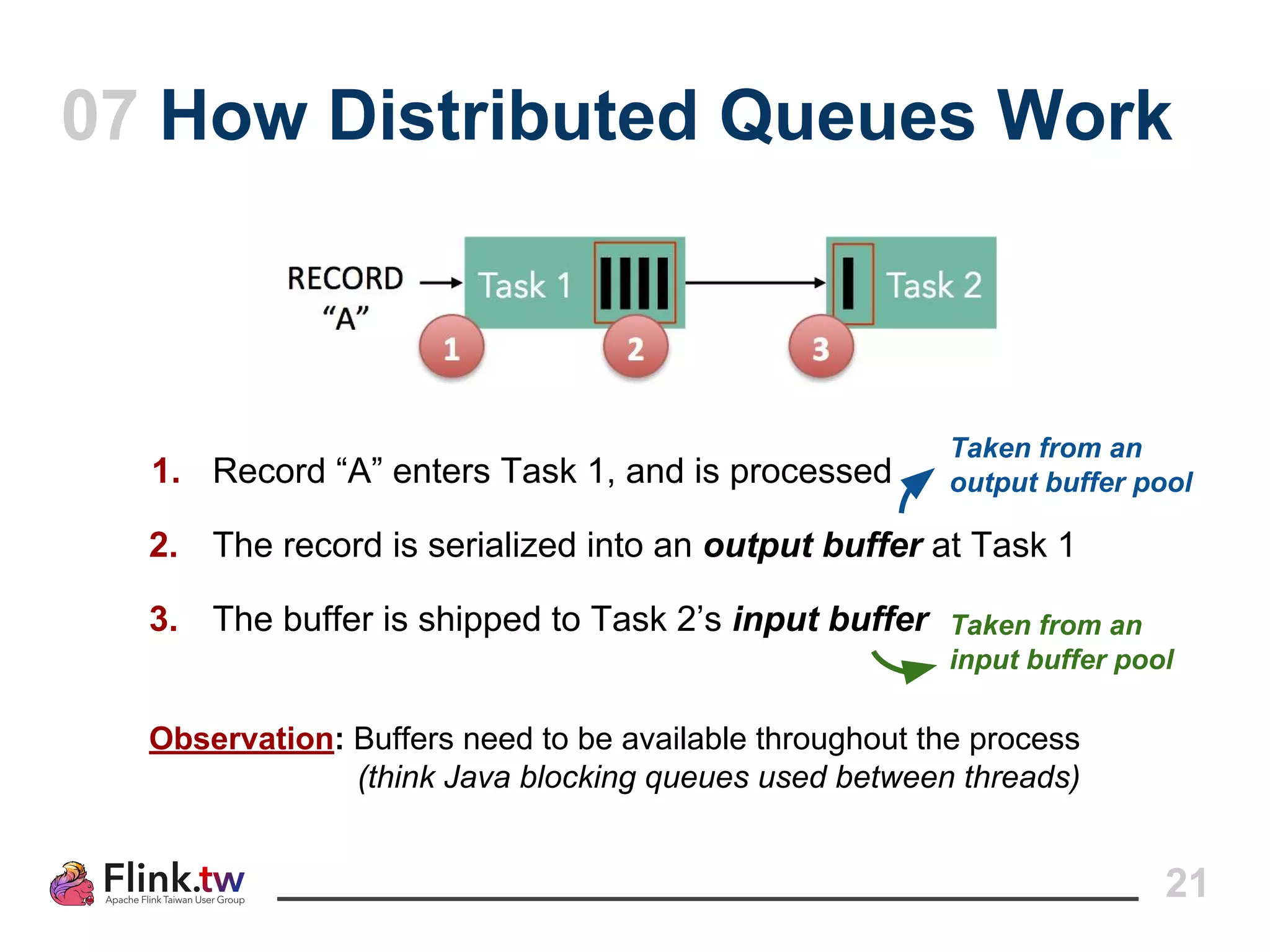

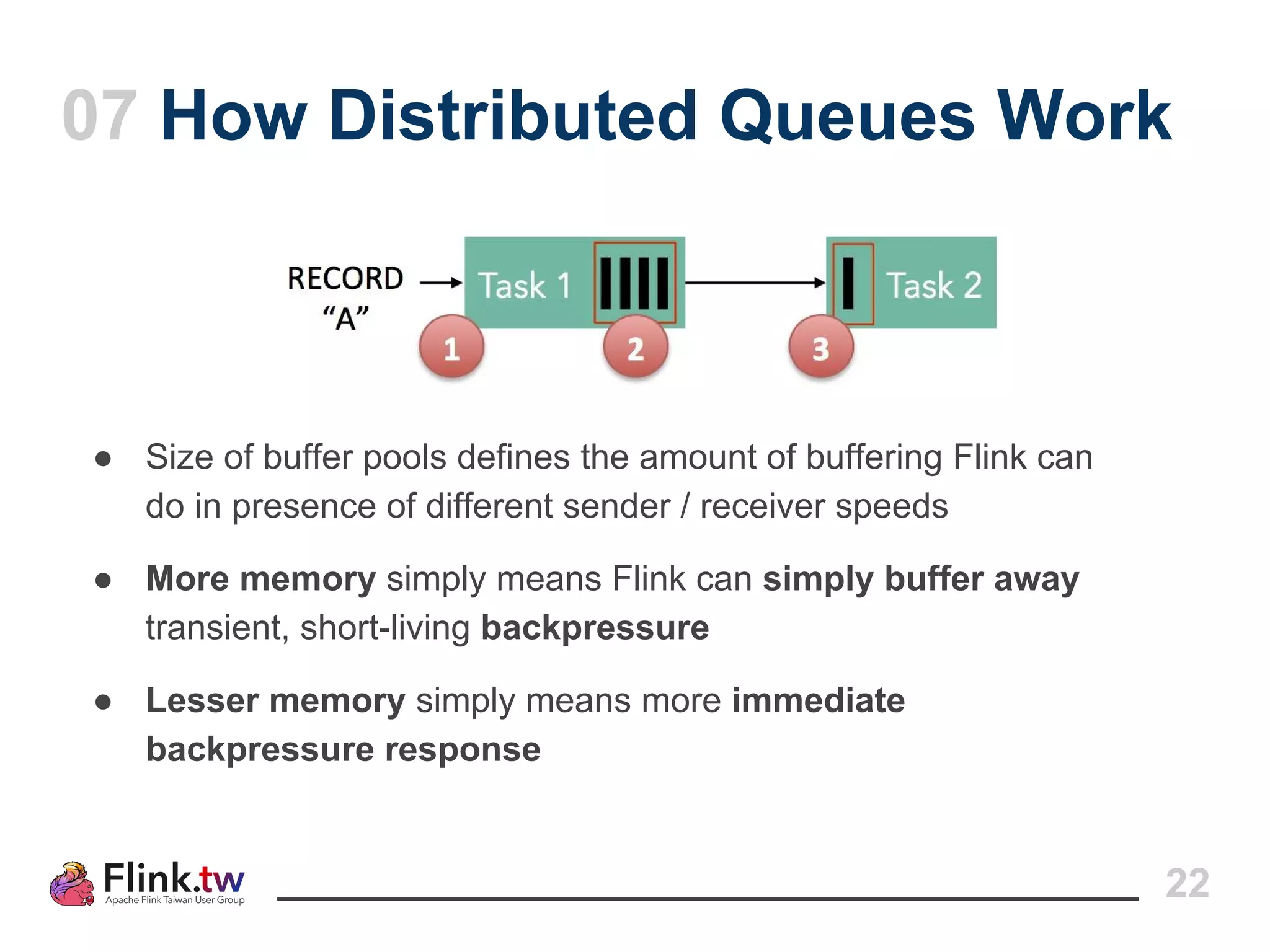

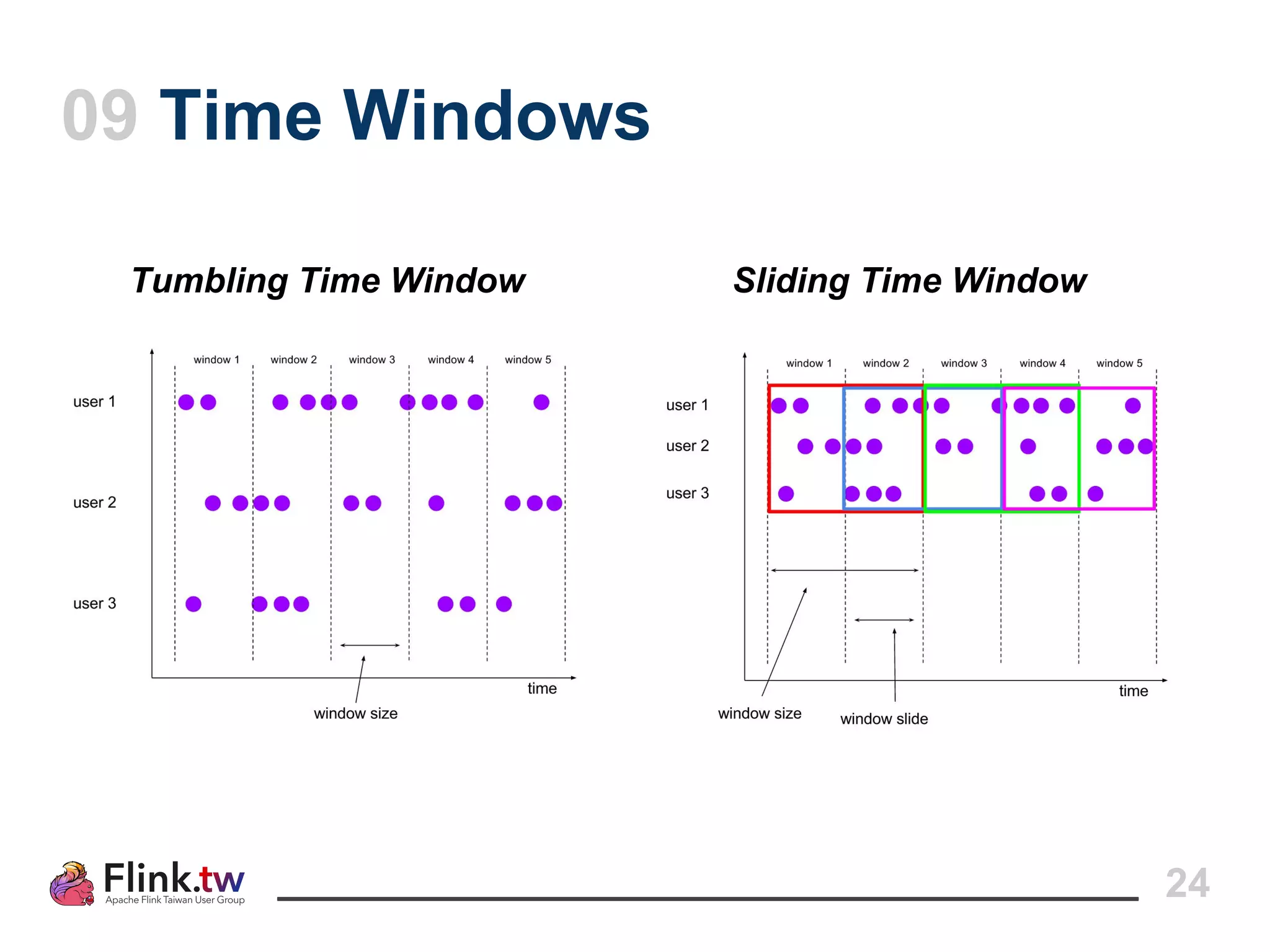

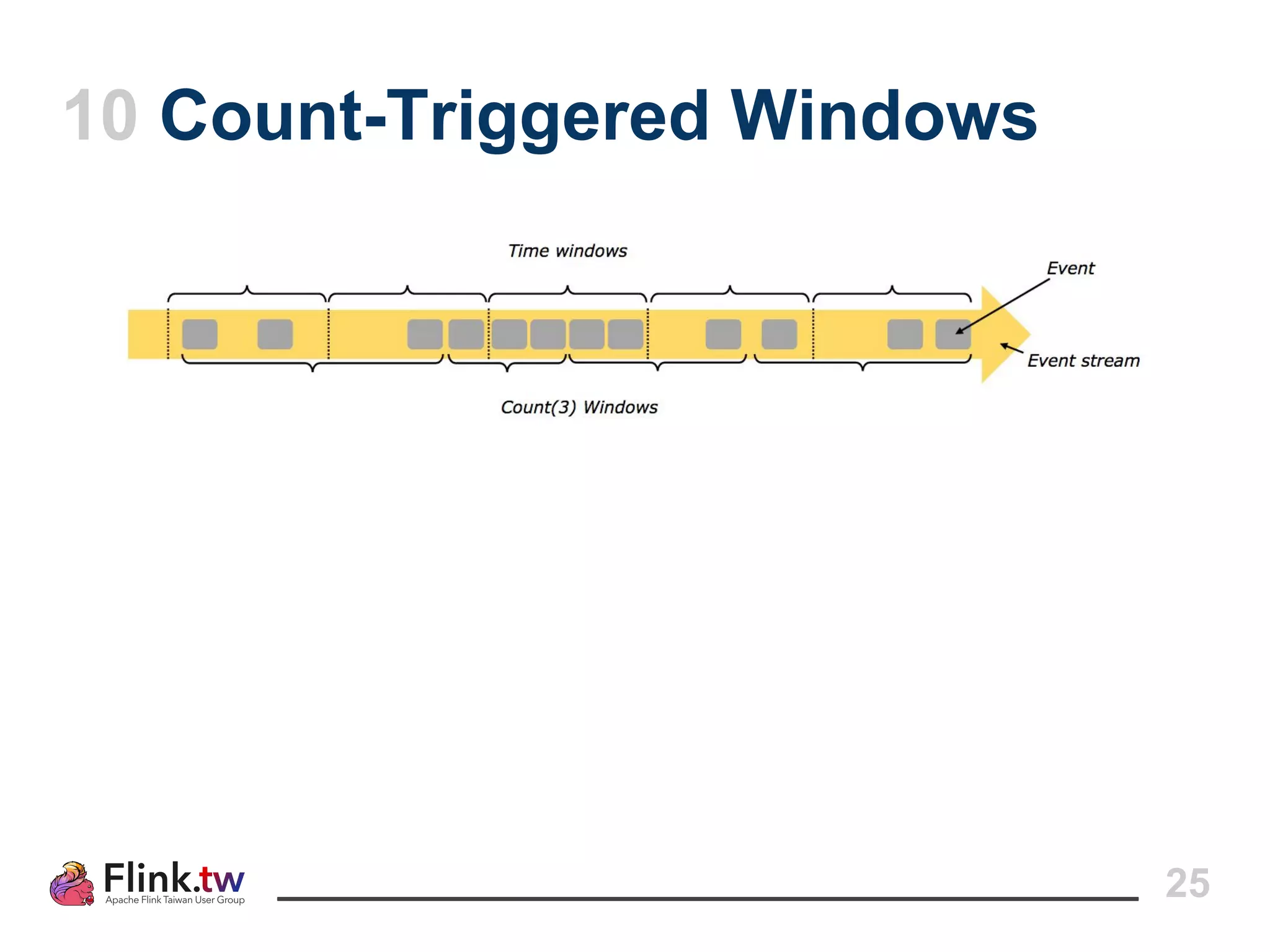

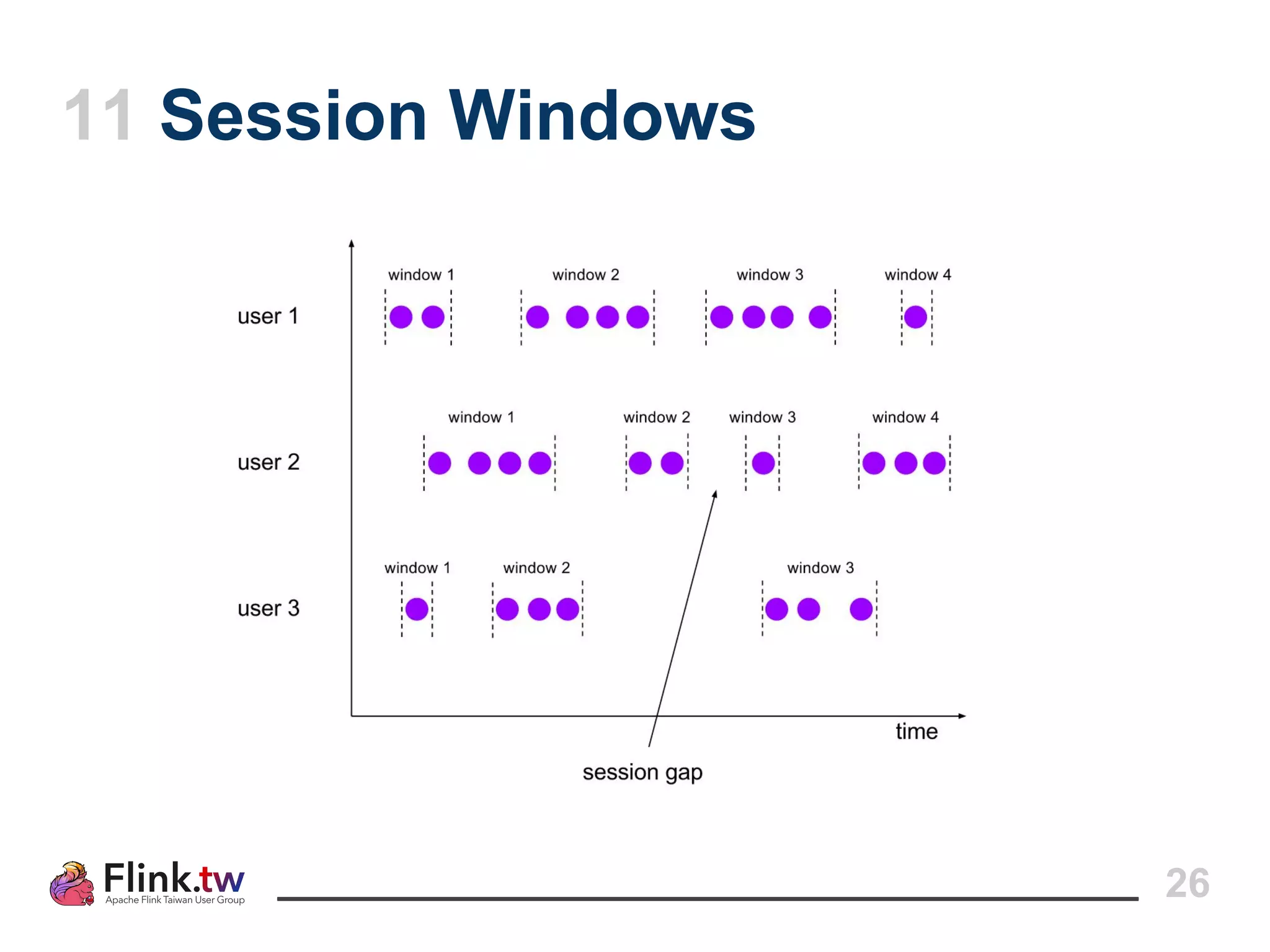

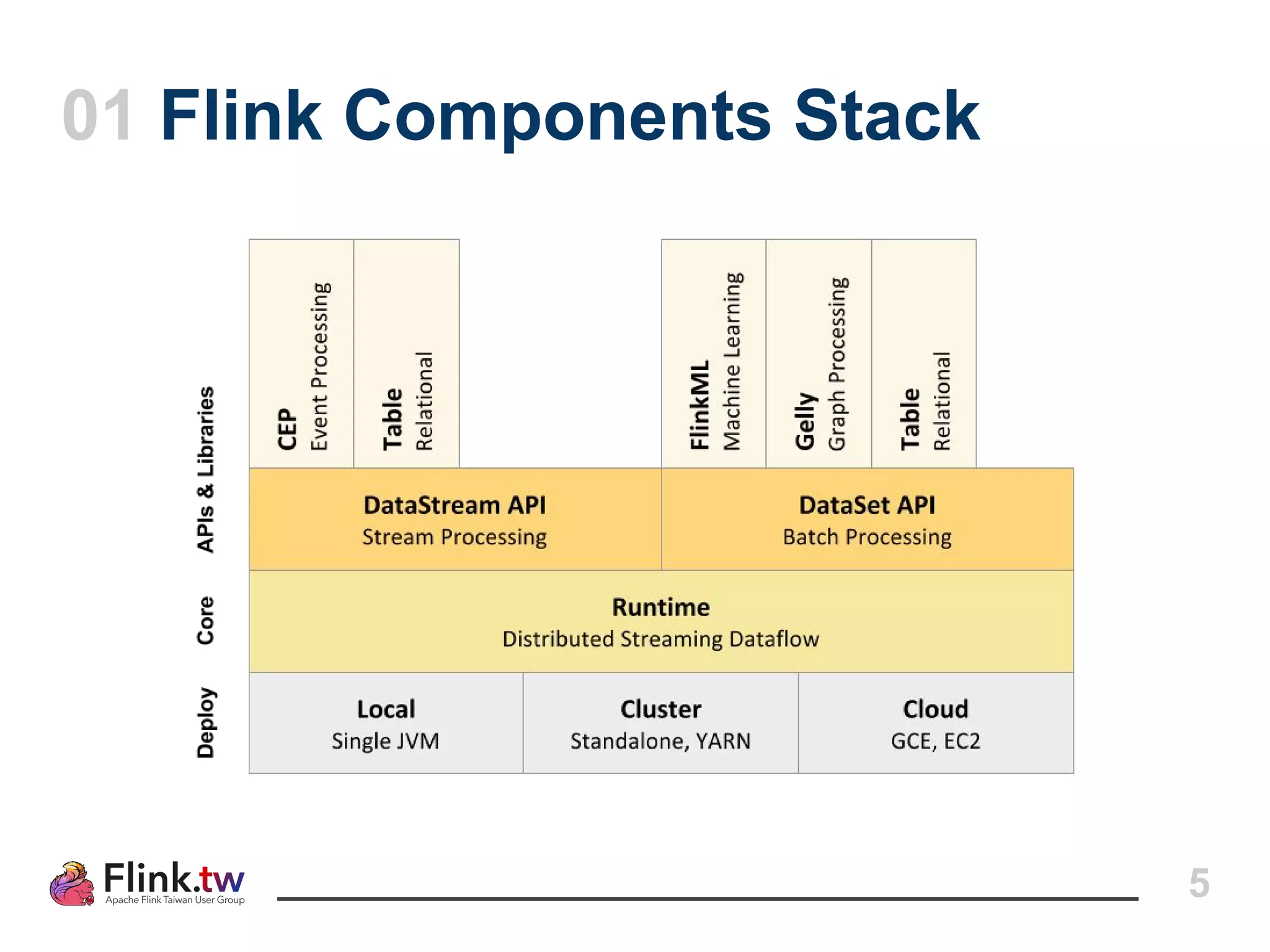

This document provides an overview of Apache Flink, an open-source platform for distributed stream and batch data processing, highlighting its components and features such as low latency and high throughput. It details the architecture of a Flink job, including the roles of application code, optimizers, and managers, and describes Flink's streaming-first philosophy and advantages. The document also covers backpressure handling, distributed queues, and flexible windowing options for processing data streams.

![6

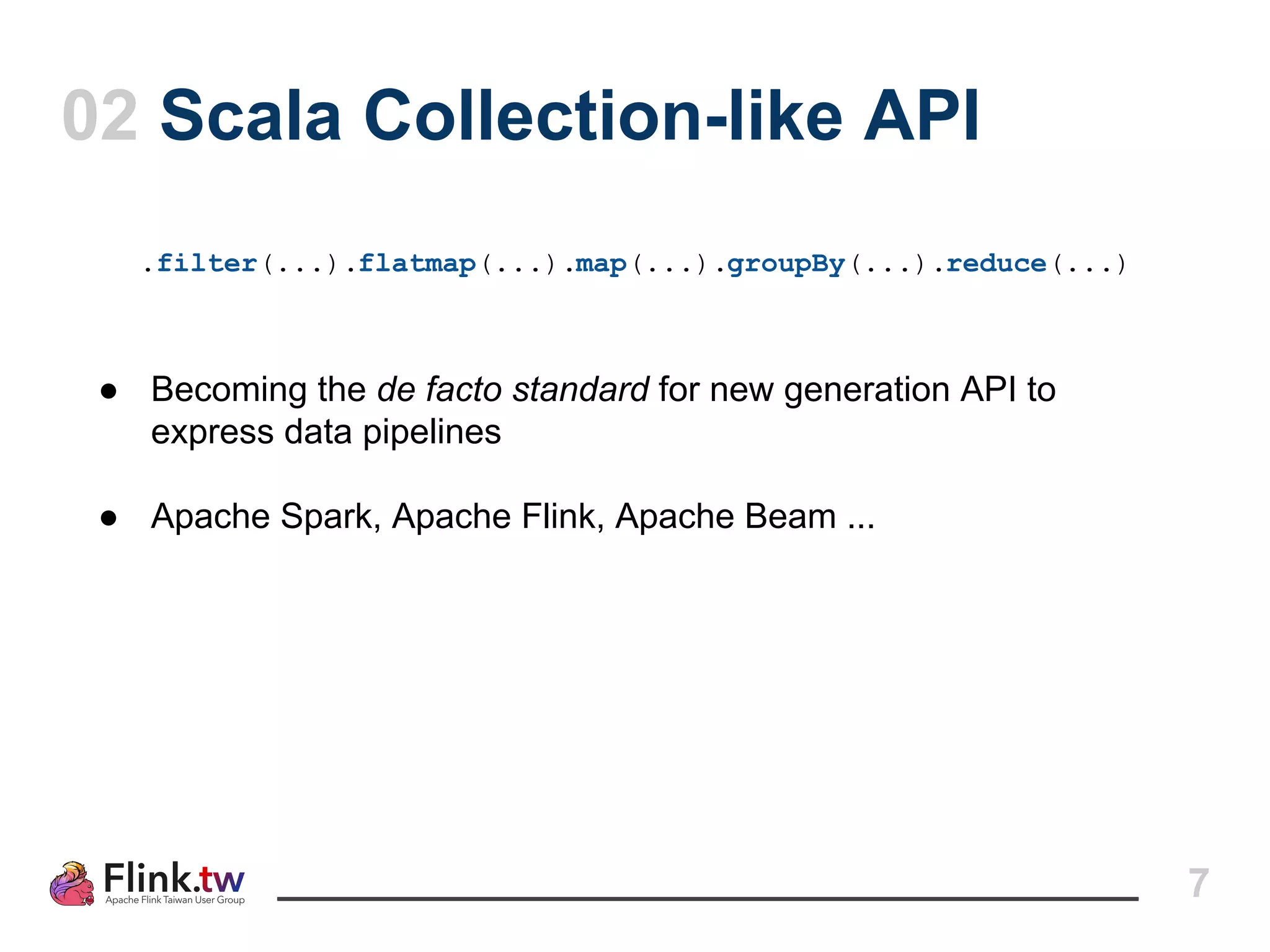

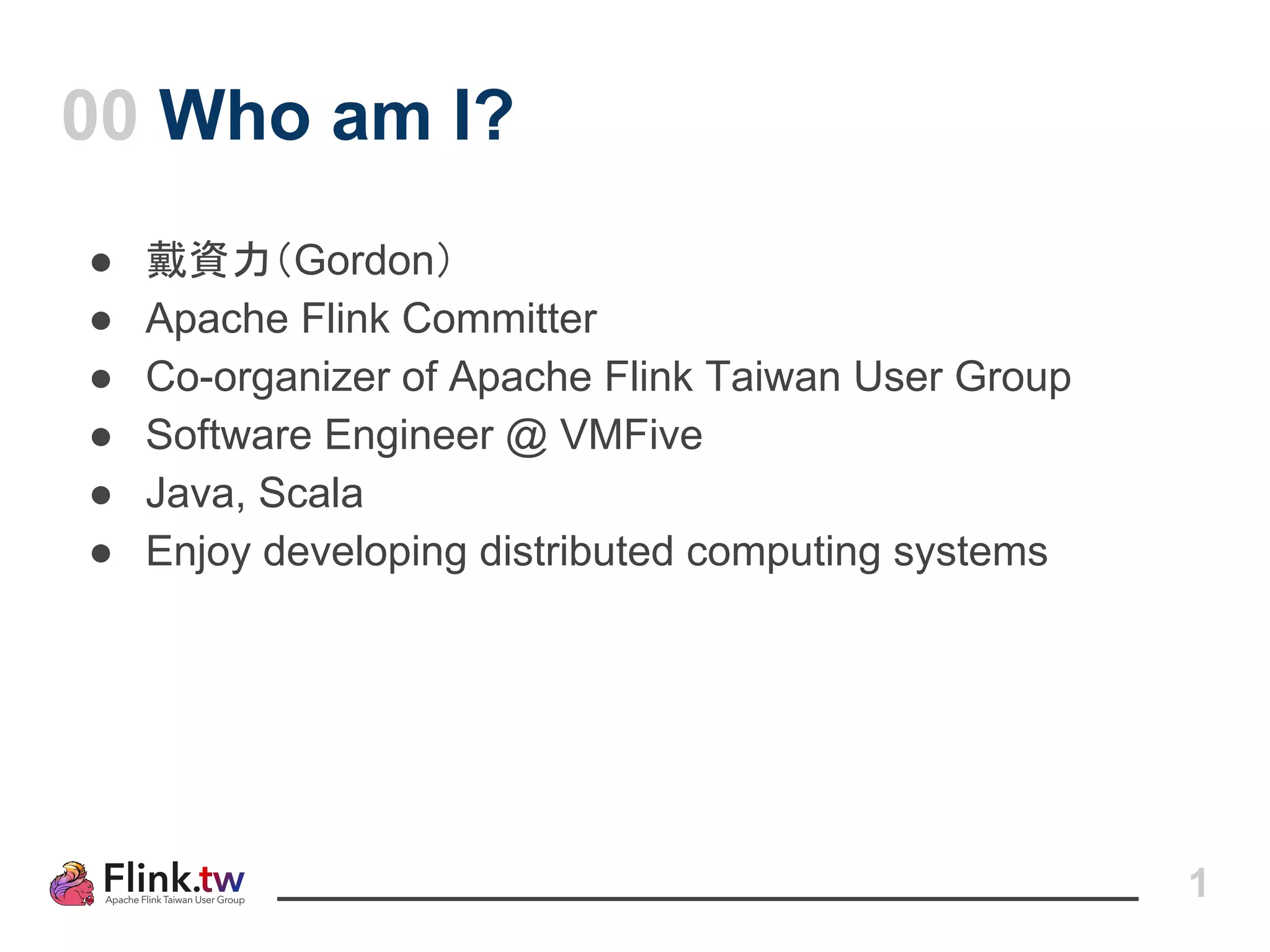

02 Scala Collection-like API

case class Word (word: String, count: Int)

val lines: DataSet[String] = env.readTextFile(...)

lines.flatMap(_.split(“ ”)). map(word => Word(word,1))

.groupBy(“word”).sum(“count”)

.print()

val lines: DataStream[String] = env.addSource(new KafkaSource(...))

lines.flatMap(_.split(“ ”)). map(word => Word(word,1))

.keyBy(“word”).timeWindow(Time.seconds(5)).sum(“count”)

.print()

DataSet API

DataStream API](https://image.slidesharecdn.com/hadoopcon2016-session1-160909155319/75/Apache-Flink-Training-Workshop-HadoopCon2016-1-System-Overview-7-2048.jpg)