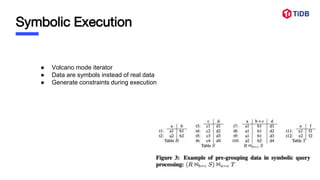

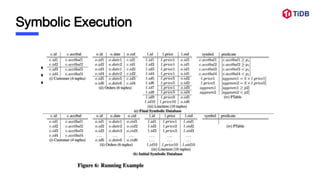

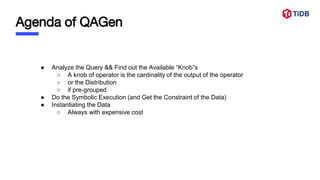

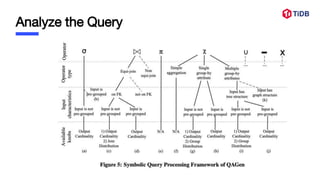

The document discusses the challenges of simulating clients' business needs with query-aware database generators, and the methods used to meet them. It highlights two approaches: QAGen, which uses symbolic execution to generate query-aware test databases, and DGL, a flexible database generation language for better workload handling. Each approach has trade-offs in efficiency and complexity when generating effective test data for complex queries.

![Hassles of Making a Generator](https://image.slidesharecdn.com/datagenerator-211105075703/85/Paper-Reading-QAGen-Generating-query-aware-test-databases-4-320.jpg)

- For a simple query, the solution is simple and direct:
  - `select [cols...] from t1 join t2 on t1.pk = t2.fk`
- But what if we need to simulate some correlation?
  - `where a < 'xx' and b > 'yy'`
- Or process a complex projection?
  - `select case when cond1 then ***, case when cond2`
- And combinations of all of the above…
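To make the contrast on this slide concrete, here is a minimal Python sketch (an illustration, not QAGen's method). For the pk-fk join, drawing every `t2.fk` from the existing `t1.pk` values guarantees that the join returns exactly `|t2|` rows. Once a predicate such as `a < 'xx' and b > 'yy'` must return a specific number of rows, the generator has to plant satisfying rows deliberately instead of drawing `a` and `b` independently. The function names, the integer thresholds `A_MAX` and `B_MIN` standing in for `'xx'` and `'yy'`, and the target counts are all hypothetical.

```python
import random
from typing import Dict, List, Tuple

Row = Dict[str, int]

def generate_pk_fk(n_parent: int, n_child: int, rng: random.Random) -> Tuple[List[Row], List[Row]]:
    """Simple case: every t2.fk references an existing t1.pk."""
    t1 = [{"pk": i} for i in range(1, n_parent + 1)]
    t2 = [{"fk": rng.randint(1, n_parent)} for _ in range(n_child)]
    return t1, t2

def join_size(t1: List[Row], t2: List[Row]) -> int:
    """Size of t1 join t2 on t1.pk = t2.fk (pk is unique, so one match per valid fk)."""
    pks = {r["pk"] for r in t1}
    return sum(1 for r in t2 if r["fk"] in pks)

# Hypothetical integer thresholds standing in for 'xx' and 'yy'.
A_MAX, B_MIN = 50, 50

def generate_with_selectivity(n_rows: int, k: int, rng: random.Random) -> List[Row]:
    """Correlated case: exactly k rows satisfy (a < A_MAX and b > B_MIN)."""
    rows = []
    for i in range(n_rows):
        if i < k:
            # Plant a row that satisfies both predicates.
            rows.append({"a": rng.randint(0, A_MAX - 1), "b": rng.randint(B_MIN + 1, 100)})
        else:
            # Break the conjunction by violating the first predicate.
            rows.append({"a": rng.randint(A_MAX, 100), "b": rng.randint(0, 100)})
    rng.shuffle(rows)
    return rows

rng = random.Random(42)
t1, t2 = generate_pk_fk(10, 100, rng)
print(join_size(t1, t2))  # 100

rows = generate_with_selectivity(1000, 30, rng)
print(sum(1 for r in rows if r["a"] < A_MAX and r["b"] > B_MIN))  # 30
```

Drawing `a` and `b` independently would only hit the target count in expectation. Forcing it exactly is easy for one conjunction, but it gets harder as predicates, `case when` projections, and joins are stacked, which is the hassle this slide points at.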

![Symbolic Execution - Selection](https://image.slidesharecdn.com/datagenerator-211105075703/85/Paper-Reading-QAGen-Generating-query-aware-test-databases-11-320.jpg)

- Call GetNext on the child and read tuple `t`.
- [Positive Tuple Annotation] If the output has not yet reached the target cardinality `c`:
  - for each symbol `s` in `t`, insert `<s, p>`;
  - return `t` to the parent.
- [Negative Tuple Processing] Otherwise, `t` is filtered out:
  - for each symbol `s` in `t`, insert `<s, !p>`.
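A minimal Python sketch of these selection steps, assuming symbolic tuples are dicts from attribute names to symbols and that the constraint store is a plain list of `(symbol, predicate)` pairs. Two choices are ours rather than the slide's: only the symbols of attributes referenced by `p` are annotated (`pred_attrs`), and the operator consumes its entire child input so that every tuple receives either `<s, p>` or `<s, !p>`. All names are hypothetical.

```python
from typing import Dict, List, Tuple

SymTuple = Dict[str, str]        # attribute name -> symbol, e.g. {"a": "$a1"}
Constraint = Tuple[str, str]     # (symbol, predicate), i.e. <s, p> or <s, !p>

def symbolic_select(child: List[SymTuple], pred: str, pred_attrs: List[str],
                    c: int, constraints: List[Constraint]) -> List[SymTuple]:
    """Symbolically execute a selection whose output must contain exactly c tuples.

    Consumes the whole child input so that every tuple is annotated, and returns
    the tuples passed to the parent operator.
    """
    output: List[SymTuple] = []
    for t in child:                                   # GetNext on the child: read tuple t
        symbols = [t[attr] for attr in pred_attrs]    # symbols the predicate refers to
        if len(output) < c:
            # Positive tuple annotation: t must satisfy p.
            constraints.extend((s, pred) for s in symbols)
            output.append(t)                          # return t to the parent
        else:
            # Negative tuple processing: t must violate p and is filtered out.
            constraints.extend((s, f"!({pred})") for s in symbols)
    return output

child = [{"a": f"$a{i}", "b": f"$b{i}"} for i in range(1, 4)]
constraints: List[Constraint] = []
out = symbolic_select(child, "a < 'xx'", ["a"], c=2, constraints=constraints)
print(out)          # [{'a': '$a1', 'b': '$b1'}, {'a': '$a2', 'b': '$b2'}]
print(constraints)  # [('$a1', "a < 'xx'"), ('$a2', "a < 'xx'"), ('$a3', "!(a < 'xx')")]
```

A later constraint-solving step would then pick concrete values for `$a1`, `$a2`, `$a3` that satisfy the recorded constraints; that step is outside this sketch.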

![Symbolic Execution - Join](https://image.slidesharecdn.com/datagenerator-211105075703/85/Paper-Reading-QAGen-Generating-query-aware-test-databases-12-320.jpg)

- Generate the join distribution.
- Call GetNext on the (outer) child and read tuple `t`.
- [Positive Tuple Annotation] Replace the fk symbol with the matching pk symbol.
- [Negative Tuple Processing]
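The join steps can be sketched in the same style for a pk-fk equi-join. Assumptions: the outer child is the fk side, the join distribution spreads the `c` output rows evenly over the pk tuples (the slide does not say how the distribution is generated), and the table and attribute names (`orders`, `lineitem`, `o_key`, `l_okey`) are hypothetical. Because the slide leaves negative tuple processing unspecified, this sketch only collects the fk tuples left over after `c` results, and it rewrites the tuple itself rather than any previously recorded constraints.

```python
from typing import Dict, List, Tuple

SymTuple = Dict[str, str]   # attribute name -> symbol

def uniform_join_distribution(n_pk: int, c: int) -> List[int]:
    """Hypothetical join distribution: spread c join results evenly over n_pk pk tuples."""
    if n_pk <= 0:
        raise ValueError("need at least one primary-key tuple")
    base, extra = divmod(c, n_pk)
    return [base + (1 if i < extra else 0) for i in range(n_pk)]

def symbolic_pk_fk_join(pk_side: List[SymTuple], fk_side: List[SymTuple],
                        pk: str, fk: str, c: int) -> Tuple[List[SymTuple], List[SymTuple]]:
    """Positive tuple annotation for a pk-fk equi-join with output cardinality c."""
    dist = uniform_join_distribution(len(pk_side), c)
    # Expand the distribution: the i-th output row joins with targets[i].
    targets = [p for p, k in zip(pk_side, dist) for _ in range(k)]
    joined: List[SymTuple] = []
    leftover: List[SymTuple] = []
    for t in fk_side:                          # GetNext on the outer (fk-side) child
        if len(joined) < c:
            match = targets[len(joined)]
            annotated = {**t, fk: match[pk]}   # replace the fk symbol with the pk symbol
            joined.append({**match, **annotated})
        else:
            # Negative tuple processing is not detailed on the slide; just collect.
            leftover.append(t)
    return joined, leftover

orders = [{"o_key": "$o1"}, {"o_key": "$o2"}]
lineitem = [{"l_key": f"$l{i}", "l_okey": f"$f{i}"} for i in range(1, 6)]
joined, leftover = symbolic_pk_fk_join(orders, lineitem, pk="o_key", fk="l_okey", c=3)
print([r["l_okey"] for r in joined])  # ['$o1', '$o1', '$o2']
print(len(leftover))                  # 2
```

Replacing the fk symbol with the pk symbol means the two attributes share one symbol, so whatever value is later chosen for it satisfies the join condition by construction.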