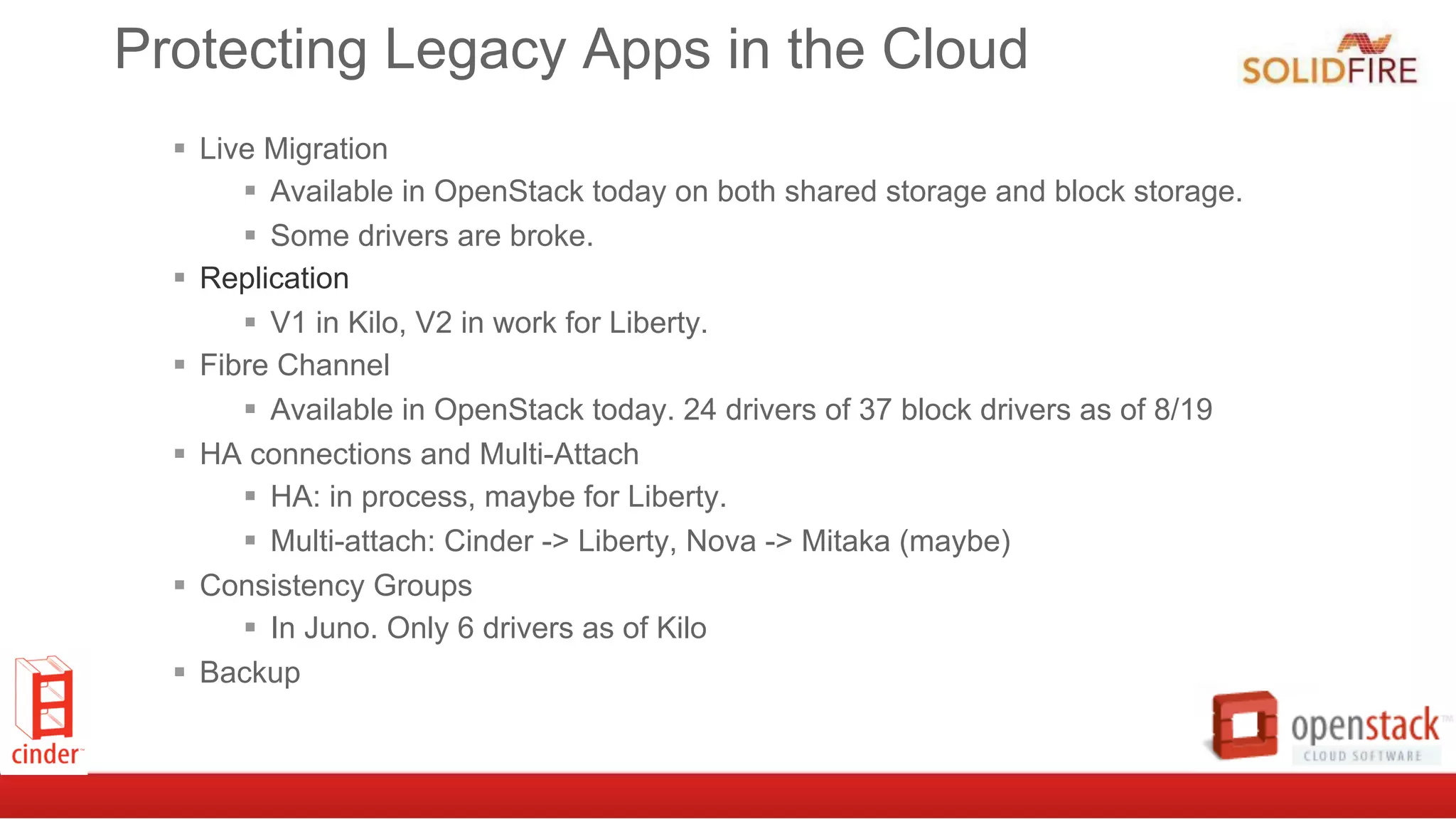

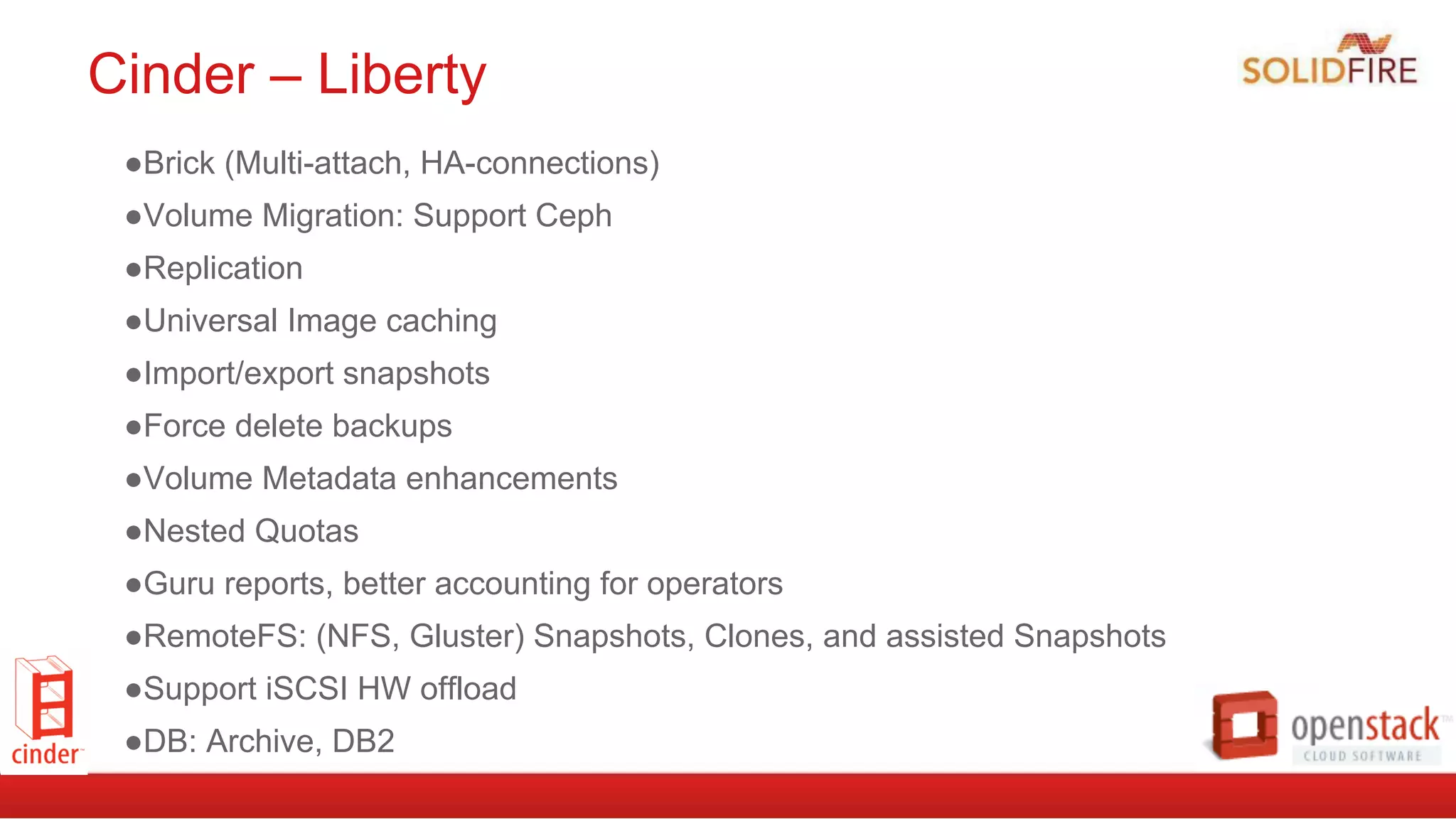

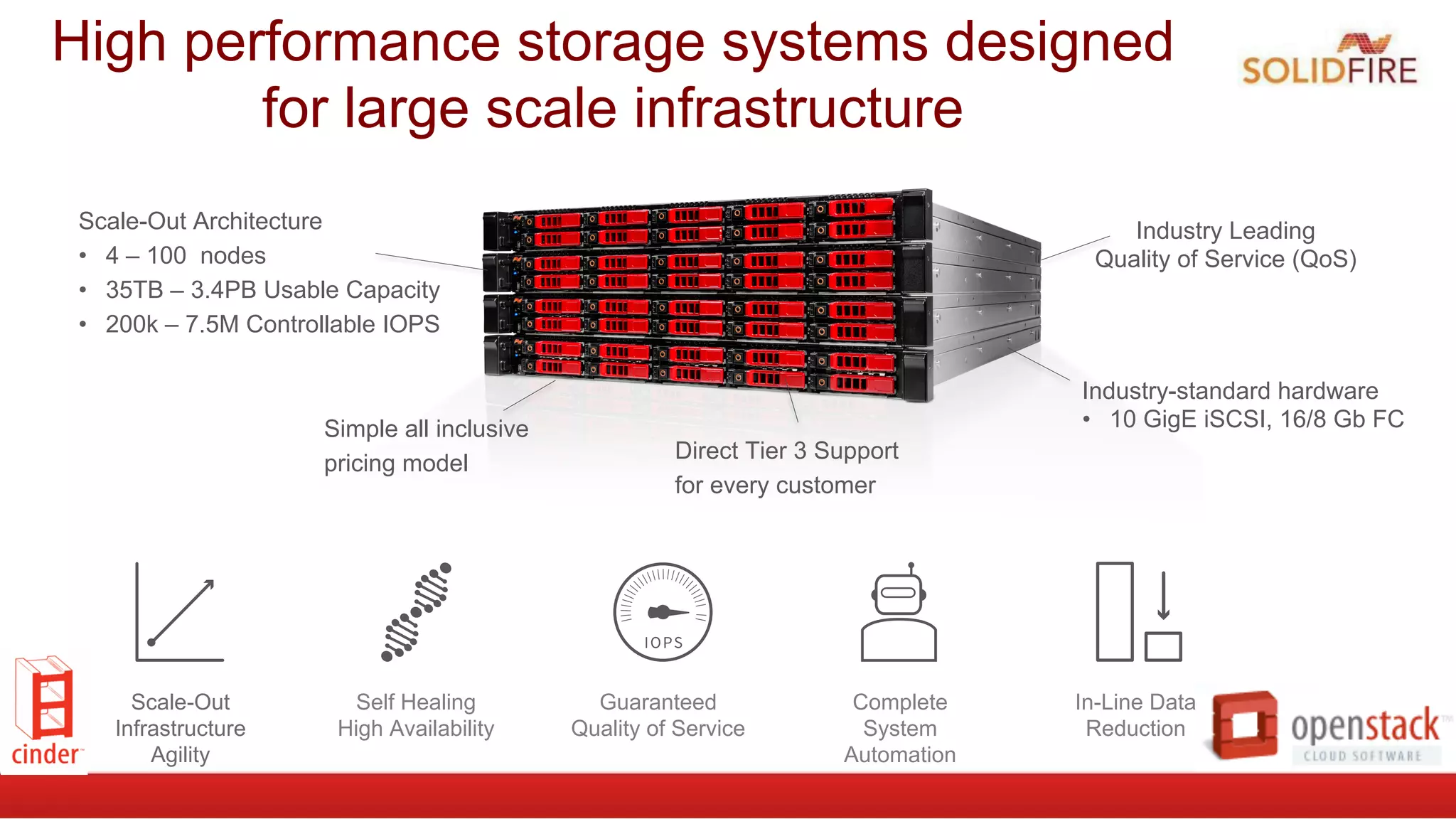

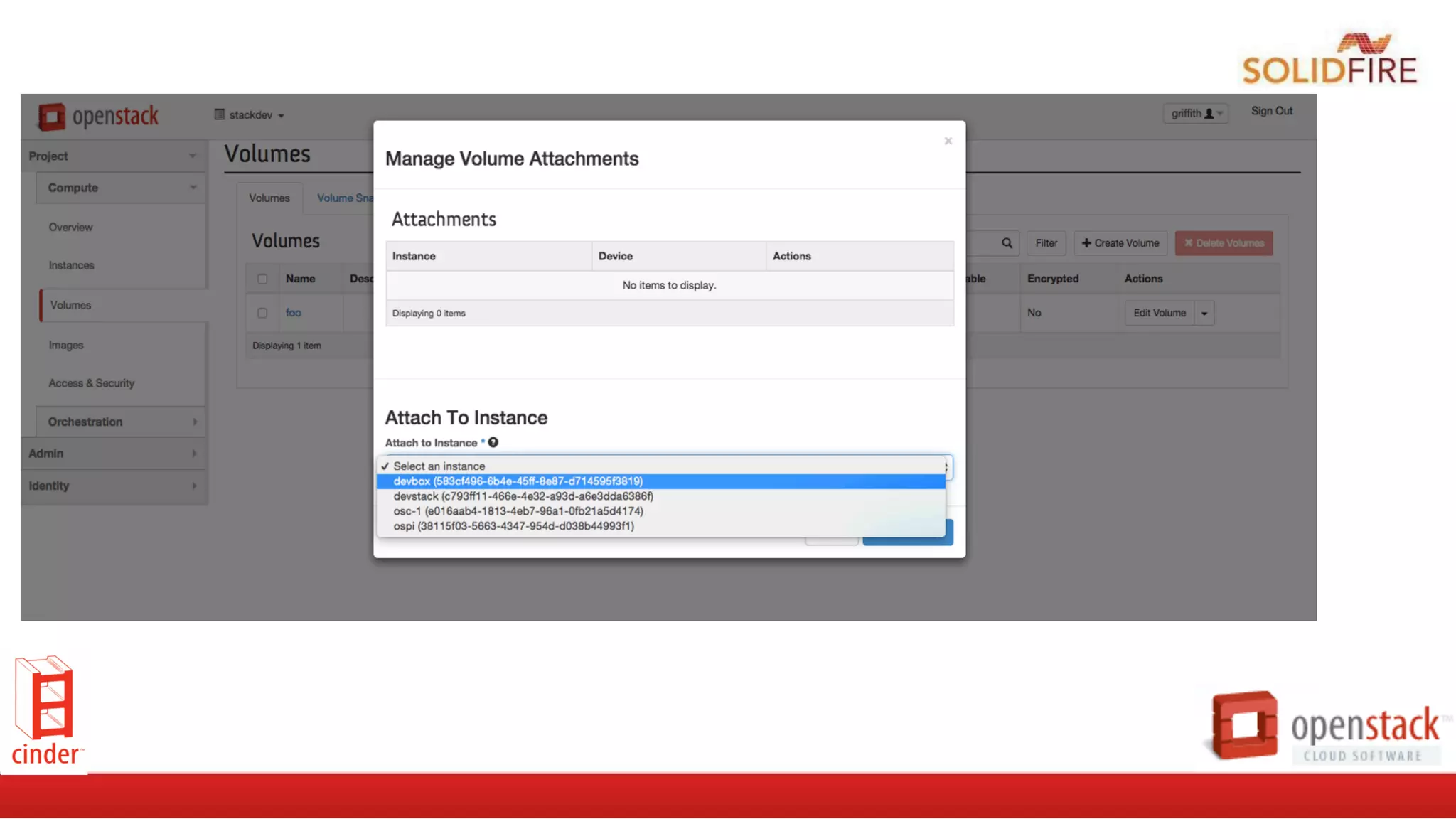

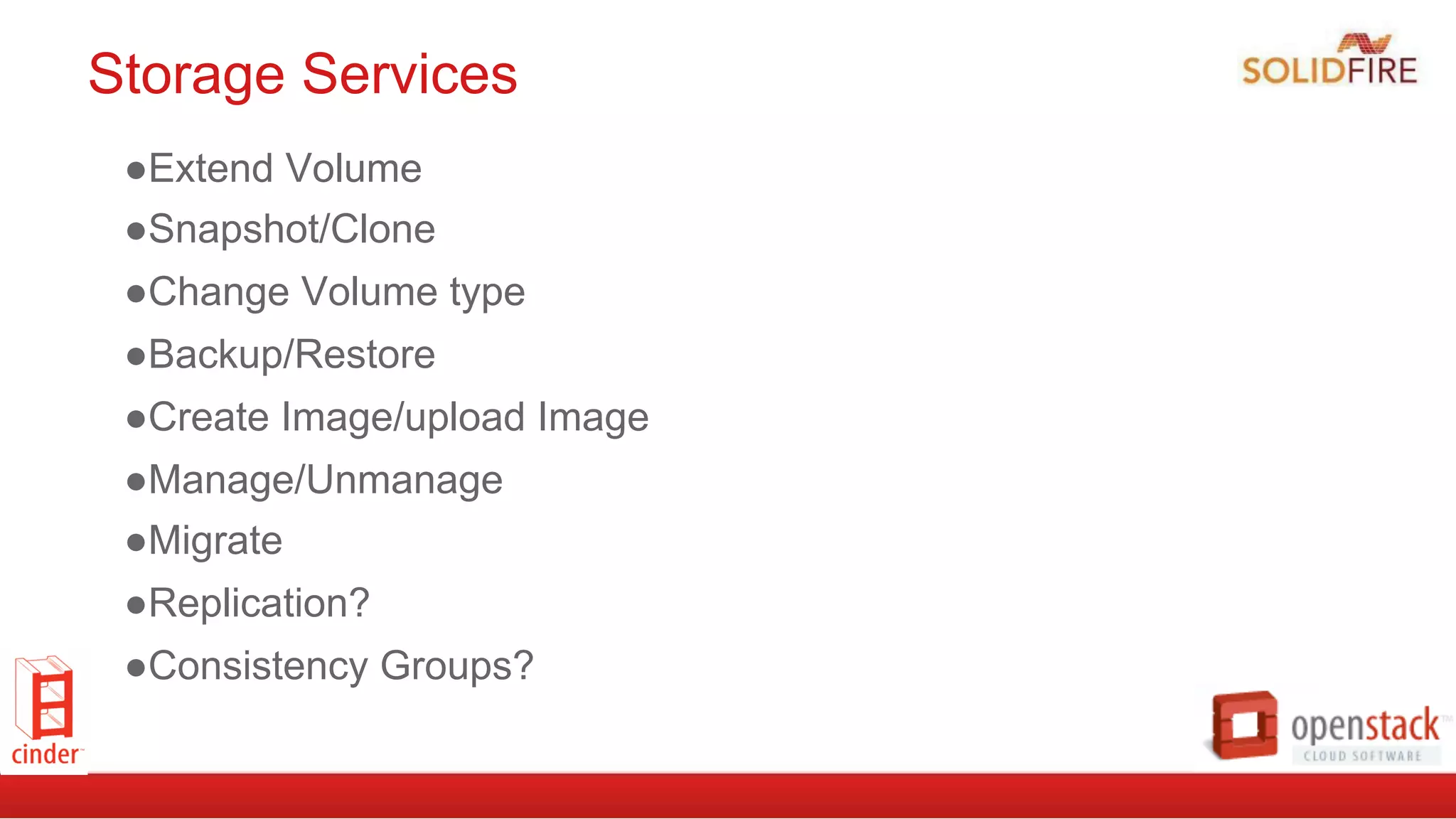

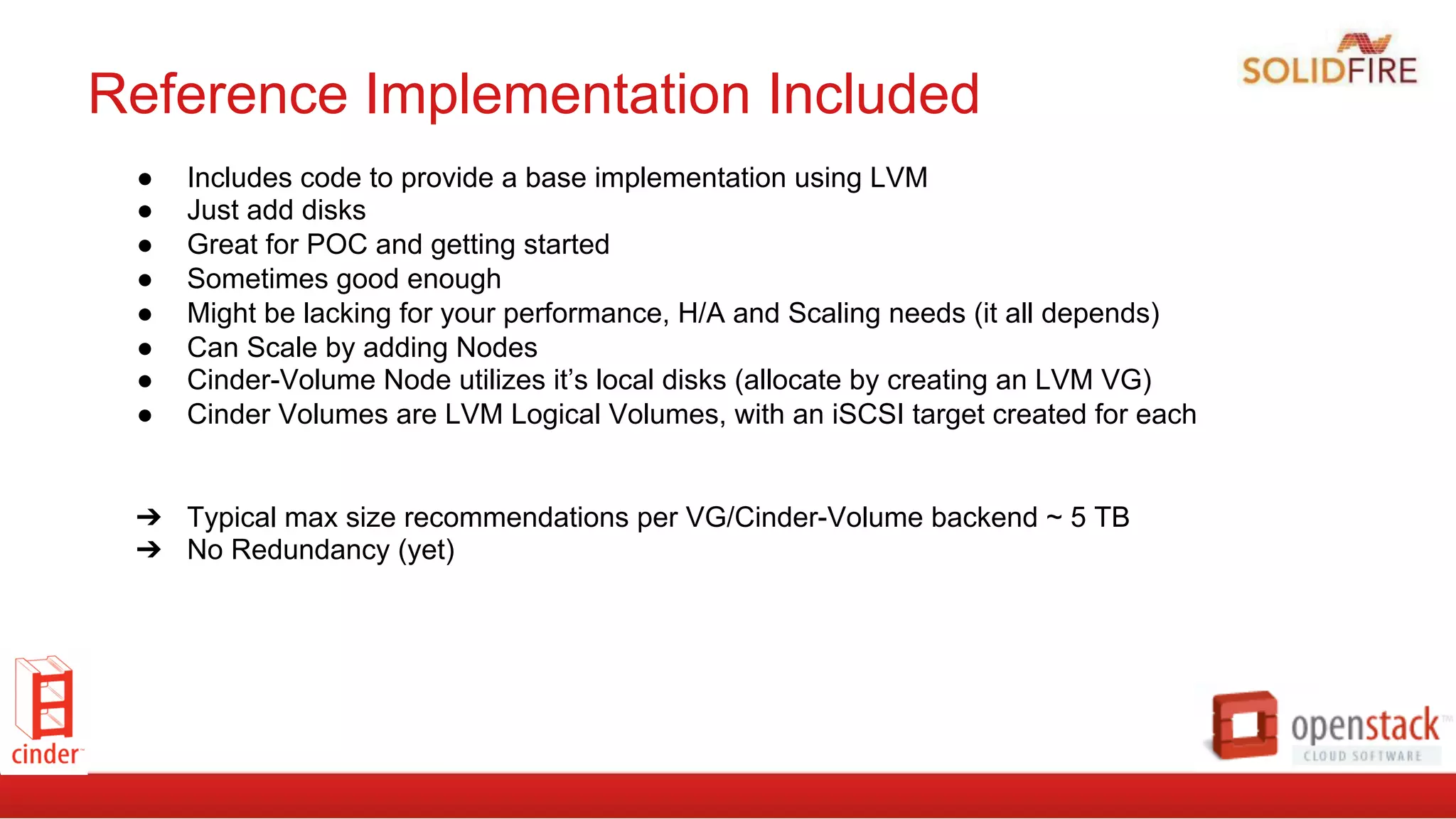

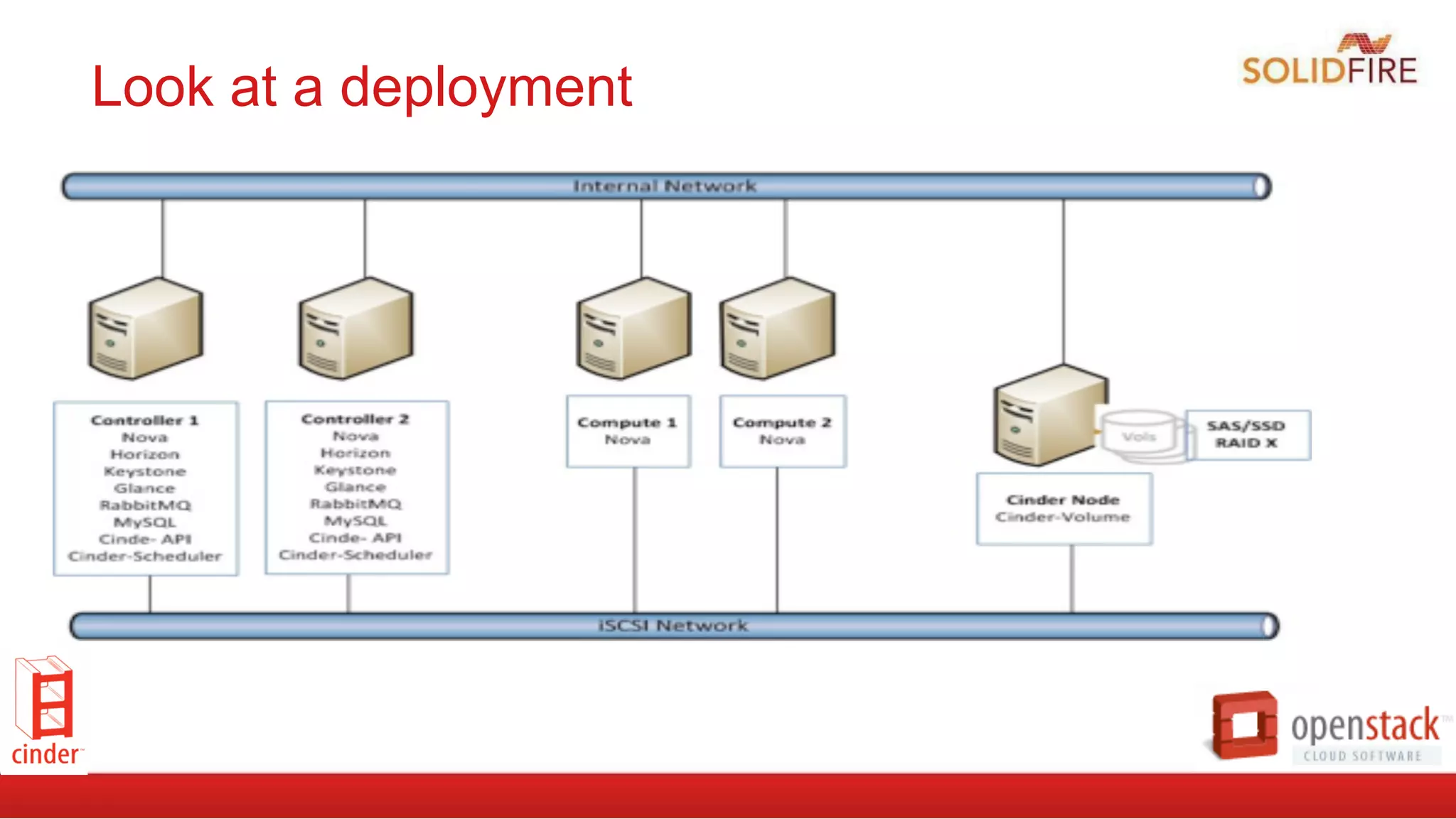

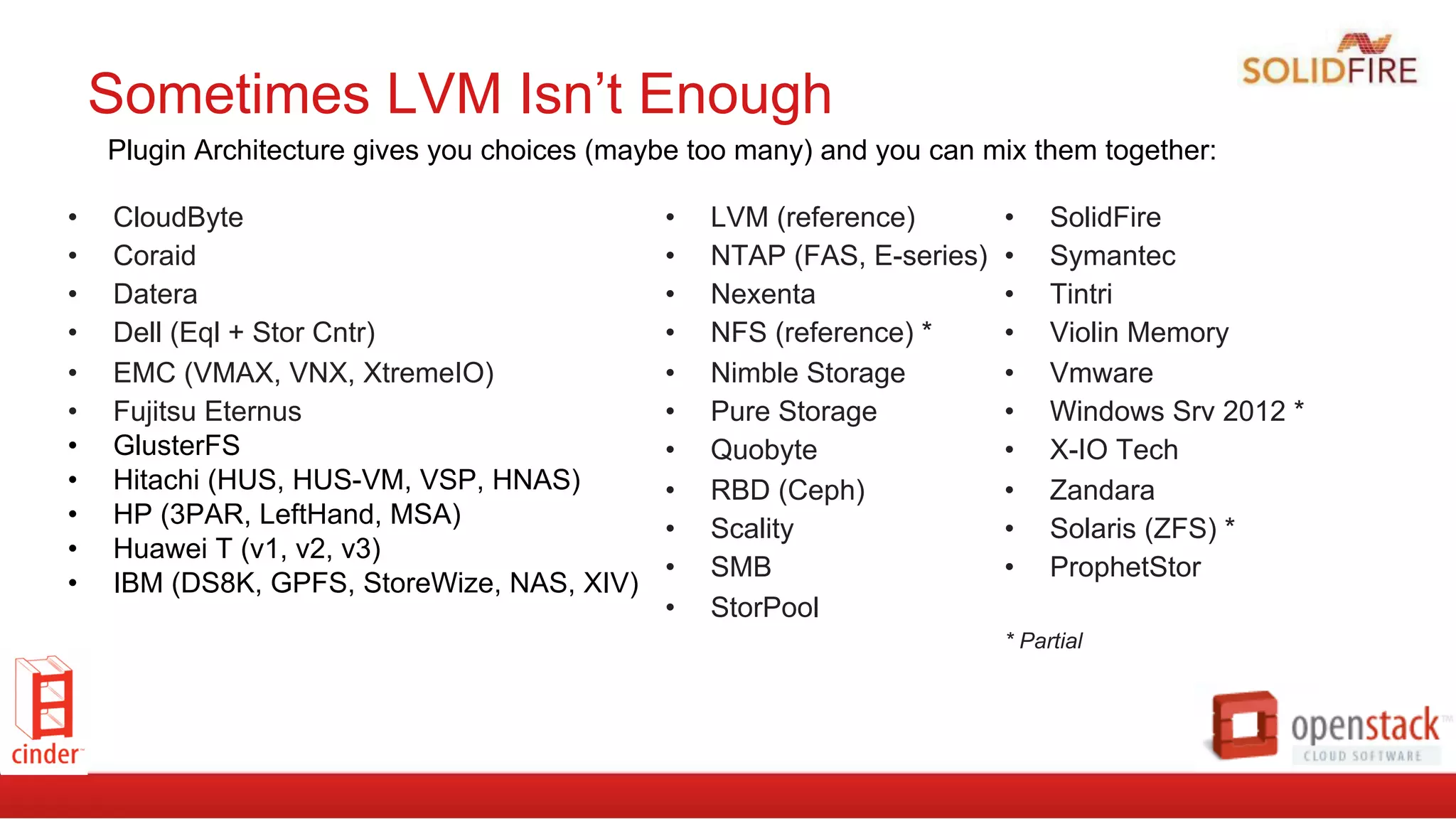

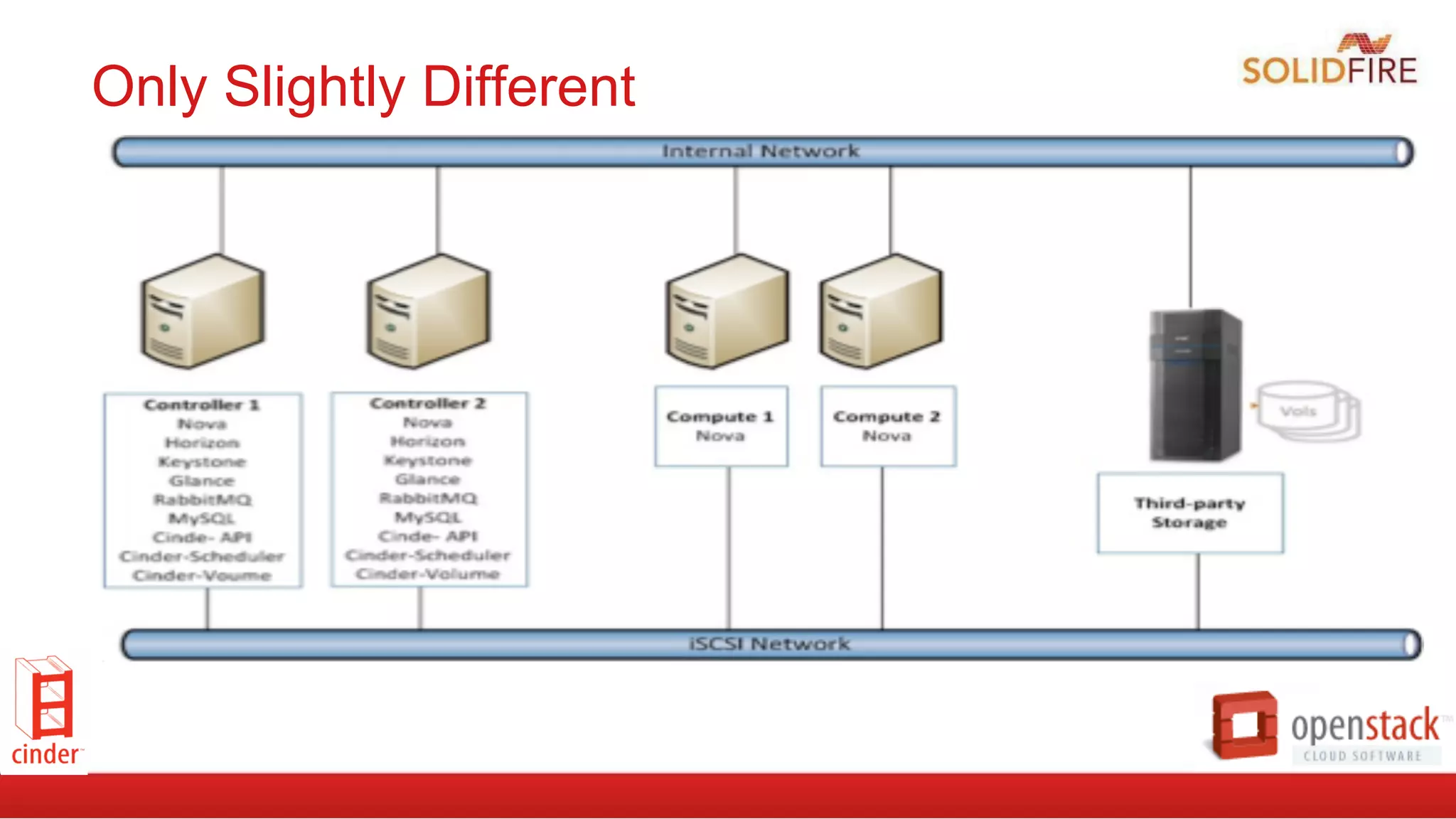

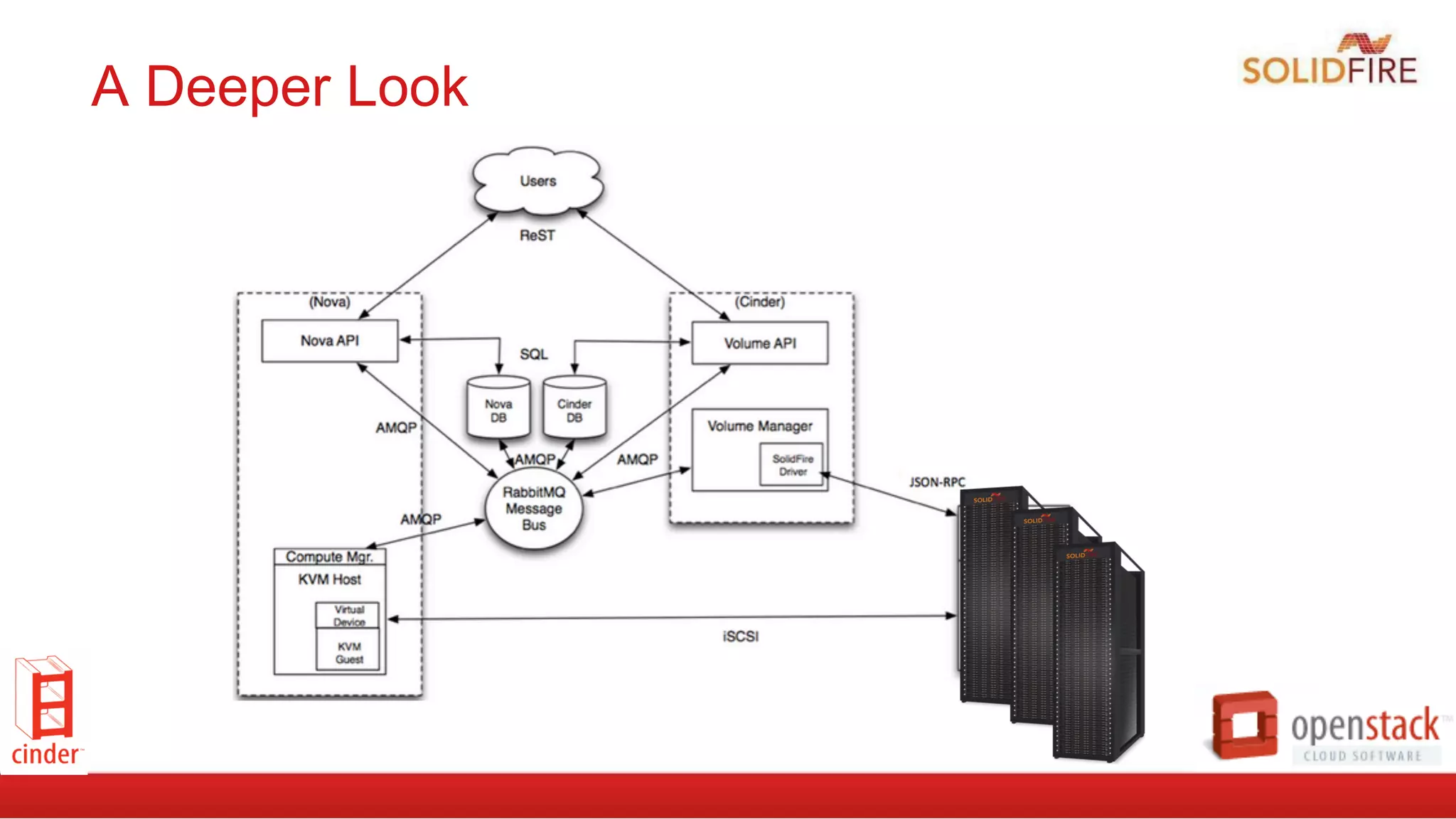

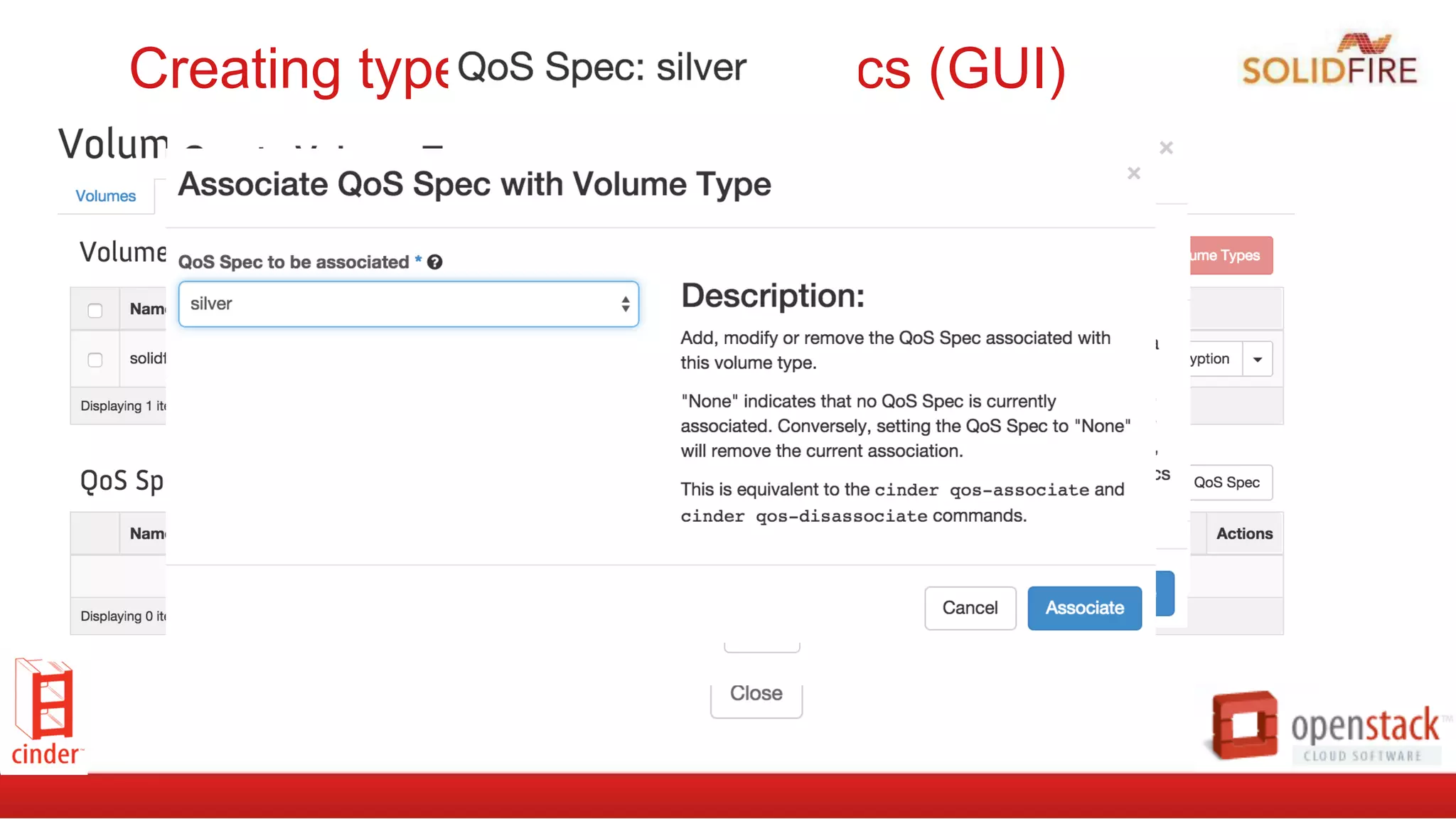

This document discusses current OpenStack Block Storage (Cinder) implementations, emerging trends, and where Cinder is headed. It gives an overview of Cinder's mission, providing on-demand, self-service block storage, and of the plugin architecture through which Cinder supports a variety of backend storage devices. It also covers common storage types in OpenStack and looks at specific Cinder features, configurations, and the user experience. The document concludes by exploring how Cinder may evolve to better support enterprise applications and by looking at upcoming changes in the Liberty release.
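The plugin idea can be illustrated with a toy model: every backend implements one common driver interface, and a backend-agnostic service calls whichever driver configuration selects. This is a minimal sketch, not Cinder's code; `VolumeDriver`, `LVMDriver`, `SolidFireDriver`, `VolumeService`, `load_driver`, and the `DRIVERS` registry are all hypothetical. Cinder itself loads the class named by the dotted import path in the `volume_driver` option, while this sketch uses a plain dictionary.

```python
from abc import ABC, abstractmethod


class VolumeDriver(ABC):
    """Interface every backend plugin implements (hypothetical, simplified)."""

    @abstractmethod
    def create_volume(self, name: str, size_gb: int) -> str:
        """Create a volume and return a backend-specific location string."""


class LVMDriver(VolumeDriver):
    def __init__(self, volume_group: str) -> None:
        self.volume_group = volume_group

    def create_volume(self, name: str, size_gb: int) -> str:
        # A real LVM driver would carve out a logical volume; we only build its path.
        return f"/dev/{self.volume_group}/{name}"


class SolidFireDriver(VolumeDriver):
    def __init__(self, san_ip: str) -> None:
        self.san_ip = san_ip

    def create_volume(self, name: str, size_gb: int) -> str:
        # A real SAN driver would call the array's management API here;
        # the returned string is a made-up placeholder location.
        return f"{self.san_ip}:{name}"


# Hypothetical registry keyed by a short backend name.
DRIVERS = {"lvm": LVMDriver, "solidfire": SolidFireDriver}


def load_driver(kind: str, **options: str) -> VolumeDriver:
    """Instantiate the driver selected by configuration."""
    driver_cls = DRIVERS.get(kind)
    if driver_cls is None:
        raise ValueError(f"unknown backend driver: {kind!r}")
    return driver_cls(**options)


class VolumeService:
    """Backend-agnostic front end: callers never talk to a driver directly."""

    def __init__(self, driver: VolumeDriver) -> None:
        self.driver = driver

    def create(self, name: str, size_gb: int) -> str:
        # Generic validation lives here once, instead of in every driver.
        if size_gb <= 0:
            raise ValueError("size_gb must be positive")
        return self.driver.create_volume(name, size_gb)
```

Supporting a new storage device then means writing one more driver class and registering it, without touching the service layer that users interact with.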

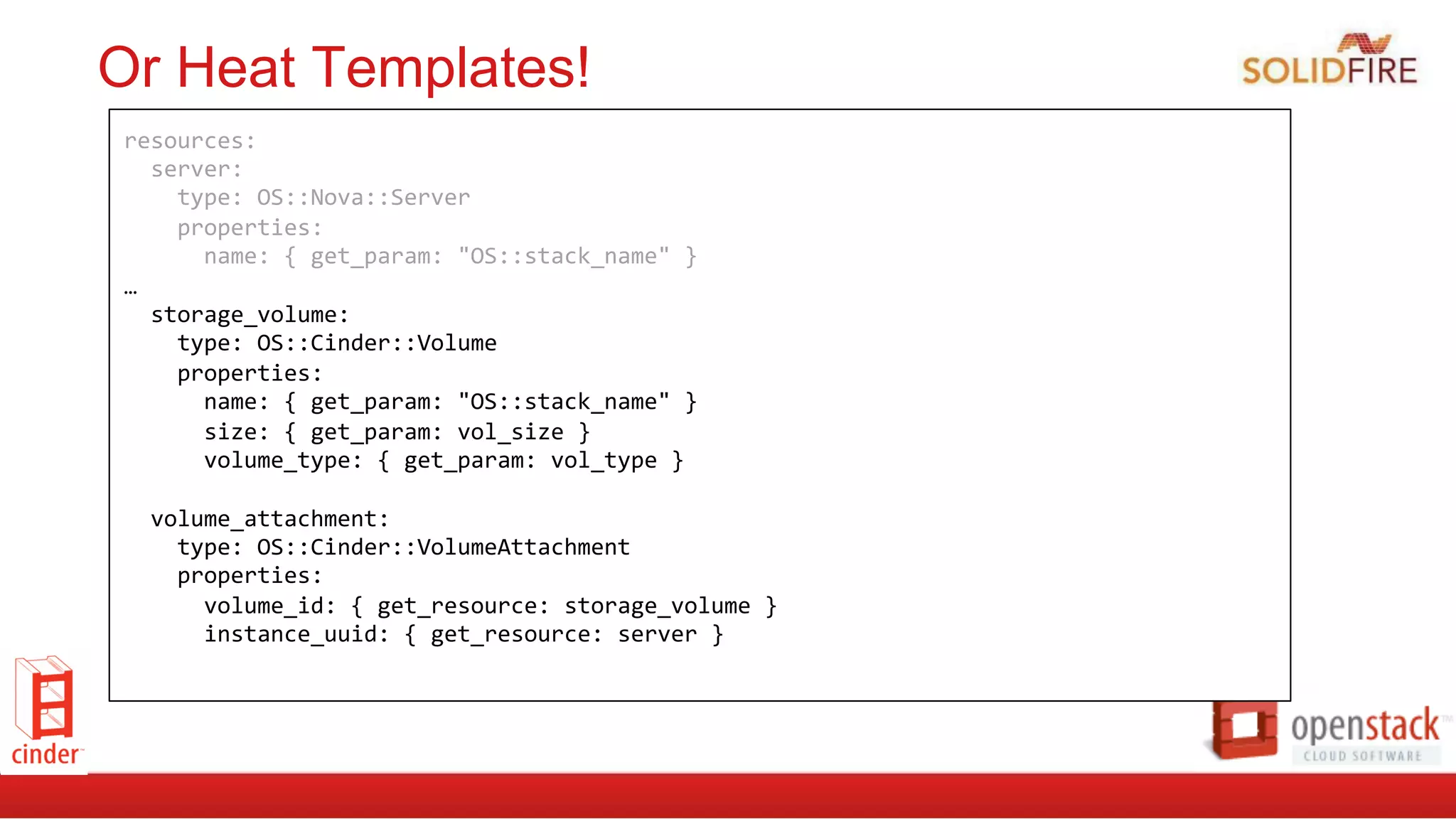

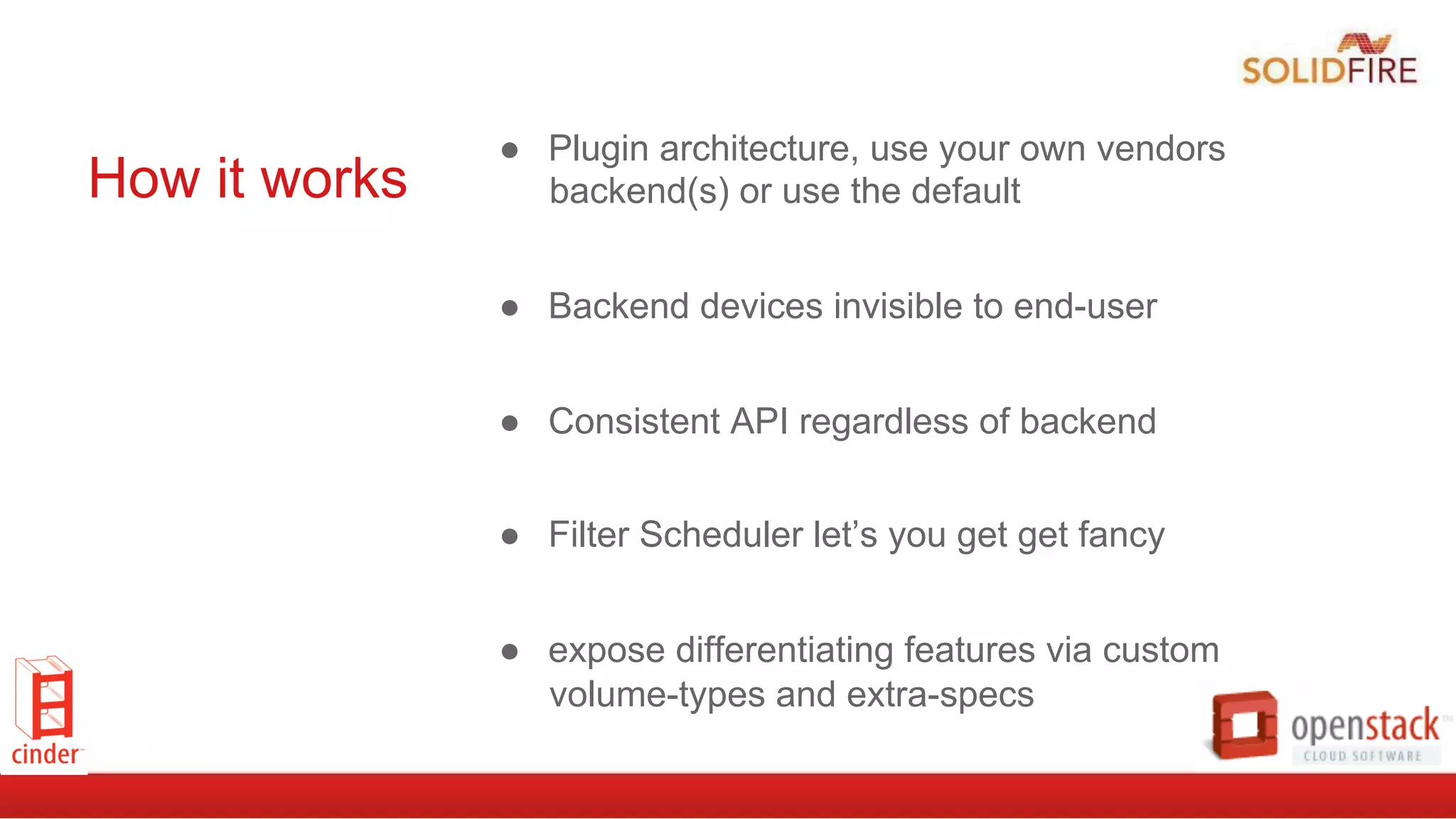

![cinder.conf file entries (# Append to /etc/cinder.conf):
enabled_backends=lvm,solidfire
default_volume_type=SolidFire
[lvm]
volume_group=cinder_volumes
volume_driver=cinder.volume.drivers.lvm.LVMISCSIDriver
volume_backend_name=LVM_iSCSI
[solidfire]
volume_driver=cinder.volume.drivers.solidfire.SolidFire
san_ip=192.168.138.180
san_login=admin
san_password=solidfire
volume_backend_name=SolidFire](https://image.slidesharecdn.com/cinder-meetups-baldufv3-150917162250-lva1-app6892/75/OpenStack-Cinder-Implementation-Today-and-New-Trends-for-Tomorrow-13-2048.jpg)
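To show how the cinder.conf entries on this slide fit together, the sketch below parses the same settings with Python's `configparser` and works out which backend a volume of a given type could be scheduled to. Two assumptions apply: on a typical install the file lives at `/etc/cinder/cinder.conf`, and `enabled_backends` and `default_volume_type` are `[DEFAULT]` options, so the sketch places them there explicitly (appending them to the end of an existing file would put them in whatever section came last). The `VOLUME_TYPES` mapping is hypothetical; an administrator would create such types with `cinder type-create` and tie each one to a backend with `cinder type-key <type> set volume_backend_name=<name>`. `candidate_backends` is a simplified stand-in for Cinder's scheduler, not its actual filter code.

```python
import configparser

# The slide's entries, with the two global options placed under [DEFAULT].
CINDER_CONF = """
[DEFAULT]
enabled_backends = lvm,solidfire
default_volume_type = SolidFire

[lvm]
volume_group = cinder_volumes
volume_driver = cinder.volume.drivers.lvm.LVMISCSIDriver
volume_backend_name = LVM_iSCSI

[solidfire]
volume_driver = cinder.volume.drivers.solidfire.SolidFire
san_ip = 192.168.138.180
san_login = admin
san_password = solidfire
volume_backend_name = SolidFire
"""

# Hypothetical volume types and their extra specs, as an admin might define them.
VOLUME_TYPES = {
    "SolidFire": {"volume_backend_name": "SolidFire"},
    "LVM": {"volume_backend_name": "LVM_iSCSI"},
}


def parse_conf(text: str) -> configparser.ConfigParser:
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return parser


def backend_names(parser: configparser.ConfigParser) -> dict:
    """Map each enabled backend section to its volume_backend_name."""
    enabled = parser["DEFAULT"].get("enabled_backends", "")
    sections = [s.strip() for s in enabled.split(",") if s.strip()]
    return {s: parser[s]["volume_backend_name"] for s in sections}


def candidate_backends(parser, volume_types, type_name=None):
    """Return the enabled sections a volume of the given type could land on.

    A type whose extra specs set volume_backend_name only matches backends
    advertising that name; with no type given, default_volume_type is used.
    A type without that extra spec matches every enabled backend.
    """
    type_name = type_name or parser["DEFAULT"].get("default_volume_type")
    wanted = volume_types.get(type_name, {}).get("volume_backend_name")
    names = backend_names(parser)
    return sorted(s for s, n in names.items() if wanted is None or n == wanted)
```

Because `default_volume_type` is SolidFire, a volume created without an explicit type lands on the `[solidfire]` backend, while the LVM type steers it to `[lvm]`.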

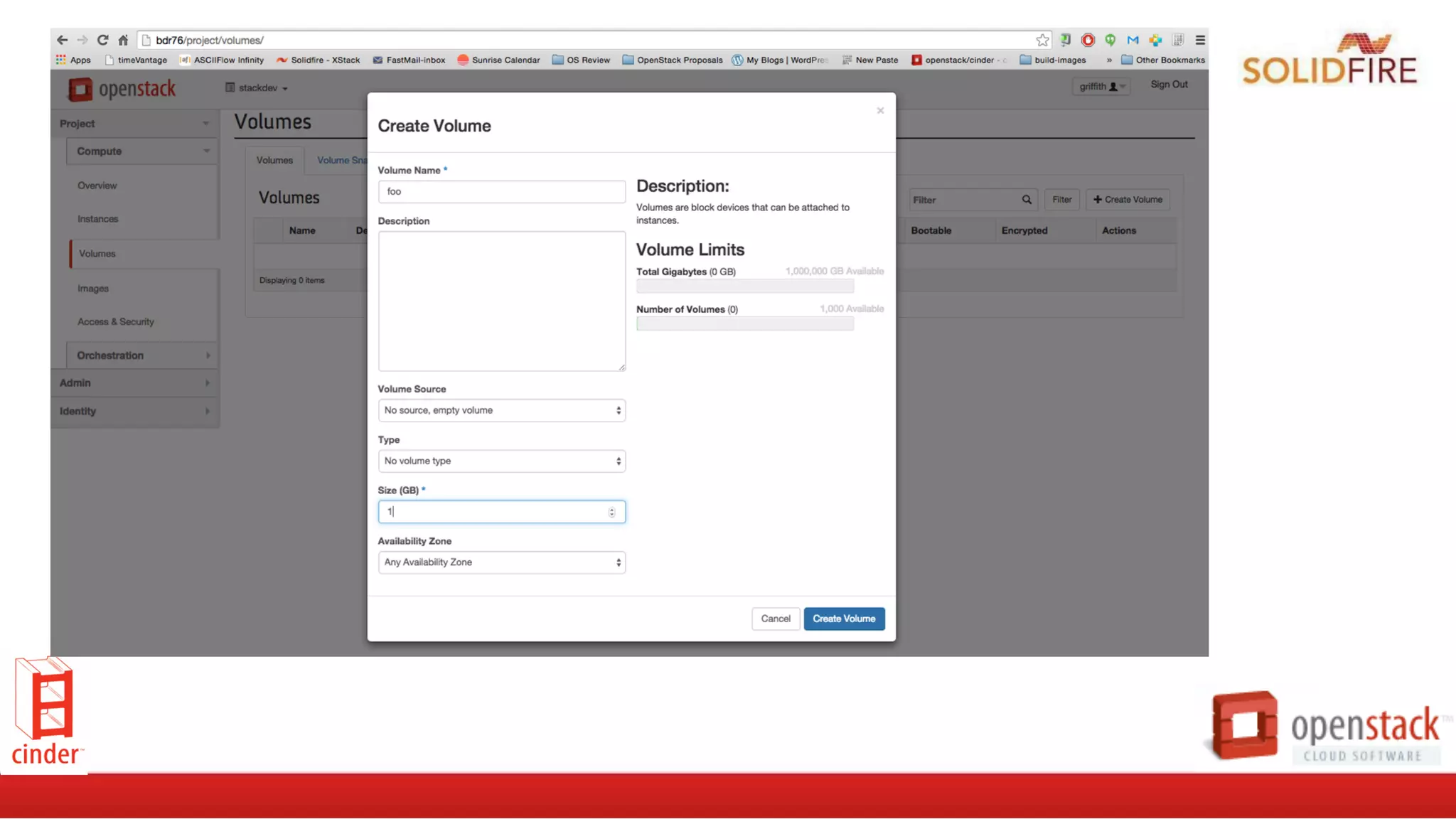

![Web UIs are neat, but automation rules!
ebalduf@bdr76 ~ $ cinder create --volume-type SF-Silver --display-name MyVolume 100
or in code:
\>>> from cinderclient.v2 import client
\>>> cc = client.Client(USER, PASS, TENANT, AUTH_URL, service_type="volume")
\>>> cc.volumes.create(100, volume_type="f76f2fbf-f5cf-474f-9863-31f170a29b74")
[...]](https://image.slidesharecdn.com/cinder-meetups-baldufv3-150917162250-lva1-app6892/75/OpenStack-Cinder-Implementation-Today-and-New-Trends-for-Tomorrow-17-2048.jpg)
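The slide's point is that anything done in the web UI can be scripted. As a hedged sketch of such automation, the helpers below assume `cc` is a client built as on the slide and rely only on `cc.volumes.create(size, name=..., volume_type=...)` and `cc.volumes.get(volume_id).status` from python-cinderclient's v2 volume manager. `provision_volumes`, `wait_until_available`, and the `MyVolume-NN` naming scheme are hypothetical. Taking the client as an argument keeps the logic testable against a stand-in object, without a live cloud.

```python
import time


def wait_until_available(cc, volume_id, timeout=300.0, poll_interval=2.0):
    """Poll one volume until it is 'available'; raise on 'error' or timeout."""
    deadline = time.monotonic() + timeout
    while True:
        status = cc.volumes.get(volume_id).status
        if status == "available":
            return
        if status == "error":
            raise RuntimeError(f"volume {volume_id} went into the error state")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"volume {volume_id} still {status!r} after {timeout}s")
        time.sleep(poll_interval)


def provision_volumes(cc, count, size_gb, volume_type, prefix="MyVolume",
                      timeout=300.0, poll_interval=2.0):
    """Create `count` volumes of one type, then wait for all of them.

    Every create request is issued before polling starts, so the backend can
    work on the volumes concurrently. `timeout` applies to each volume.
    """
    if count < 1:
        raise ValueError("count must be at least 1")
    volumes = [
        cc.volumes.create(size_gb, name=f"{prefix}-{i:02d}", volume_type=volume_type)
        for i in range(1, count + 1)
    ]
    for vol in volumes:
        wait_until_available(cc, vol.id, timeout, poll_interval)
    return volumes
```

For example, `provision_volumes(cc, 5, 100, "SF-Silver")` would request five 100 GB volumes of the SF-Silver type, named MyVolume-01 through MyVolume-05, and return once all of them are available.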