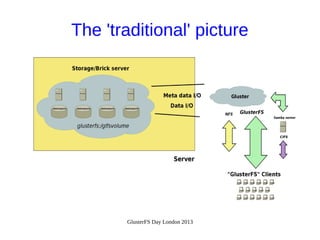

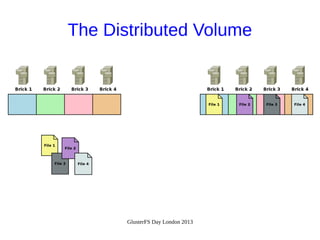

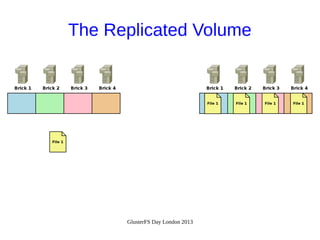

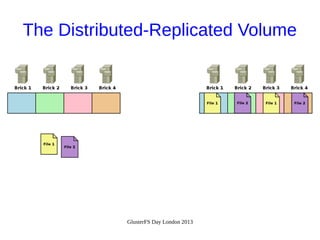

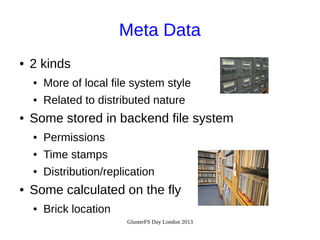

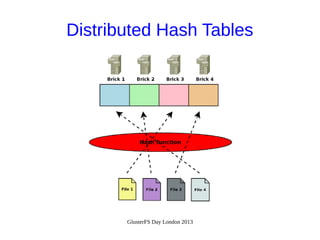

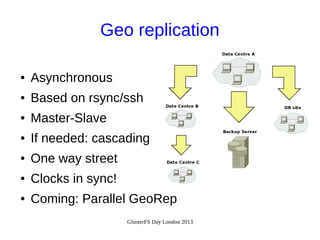

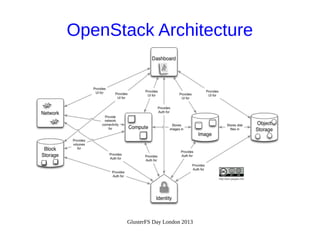

The document presents a talk by Dr. Udo Seidel on using GlusterFS as an alternative to Swift, OpenStack's object storage service, and details its architecture, features, and integration capabilities. The talk covers the evolution of GlusterFS, its distributed storage model, and the advantages of using it within the OpenStack ecosystem. Key takeaways include its modular architecture, its operational readiness, and its active community support.