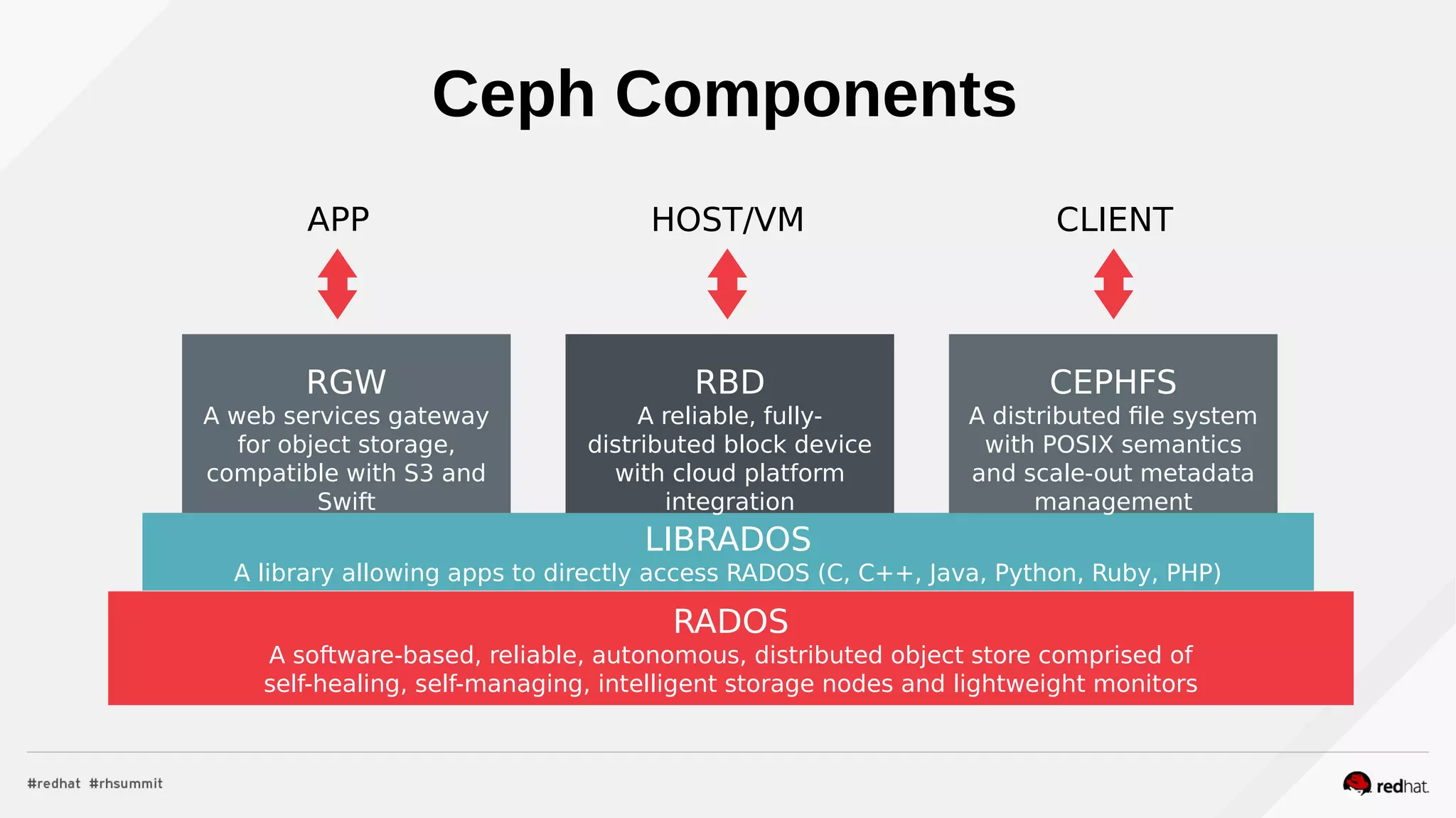

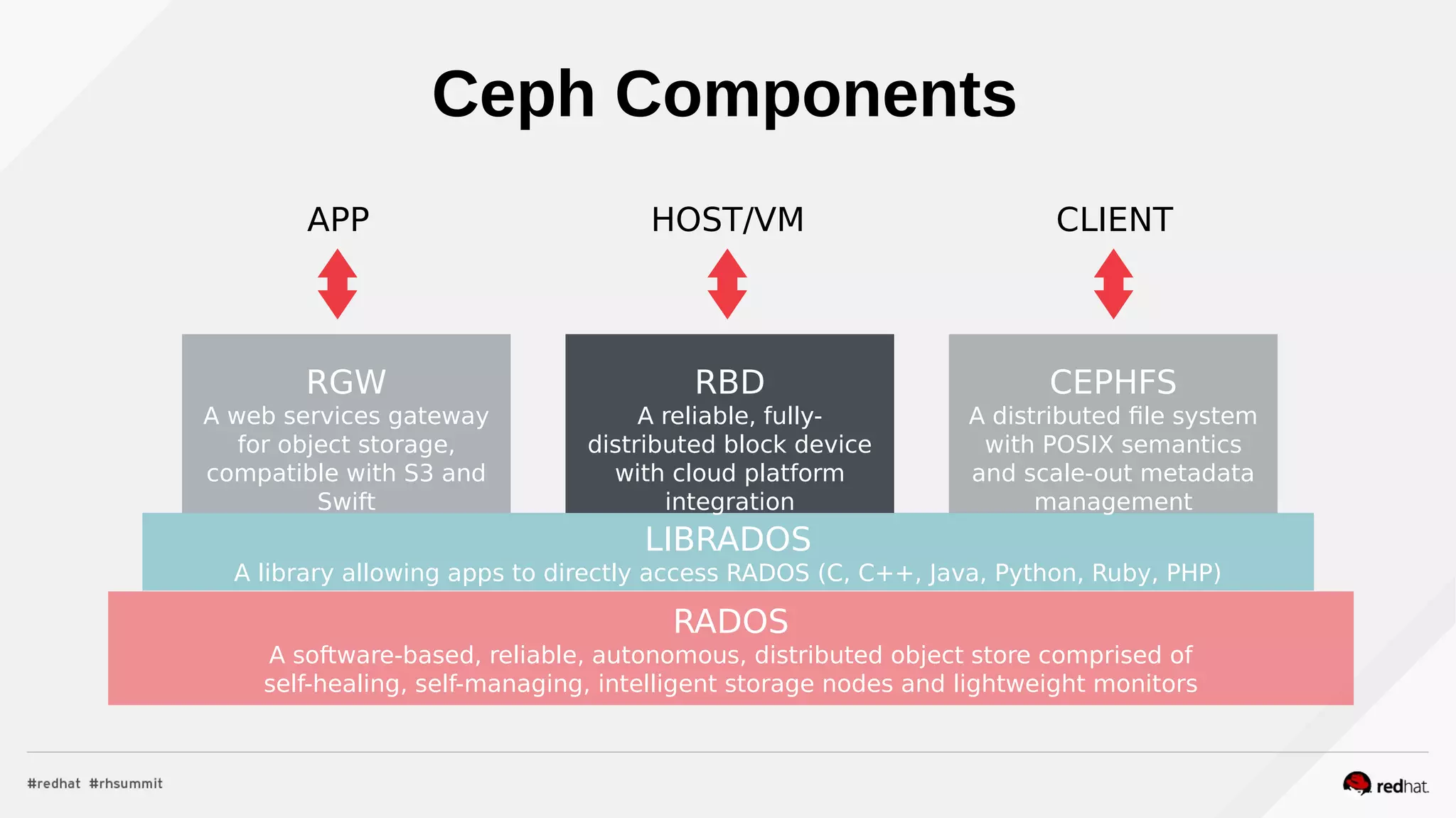

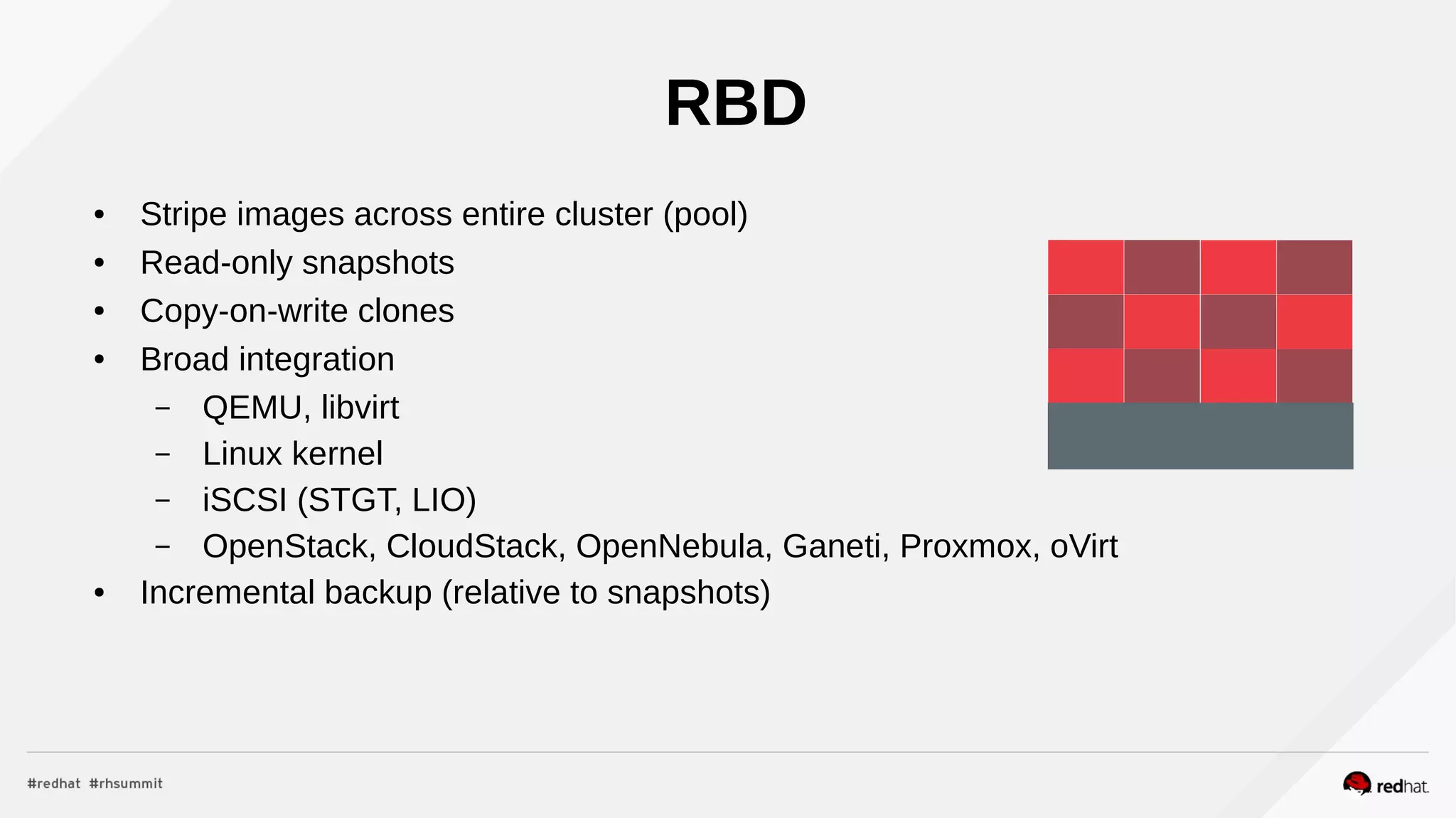

The document provides an in-depth overview of Ceph block devices, detailing their architecture, components, and functionality. It emphasizes Ceph's key design principles of horizontal scalability, hardware agnosticism, and self-management, and describes its essential components: RADOS, RBD, and CephFS. It also covers features such as snapshots, clones, and integration with cloud platforms, as well as plans for future enhancements.

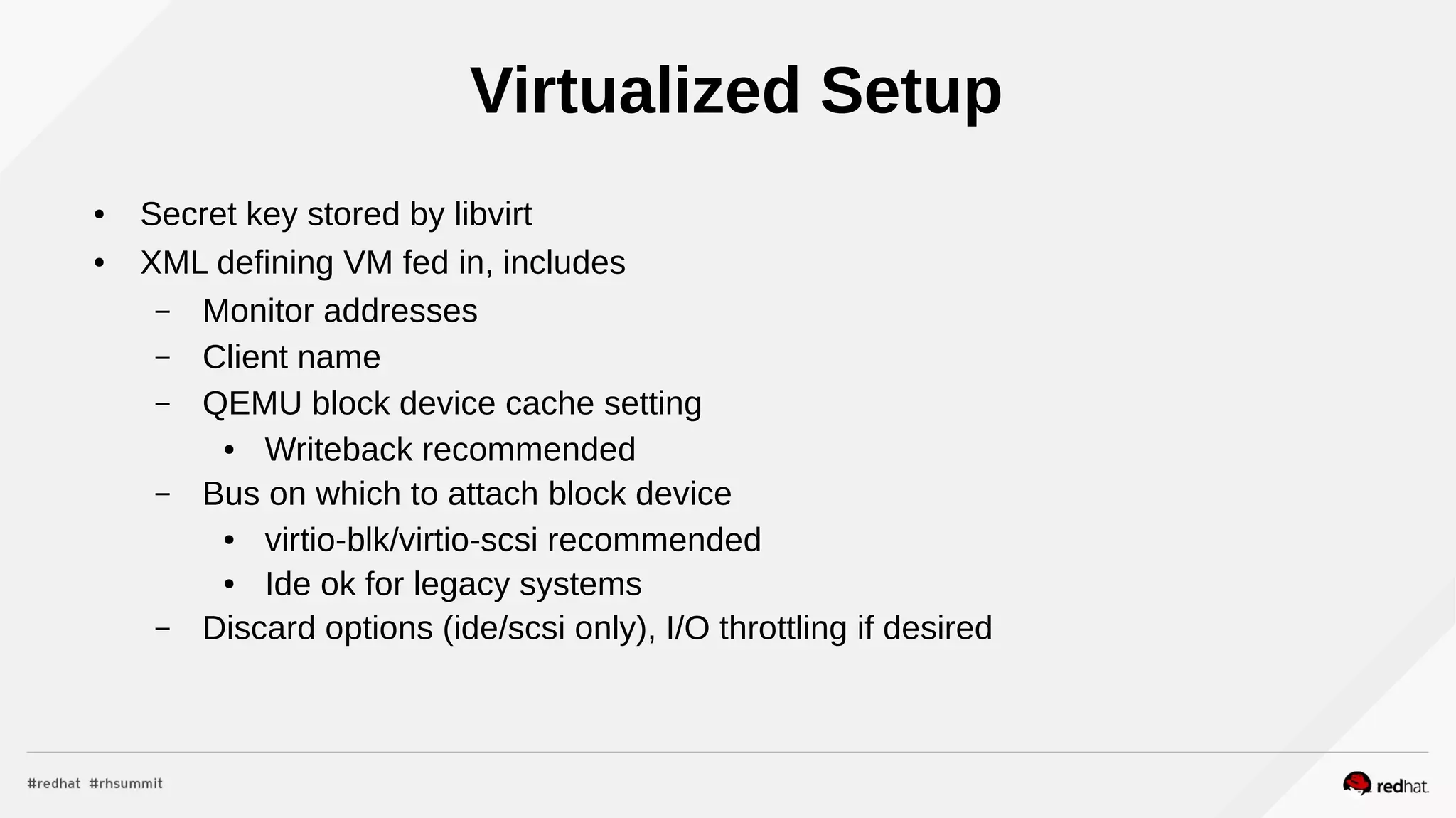

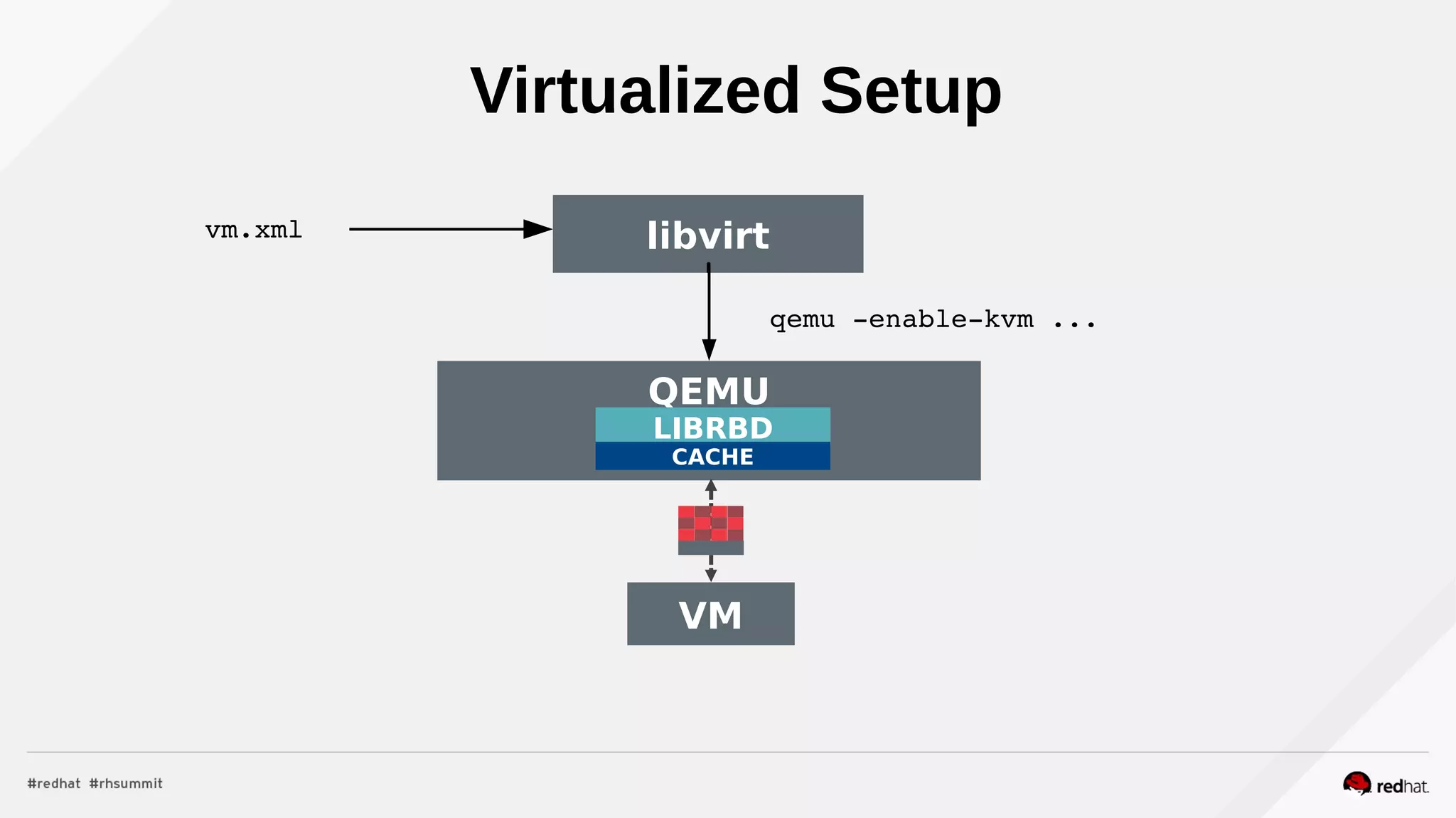

![Snapshots (slide 17)](https://image.slidesharecdn.com/2015-06-24-rhsummitrbddeepdive-150629182622-lva1-app6891/75/Ceph-Block-Devices-A-Deep-Dive-17-2048.jpg)

Snapshots

● Object granularity

● Snapshot context: [list of snap ids, latest snap id]

– Stored in the rbd image header (self-managed)

– Sent with every write

● Snapshot ids managed by monitors

● Snapshots deleted asynchronously

● RADOS keeps per-object overwrite stats, so diffs are easy
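To make the snapshot context bullets concrete, here is a minimal Python sketch, assuming a simplified in-memory model. `SnapContext`, `MonitorSnapAllocator`, and `build_write` are hypothetical names, not librbd or RADOS APIs. The validity rule (the latest snap id is at least as new as every listed snapshot, and ids are strictly descending, newest first) is modeled loosely on how RADOS treats snap contexts.

```python
from dataclasses import dataclass
from typing import Any, Dict, Tuple


@dataclass(frozen=True)
class SnapContext:
    """Toy snap context in the slides' notation: (snap ids, latest snap id)."""
    snaps: Tuple[int, ...] = ()  # existing snapshot ids, newest first
    seq: int = 0                 # latest snap id allocated

    def is_valid(self) -> bool:
        # seq must be at least the newest snap id, and ids strictly descending.
        if self.snaps and self.snaps[0] > self.seq:
            return False
        return all(a > b for a, b in zip(self.snaps, self.snaps[1:]))

    def with_new_snap(self, snap_id: int) -> "SnapContext":
        # A freshly allocated id must be newer than anything seen so far.
        if snap_id <= self.seq:
            raise ValueError("snap ids must increase monotonically")
        return SnapContext((snap_id,) + self.snaps, snap_id)


class MonitorSnapAllocator:
    """Hypothetical stand-in for the monitors handing out snap ids."""

    def __init__(self, last_id: int) -> None:
        self.last_id = last_id

    def allocate(self) -> int:
        self.last_id += 1
        return self.last_id


def build_write(snapc: SnapContext, obj: str, offset: int, data: bytes) -> Dict[str, Any]:
    # Hypothetical request shape: the point is only that every write
    # carries the image's current snap context.
    return {"object": obj, "offset": offset, "data": data, "snapc": snapc}


mon = MonitorSnapAllocator(last_id=7)
snapc = SnapContext((), 7)                   # the slides' ([], 7)
snapc = snapc.with_new_snap(mon.allocate())
print(snapc)                                 # SnapContext(snaps=(8,), seq=8)
```

Because the context is self-managed, the client (here, whoever holds `snapc`) is responsible for storing the updated value in the image header and attaching it to subsequent writes; the monitors only guarantee that ids keep increasing.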

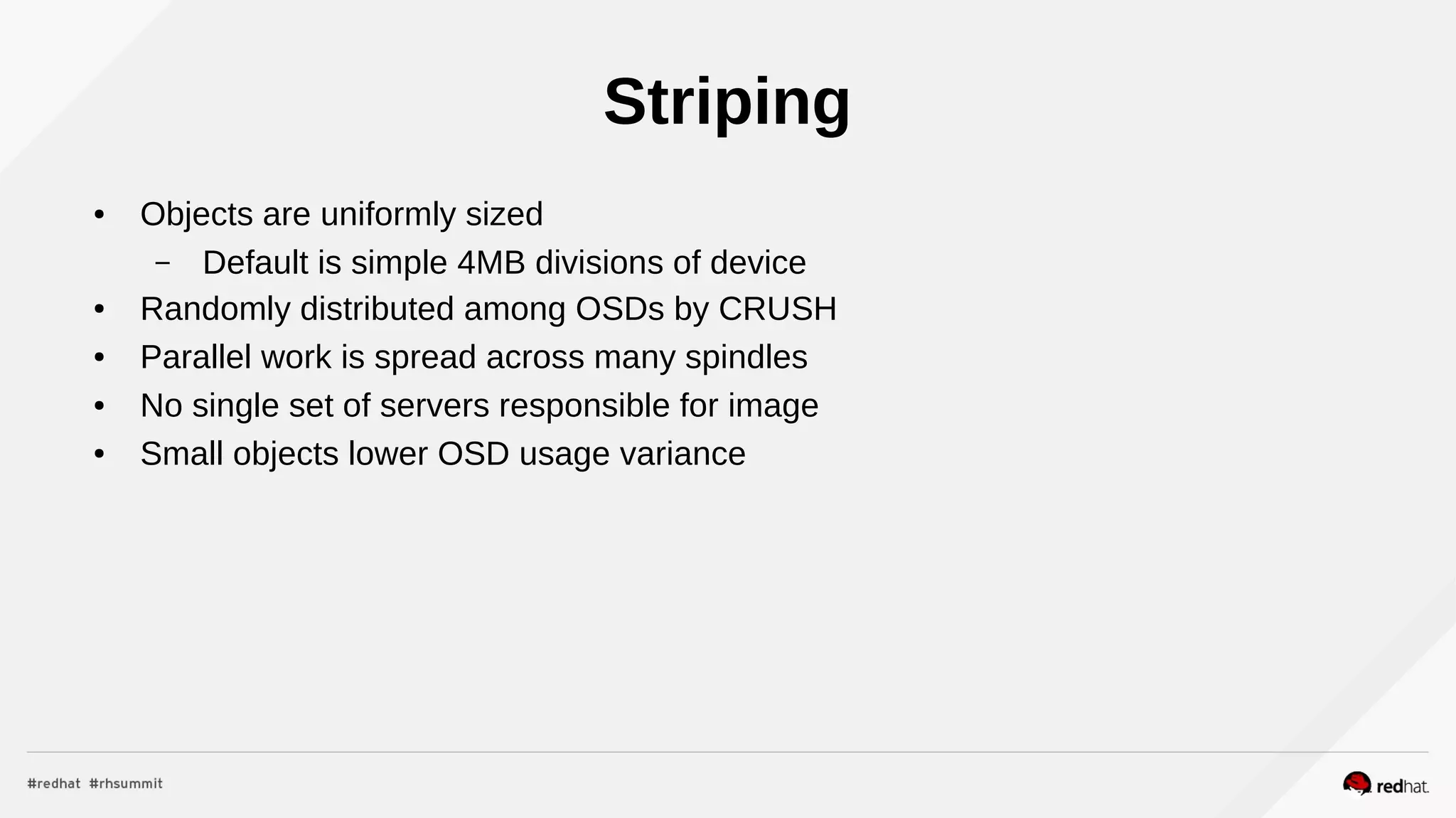

![Snapshots (slide 18)](https://image.slidesharecdn.com/2015-06-24-rhsummitrbddeepdive-150629182622-lva1-app6891/75/Ceph-Block-Devices-A-Deep-Dive-18-2048.jpg)

Snapshots: librbd writes with snap context ([], 7), i.e. an empty snapshot list and latest snap id 7.

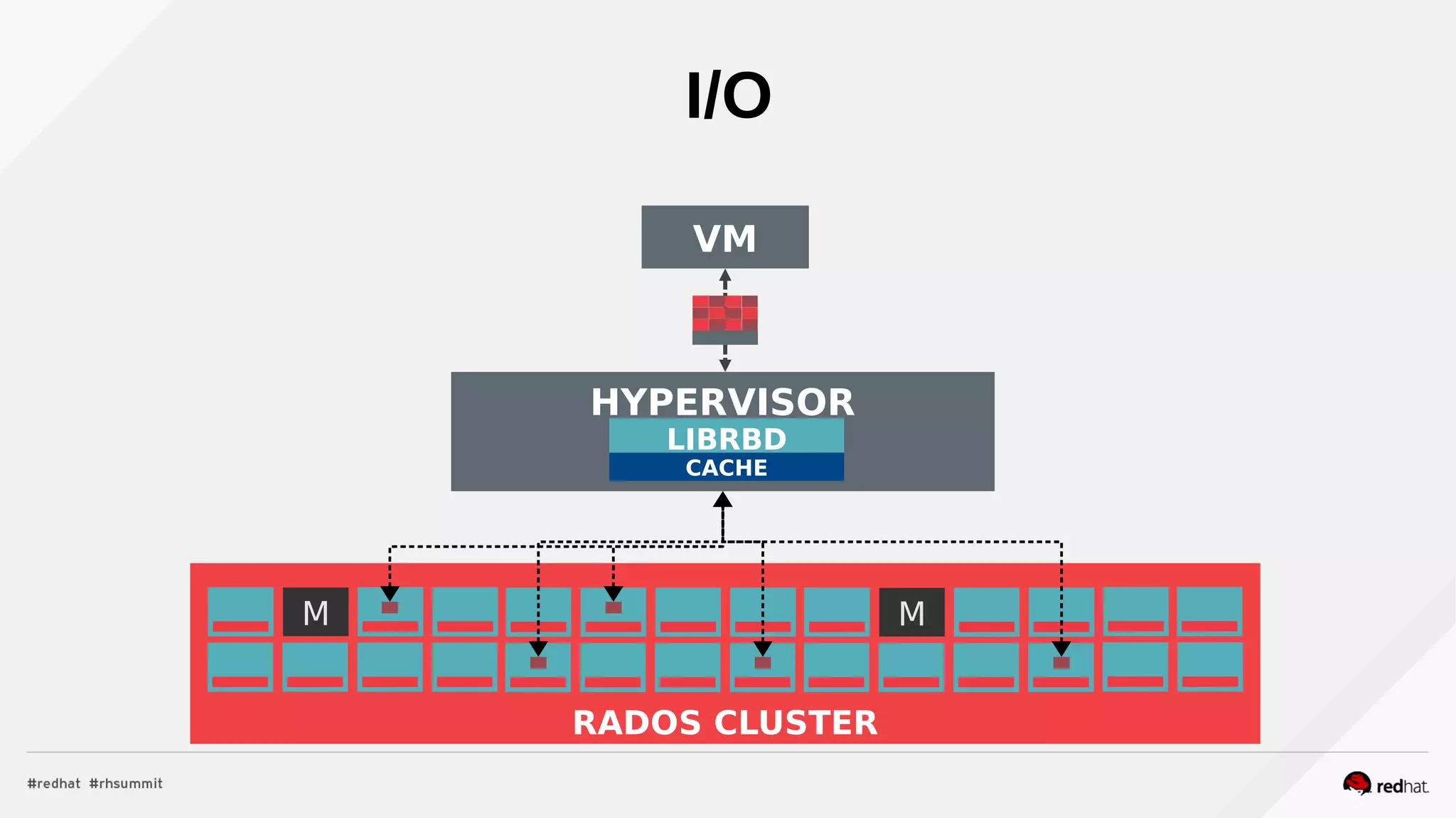

![Snapshots (slide 20)](https://image.slidesharecdn.com/2015-06-24-rhsummitrbddeepdive-150629182622-lva1-app6891/75/Ceph-Block-Devices-A-Deep-Dive-20-2048.jpg)

Snapshots: librbd sets its snap context to ([8], 8), adding the new snapshot id 8.

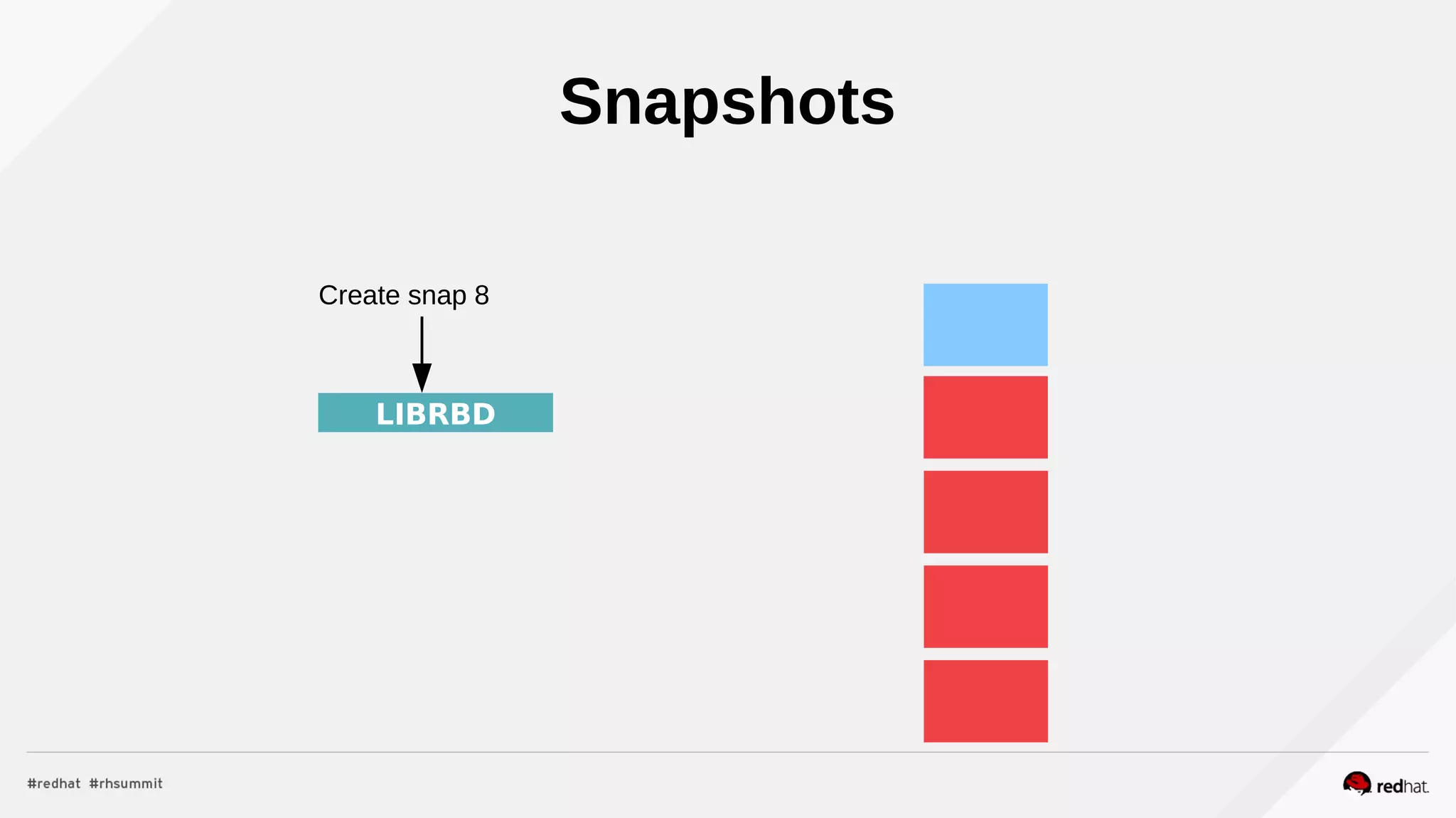

![Snapshots (slide 21)](https://image.slidesharecdn.com/2015-06-24-rhsummitrbddeepdive-150629182622-lva1-app6891/75/Ceph-Block-Devices-A-Deep-Dive-21-2048.jpg)

Snapshots: librbd writes with snap context ([8], 8).

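![Snapshots (slide 22)](https://image.slidesharecdn.com/2015-06-24-rhsummitrbddeepdive-150629182622-lva1-app6891/75/Ceph-Block-Devices-A-Deep-Dive-22-2048.jpg)

Snapshots: the write with ([8], 8) causes the object's previous data to be preserved as snap 8 before the new data is applied.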
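The write sequence on these slides can be replayed with a toy copy-on-write model. This is a sketch under simplifying assumptions, not the OSD implementation: `SimObject` is a hypothetical name, and the model ignores deletes, partial overwrites, clone overlap tracking, and snapshot trimming. A write whose snap context lists snapshots newer than anything the object has seen first preserves the existing data as a clone named after the write's latest snap id, then applies the new data.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class SimObject:
    """Toy model of one RADOS object's head plus its snapshot clones (hypothetical)."""
    head: Optional[bytes] = None
    seq: int = 0        # newest snap context seq this object has seen
    born_seq: int = 0   # seq in effect when the object was first written
    # clone id -> (snap ids the clone serves, preserved data)
    clones: Dict[int, Tuple[Tuple[int, ...], bytes]] = field(default_factory=dict)

    def write(self, data: bytes, snaps: Tuple[int, ...], seq: int) -> None:
        # Snapshots taken since this object last saw a write.
        newer = tuple(s for s in snaps if s > self.seq)
        if self.head is None:
            self.born_seq = seq          # nothing to preserve yet
        elif newer:
            # Copy-on-write: keep the old data, named after the write's seq.
            self.clones[seq] = (newer, self.head)
        self.seq = max(self.seq, seq)
        self.head = data

    def read(self, snap: Optional[int] = None) -> Optional[bytes]:
        if snap is None:
            return self.head
        # The oldest clone whose id is >= snap holds the data as of that snap.
        for clone_id in sorted(self.clones):
            if clone_id >= snap:
                covered, data = self.clones[clone_id]
                return data if snap in covered else None
        # No clone since `snap`: head is valid only if it existed back then.
        return self.head if self.born_seq < snap else None


obj = SimObject()
obj.write(b"v1", snaps=(), seq=7)     # write with ([], 7): no clone
obj.write(b"v2", snaps=(8,), seq=8)   # write with ([8], 8): old data becomes snap 8
print(obj.read(), obj.read(snap=8))   # b'v2' b'v1'
```

A second write with ([8], 8) creates no new clone, since the object has already seen seq 8; only the first write after a snapshot pays the copy cost, which is what keeps snapshots cheap at object granularity.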

![Kernel RBD (slide 37)](https://image.slidesharecdn.com/2015-06-24-rhsummitrbddeepdive-150629182622-lva1-app6891/75/Ceph-Block-Devices-A-Deep-Dive-37-2048.jpg)

Kernel RBD

● rbd map sets everything up (maps the image to a /dev/rbdN block device)

● /etc/ceph/rbdmap is like /etc/fstab

● udev adds handy symlinks:

– /dev/rbd/$pool/$image[@snap]

● Striping v2 and later feature bits are not supported yet

● Can be used to back LIO, NFS, SMB, etc.

● No specialized cache; the page cache is used by the filesystem on top
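For the rbdmap and udev bullets, here is a small Python sketch, assuming rbdmap lines of the form `pool/image  key=value,...` as in the comment in the default file. `parse_rbdmap` and `udev_symlink` are hypothetical helpers, and the pool and image names are illustrative. The sketch only mirrors the file layout and the symlink naming; it does not map anything.

```python
from typing import Dict, List, NamedTuple, Optional


class RbdMapEntry(NamedTuple):
    pool: str
    image: str
    options: Dict[str, str]


def parse_rbdmap(text: str) -> List[RbdMapEntry]:
    """Parse rbdmap-style lines ('pool/image  key=value,key=value'), skipping comments."""
    entries = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(None, 1)                 # device spec, then options
        pool, _, image = fields[0].partition("/")
        opts: Dict[str, str] = {}
        if len(fields) > 1:
            for item in fields[1].split(","):
                key, _, value = item.strip().partition("=")
                opts[key] = value
        entries.append(RbdMapEntry(pool, image, opts))
    return entries


def udev_symlink(pool: str, image: str, snap: Optional[str] = None) -> str:
    # Mirrors the /dev/rbd/$pool/$image[@snap] layout from the slide.
    path = f"/dev/rbd/{pool}/{image}"
    return f"{path}@{snap}" if snap else path


# Illustrative file contents; "rbd" and "vm-disk1" are made-up names.
sample = """
# RbdDevice             Parameters
rbd/vm-disk1    id=admin,keyring=/etc/ceph/ceph.client.admin.keyring
"""
for entry in parse_rbdmap(sample):
    print(udev_symlink(entry.pool, entry.image), entry.options["id"])
# /dev/rbd/rbd/vm-disk1 admin
```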