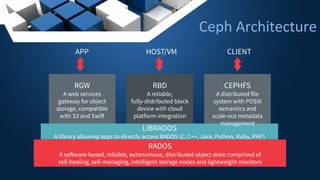

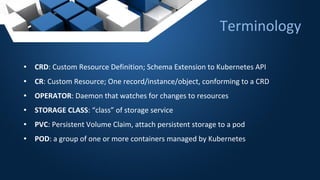

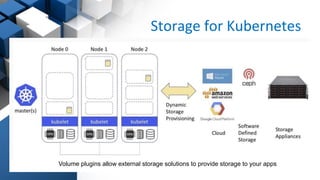

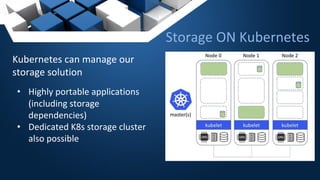

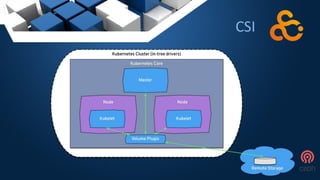

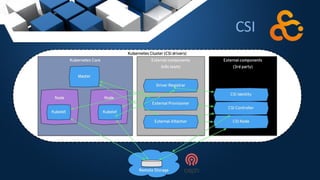

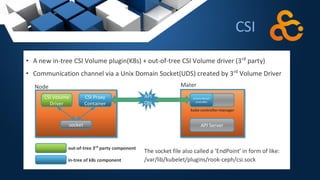

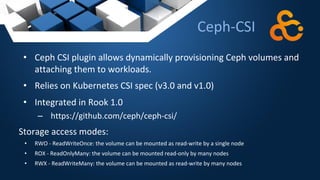

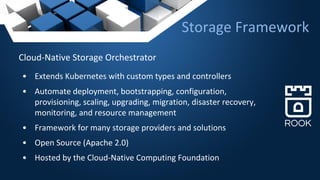

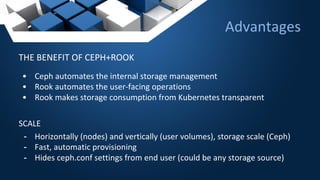

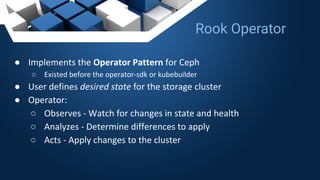

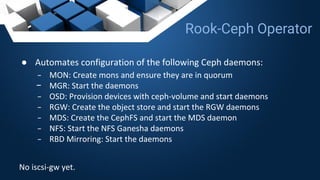

The document presents an overview of storage orchestration with Kubernetes and Ceph, showing how Ceph's distributed storage integrates with Kubernetes through the Rook project. It discusses the challenges of managing storage in a dynamic Kubernetes environment and introduces the Container Storage Interface (CSI), a standard interface that lets storage plugins be developed and deployed independently of Kubernetes itself. Rook simplifies Ceph deployment and management and automates storage-related operations, which makes cloud-native storage more efficient to operate.
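To make the CSI integration concrete, here is a minimal sketch of the two Kubernetes objects involved when a workload requests Ceph block storage through Rook: a StorageClass that names the CSI driver, and a PersistentVolumeClaim that refers to that class. The namespace `rook-ceph`, the pool `replicapool`, and the class name `rook-ceph-block` are assumptions borrowed from Rook's example manifests, not values taken from this document, and the helper functions are hypothetical. A real StorageClass also needs further parameters, such as CSI secret references, which are omitted here.

```python
import json

# Assumed names (not from this document): namespace "rook-ceph",
# pool "replicapool", storage class "rook-ceph-block".


def storage_class(name="rook-ceph-block", namespace="rook-ceph", pool="replicapool"):
    """Build a trimmed StorageClass manifest that delegates provisioning to the Ceph RBD CSI driver."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        # Rook prefixes the RBD CSI driver name with the operator namespace.
        "provisioner": f"{namespace}.rbd.csi.ceph.com",
        # Trimmed: a working manifest also carries CSI secret references.
        "parameters": {"clusterID": namespace, "pool": pool},
        "reclaimPolicy": "Delete",
    }


def volume_claim(name, size, storage_class_name="rook-ceph-block"):
    """Build a PersistentVolumeClaim that requests `size` of storage from the given class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Kubernetes accepts JSON manifests, so the output can be piped to `kubectl apply -f -`.
    for manifest in (storage_class(), volume_claim("demo-data", "1Gi")):
        print(json.dumps(manifest, indent=2))
```

The claim never mentions Ceph directly. It only names a storage class, and the CSI driver behind that class handles provisioning. This separation is what allows storage plugins to evolve independently of application manifests.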