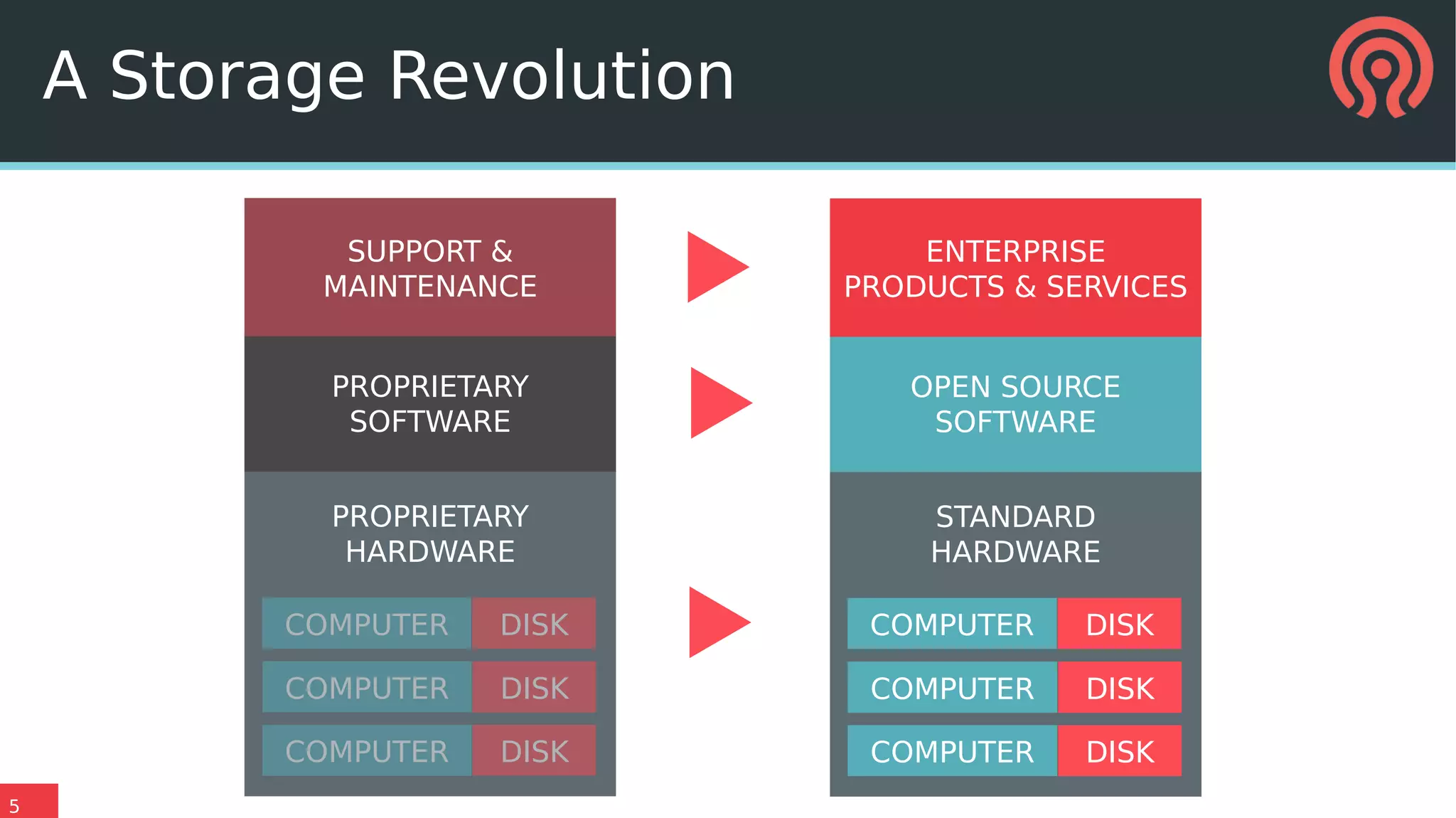

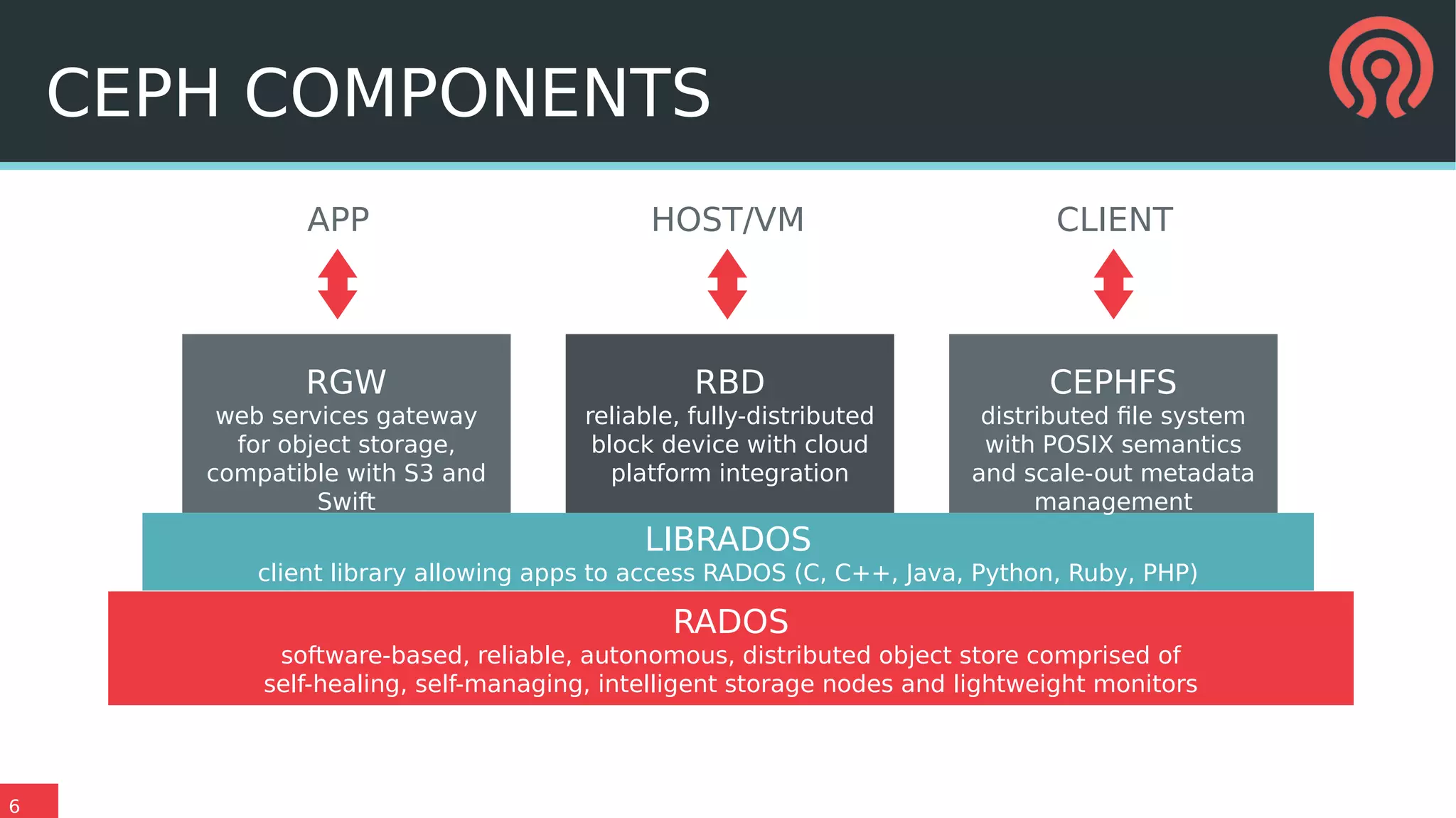

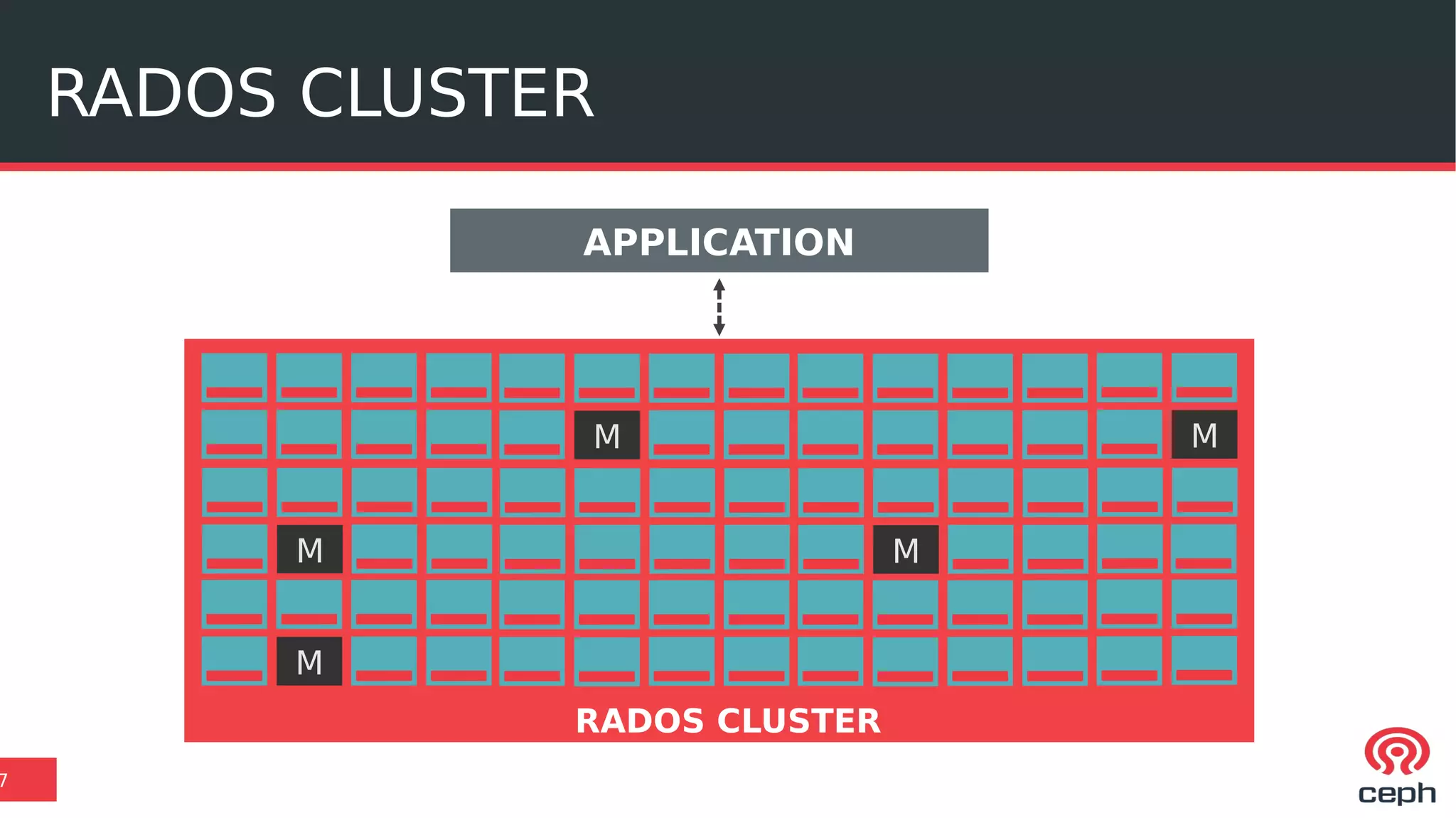

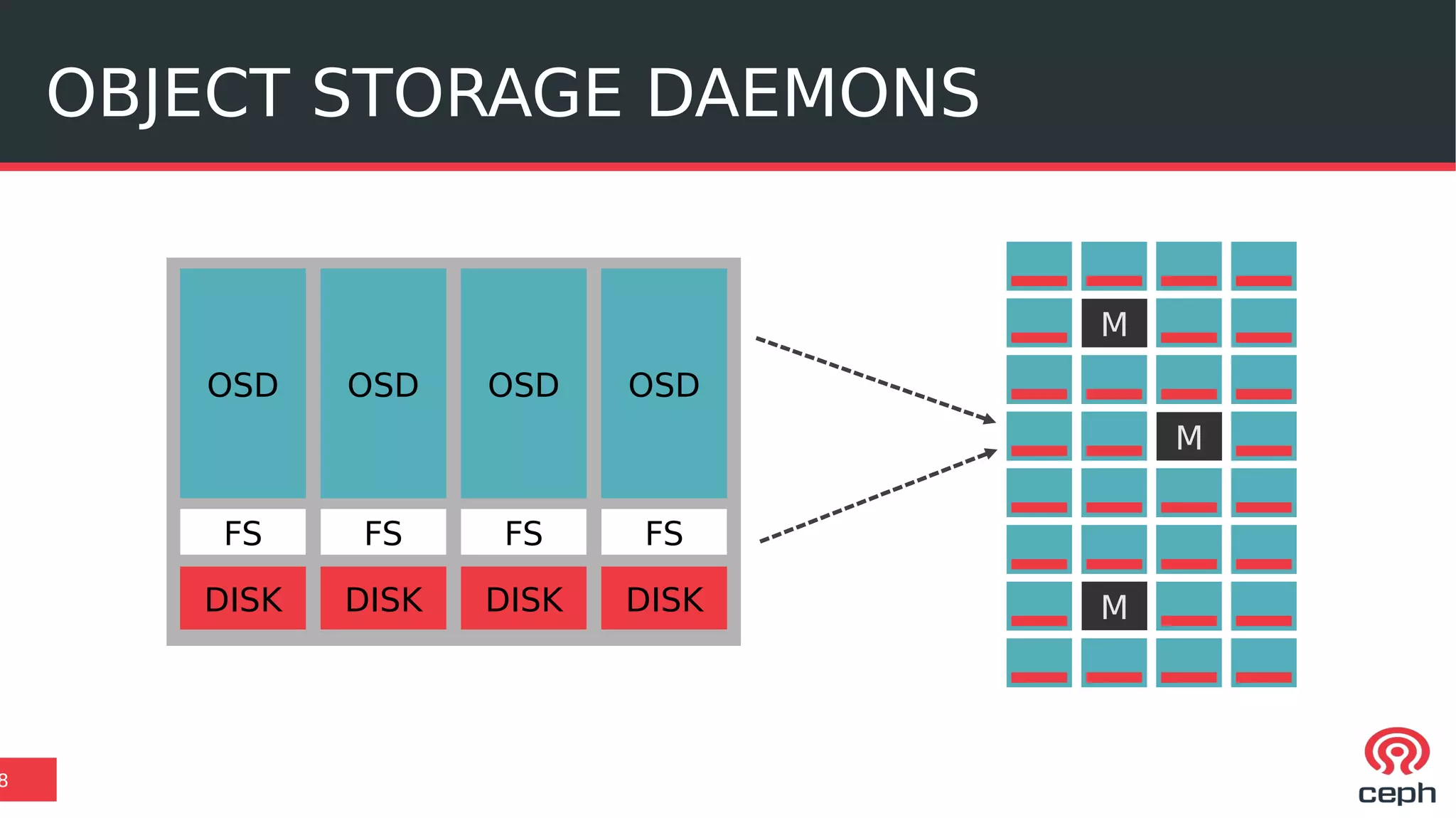

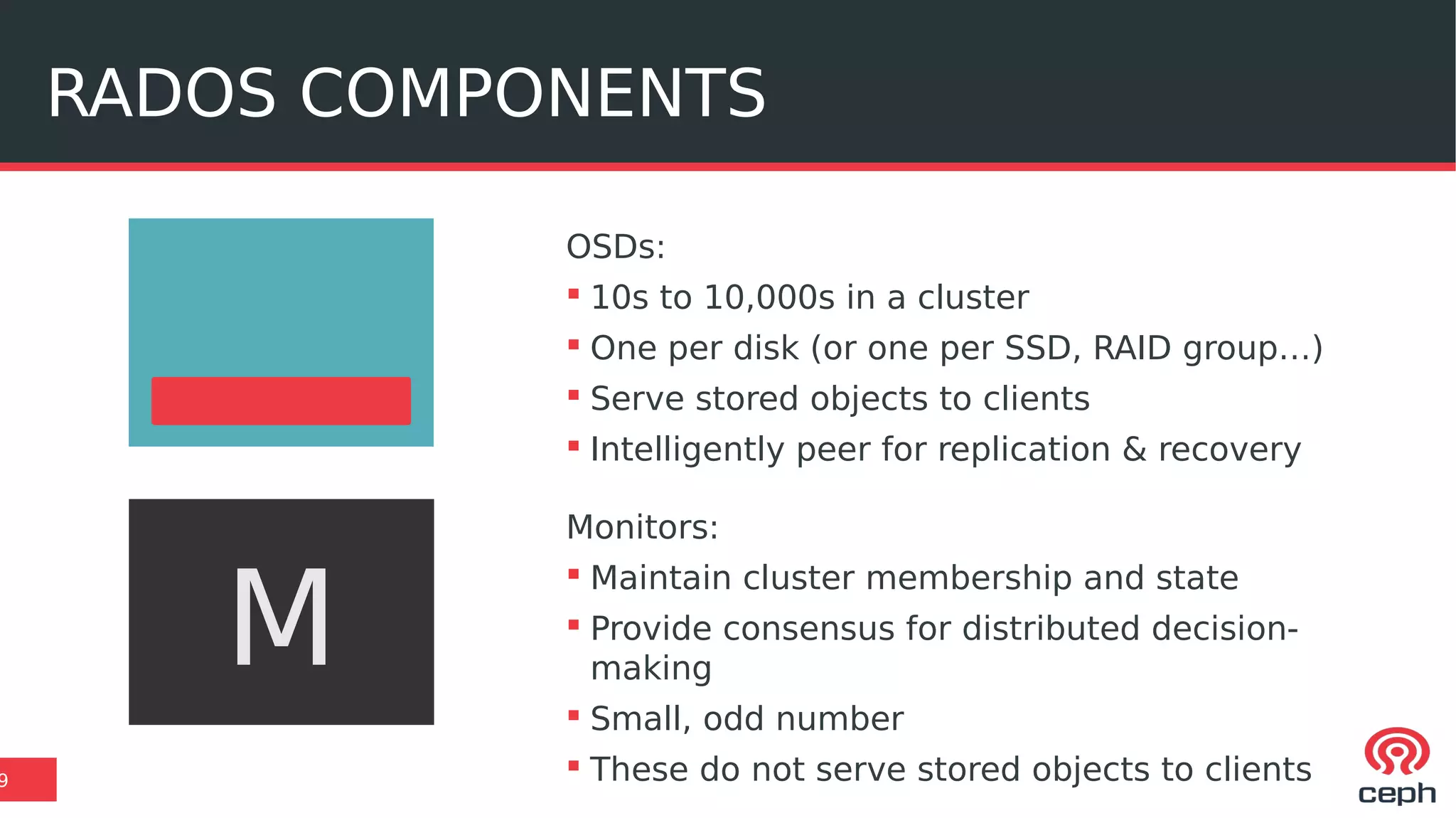

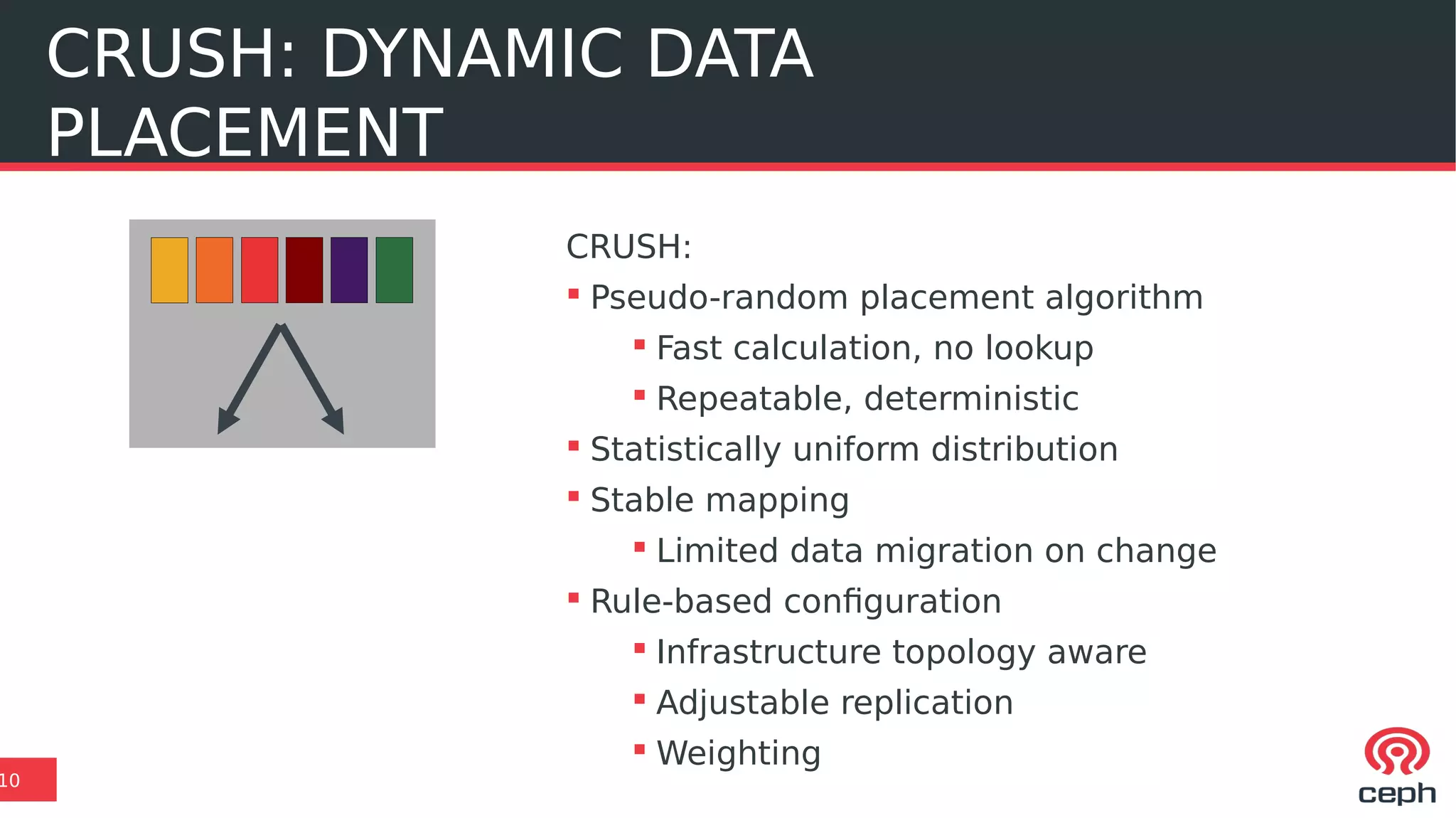

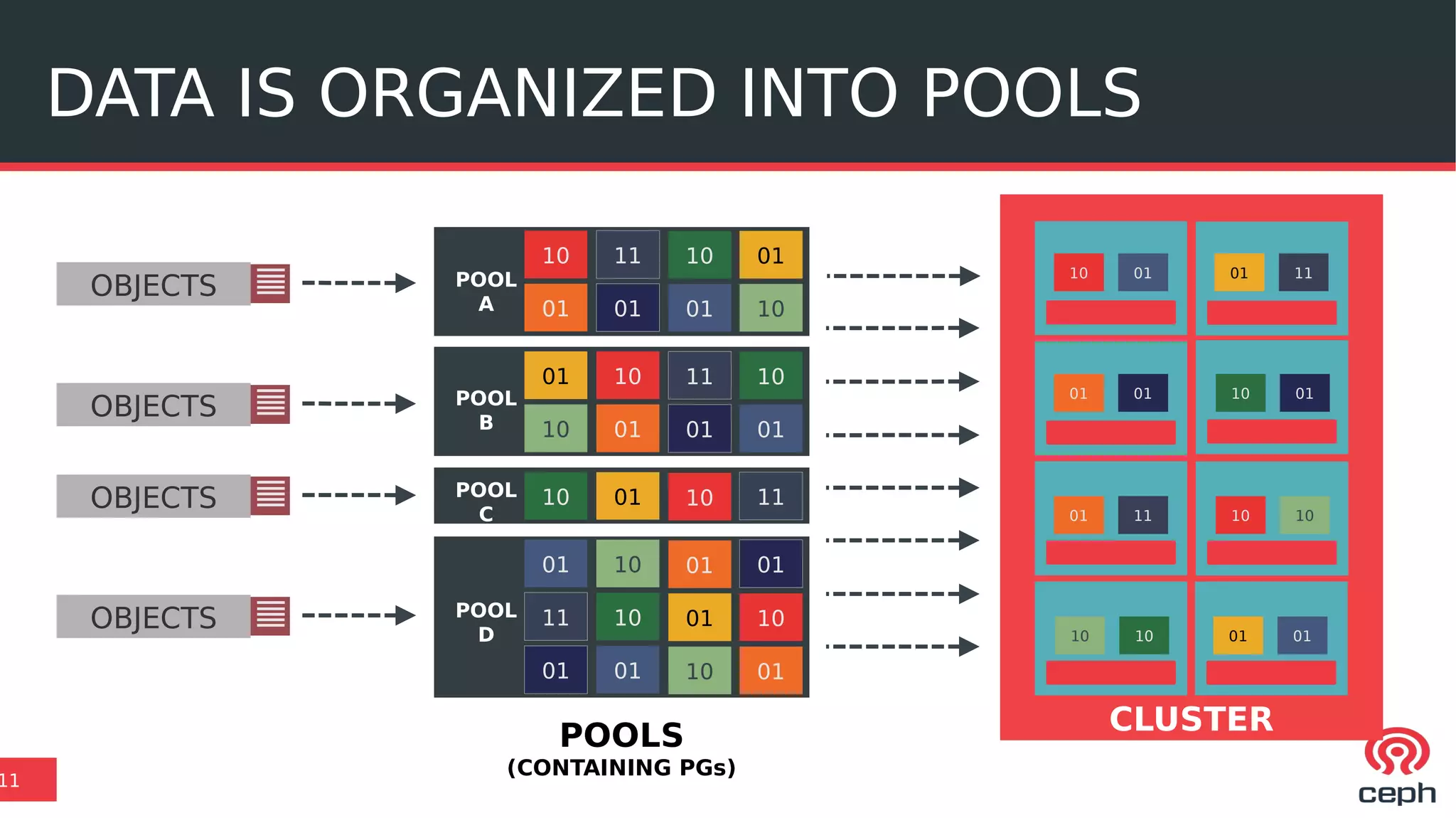

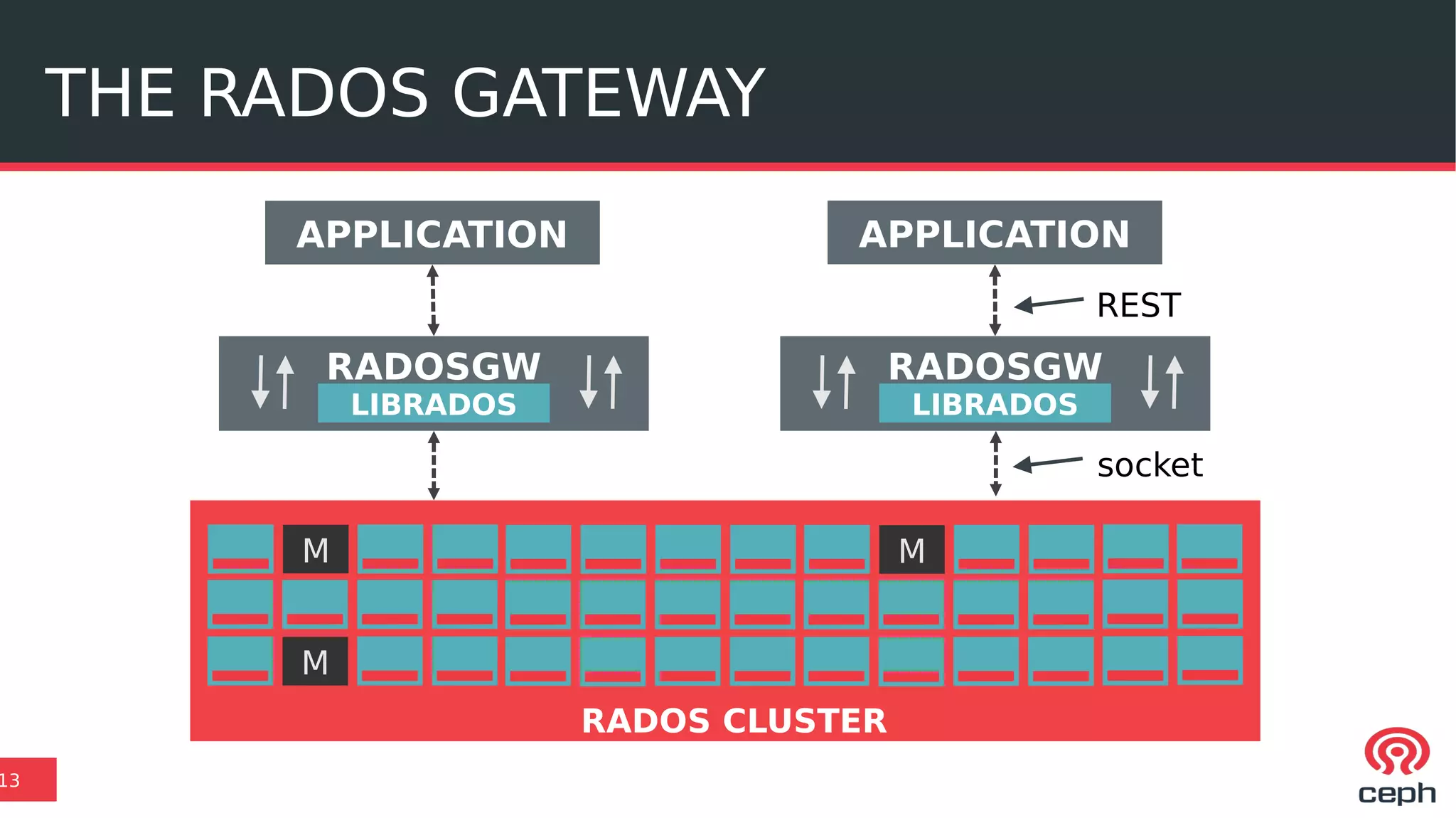

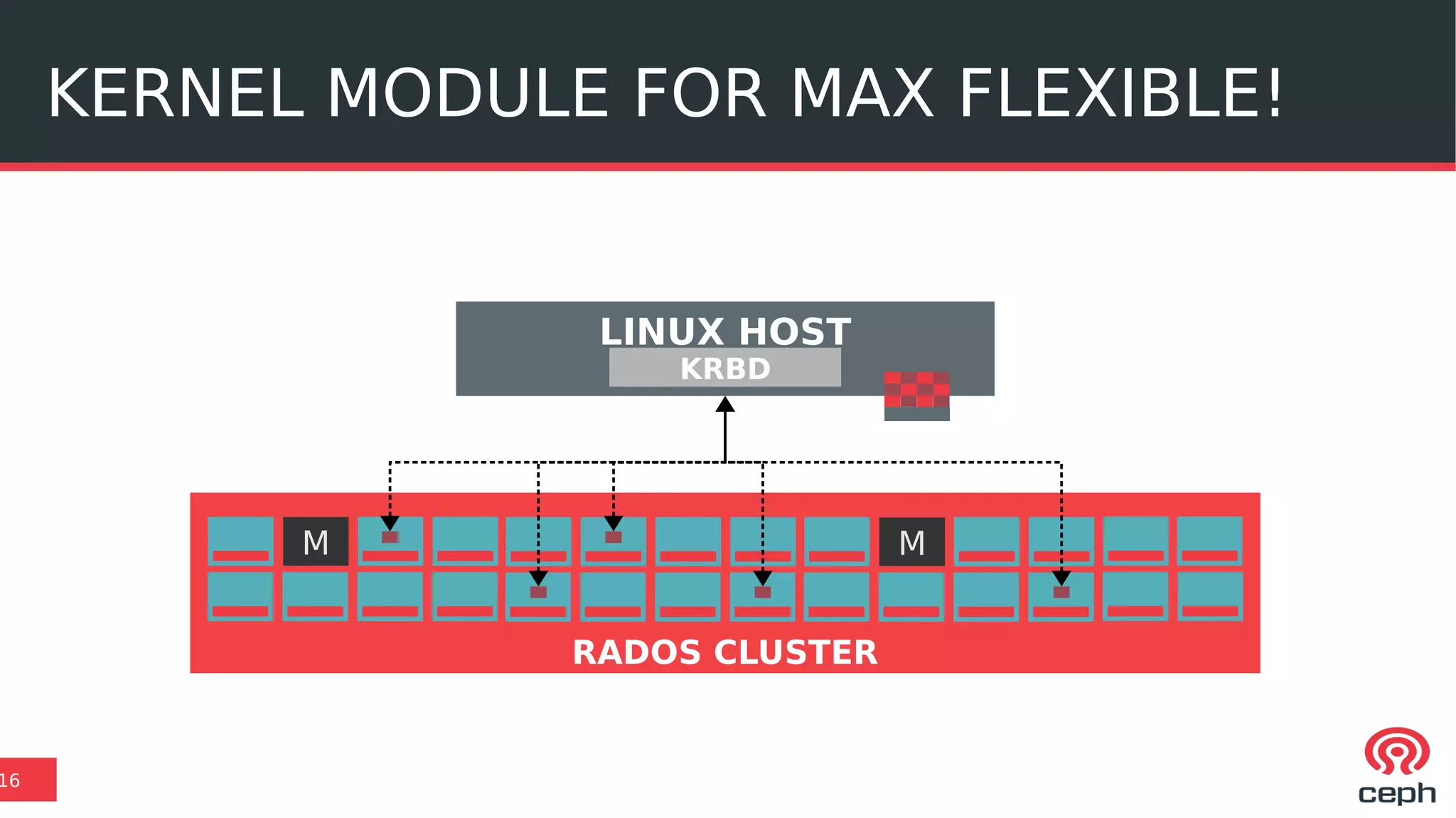

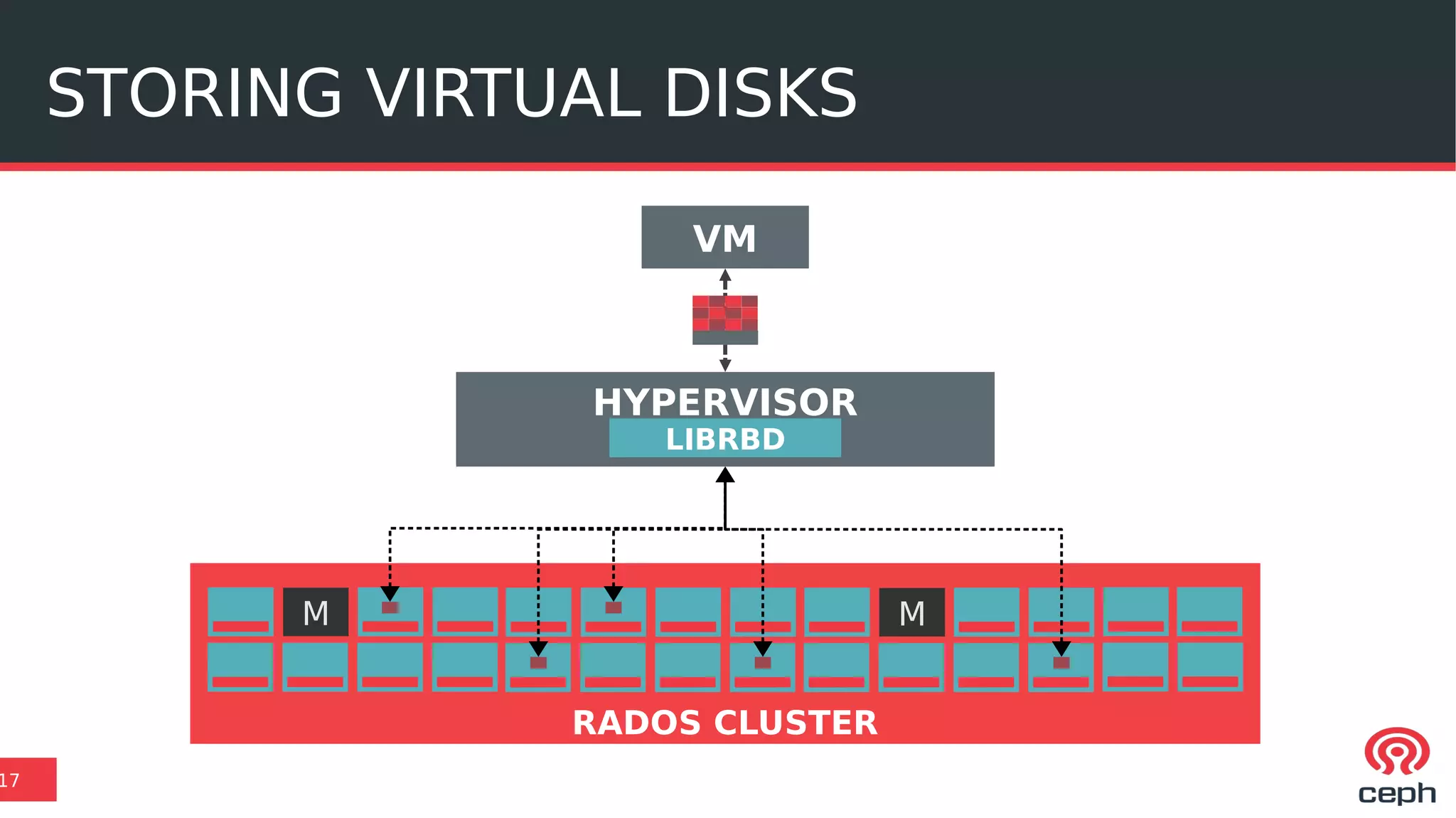

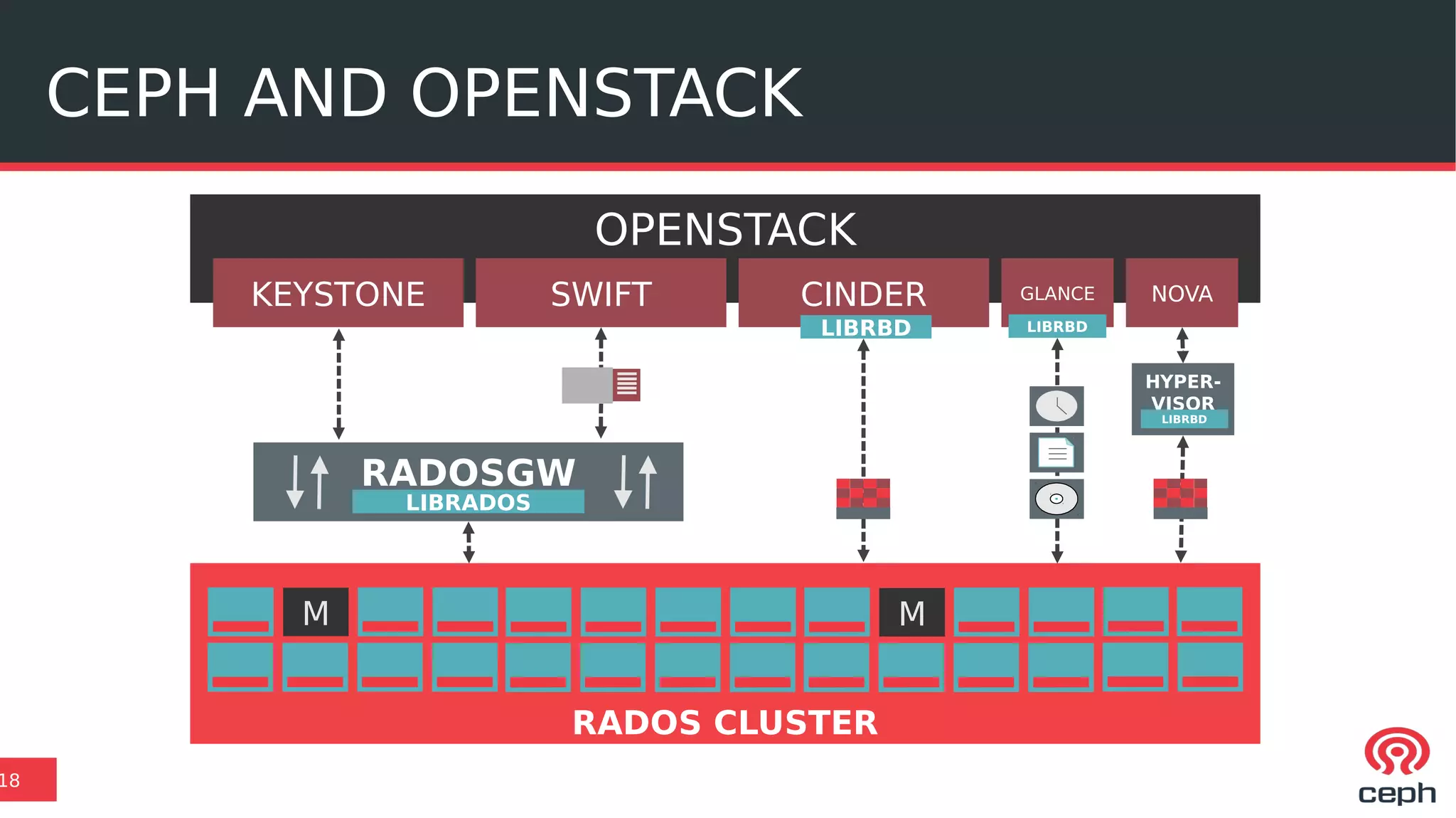

The document outlines the features and benefits of Ceph, an open-source software-defined storage system that integrates with OpenStack. It highlights three key components: RADOS, the underlying distributed object store; RBD, which provides block storage on top of RADOS; and CephFS, which provides file storage. Recent updates indicate that Ceph has become the preferred storage choice for OpenStack, with new functionality that includes improved deployment and enhanced support for legacy systems.