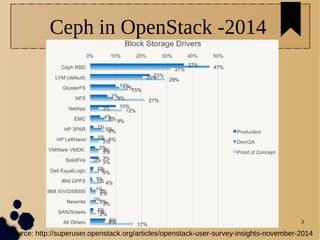

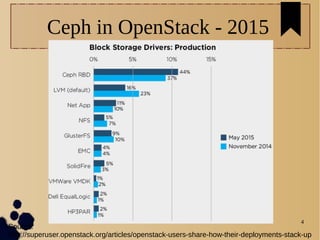

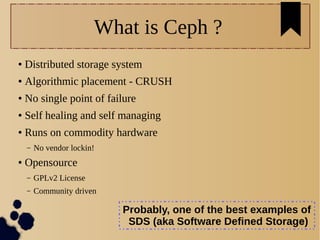

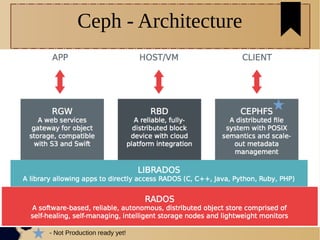

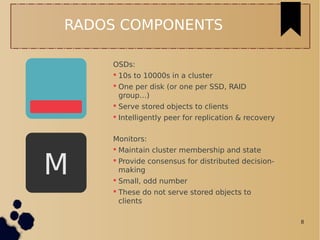

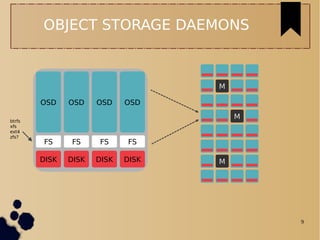

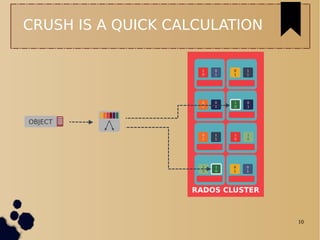

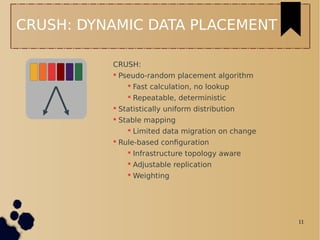

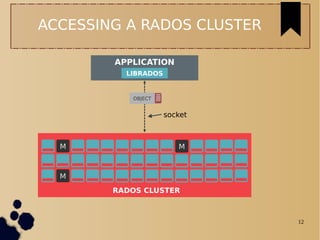

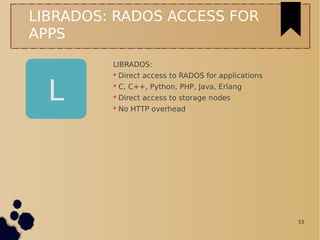

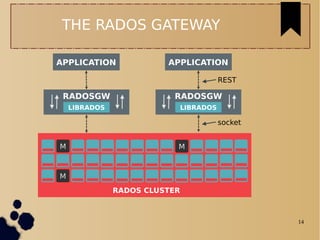

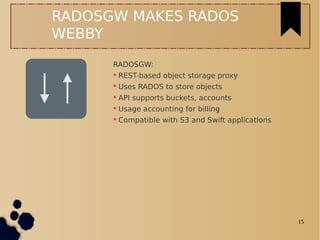

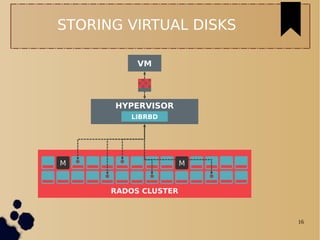

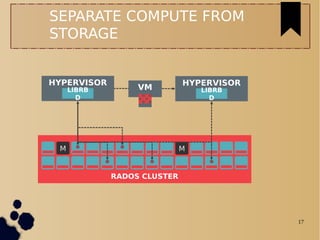

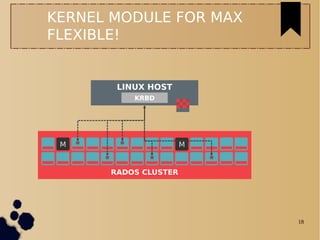

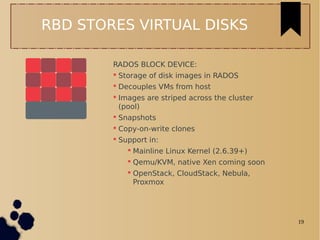

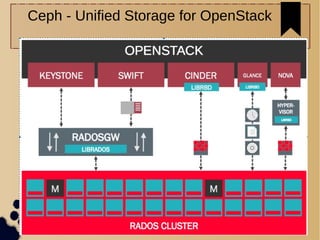

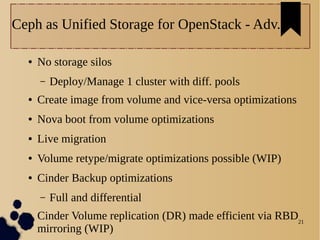

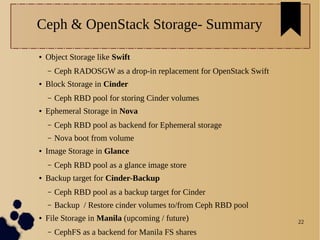

The document gives an overview of Ceph as a distributed storage system and of its integration with OpenStack. It covers Ceph's architecture, its self-healing and self-managing capabilities, and its support for different storage types (object, block, and file). It also discusses the advantages of using Ceph as unified storage for OpenStack, including optimizations for block, object, and ephemeral storage.