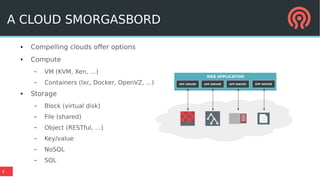

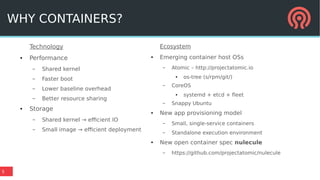

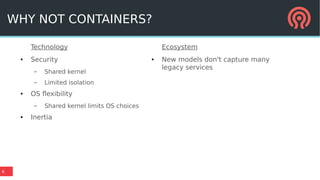

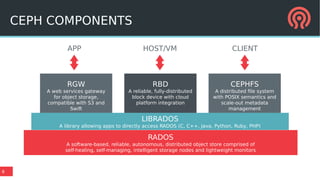

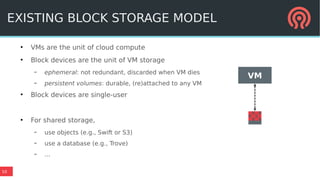

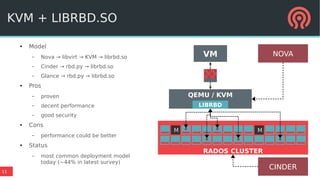

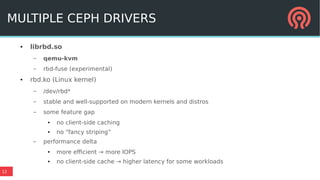

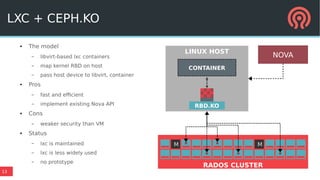

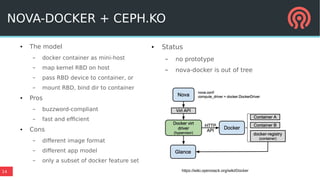

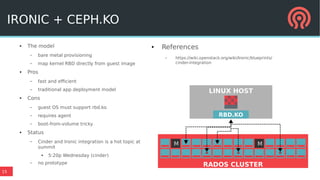

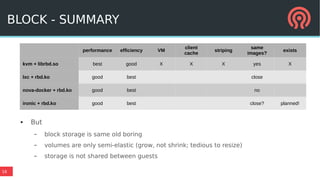

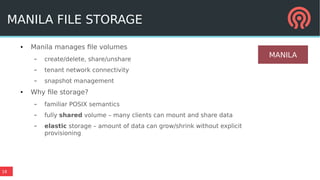

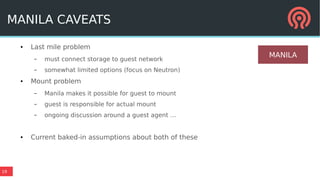

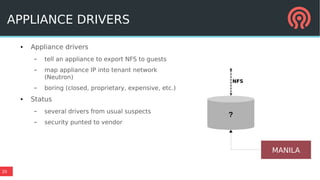

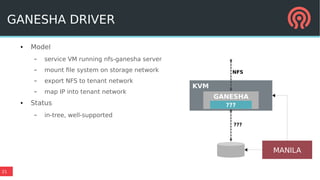

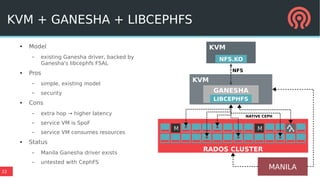

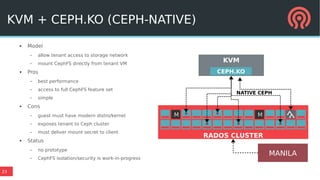

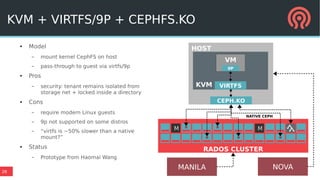

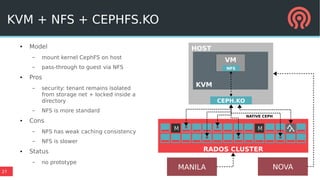

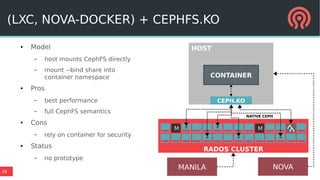

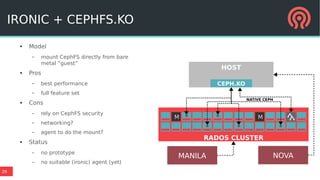

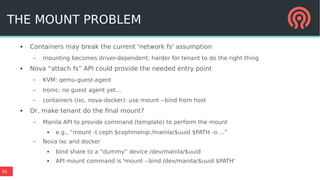

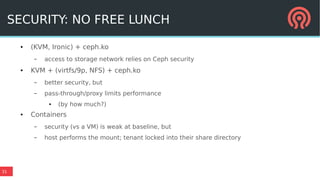

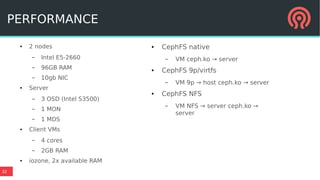

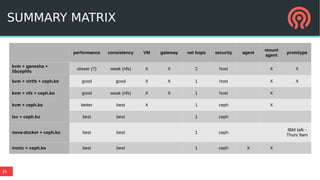

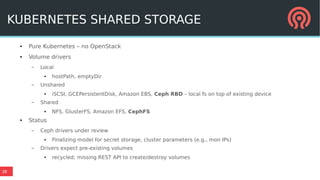

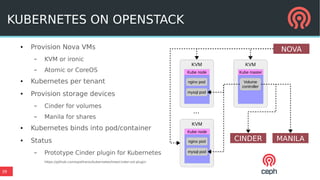

The document discusses the integration of OpenStack with Ceph and containers. It highlights the performance and resource-sharing advantages of containers while addressing challenges such as security and compatibility with legacy services. It outlines several models for implementing storage, including block and file storage, and describes the architecture of Ceph components such as RADOS and RBD. It concludes with observations on container orchestration, specifically Kubernetes, and on future directions for enhancing storage capabilities in OpenStack environments.
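As a rough illustration of how RBD sits on top of RADOS (this sketch is not taken from the slides), an RBD image is stored as a sequence of fixed-size RADOS objects. A byte range on the virtual block device is therefore split into per-object pieces. The sketch assumes a format 2 image with default striping (object size 2^22 bytes = 4 MiB, one stripe per object) and the `rbd_data.<image_id>.<16-hex-digit object number>` naming of data objects. The function name `rbd_extents` and the image id used in the example are hypothetical, chosen only for illustration.

```python
# Minimal sketch, assuming an RBD format 2 image with default striping
# (stripe_unit == object_size, stripe_count == 1). Not a librbd API.
DEFAULT_ORDER = 22  # object size = 2**22 bytes = 4 MiB


def rbd_extents(image_id: str, offset: int, length: int, order: int = DEFAULT_ORDER):
    """Split a block-device byte range into (object_name, object_offset, length) pieces.

    `image_id` and the function itself are hypothetical illustration names.
    """
    if offset < 0 or length < 0:
        raise ValueError("offset and length must be non-negative")
    object_size = 1 << order
    extents = []
    end = offset + length
    while offset < end:
        object_no = offset // object_size       # which RADOS object holds this byte
        obj_off = offset % object_size          # position inside that object
        chunk = min(object_size - obj_off, end - offset)  # stop at the object boundary
        extents.append((f"rbd_data.{image_id}.{object_no:016x}", obj_off, chunk))
        offset += chunk
    return extents


if __name__ == "__main__":
    # A 1 KiB write straddling the first 4 MiB boundary touches two objects.
    for extent in rbd_extents("abc123", 4 * 1024 * 1024 - 512, 1024):
        print(extent)
```

With these assumptions, a 1 KiB write that starts 512 bytes before the 4 MiB mark yields two extents: the last 512 bytes of object `...0000` and the first 512 bytes of object `...0001`. This is why a single block-level request can fan out to several OSDs in a Ceph cluster.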