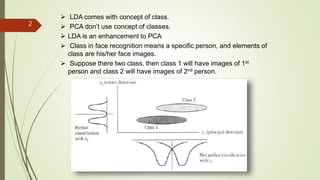

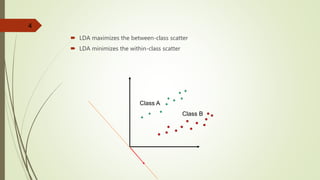

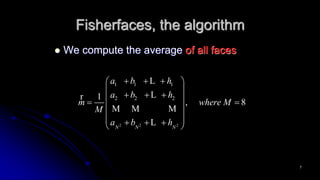

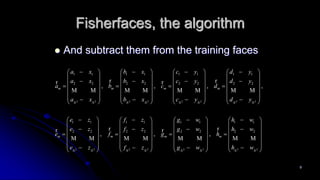

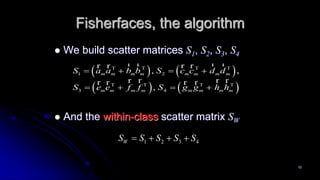

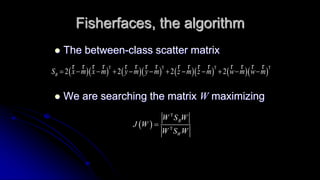

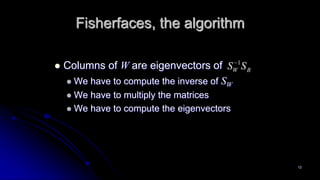

This document discusses linear discriminant analysis (LDA) for face recognition. It explains that LDA, unlike PCA, uses class labels during dimensionality reduction: it seeks a projection that maximizes between-class variance while minimizing within-class variance. The algorithm section describes how LDA computes the within-class and between-class scatter matrices and obtains the projection matrix by solving a generalized eigenvalue problem, which maximizes class separability. Recognition is done by projecting test faces onto the LDA space and assigning each one the label of its nearest neighbor among the projected training faces.
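
The steps summarized above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation from the slides: the function names `fit_lda` and `predict_nearest_neighbor`, the synthetic 4-dimensional data standing in for face vectors, and the use of a pseudo-inverse of the within-class scatter matrix to solve the generalized eigenvalue problem are all choices made here for illustration.

```python
import numpy as np

def fit_lda(X, y, n_components):
    """Return a projection matrix W whose columns span the LDA subspace."""
    d = X.shape[1]
    overall_mean = X.mean(axis=0)
    S_w = np.zeros((d, d))  # within-class scatter
    S_b = np.zeros((d, d))  # between-class scatter
    for c in np.unique(y):
        X_c = X[y == c]
        mean_c = X_c.mean(axis=0)
        centered = X_c - mean_c
        S_w += centered.T @ centered
        diff = (mean_c - overall_mean).reshape(-1, 1)
        S_b += X_c.shape[0] * (diff @ diff.T)
    # Generalized eigenproblem S_b w = lambda * S_w w, solved here
    # (an assumption of this sketch) via the pseudo-inverse of S_w.
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(S_w) @ S_b)
    order = np.argsort(eigvals.real)[::-1][:n_components]
    return eigvecs[:, order].real

def predict_nearest_neighbor(W, X_train, y_train, X_test):
    """Label each test sample by its nearest training sample in LDA space."""
    Z_train = X_train @ W
    Z_test = X_test @ W
    dists = np.linalg.norm(Z_test[:, None, :] - Z_train[None, :, :], axis=2)
    return y_train[np.argmin(dists, axis=1)]

# Synthetic stand-in for face vectors: 3 classes, 20 samples each, 4 features.
rng = np.random.default_rng(0)
means = np.array([[0.0, 0.0, 0.0, 0.0],
                  [10.0, 0.0, 0.0, 0.0],
                  [0.0, 10.0, 0.0, 0.0]])
y_train = np.repeat([0, 1, 2], 20)
X_train = means[y_train] + 0.5 * rng.standard_normal((60, 4))

# With C classes, at most C - 1 discriminant directions are informative.
W = fit_lda(X_train, y_train, n_components=2)

X_test = np.array([[0.2, -0.1, 0.0, 0.1],
                   [9.8, 0.1, 0.0, -0.1],
                   [0.1, 10.2, 0.1, 0.0]])
predicted = predict_nearest_neighbor(W, X_train, y_train, X_test)
print(predicted)
```

For real face images the feature dimension usually exceeds the number of training samples, so the within-class scatter matrix is singular; the pseudo-inverse used in this sketch is only one way to cope with that.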