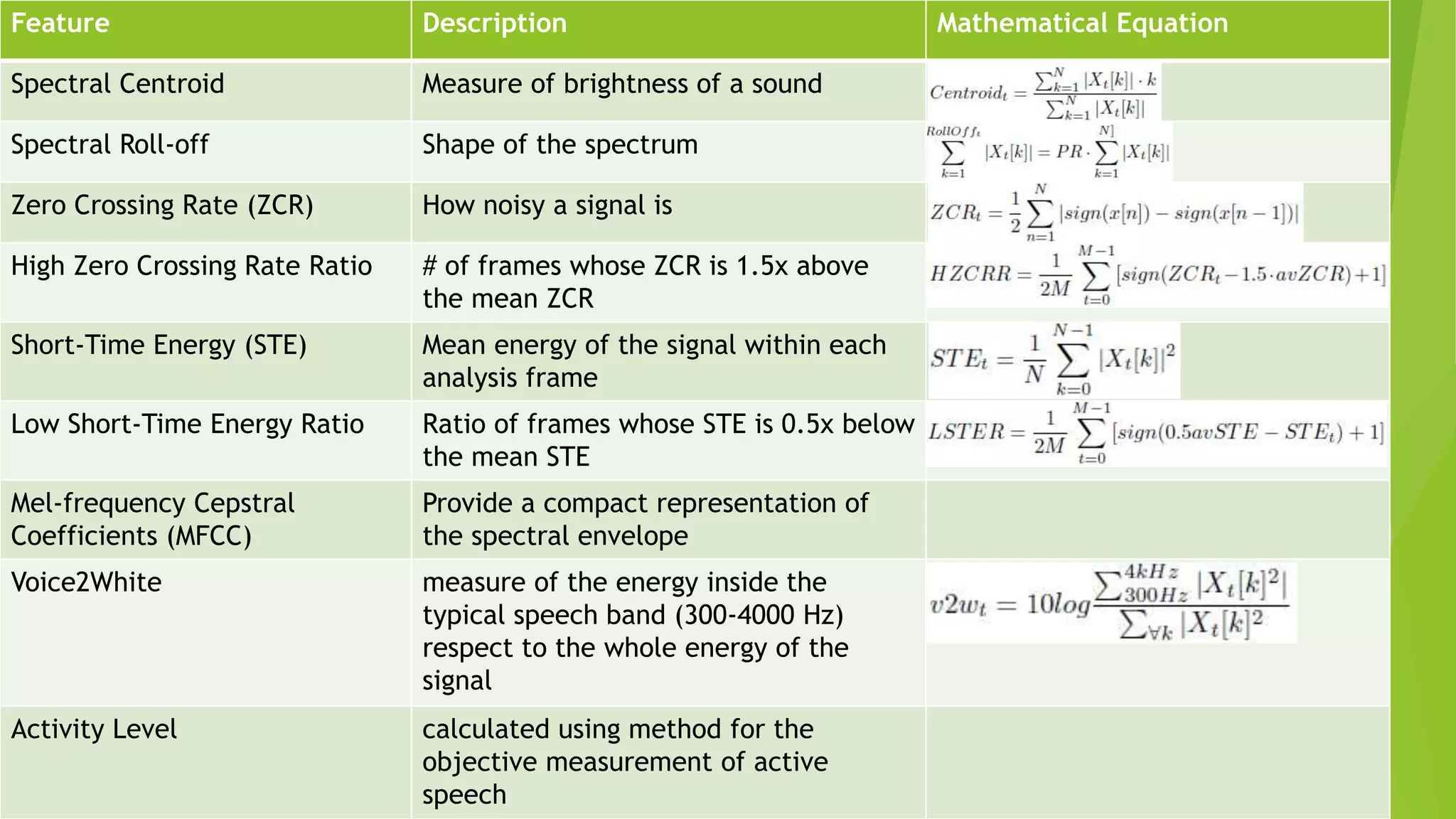

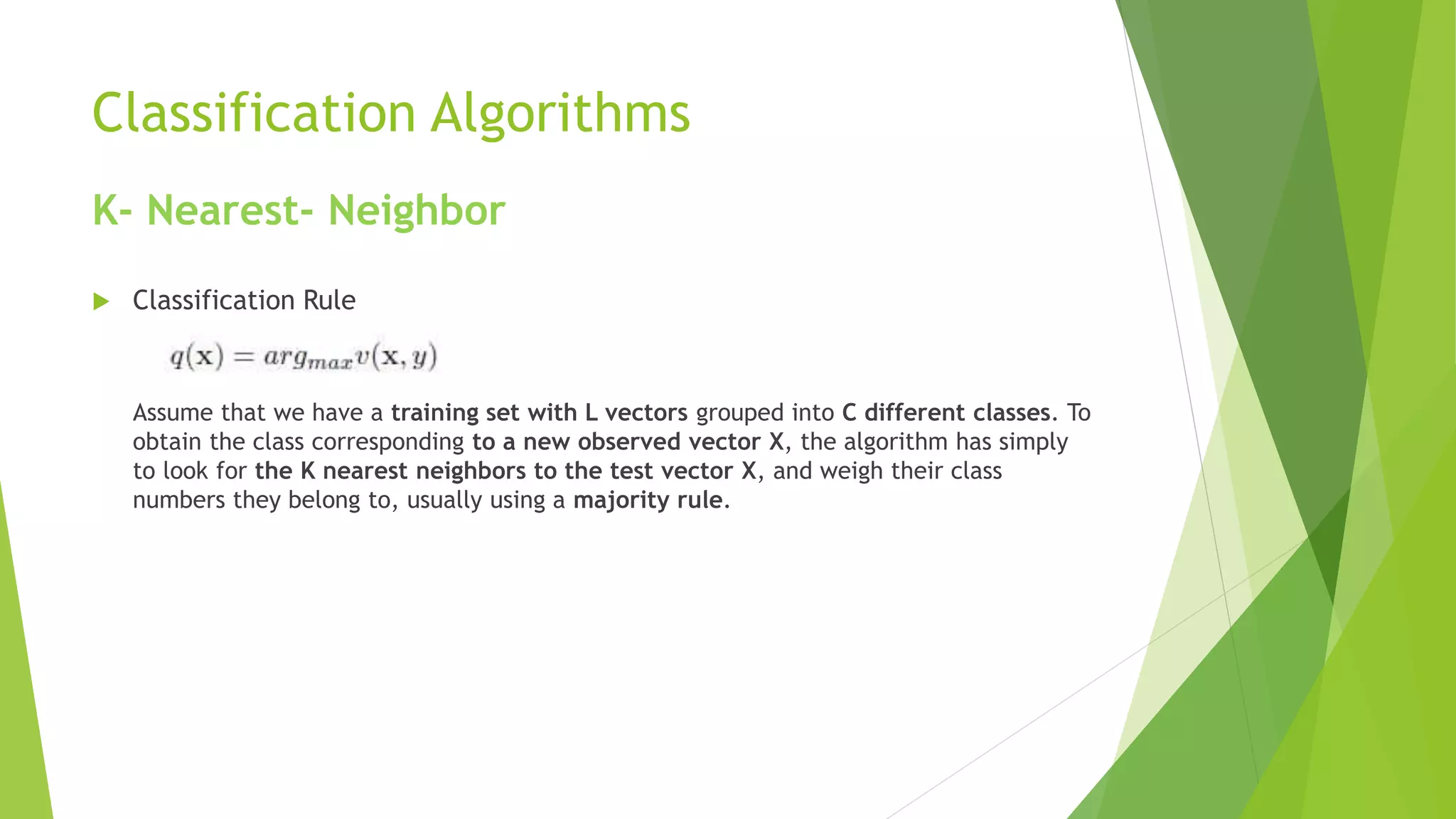

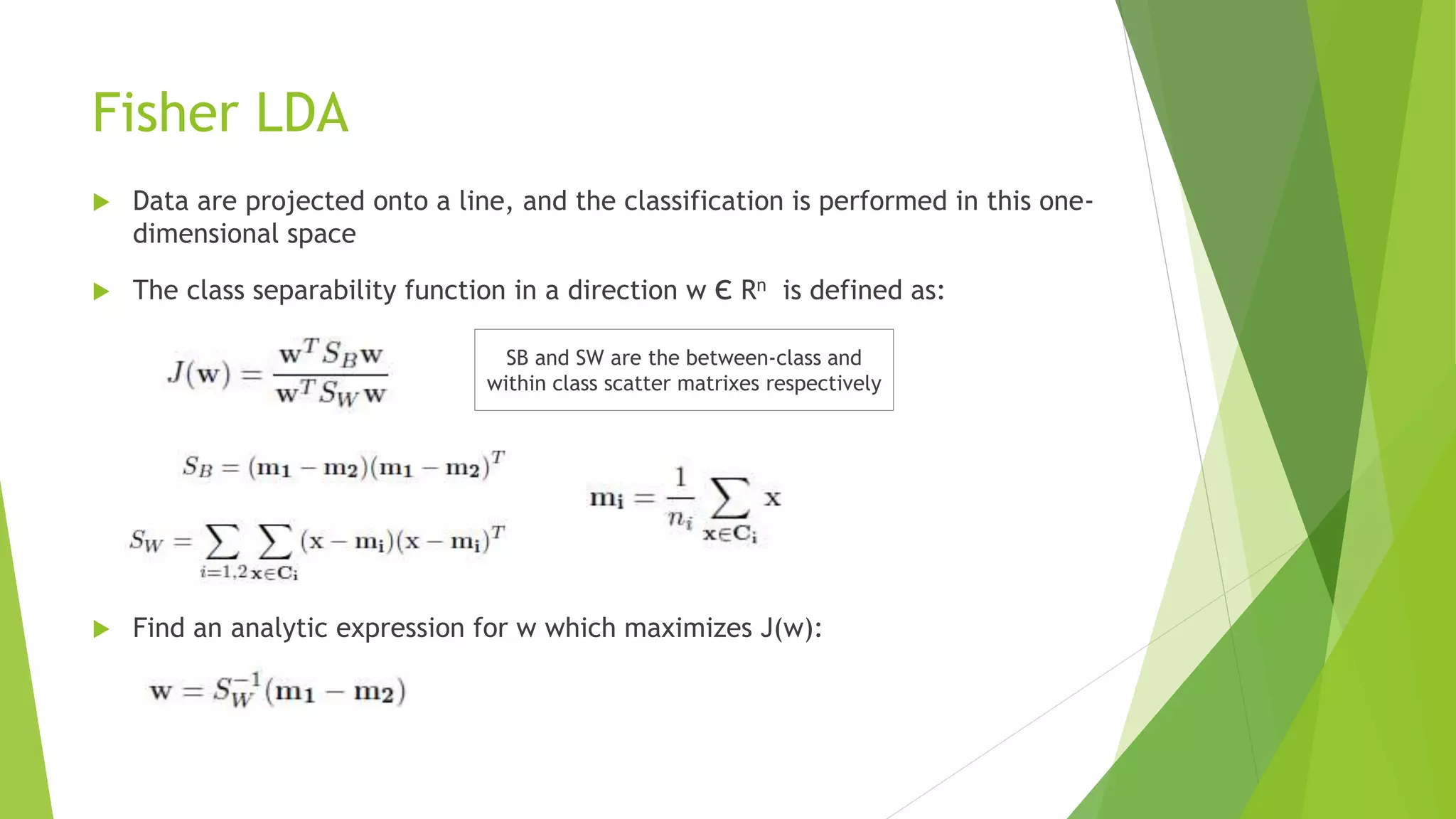

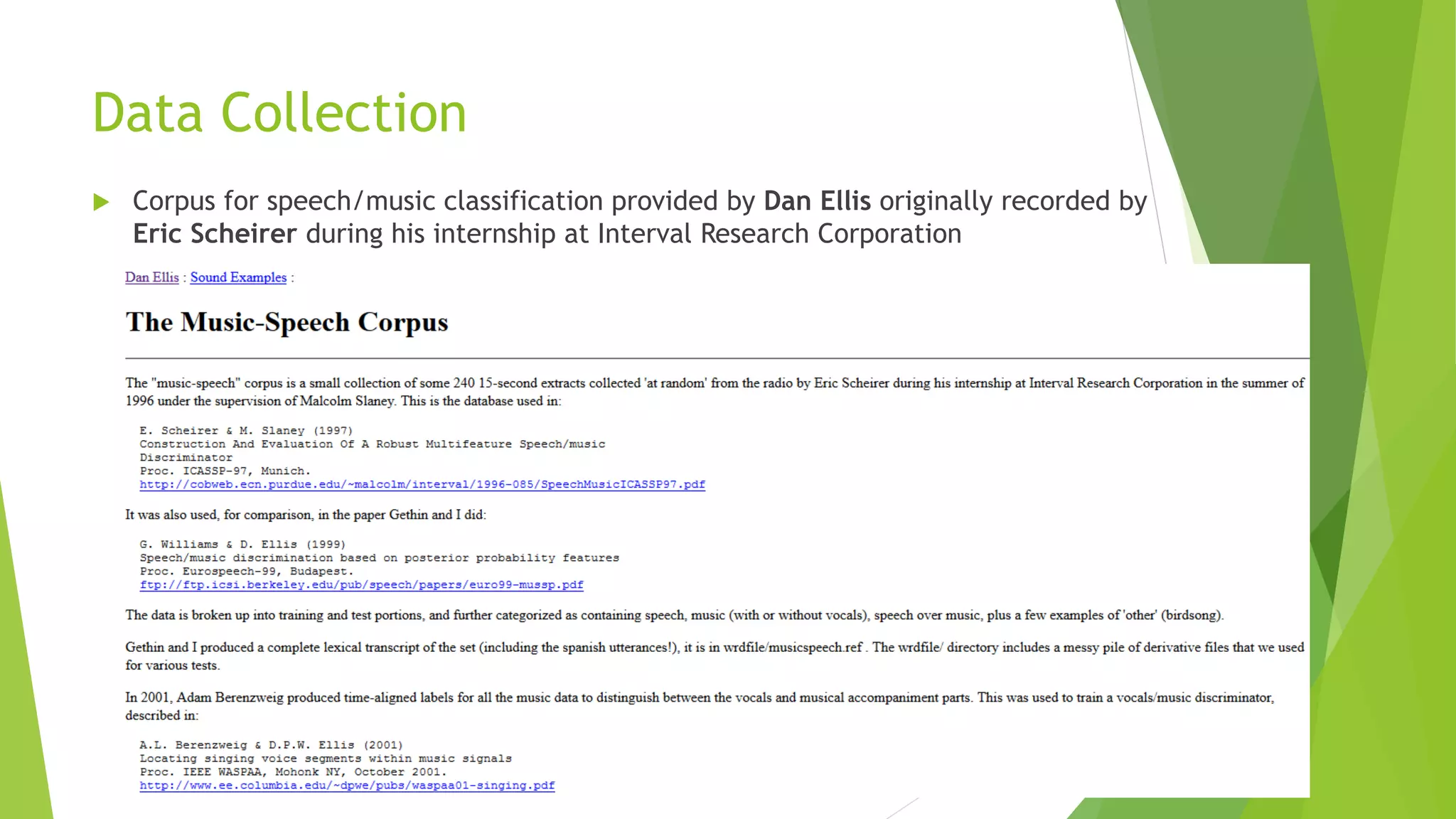

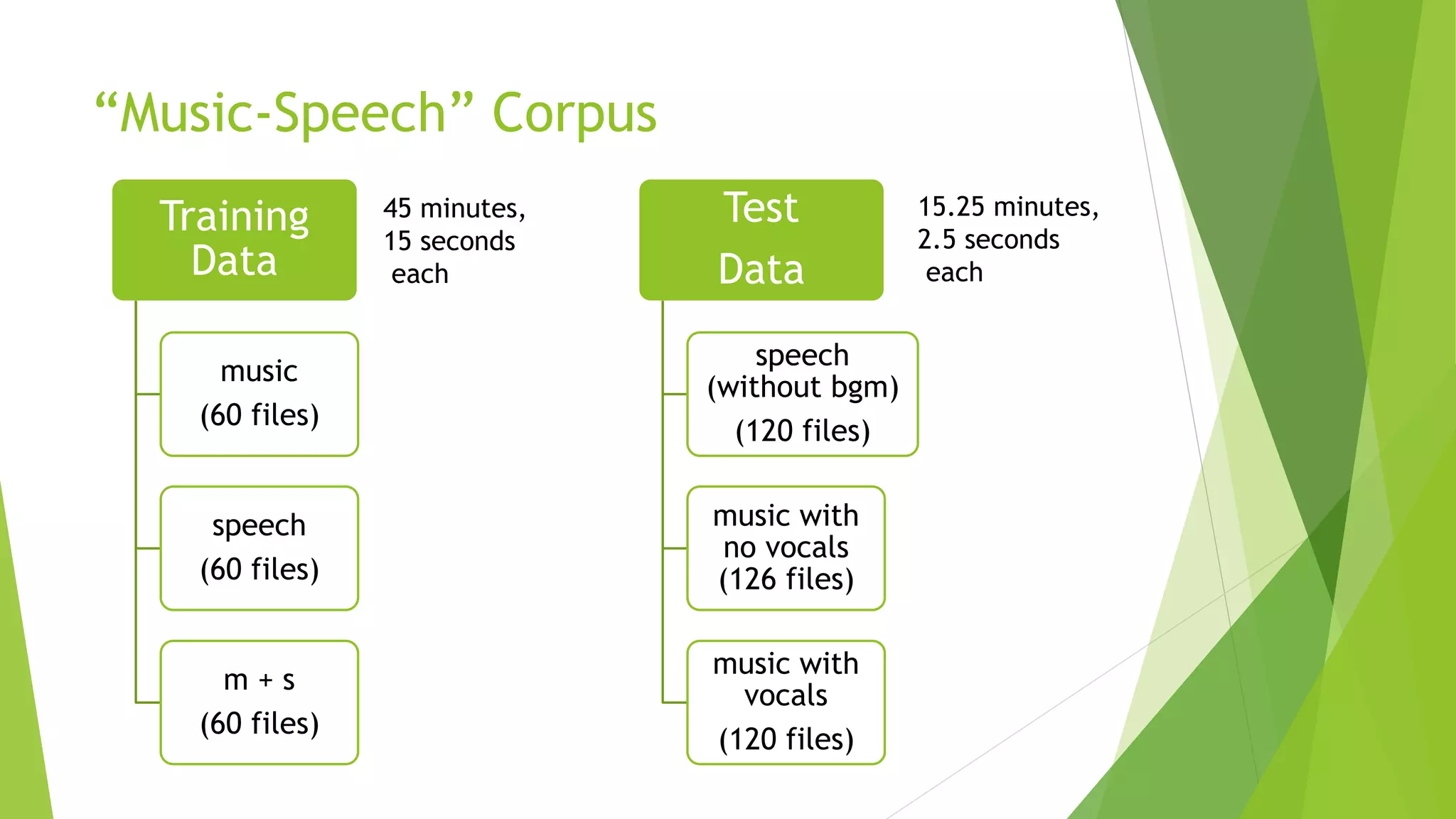

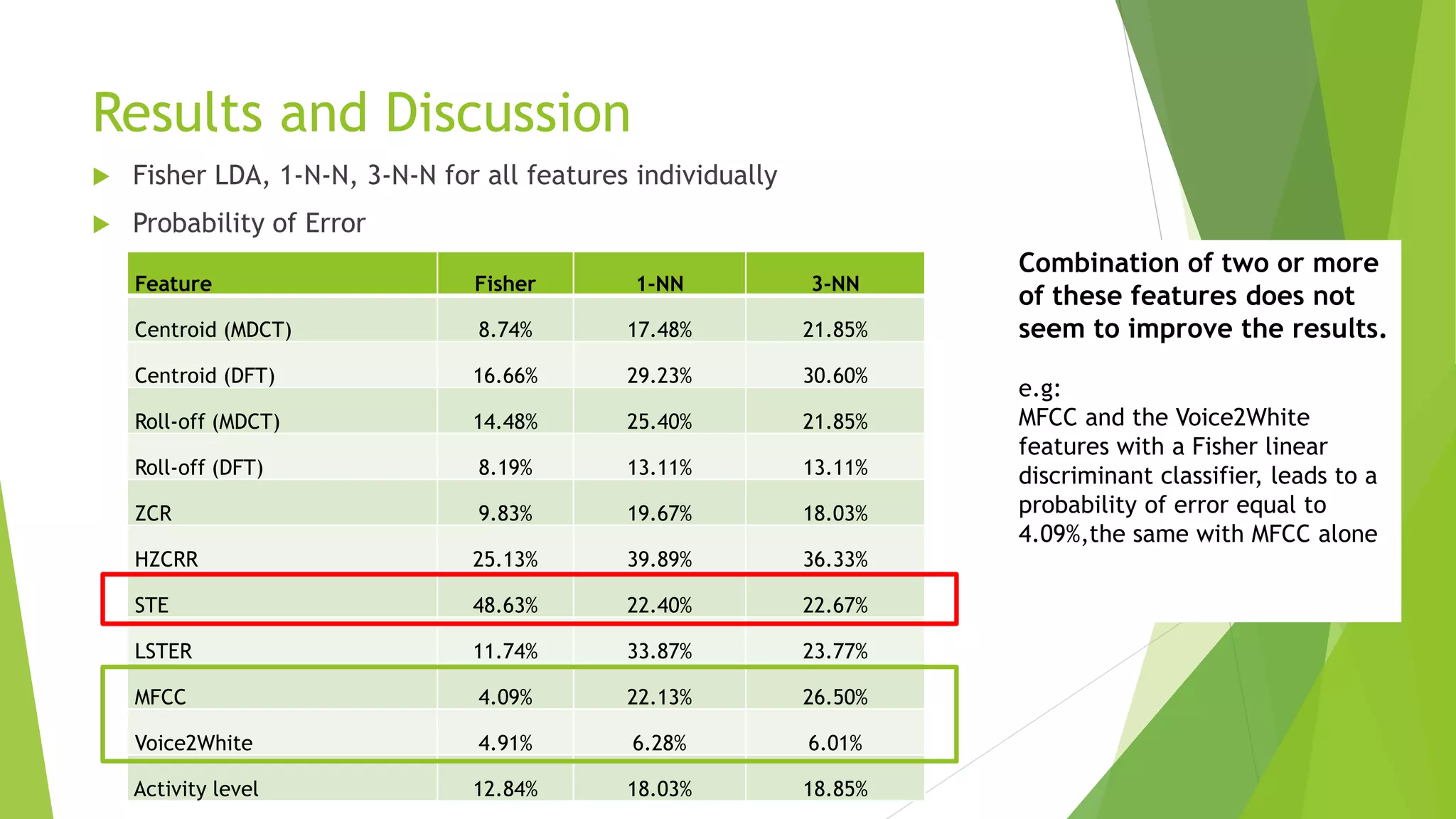

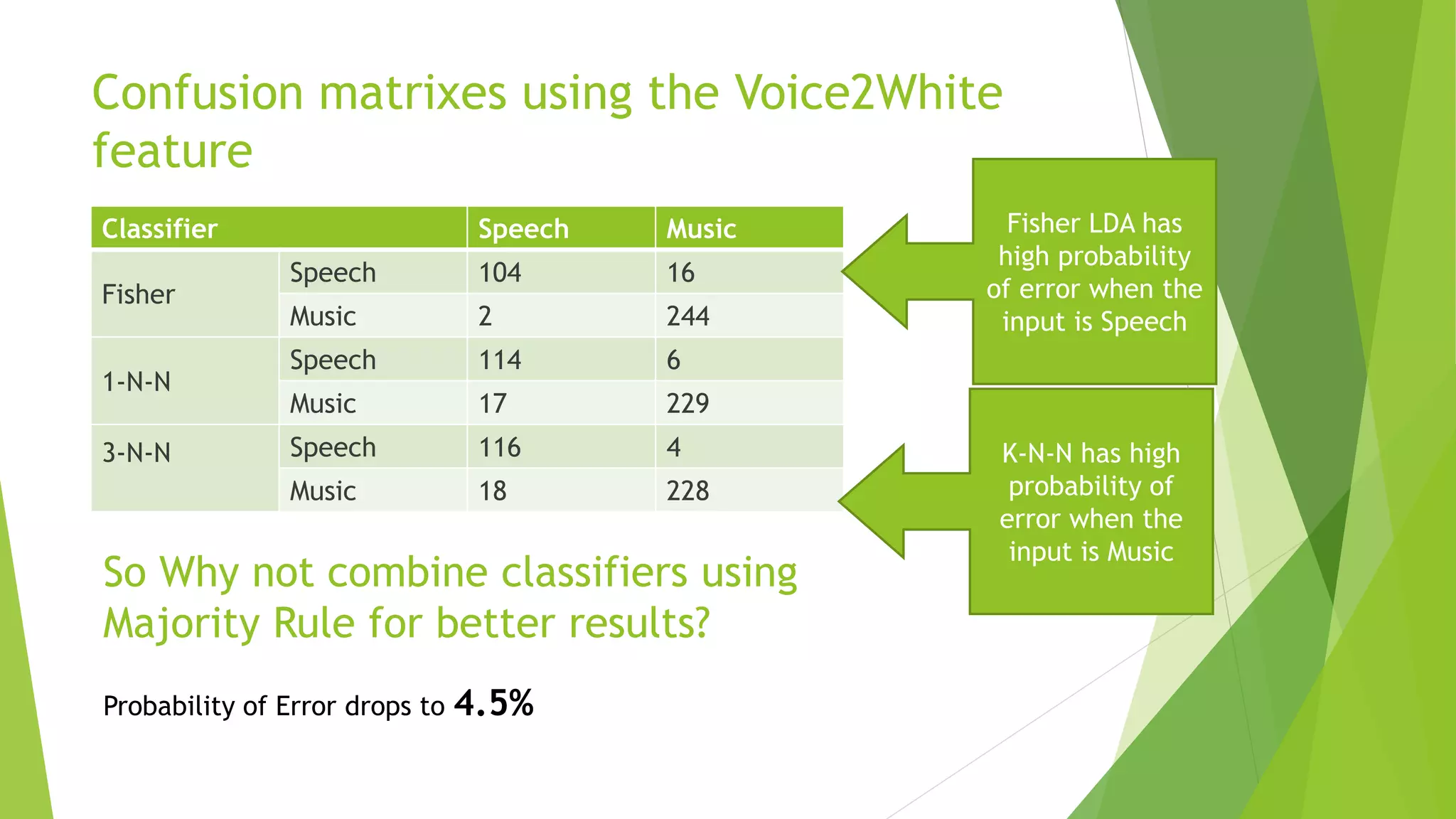

This document describes research that applies Fisher Linear Discriminant Analysis (LDA) and K-Nearest Neighbors (K-NN) to classifying audio clips as speech or music. It finds that Fisher LDA with a single feature, such as mel-frequency cepstral coefficients, achieves classification error rates below 5% and outperforms K-NN. Combining multiple features does not improve the LDA results, but combining the outputs of the LDA and K-NN classifiers by majority voting lowers the error rate further, to 4.5%, which demonstrates the benefit of classifier ensembles for this task.
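To make the summarized pipeline concrete, the following is a minimal sketch of a two-class Fisher LDA, a K-NN classifier, and a majority vote over their outputs. It is not the authors' implementation. The function names, the choice of `k=3`, the use of three voters (to avoid ties), and the synthetic two-dimensional "features" are all assumptions made for illustration; real experiments would use features extracted from audio, such as mel-frequency cepstral coefficients.

```python
import numpy as np

def fit_fisher_lda(X, y):
    """Two-class Fisher LDA: return (projection vector w, decision threshold)."""
    X0, X1 = X[y == 0], X[y == 1]
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter matrix, summed over both classes.
    Sw = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    Sw += 1e-6 * np.eye(X.shape[1])  # small ridge term (assumption) for stability
    # Fisher direction: w is proportional to Sw^{-1} (m1 - m0).
    w = np.linalg.solve(Sw, m1 - m0)
    # Threshold halfway between the projected class means (assumes equal priors).
    threshold = 0.5 * (w @ m0 + w @ m1)
    return w, threshold

def predict_fisher_lda(model, X):
    w, threshold = model
    return (X @ w > threshold).astype(int)

def predict_knn(X_train, y_train, X, k=3):
    """K-NN with Euclidean distance; k should be odd for two classes."""
    dists = np.linalg.norm(X[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    return (y_train[nearest].mean(axis=1) > 0.5).astype(int)

def majority_vote(predictions):
    """Combine 0/1 predictions from an odd number of classifiers."""
    P = np.asarray(predictions)
    return (2 * P.sum(axis=0) > P.shape[0]).astype(int)

# Synthetic stand-in data (NOT real audio features): label 0 = speech, 1 = music.
rng = np.random.default_rng(0)
y_train = np.repeat([0, 1], 100)
y_test = np.repeat([0, 1], 50)

def make_feature(labels, shift):
    # Hypothetical 2-D feature whose class means differ by `shift`.
    return rng.normal(0.0, 1.0, (labels.size, 2)) + shift * labels[:, None]

# Two hypothetical feature sets, A and B, for the same clips.
A_train, A_test = make_feature(y_train, 2.0), make_feature(y_test, 2.0)
B_train, B_test = make_feature(y_train, 1.5), make_feature(y_test, 1.5)

votes = [
    predict_fisher_lda(fit_fisher_lda(A_train, y_train), A_test),
    predict_fisher_lda(fit_fisher_lda(B_train, y_train), B_test),
    predict_knn(A_train, y_train, A_test, k=3),
]
combined = majority_vote(votes)
print("ensemble error rate:", np.mean(combined != y_test))
```

An odd number of voters is used here so that a strict majority always exists. How the original study composed its ensemble and broke ties is not stated in this summary, so that detail should be treated as an open assumption.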