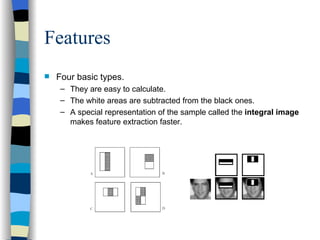

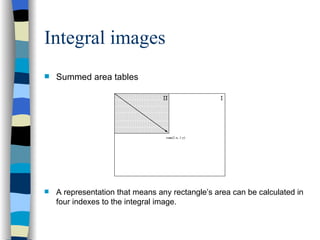

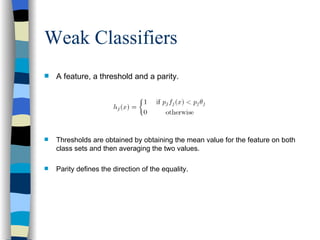

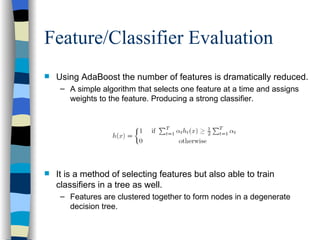

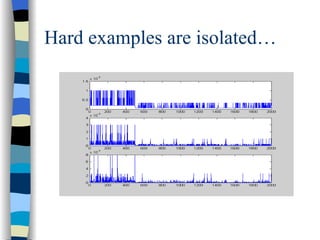

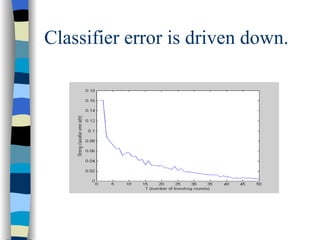

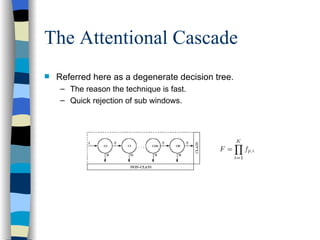

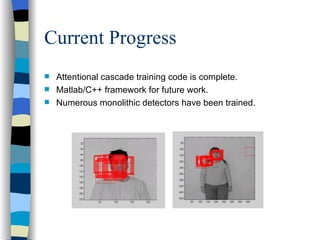

This document summarizes a student project on implementing object detection with the Viola-Jones technique. The technique computes Haar-like rectangle features from an integral image and uses AdaBoost to select a small set of discriminative features and combine them into classifiers. It then arranges those classifiers in a cascade that discards most non-object regions after only a few tests, so objects such as faces can be detected quickly and accurately. The student developed implementations in MATLAB and C++ to train the classifiers and to detect faces. Viola-Jones was groundbreaking because it achieved real-time object detection with detection rates comparable to the best earlier systems while running far faster.
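To make the detection pipeline concrete, here is a minimal C++ sketch of three of its ideas: the integral image, which lets any rectangle sum be read with four lookups; a two-rectangle Haar-like feature; and cascade evaluation, where a window is rejected as soon as one stage fails. This is not the student's code. The names `IntegralImage`, `Stump`, `Stage`, `twoRectHorizontal` and `cascadeAccepts` are hypothetical. For brevity, every weak classifier uses the same horizontal two-rectangle feature, and features are evaluated inside a single detection window. AdaBoost training and scanning over positions and scales are omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Integral image padded with a zero row and column, so that at(x, y)
// holds the sum of all pixels strictly above and to the left of (x, y).
struct IntegralImage {
    int w, h;
    std::vector<long long> ii;  // (h + 1) x (w + 1), row-major

    IntegralImage(const std::vector<int>& img, int width, int height)
        : w(width), h(height),
          ii(static_cast<std::size_t>((width + 1) * (height + 1)), 0LL) {
        assert(static_cast<int>(img.size()) == width * height);
        for (int y = 0; y < h; ++y) {
            long long rowSum = 0;  // running sum of the current row up to x
            for (int x = 0; x < w; ++x) {
                rowSum += img[y * w + x];
                ii[(y + 1) * (w + 1) + (x + 1)] = ii[y * (w + 1) + (x + 1)] + rowSum;
            }
        }
    }

    // Sum of the rw x rh rectangle with top-left corner (x, y): four lookups,
    // independent of the rectangle's size.
    long long rectSum(int x, int y, int rw, int rh) const {
        auto at = [&](int xx, int yy) { return ii[yy * (w + 1) + xx]; };
        return at(x + rw, y + rh) - at(x, y + rh) - at(x + rw, y) + at(x, y);
    }
};

// Two-rectangle (edge) Haar-like feature: left half sum minus right half sum.
long long twoRectHorizontal(const IntegralImage& I, int x, int y, int rw, int rh) {
    int half = rw / 2;
    return I.rectSum(x, y, half, rh) - I.rectSum(x + half, y, half, rh);
}

// Weak classifier (decision stump): fires when polarity * f < polarity * threshold.
// alpha is the vote weight AdaBoost would assign to it.
struct Stump { int x, y, w, h; long long threshold; int polarity; double alpha; };

// A stage sums the alphas of the stumps that fire and compares to a stage threshold.
struct Stage { std::vector<Stump> stumps; double threshold; };

bool stagePasses(const Stage& s, const IntegralImage& I) {
    double score = 0.0;
    for (const auto& st : s.stumps) {
        long long f = twoRectHorizontal(I, st.x, st.y, st.w, st.h);
        if (st.polarity * f < st.polarity * st.threshold) score += st.alpha;
    }
    return score >= s.threshold;
}

// Cascade: the window is accepted only if every stage passes; most
// non-object windows are rejected by the first, cheapest stages.
bool cascadeAccepts(const std::vector<Stage>& cascade, const IntegralImage& I) {
    for (const auto& s : cascade)
        if (!stagePasses(s, I)) return false;
    return true;
}
```

The early exit in `cascadeAccepts` is where the speed comes from: in a real detector, the cheap early stages reject most candidate windows, so the expensive later stages run on only a small fraction of them.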