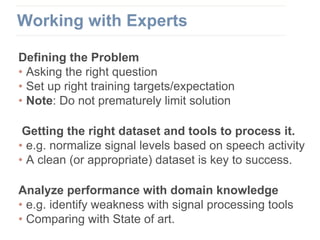

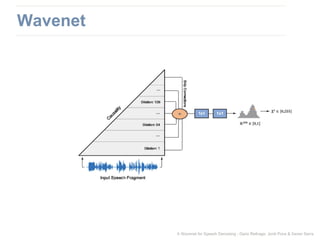

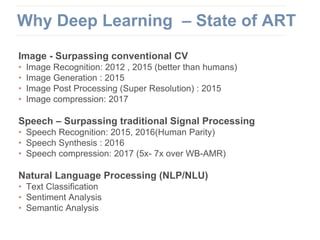

This presentation covers the intersection of deep learning and signal processing, outlining applications and challenges in areas such as noise cancellation, speech recognition, and image processing. It emphasizes collaborating with domain experts and understanding the problem space while applying deep learning techniques. Key insights include the need for clean datasets, the potential of deep learning to surpass traditional methods, and the value of a growth mindset in research.

![Digital Signal Processing: using digital operations to perform signal processing operations. Source: https://en.wikipedia.org/wiki/Digital_signal_processing. Image by en:User:Cburnett (CC-BY-SA-3.0), via Wikimedia Commons](https://image.slidesharecdn.com/deeplearningtakesonsignalprocessingv3-180512030946/85/Deep-learning-takes-on-Signal-Processing-6-320.jpg)
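As a small illustration of what "digital operations performing signal processing" can mean in practice, the sketch below smooths a sampled signal with a causal moving-average filter. It is not taken from the slides: the function name `moving_average`, the `window` parameter, and the sample values are all assumptions chosen for illustration.

```python
def moving_average(samples, window=3):
    """Smooth a sampled signal with a causal moving-average (FIR) filter.

    Hypothetical helper for illustration; not part of the original slides.
    Each output is the mean of the current sample and up to window - 1
    preceding samples (fewer at the start of the signal).
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    running_sum = 0.0
    for i, x in enumerate(samples):
        running_sum += x
        if i >= window:
            # Drop the sample that just left the window.
            running_sum -= samples[i - window]
        count = min(i + 1, window)
        smoothed.append(running_sum / count)
    return smoothed


# Made-up alternating signal standing in for a noisy measurement.
noisy = [0.0, 3.0, 0.0, 3.0, 0.0, 3.0]
smoothed = moving_average(noisy, window=2)
print(smoothed)
```

Averaging over two samples flattens the alternation in this toy input. A real pipeline would more likely use a designed filter from a DSP library than a hand-rolled loop.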

![Why not Deep Learning: unclear problem definition; unclear pass/fail metric; requires computing power, memory, and data (lots of data, substantially more than humans); kind of a black box, hard to draw the right conclusions (very easy to draw the wrong conclusions)](https://image.slidesharecdn.com/deeplearningtakesonsignalprocessingv3-180512030946/85/Deep-learning-takes-on-Signal-Processing-18-320.jpg)