
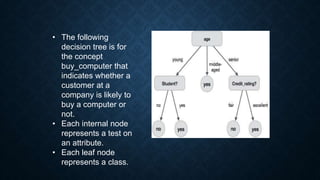

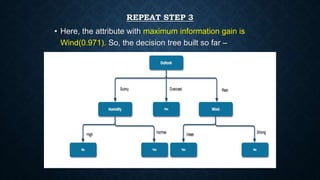

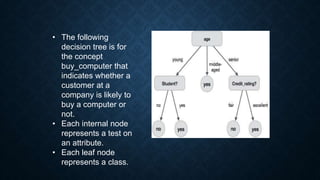

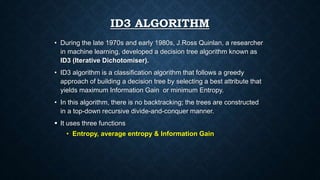

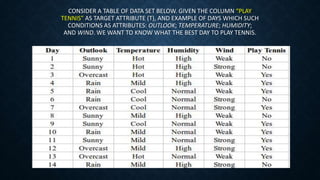

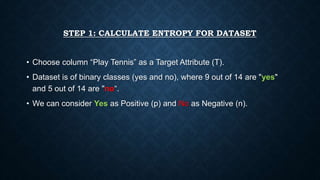

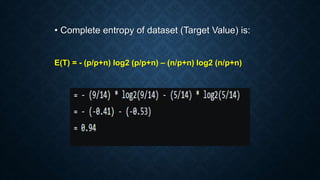

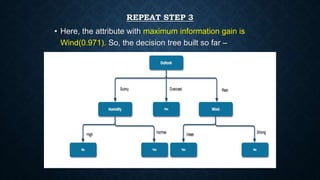

A decision tree is a supervised machine learning model with a flowchart-like structure: each internal node tests an attribute, each branch corresponds to an outcome of that test, and each leaf node holds a class label. The ID3 algorithm, developed by J. Ross Quinlan, builds such a tree by choosing, at each node, the attribute with the highest information gain, that is, the largest reduction in class entropy. It is popular because it handles multidimensional data without extensive data cleaning. Decision trees classify quickly and can accommodate both categorical and continuous variables, although ID3 itself splits on categorical attributes, so continuous values are typically discretized first.
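To make the information-gain criterion concrete, here is a minimal sketch of how an ID3-style split could be scored. It is not taken from the slides: the function names (`entropy`, `information_gain`, `best_attribute`) and the four-row toy dataset are hypothetical and chosen only for illustration. Entropy is measured in bits, and the gain of an attribute is the parent entropy minus the size-weighted entropy of the partitions that attribute produces.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, labels, attribute):
    """Entropy reduction obtained by splitting the rows on one categorical attribute."""
    total = len(labels)
    # Group the class labels by the value each row takes for this attribute.
    partitions = {}
    for row, label in zip(rows, labels):
        partitions.setdefault(row[attribute], []).append(label)
    # Weighted average entropy of the partitions after the split.
    remainder = sum(len(part) / total * entropy(part) for part in partitions.values())
    return entropy(labels) - remainder


def best_attribute(rows, labels, attributes):
    """Pick the attribute with the highest information gain, as ID3 does at each node."""
    return max(attributes, key=lambda a: information_gain(rows, labels, a))


# Hypothetical toy data: "outlook" separates the classes perfectly, "windy" not at all.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
]
labels = ["play", "play", "stay", "stay"]

chosen = best_attribute(rows, labels, ["outlook", "windy"])
print(chosen)
```

On this toy data, splitting on `outlook` leaves each partition with a single class, so it would be chosen as the root test. A full ID3 implementation would then recurse on each partition with the remaining attributes until the labels are pure or no attributes are left; that recursion is omitted here to keep the sketch short.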