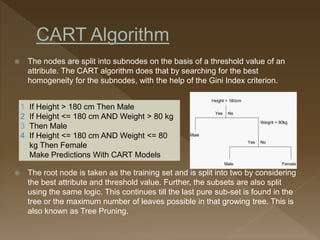

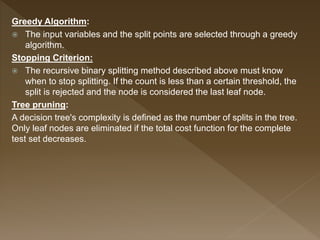

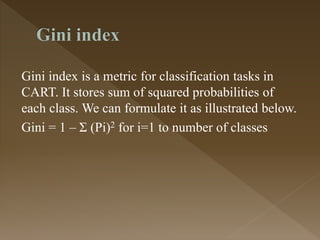

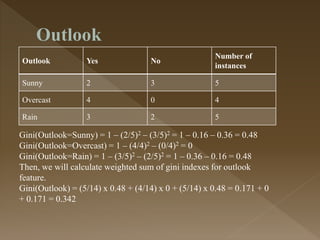

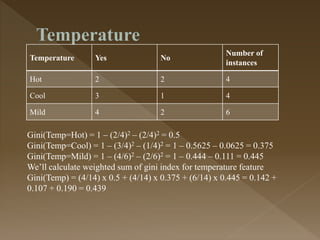

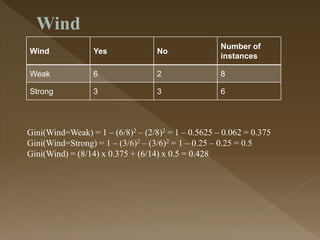

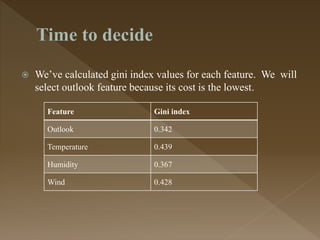

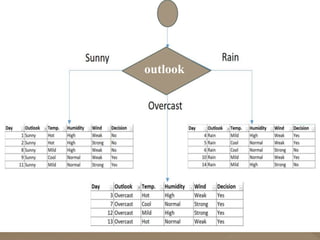

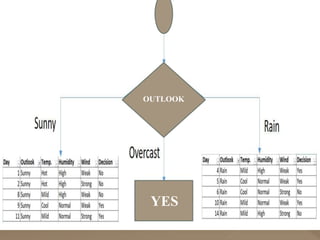

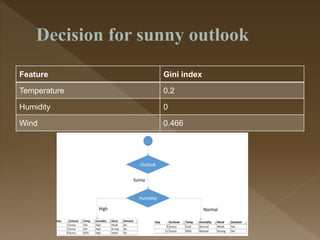

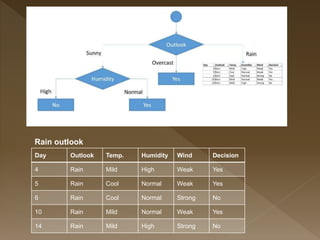

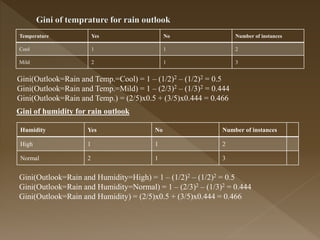

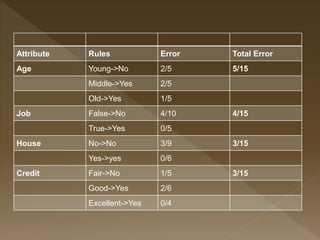

The document discusses the classification and regression tree (CART) algorithm. It describes how CART builds decision trees greedily: starting from the root, it recursively splits each node on a threshold of a single predictor variable. At each node, CART uses the Gini index to pick the split whose resulting subsets are most homogeneous. Because the search is greedy, each split is the best available at that node rather than a guarantee of the best overall tree. A worked example shows how CART constructs a decision tree that classifies examples using predictor variables such as outlook, temperature, humidity, and wind.
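The split selection summarized above can be illustrated with a minimal sketch. It assumes the usual definition of Gini impurity, 1 minus the sum of squared class proportions, and it simplifies CART's categorical handling to one-value-versus-rest binary splits. The six-row dataset and the function names (`gini`, `weighted_gini`, `best_split`) are hypothetical and chosen for illustration only; they are not taken from the document's own example.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels: 1 - sum(p_k ** 2)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def weighted_gini(rows, labels, feature, value):
    """Size-weighted impurity of the binary split feature == value vs. the rest."""
    left = [y for x, y in zip(rows, labels) if x[feature] == value]
    right = [y for x, y in zip(rows, labels) if x[feature] != value]
    n = len(labels)
    return len(left) / n * gini(left) + len(right) / n * gini(right)

def best_split(rows, labels):
    """Greedy step: return the (feature, value) pair with the lowest weighted impurity."""
    candidates = {(f, x[f]) for x in rows for f in x}
    # Ties are broken alphabetically so the result is deterministic.
    return min(candidates, key=lambda fv: (weighted_gini(rows, labels, *fv), fv))

# Hypothetical toy data, not the dataset used in the document.
rows = [
    {"outlook": "sunny", "wind": "weak"},
    {"outlook": "sunny", "wind": "strong"},
    {"outlook": "overcast", "wind": "weak"},
    {"outlook": "rain", "wind": "weak"},
    {"outlook": "rain", "wind": "strong"},
    {"outlook": "overcast", "wind": "strong"},
]
labels = ["no", "no", "yes", "yes", "no", "yes"]

if __name__ == "__main__":
    print("root impurity:", gini(labels))
    print("best split:", best_split(rows, labels))
```

A full tree builder would apply `best_split` to the root, partition the rows, and recurse on each child until a node is pure or a stopping rule is met. For numeric predictors, the candidate set would be thresholds between sorted values rather than category values.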