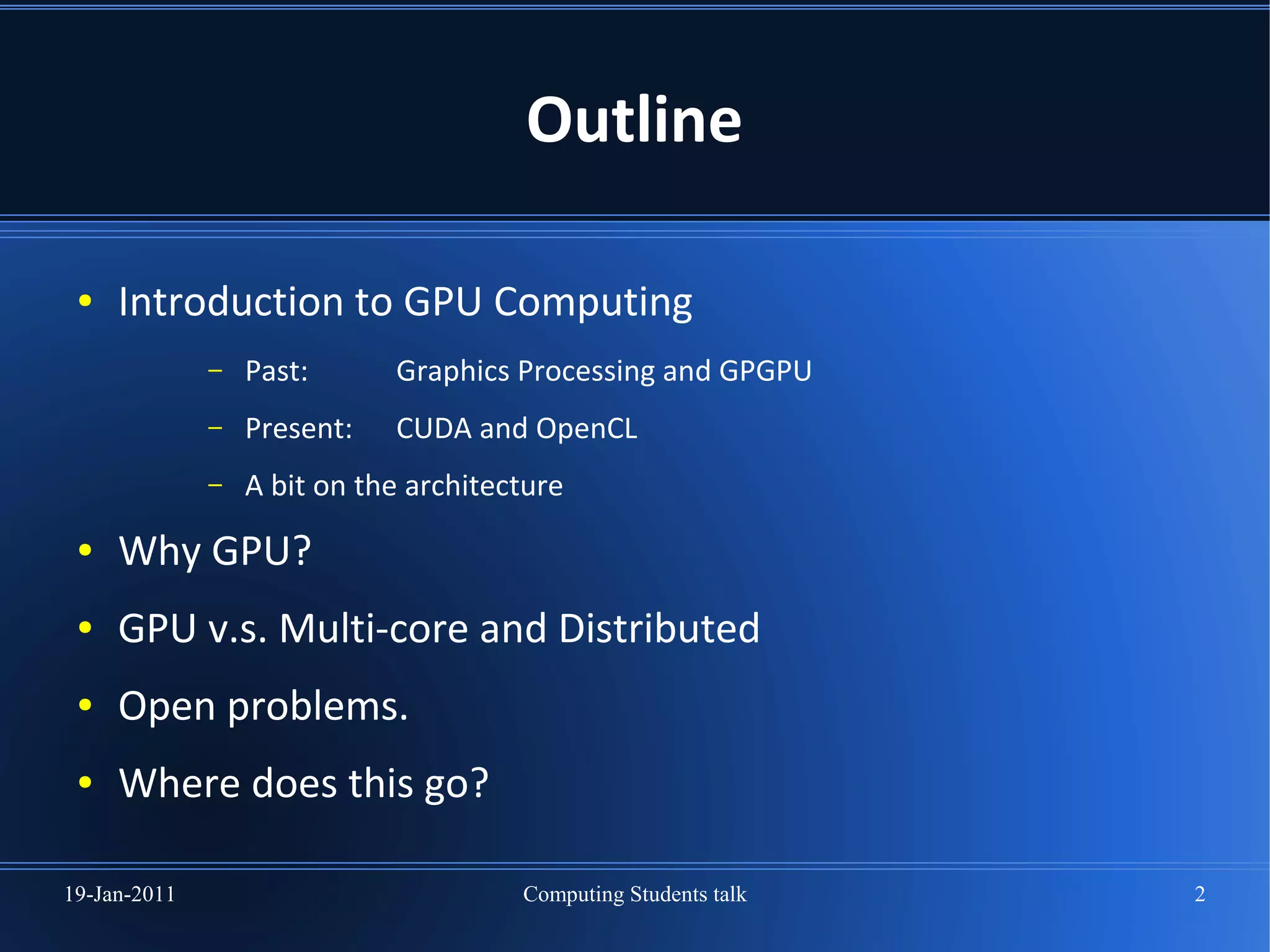

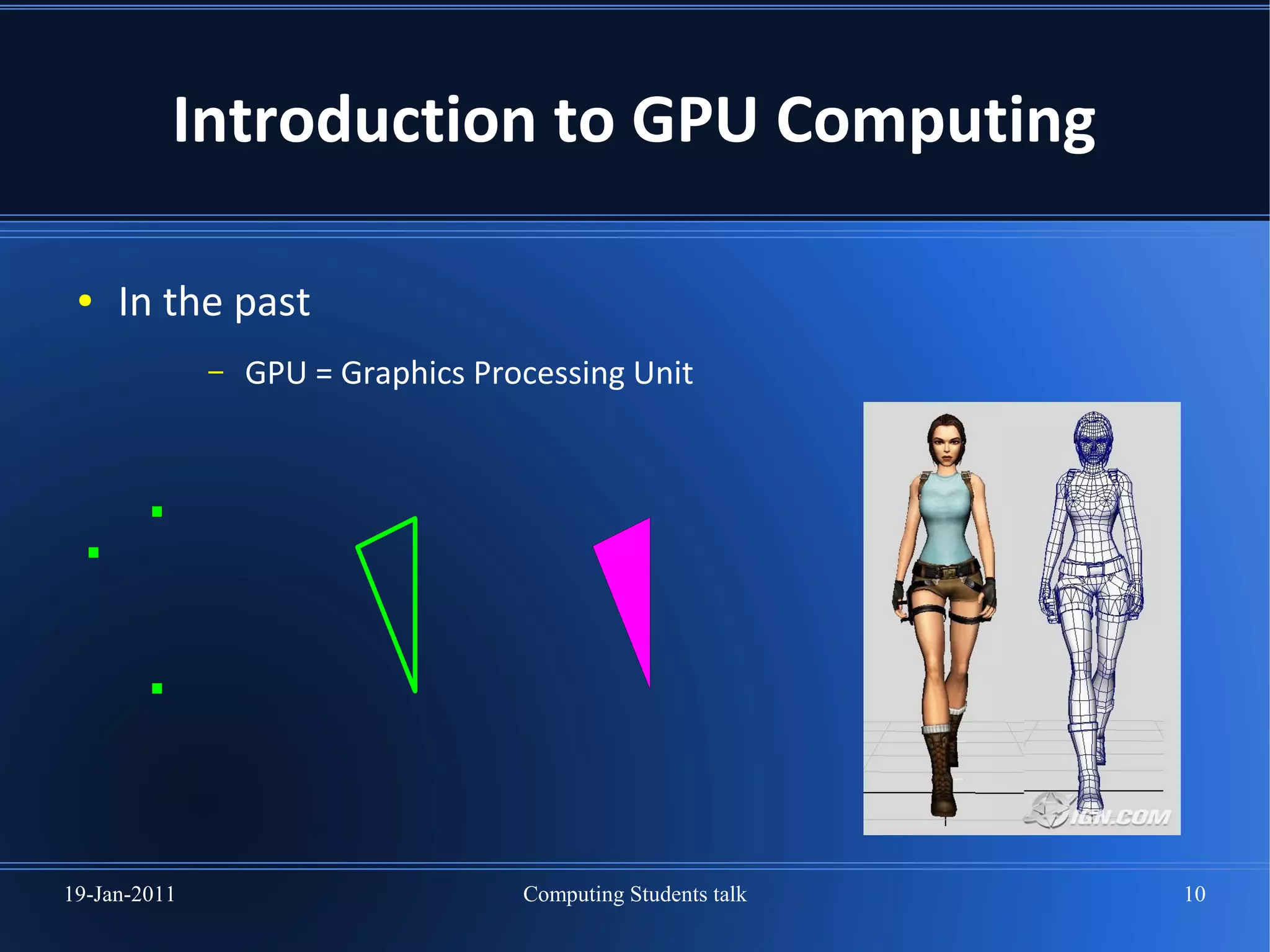

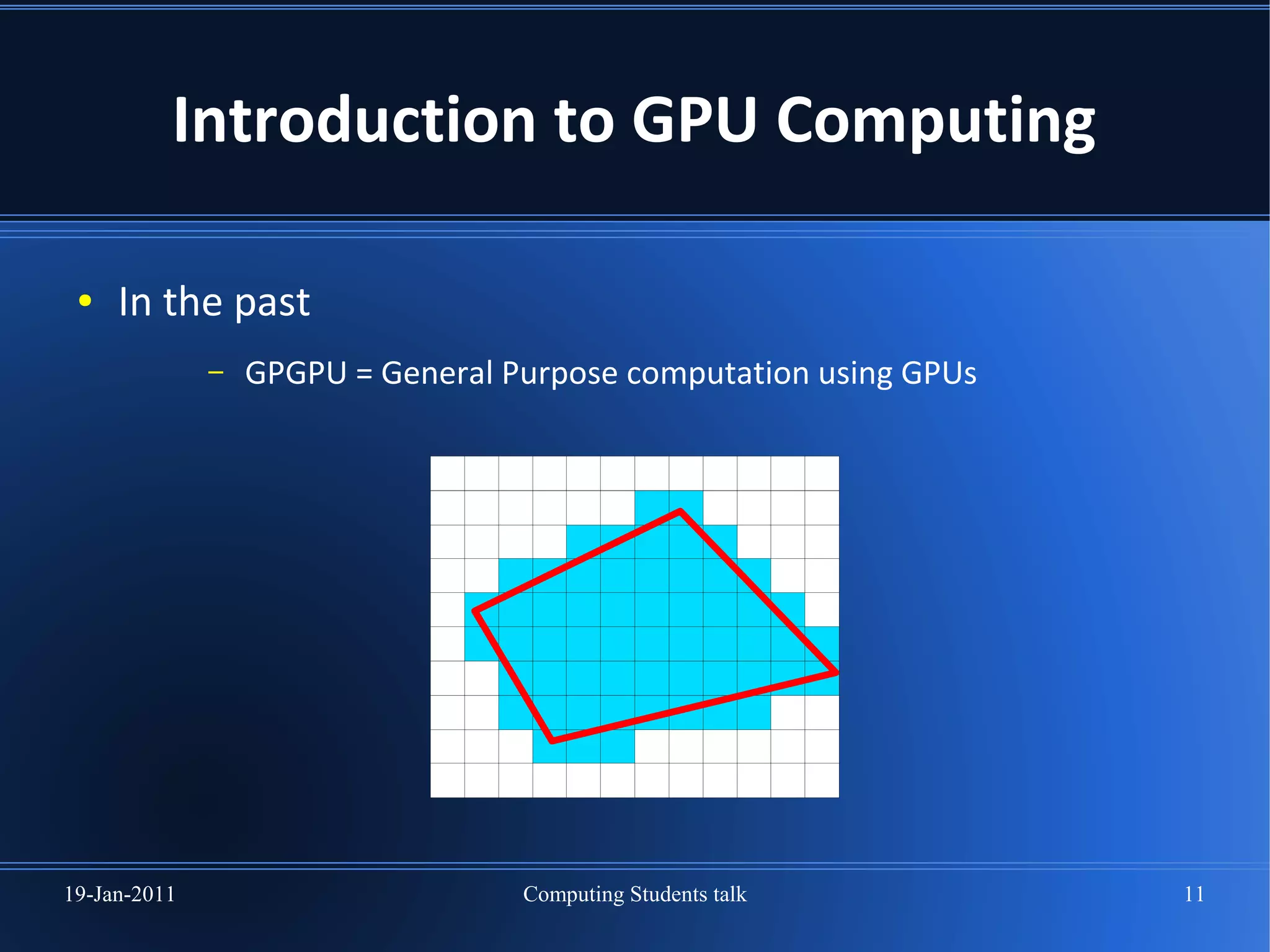

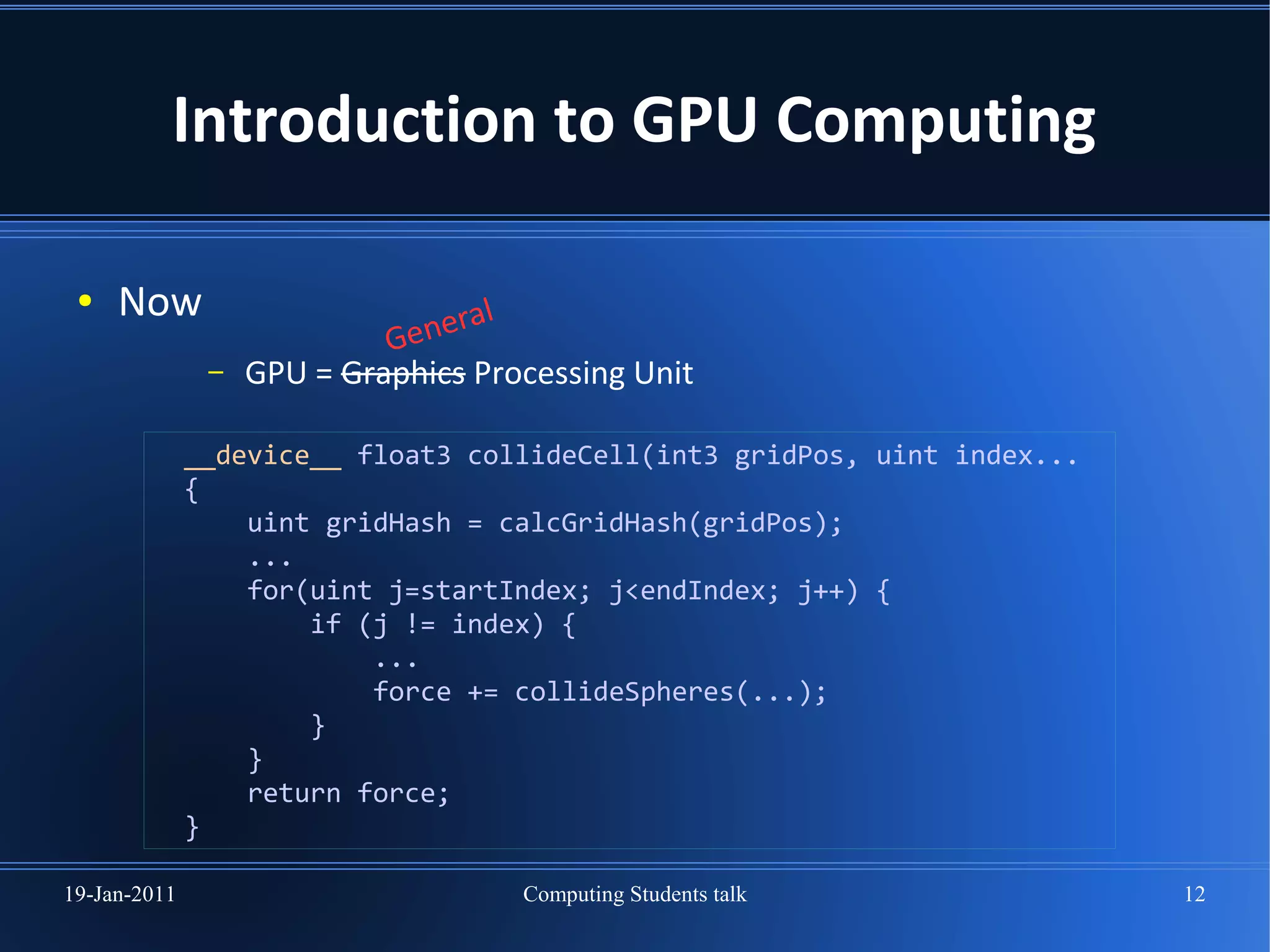

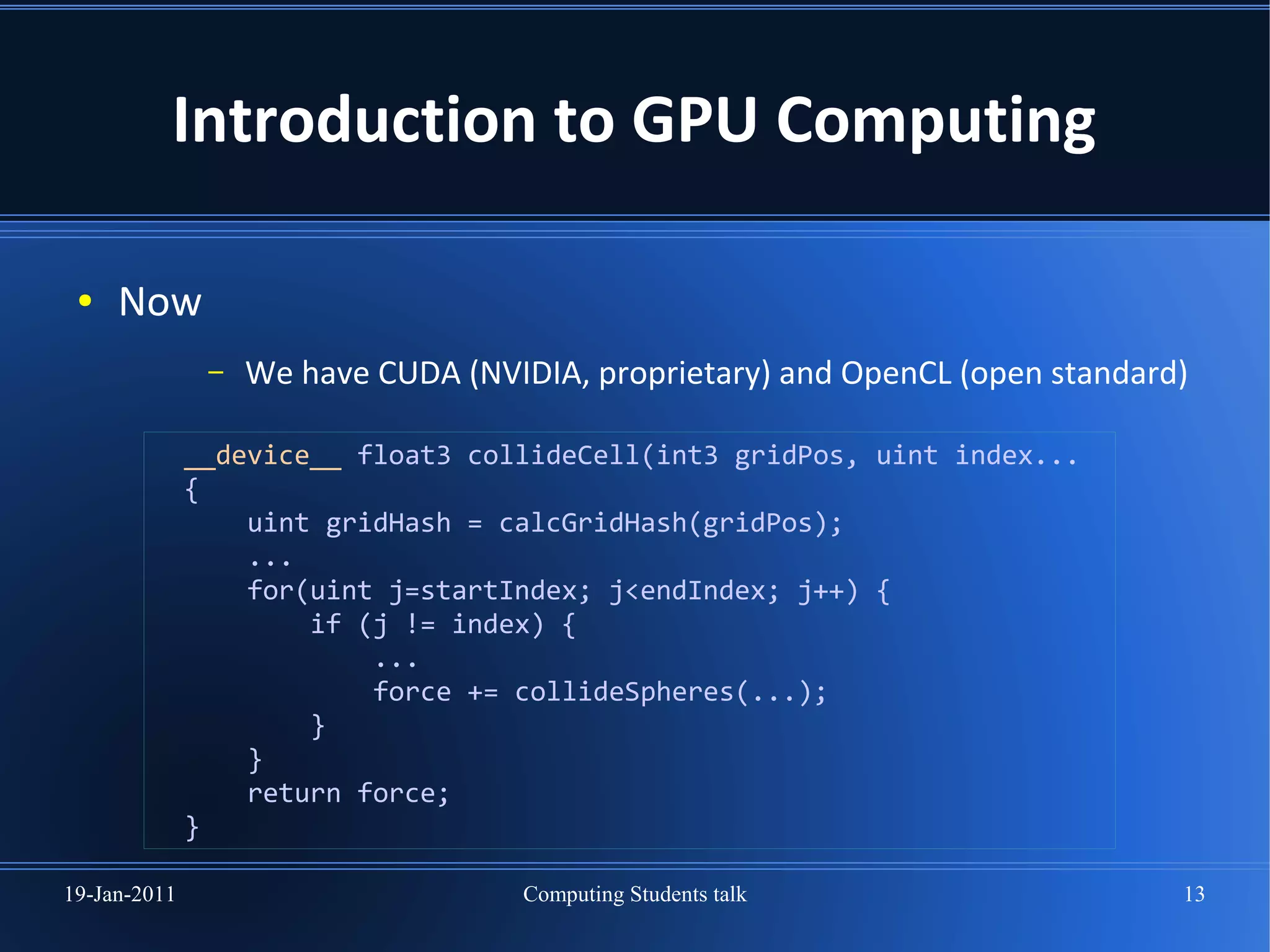

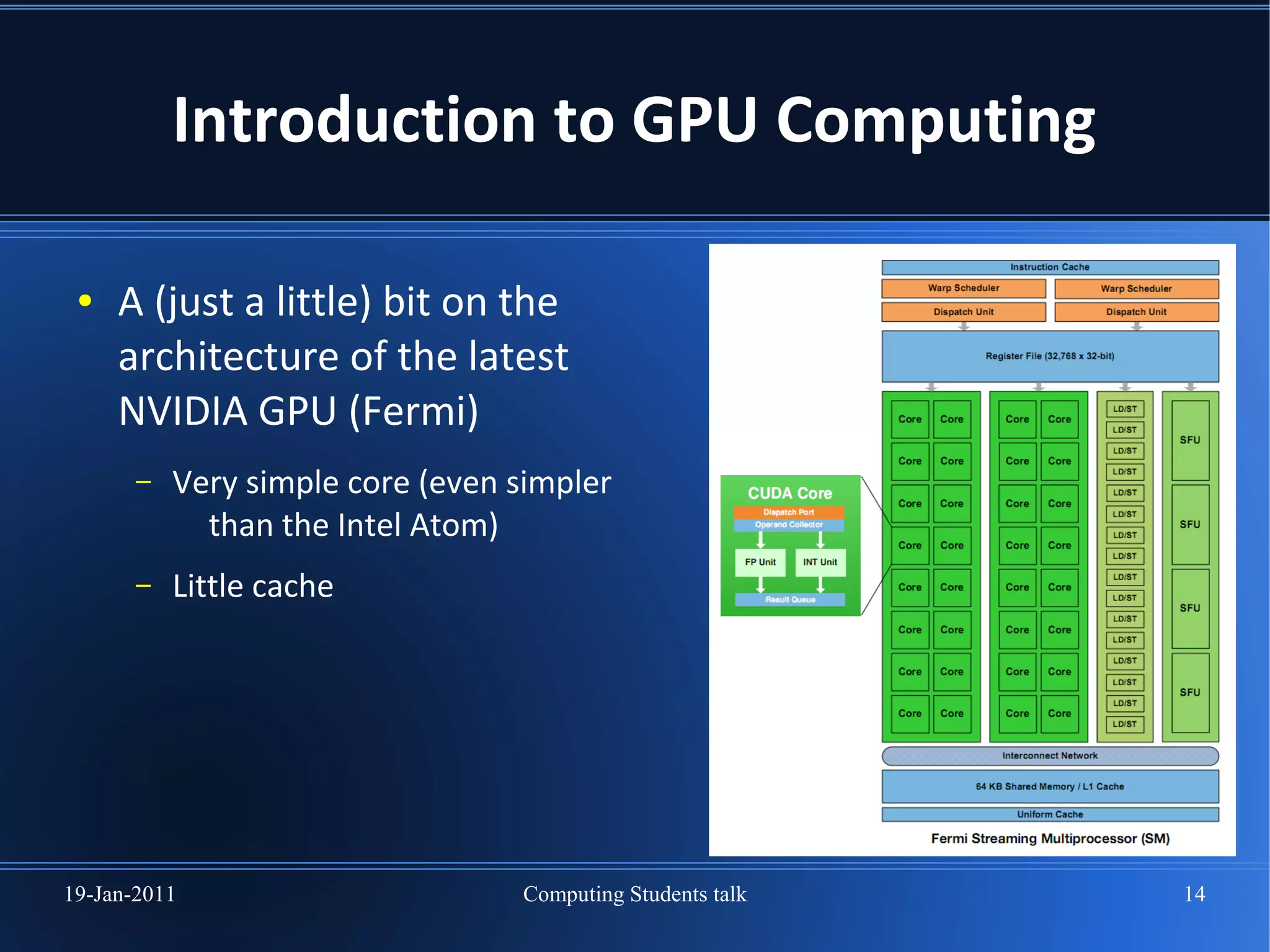

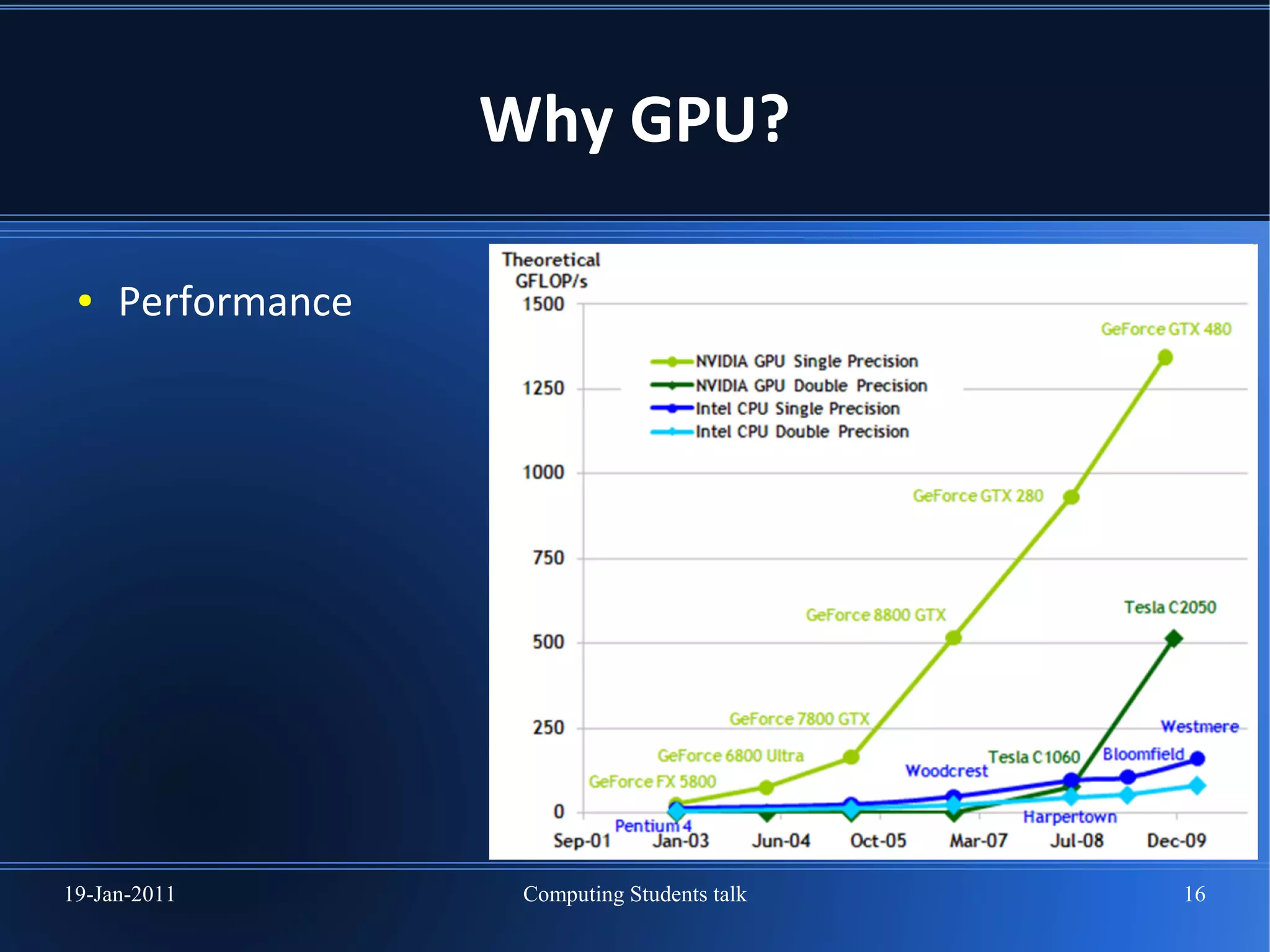

This document discusses research in GPU computing. It introduces the field, explaining how GPUs were originally designed for graphics processing but are now used for general-purpose computation through frameworks such as CUDA and OpenCL. It discusses advantages of GPUs, such as their large number of cores compared to CPUs. It also outlines open problems in the field, such as developing new data structures and algorithms suited to massive parallelism. The document suggests that GPU computing will continue to grow in importance as computing moves toward more highly multithreaded architectures.