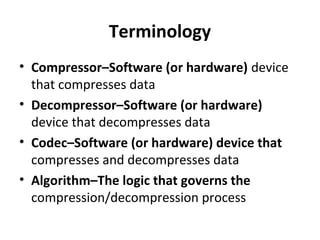

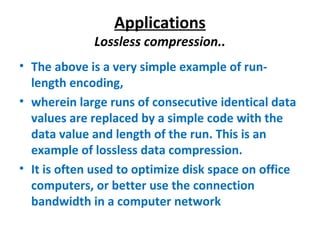

This document discusses different compression techniques including lossless and lossy compression. Lossless compression recovers the exact original data after compression and is used for databases and documents. Lossy compression results in some loss of accuracy but allows for greater compression and is used for images and audio. Common lossless compression algorithms discussed include run-length encoding, Huffman coding, and arithmetic coding. Lossy compression is used in applications like digital cameras to increase storage capacity with minimal quality degradation.

![• The first well-known method for compressing

digital signals is now known as Shannon- Fano

coding. Shannon and Fano [~1948]

simultaneously developed this algorithm which

assigns binary codewords to unique symbols that

appear within a given data file.

• While Shannon-Fano coding was a great leap

forward, it had the unfortunate luck to be quickly

superseded by an even more efficient coding

system : Huffman Coding.](https://image.slidesharecdn.com/compressiontechniques-130202041230-phpapp01/85/Compression-techniques-8-320.jpg)

![• Huffman coding [1952] shares most

characteristics of Shannon-Fano coding.

• Huffman coding could perform effective data

compression by reducing the amount of

redundancy in the coding of symbols.

• It has been proven to be the most efficient

fixed-length coding method available](https://image.slidesharecdn.com/compressiontechniques-130202041230-phpapp01/85/Compression-techniques-9-320.jpg)