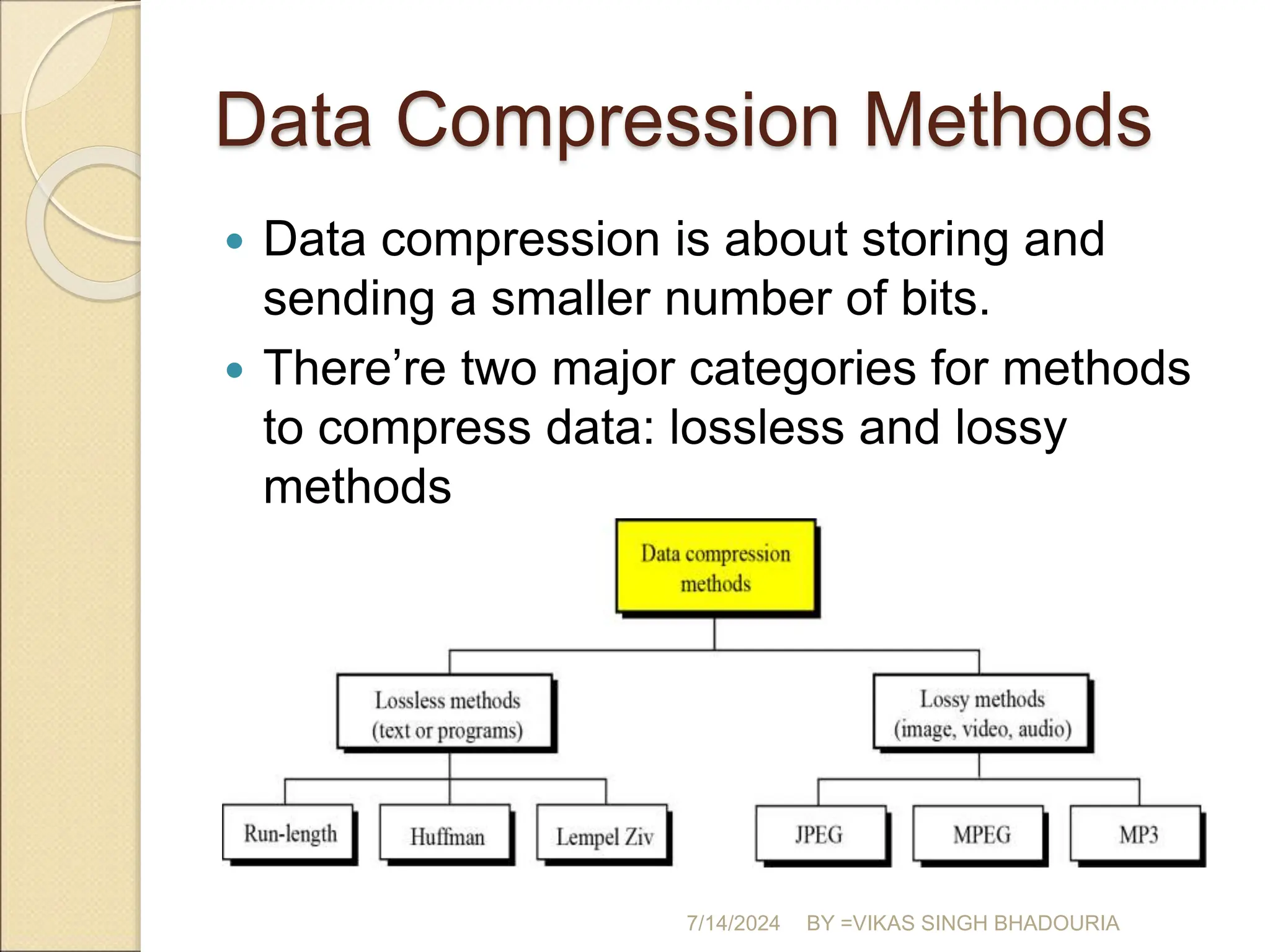

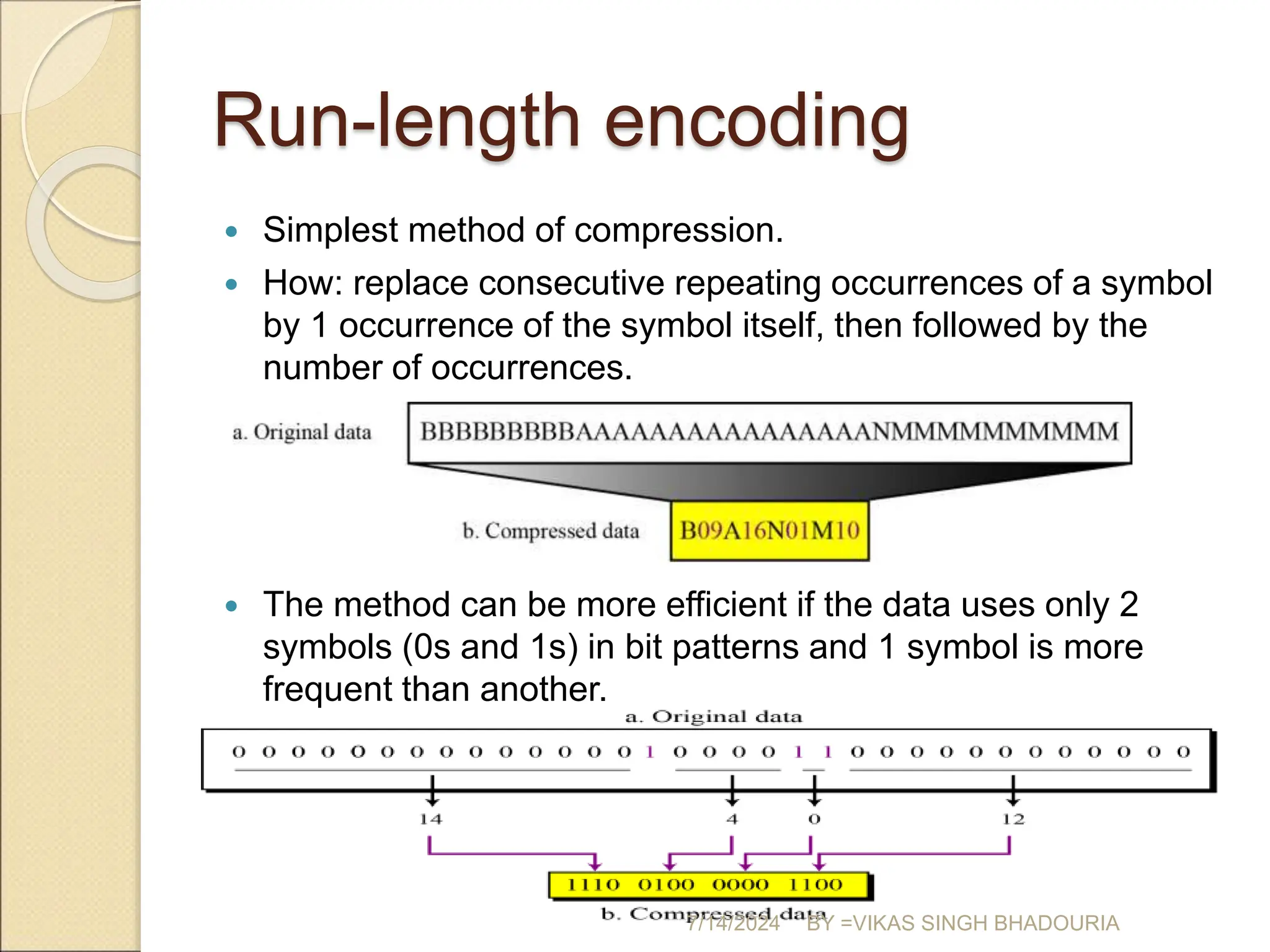

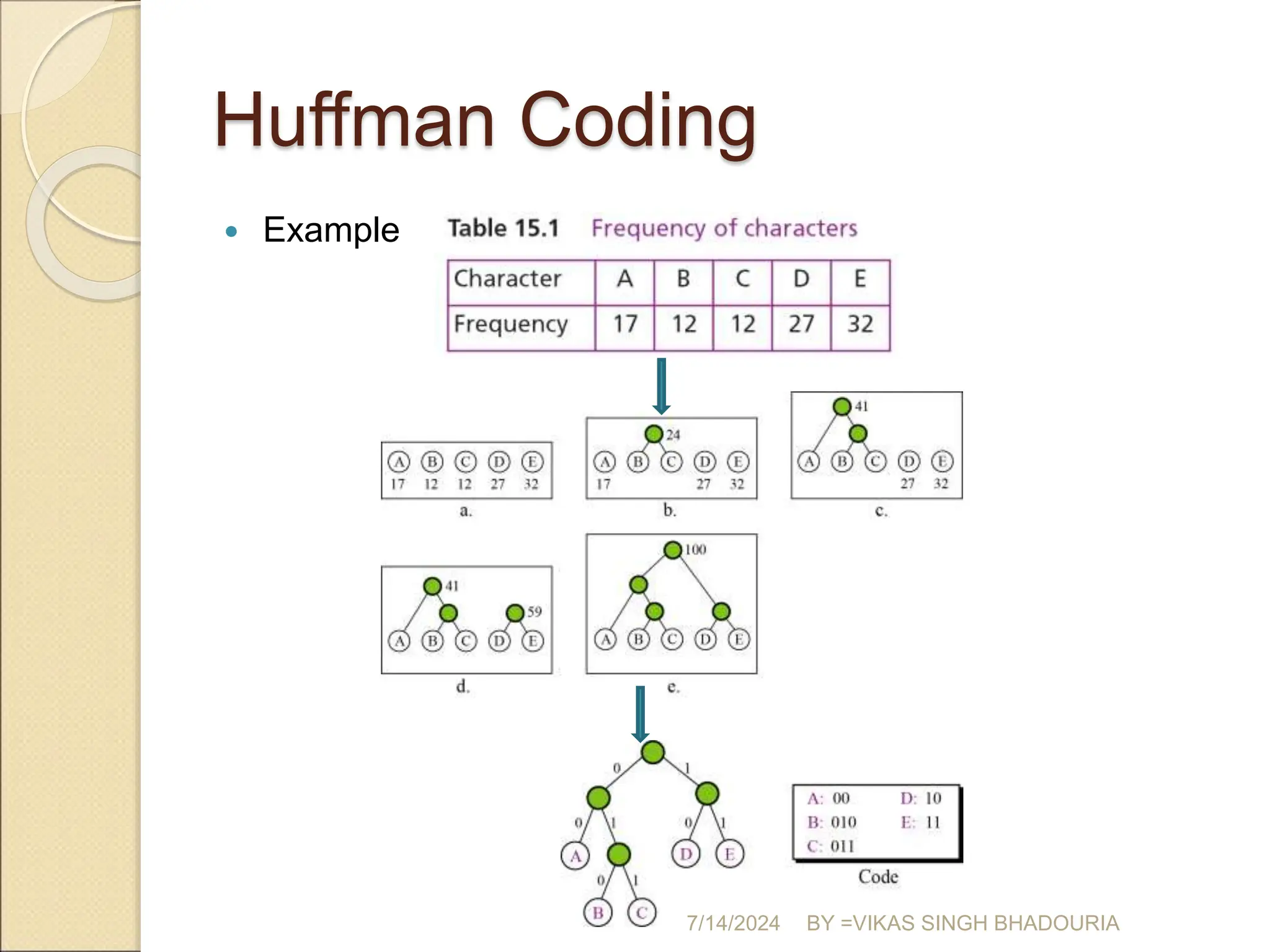

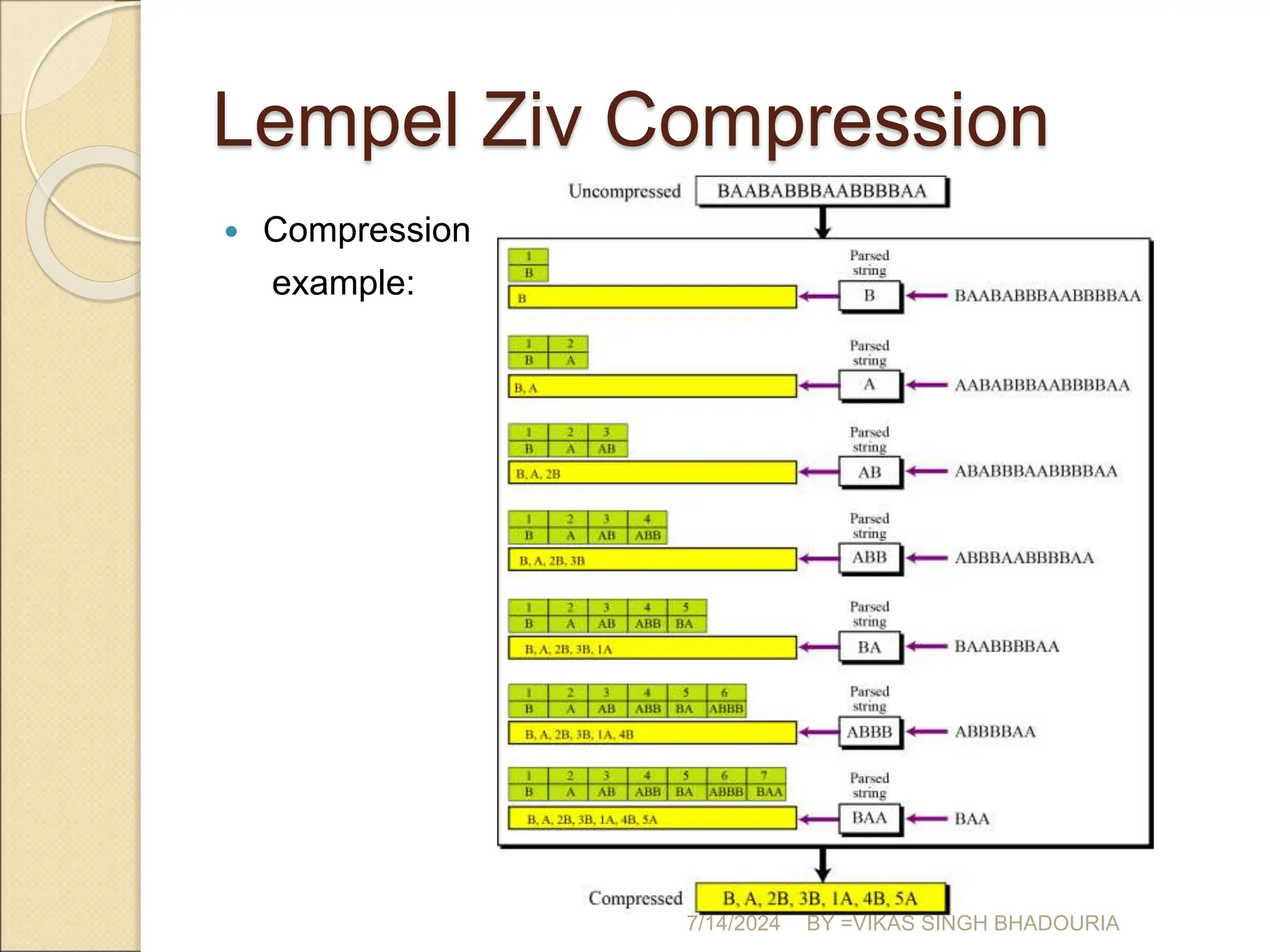

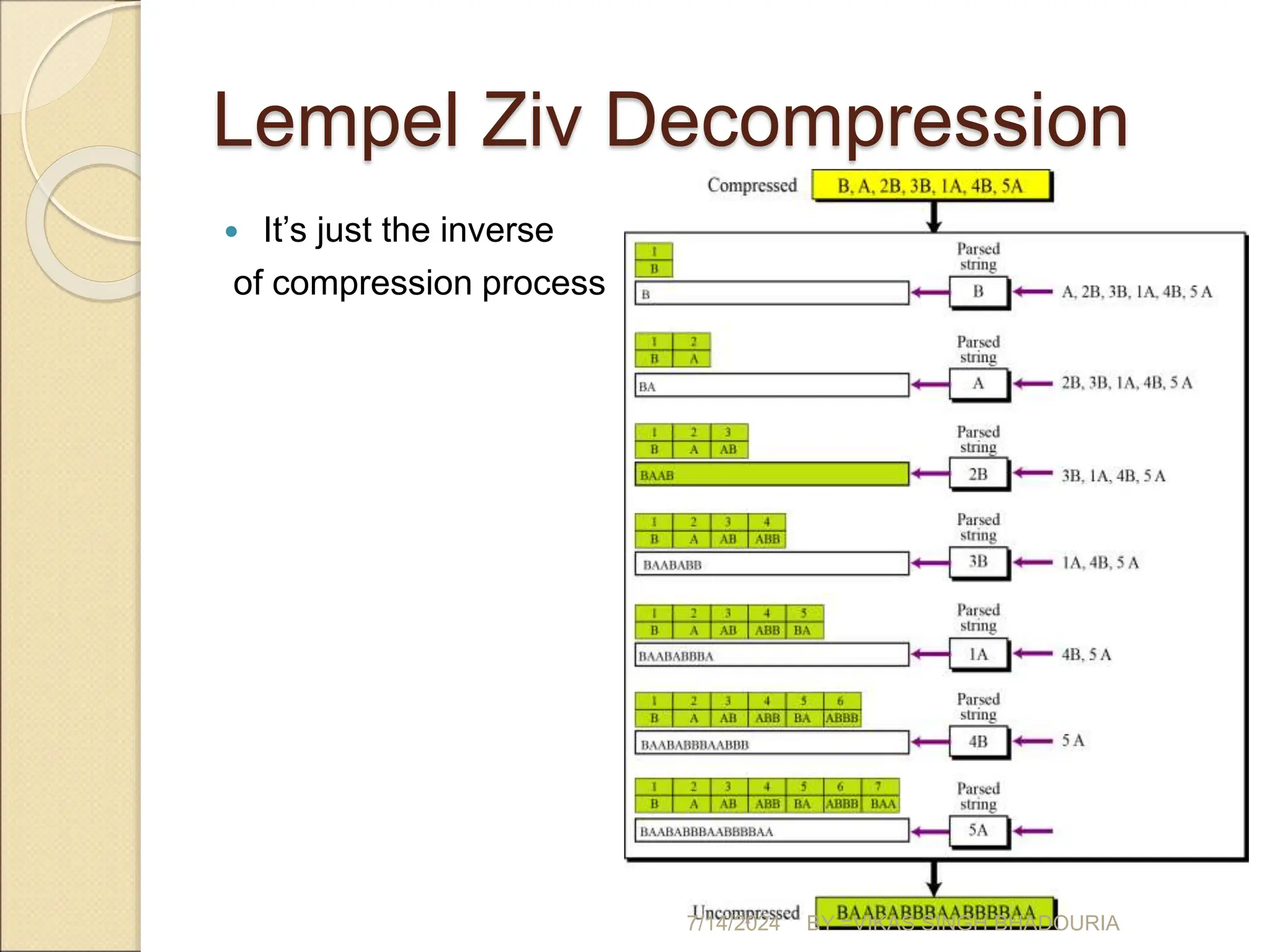

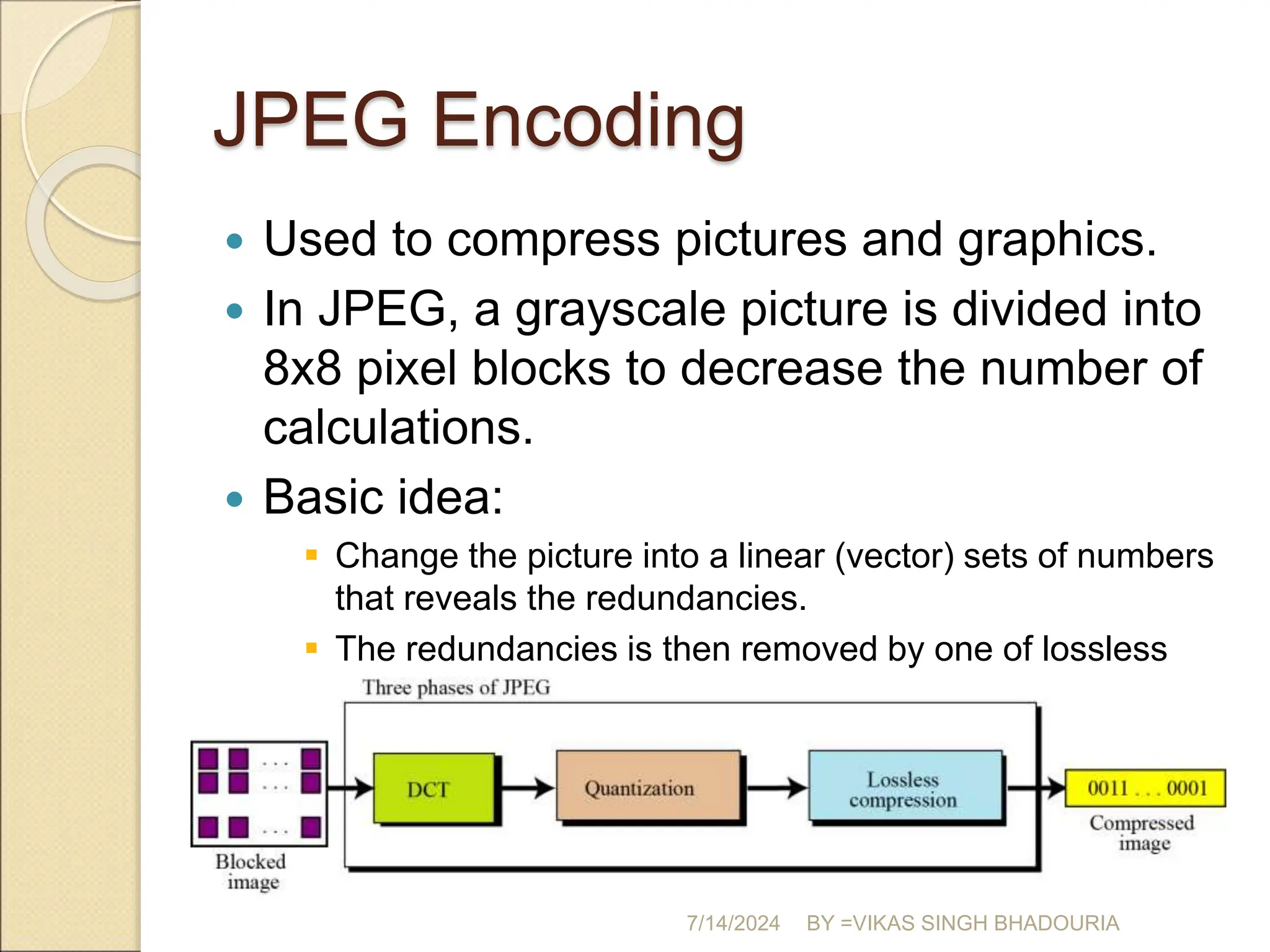

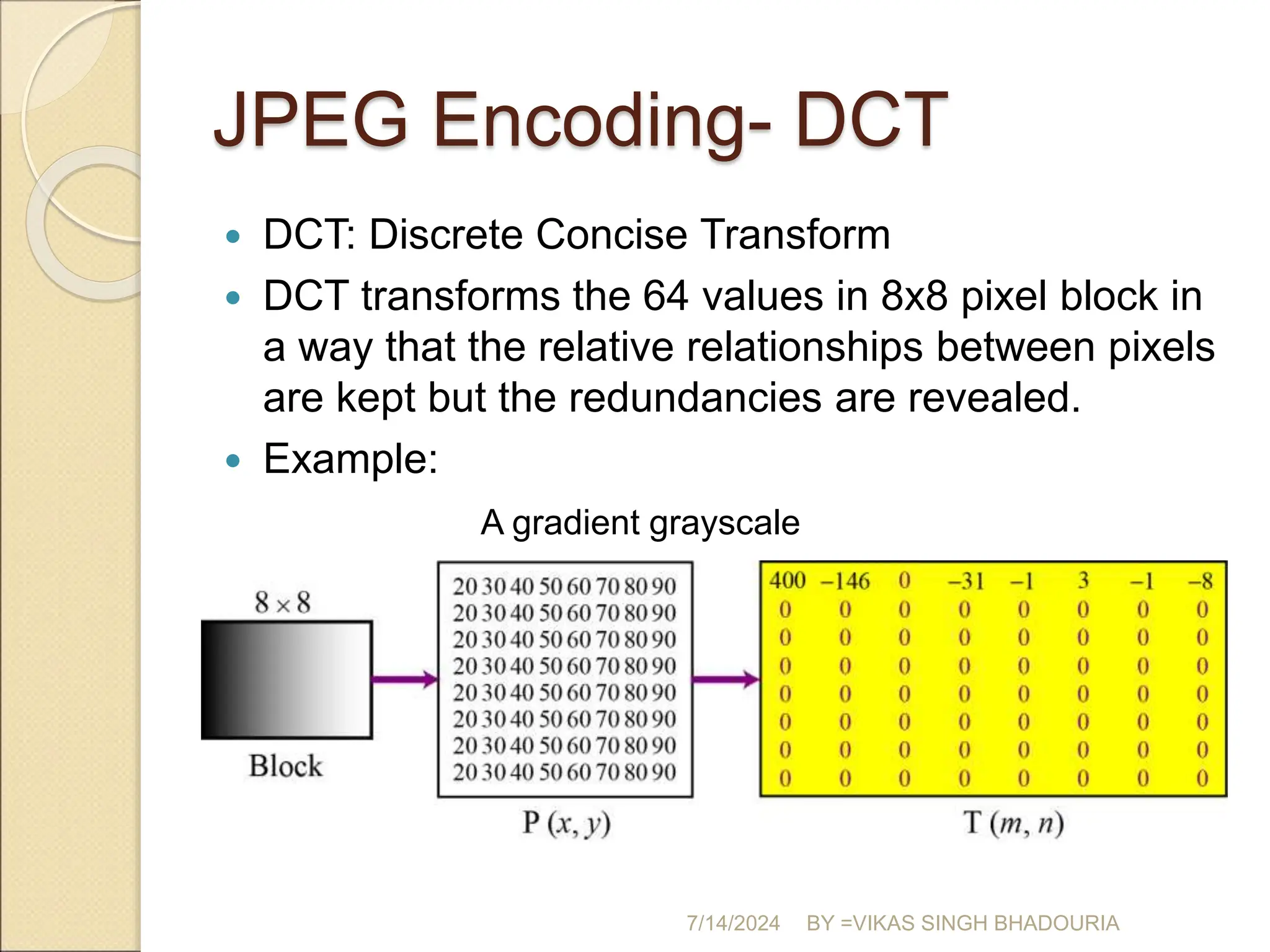

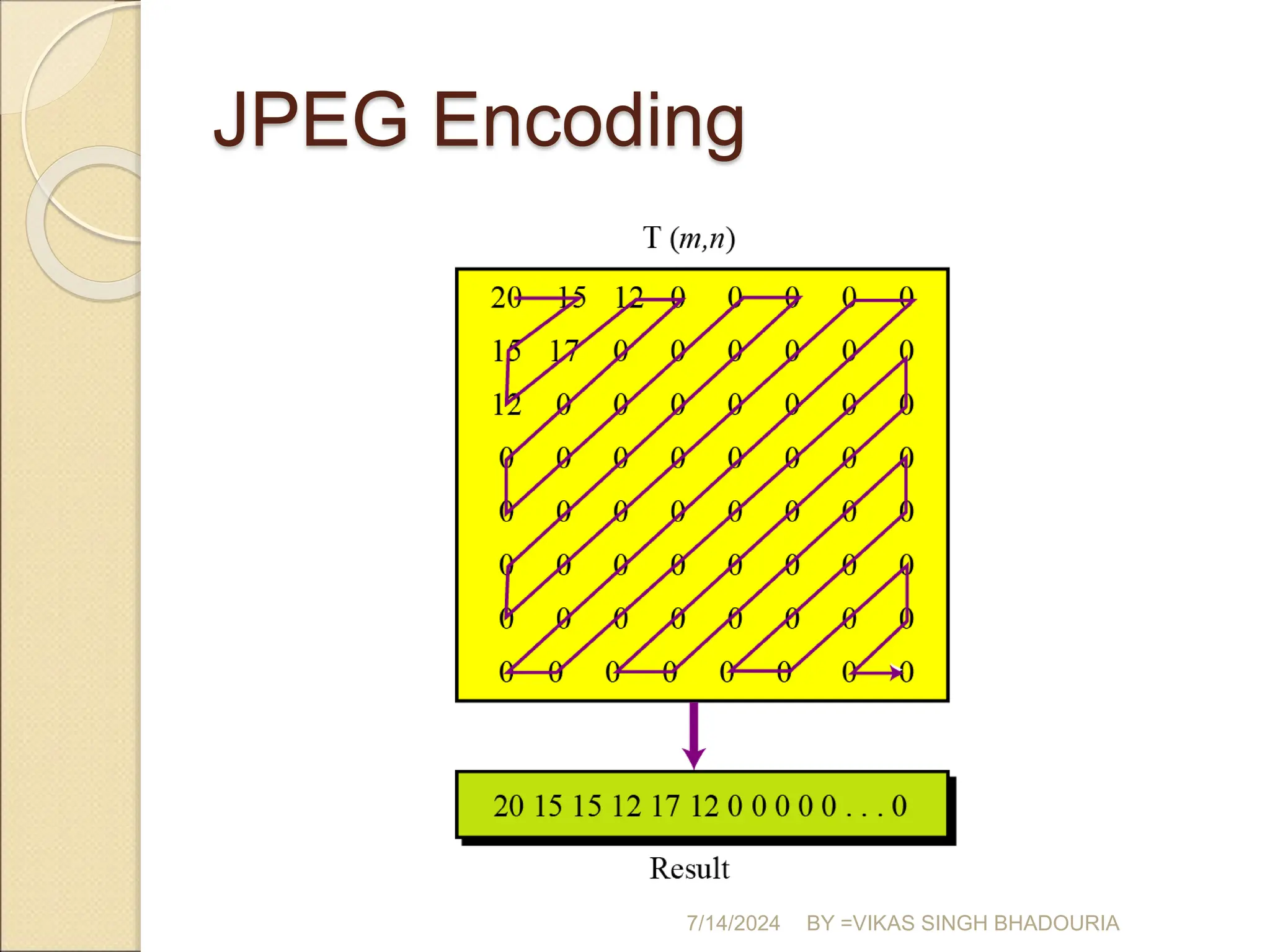

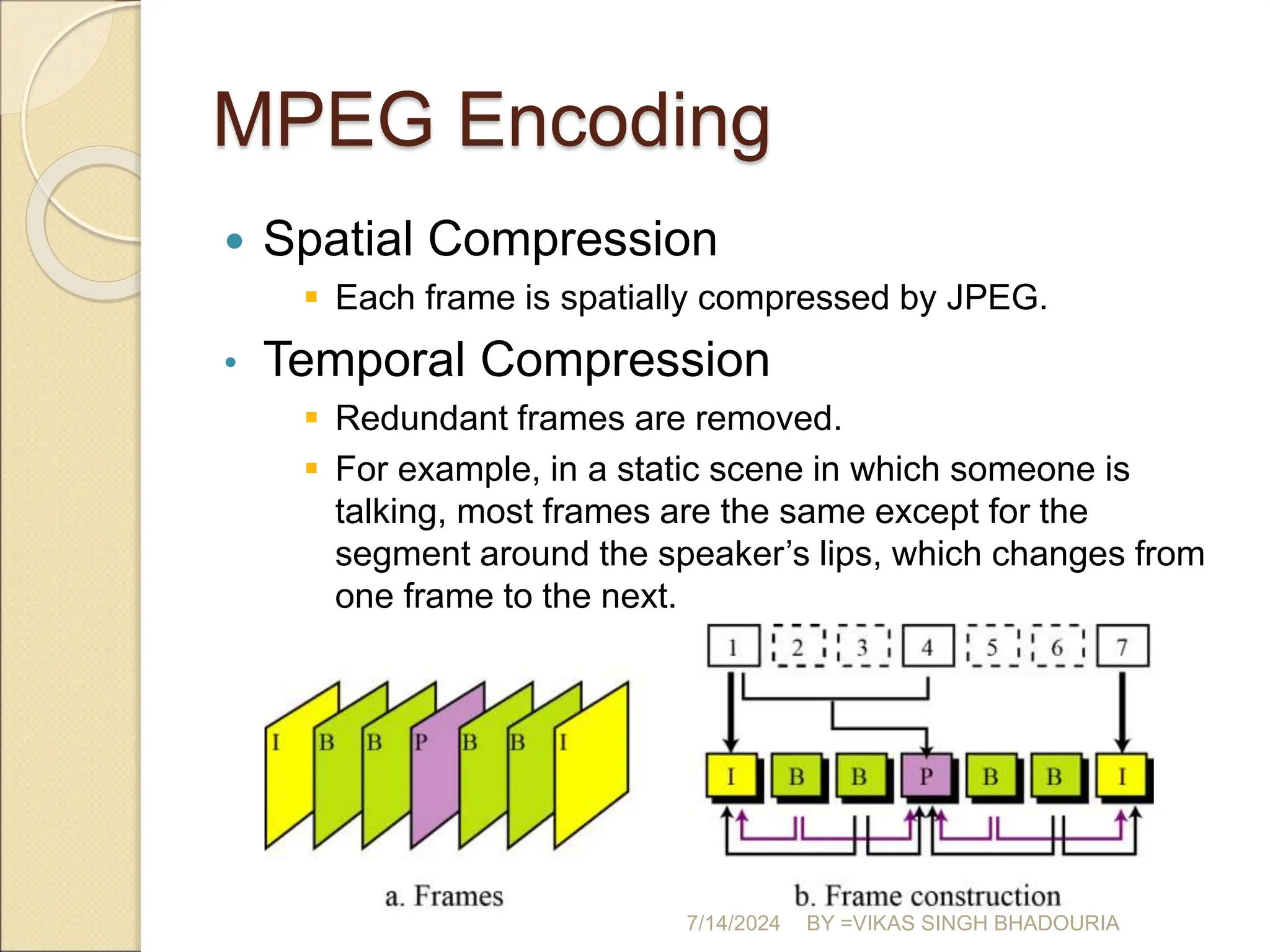

The document discusses data compression, explaining why it matters for efficient storage and transmission and describing its two main categories: lossless and lossy compression. It details the lossless techniques of run-length encoding, Huffman coding, and Lempel-Ziv encoding, and covers the lossy methods JPEG for images, MPEG for video, and MP3 for audio. It also presents entropy as the measure of a message's information content.
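As an illustration of two of the ideas named above, the following is a minimal Python sketch of run-length encoding and of Shannon entropy. It is not taken from the slides; the function names `rle_encode`, `rle_decode`, and `shannon_entropy` are hypothetical, and the sketch assumes the input is a plain string of symbols.

```python
import math
from collections import Counter
from itertools import groupby


def rle_encode(text):
    """Collapse each run of identical characters into a (char, count) pair."""
    return [(ch, len(list(run))) for ch, run in groupby(text)]


def rle_decode(pairs):
    """Expand (char, count) pairs back into the original string."""
    return "".join(ch * count for ch, count in pairs)


def shannon_entropy(text):
    """Average information content of the string, in bits per symbol."""
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


sample = "AAAABBBCCD"
encoded = rle_encode(sample)
print(encoded)                  # list of (symbol, run length) pairs
print(rle_decode(encoded))      # reproduces the sample string
print(shannon_entropy(sample))  # bits per symbol for this sample
```

Run-length encoding is lossless, so decoding the pairs recovers the input exactly. It pays off only when the data contains long runs; on text with few repeats the pair list can be larger than the input.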