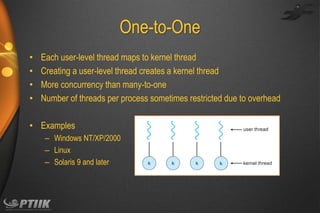

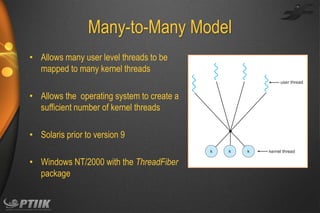

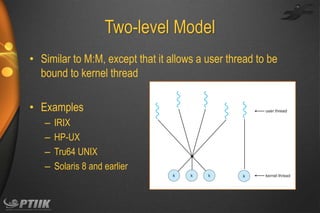

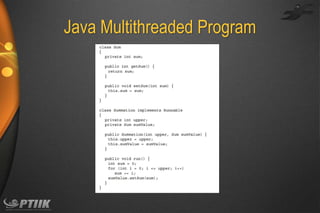

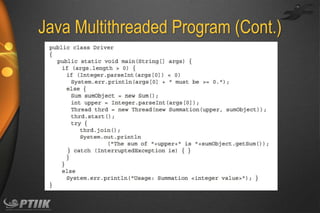

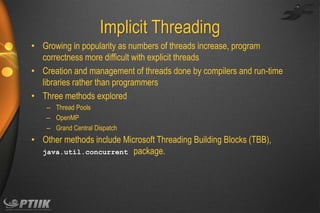

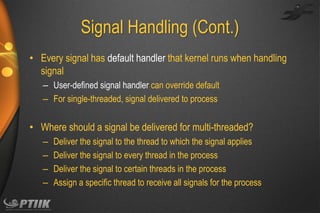

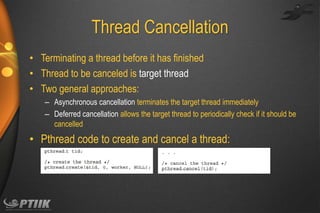

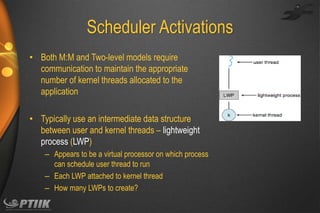

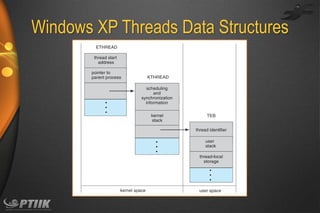

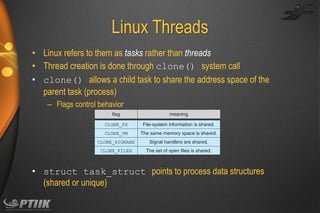

This chapter covers threads and multithreaded programming. It describes the many-to-one, one-to-one, and many-to-many threading models, then explains three common thread libraries: Pthreads, Windows threads, and Java threads. It introduces implicit threading techniques such as thread pools and OpenMP, and examines issues that arise in multithreaded programs, including signal handling and thread cancellation. It closes with an overview of how Windows and Linux implement threads.
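
To make the thread pool idea concrete, here is a minimal sketch of one. It uses Python's standard `concurrent.futures` module rather than any of the libraries the chapter names, and the helper names `square` and `run_pool` are illustrative assumptions, not taken from the chapter. The point it shows is that a fixed set of worker threads is created once and reused for many tasks, instead of one thread being created per task.

```python
from concurrent.futures import ThreadPoolExecutor
import threading


def square(n):
    """Task executed by a pool worker (hypothetical example task).

    Returns the result together with the name of the thread that ran it,
    so the caller can see that workers are reused across tasks.
    """
    return n * n, threading.current_thread().name


def run_pool(values, workers=3):
    """Submit one task per value to a pool of at most `workers` threads."""
    # The executor creates up to `workers` threads and hands queued tasks
    # to whichever worker is free; the `with` block waits for completion.
    with ThreadPoolExecutor(max_workers=workers,
                            thread_name_prefix="worker") as pool:
        # map() returns results in the order of the inputs.
        return list(pool.map(square, values))


if __name__ == "__main__":
    results = run_pool(range(8))
    print([value for value, _ in results])
    # Eight tasks, but no more than three distinct worker threads.
    print(sorted({name for _, name in results}))
```

The caller never creates or joins a thread directly. That is what makes this approach "implicit": the pool decides how many threads exist and which one runs each task.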