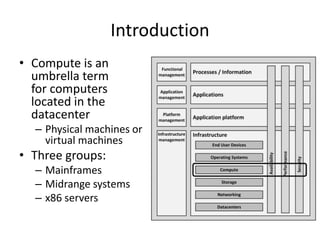

The document provides an overview of compute infrastructure, including:

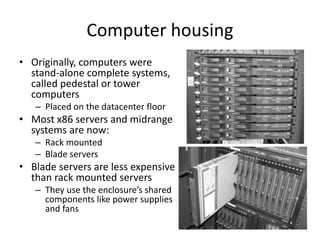

- Physical computers contain components like CPUs, memory, ports, and connectivity.

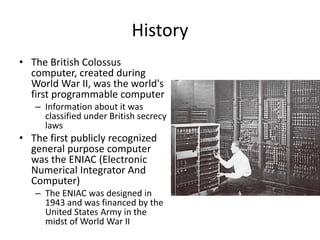

- Early computers used vacuum tubes and punch cards. Transistors and, later, integrated circuits (ICs) reduced size and cost.

- Common processor architectures include x86 (Intel and AMD), ARM, IBM POWER, and Oracle SPARC.

- Early computer memory used magnetic cores, which were later replaced by RAM chips. The two main RAM types are SRAM and DRAM.

- The BIOS controls a computer from power on until the operating system loads.
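
As a small illustration of the processor families listed above, the following Python sketch reports which family the current machine belongs to. It relies on the standard-library `platform.machine()` call. The `ARCH_FAMILIES` table and the `classify_arch` helper are hypothetical names introduced here, and the prefix list is an assumption that covers only common machine strings, not every value an operating system may report.

```python
import platform

# Hypothetical mapping from machine-name prefixes to the processor
# families named in the list above. The prefixes are assumptions based
# on commonly reported values and are not exhaustive.
ARCH_FAMILIES = {
    "x86": ("x86_64", "amd64", "i386", "i686", "x86"),
    "ARM": ("aarch64", "arm"),
    "POWER": ("ppc", "powerpc"),
    "SPARC": ("sparc",),
}


def classify_arch(machine: str) -> str:
    """Return the processor family for a machine string, or 'unknown'."""
    name = machine.lower()
    for family, prefixes in ARCH_FAMILIES.items():
        # str.startswith accepts a tuple and matches any of its entries.
        if name.startswith(prefixes):
            return family
    return "unknown"


if __name__ == "__main__":
    machine = platform.machine()
    print(f"{machine} -> {classify_arch(machine)}")
```

Matching on prefixes rather than exact strings keeps the table short, since variants such as `armv7l` or `ppc64le` fall under the same family. Unrecognized strings return `"unknown"` instead of a guess.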