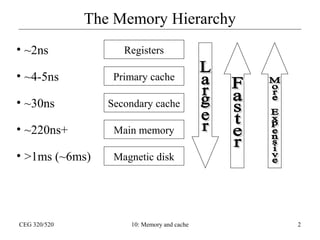

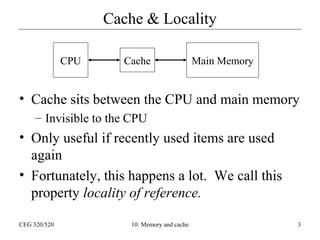

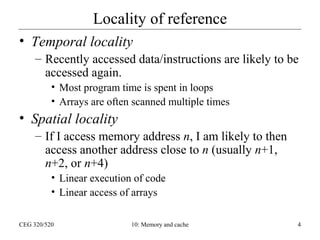

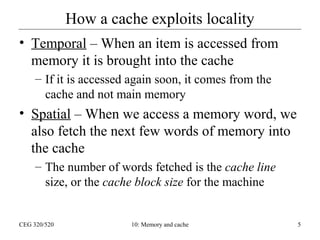

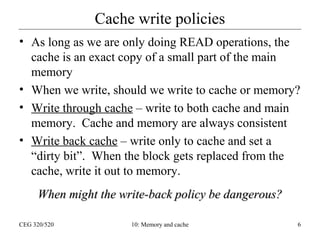

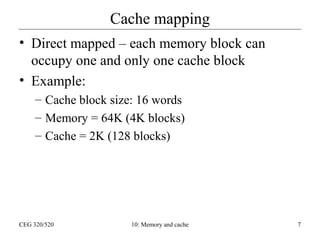

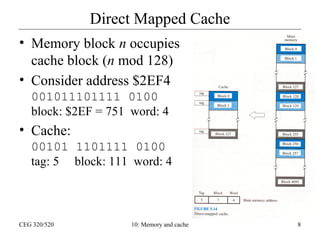

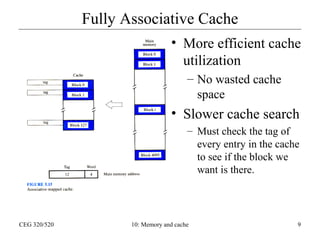

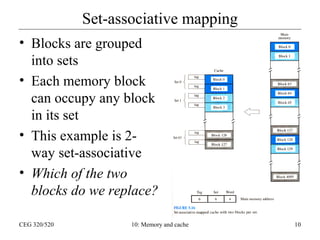

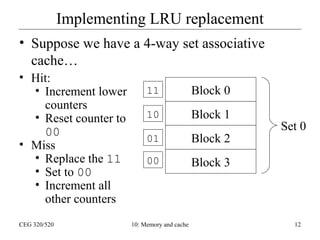

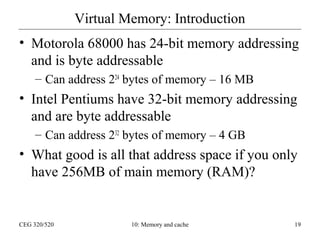

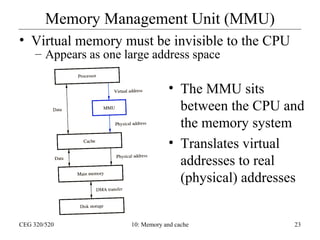

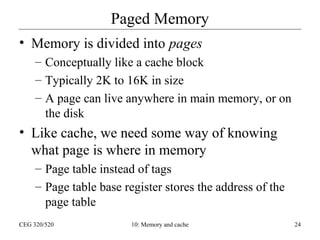

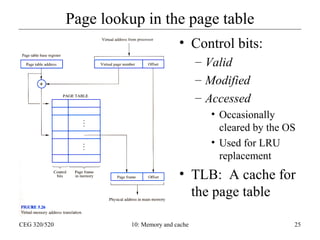

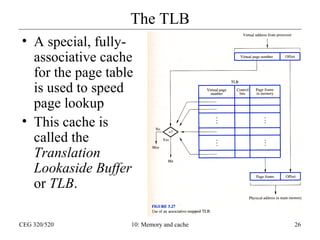

This document discusses cache memory and virtual memory. It begins with the memory hierarchy, ordered from fastest to slowest: registers, cache, main memory, and magnetic disk. It describes how caches exploit temporal and spatial locality, and covers three cache mapping techniques: direct mapping, set-associative mapping, and fully associative mapping. It also discusses virtual memory, which lets programs address more memory than is physically available; the memory management unit translates virtual addresses to physical addresses using page tables and the translation lookaside buffer.

![Program Structure

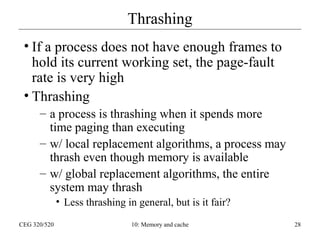

• How should we arrange memory references to large arrays?

– Is the array stored in row-major or column-major order?

• Example:

– Array A[1024, 1024] of type integer

– Page size = 1K

• Each row is stored in one page

– System has one frame

– Program 1

1024 page faults

– Program 2

1024 x 1024 page faults

CEG 320/520

for i := 1 to 1024 do

for j := 1 to 1024 do

A[i,j] := 0;

for j := 1 to 1024 do

for i := 1 to 1024 do

A[i,j] := 0;

10: Memory and cache

30](https://image.slidesharecdn.com/9comporg-memory-140225002309-phpapp01/85/cache-memory-management-30-320.jpg)
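The two page-fault counts in this example can be checked with a small simulation. The sketch below is illustrative only and not part of the original slides. It assumes row-major storage, that row `i` occupies exactly page `i` (as the slide states), and LRU replacement, which with a single frame simply means the resident page is evicted on every miss. The function name `count_page_faults` and its parameters are hypothetical.

```python
from collections import OrderedDict


def count_page_faults(order, n=1024, frames=1):
    """Count page faults when zeroing an n x n row-major array.

    Assumptions (not from the slides beyond the stated setup):
    row i lives entirely in page i, and pages are replaced LRU.
    order == "row":    i is the outer loop, j the inner (Program 1).
    order == "column": j is the outer loop, i the inner (Program 2).
    """
    resident = OrderedDict()  # pages currently in frames, oldest first
    faults = 0
    for outer in range(n):
        for inner in range(n):
            # Map the loop indices back to the (row, column) being written.
            i, j = (outer, inner) if order == "row" else (inner, outer)
            page = i  # only the row index determines the page
            if page in resident:
                resident.move_to_end(page)  # mark as most recently used
            else:
                faults += 1
                if len(resident) >= frames:
                    resident.popitem(last=False)  # evict least recently used
                resident[page] = True
    return faults


if __name__ == "__main__":
    print(count_page_faults("row"))     # 1024
    print(count_page_faults("column"))  # 1048576
```

Under these assumptions, the row-order loop touches each page once, while the column-order loop switches pages on every single access, which is where the 1024 x 1024 figure comes from.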