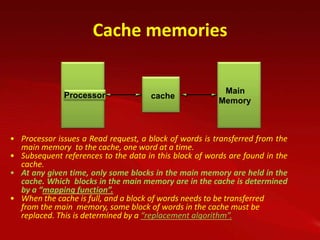

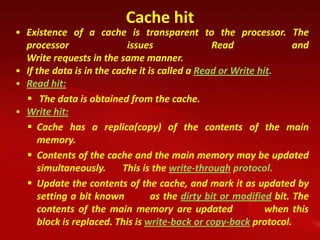

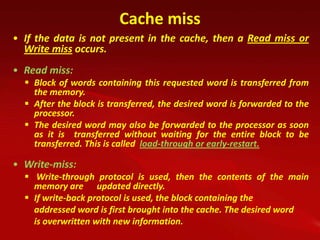

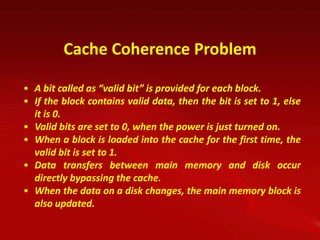

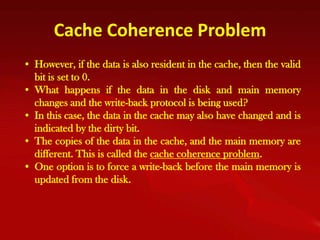

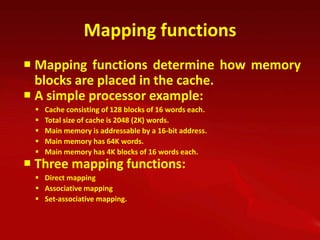

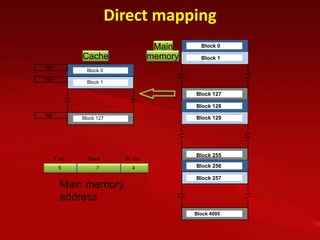

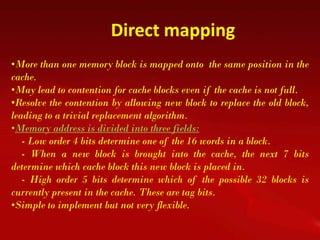

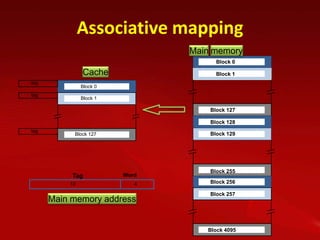

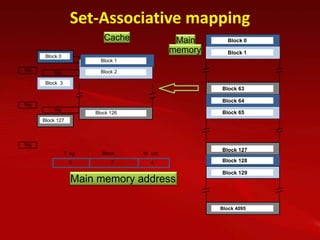

Cache memory improves processor performance by reducing the average time needed to access main memory. It relies on the principle of locality of reference: programs tend to reuse recently accessed data and instructions (temporal locality) and to access addresses near those they have just used (spatial locality). On a cache hit, the requested word is supplied directly from the cache, which is much faster than main memory; on a miss, the block containing the word must first be fetched from main memory. A mapping function (direct, fully associative, or set-associative) determines where in the cache a block from main memory may be placed.
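As a rough illustration of the direct mapping mentioned above, the following minimal sketch models a direct-mapped cache that tracks only tags, not data. The class name `DirectMappedCache`, the sizes (8 lines, 16-byte blocks), and the addresses used are assumptions chosen for the example and do not come from the slides.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that stores tags only (hypothetical sketch)."""

    def __init__(self, num_lines=8, block_size=16):
        self.num_lines = num_lines      # assumed number of cache lines
        self.block_size = block_size    # assumed block size in bytes
        self.tags = [None] * num_lines  # None marks an empty line
        self.hits = 0
        self.misses = 0

    def split(self, address):
        """Return (tag, index) for a byte address."""
        block_number = address // self.block_size
        index = block_number % self.num_lines   # the one line this block can use
        tag = block_number // self.num_lines    # distinguishes blocks sharing a line
        return tag, index

    def access(self, address):
        """Return True on a hit; on a miss, load the block and return False."""
        tag, index = self.split(address)
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag  # replace whatever block occupied this line
        self.misses += 1
        return False


demo = DirectMappedCache()
results = [demo.access(a) for a in (0, 4, 128, 0)]
print(results)  # [False, True, False, False]
```

Address 4 hits because it lies in the same block as address 0. Address 128 maps to the same line with a different tag, so it evicts that block and the final access to address 0 misses again. Fully associative and set-associative mapping reduce this kind of conflict by allowing a block to occupy more than one line.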