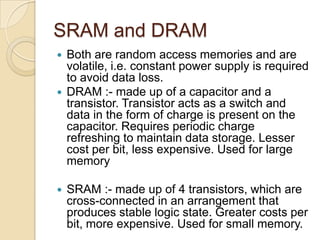

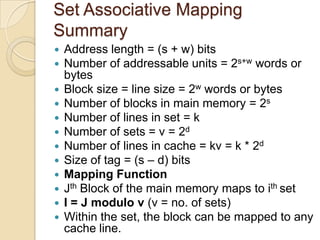

The document explains the organization and functioning of computer memory hierarchies, emphasizing cache memory types such as SRAM and DRAM. It discusses cache mapping techniques—including direct, associative, and set associative mappings—and the implications for performance, miss types, and replacement algorithms. Additionally, the document covers write policies and cache coherency, highlighting the benefits of multi-level caches and the differences between unified and split caches.