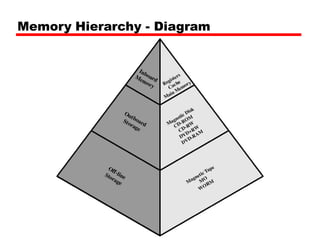

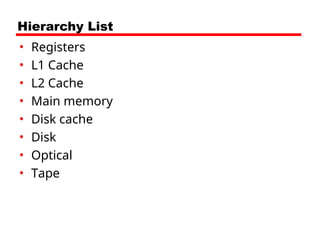

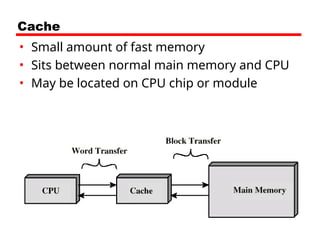

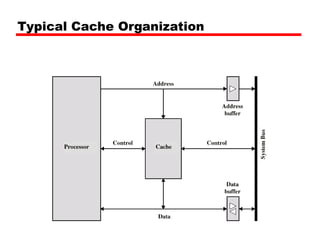

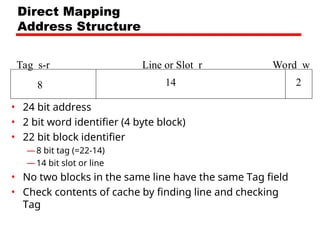

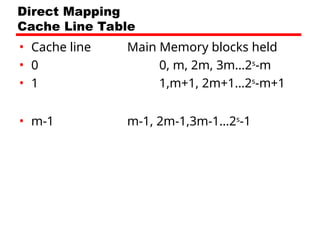

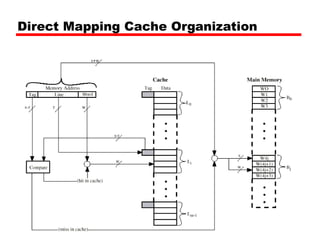

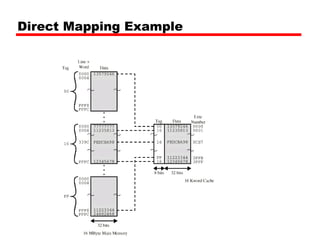

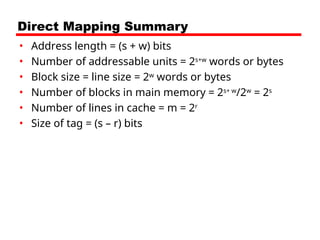

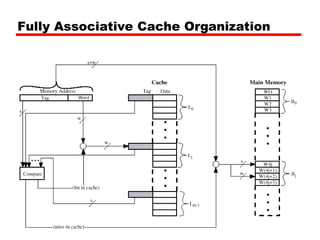

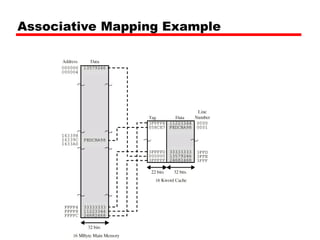

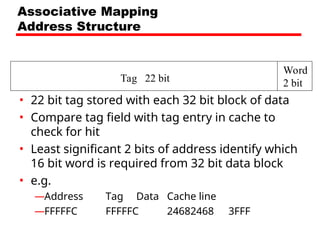

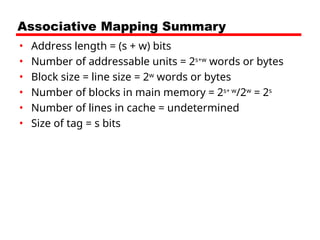

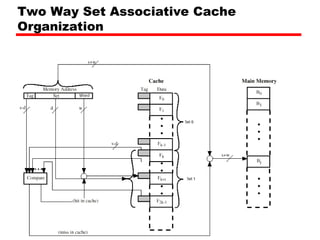

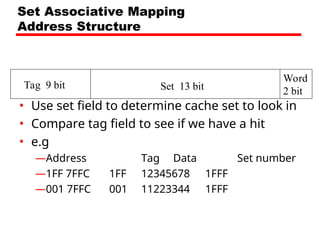

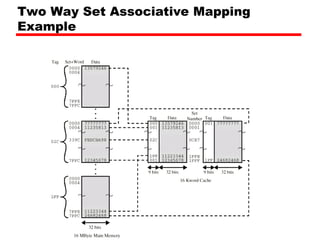

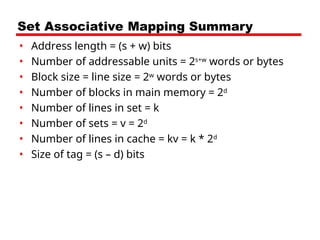

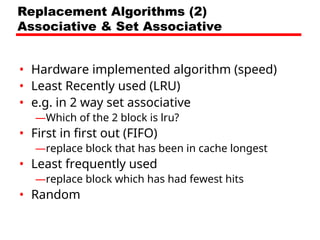

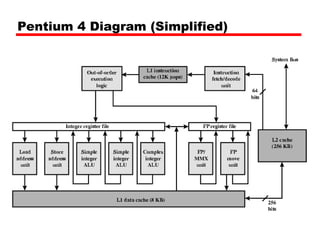

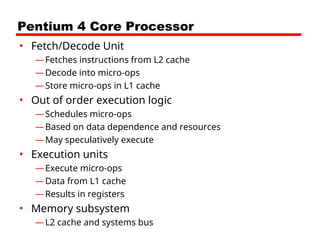

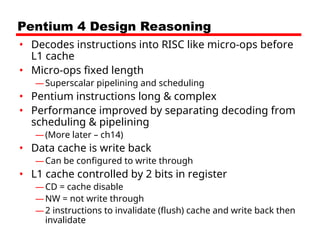

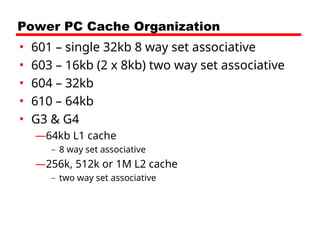

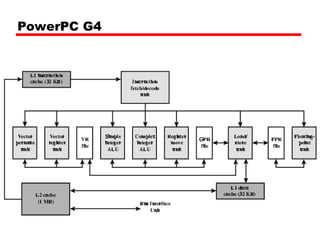

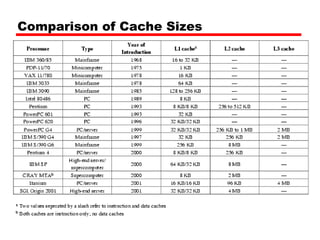

The document provides an overview of cache memory, detailing its characteristics: location, capacity, access methods, performance, and physical types. It explains the mapping functions, replacement algorithms, and write policies used in cache design, and discusses the cache organization of specific architectures such as the Pentium 4 and PowerPC. Throughout, it emphasizes the role of the cache in reducing access times and improving performance in computer systems.
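
To make the idea of a mapping function concrete, here is a minimal sketch of direct mapping, the simplest of the mapping schemes such an overview typically covers. The function names (`split_address`, `simulate_direct_mapped`) and the sizes (4-byte lines, 8 cache lines) are assumptions chosen for illustration; they are not taken from the slides and do not describe the Pentium 4 or PowerPC caches.

```python
def split_address(addr, line_size=4, num_lines=8):
    """Split an address into (tag, line index, byte offset).

    line_size and num_lines are illustrative assumptions, not values
    from the slides.
    """
    offset = addr % line_size        # position of the byte within its block
    block = addr // line_size        # main-memory block number
    index = block % num_lines        # the single cache line this block maps to
    tag = block // num_lines         # distinguishes blocks that share a line
    return tag, index, offset


def simulate_direct_mapped(addresses, line_size=4, num_lines=8):
    """Count hits and misses for a sequence of byte addresses."""
    lines = [None] * num_lines       # tag stored in each cache line (None = empty)
    hits = 0
    for addr in addresses:
        tag, index, _ = split_address(addr, line_size, num_lines)
        if lines[index] == tag:
            hits += 1
        else:
            # Miss: direct mapping has only one candidate line,
            # so no replacement algorithm is needed.
            lines[index] = tag
    return hits, len(addresses) - hits


if __name__ == "__main__":
    print(split_address(37))
    print(simulate_direct_mapped([0, 1, 2, 3, 32, 0]))
```

In this sketch, addresses 0 and 32 map to the same cache line, so alternating between them evicts the other block each time. That conflict behavior is what associative and set-associative mapping, combined with a replacement algorithm such as LRU, are meant to reduce.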