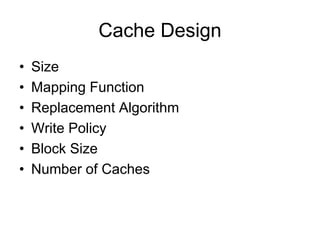

This document covers the memory hierarchy and caching. Its main points are:

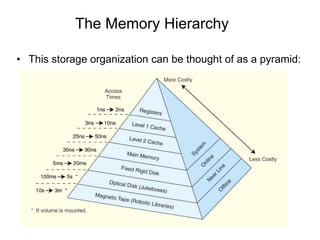

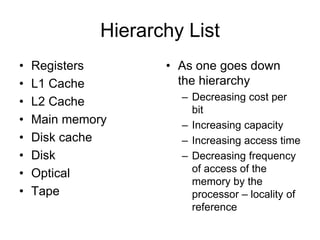

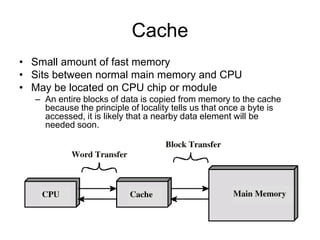

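1. Memory is organized as a hierarchy that runs from the fastest and smallest levels (registers and cache) to the slowest and largest (disk). The cache sits between the CPU and main memory and improves performance by exploiting locality of reference: a program tends to reuse data it accessed recently (temporal locality) and to access data stored near it (spatial locality).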

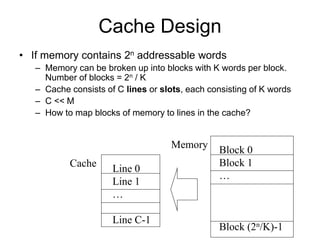

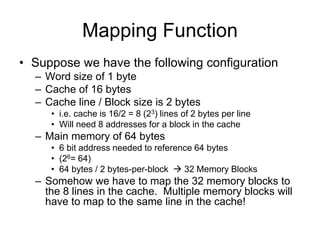

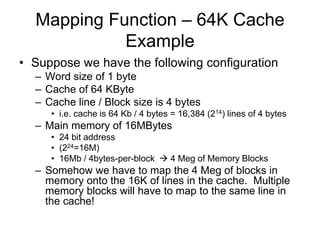

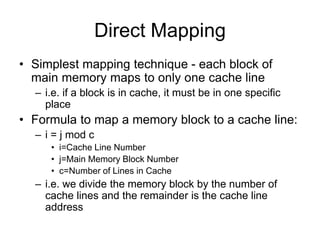

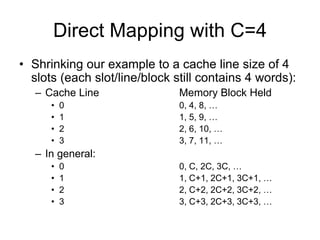

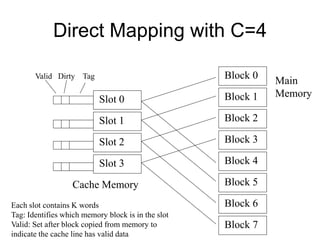

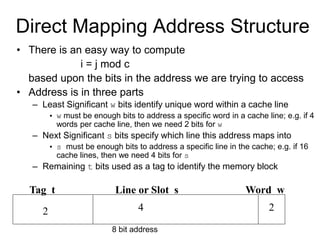

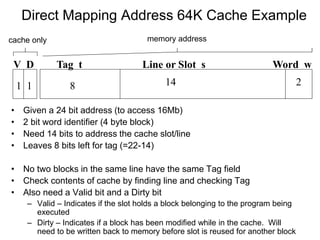

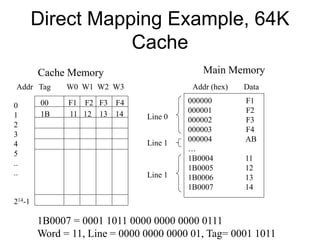

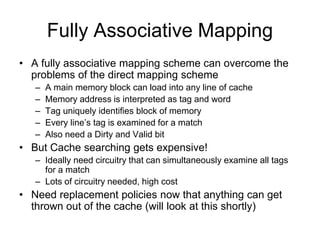

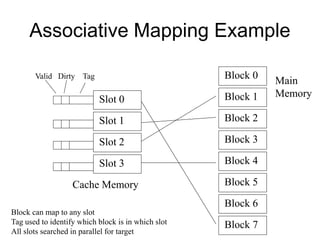

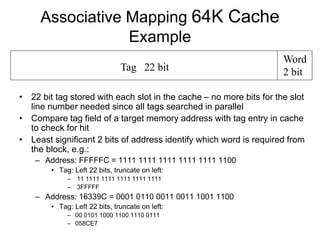

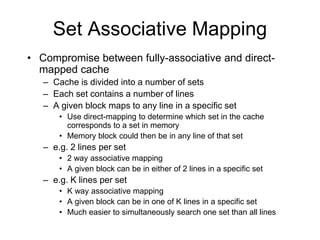

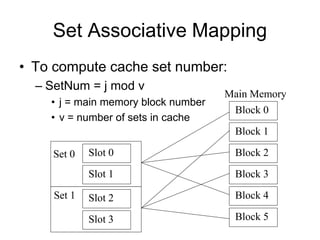

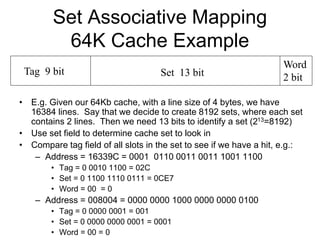

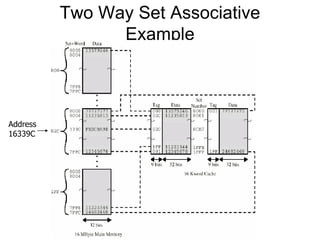

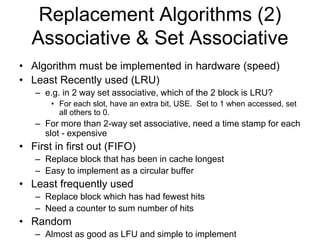

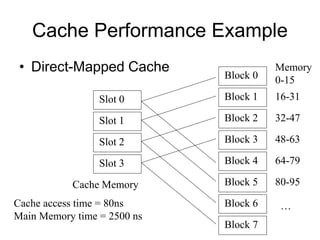

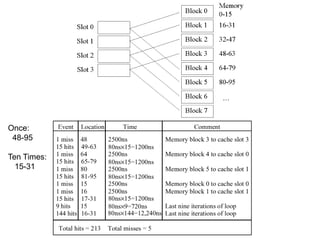

2. Caches use a mapping function to determine which cache line a given block of main memory may occupy. Direct mapping assigns each block to exactly one fixed line, while fully associative mapping allows a block to be placed in any line.
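
As a minimal sketch of the two mapping schemes, the Python below assumes the common modulo rule for direct mapping (line = block number mod number of lines). The function names, the 16-byte block size, and the 8-line cache are illustrative assumptions, not values taken from the document.

```python
def split_address(addr, block_size=16, num_lines=8):
    """Split a byte address into (tag, line, offset) for a direct-mapped cache.

    block_size and num_lines are assumed example values.
    """
    offset = addr % block_size          # byte position inside the block
    block_number = addr // block_size   # which main-memory block holds addr
    line = block_number % num_lines     # the single line this block may use
    tag = block_number // num_lines     # distinguishes blocks sharing a line
    return tag, line, offset


def direct_mapped_lines(block_number, num_lines=8):
    """Direct mapping: exactly one candidate line per block."""
    return [block_number % num_lines]


def fully_associative_lines(block_number, num_lines=8):
    """Fully associative mapping: every line is a candidate for any block."""
    return list(range(num_lines))


if __name__ == "__main__":
    print(split_address(0x1234))
    # Blocks 3 and 11 compete for the same line under direct mapping.
    print(direct_mapped_lines(3), direct_mapped_lines(11))
    print(fully_associative_lines(3))
```

Under these assumptions, two blocks whose numbers differ by a multiple of the line count evict each other in a direct-mapped cache, whereas a fully associative cache can hold both at once at the cost of searching every line's tag.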

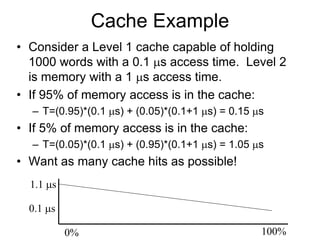

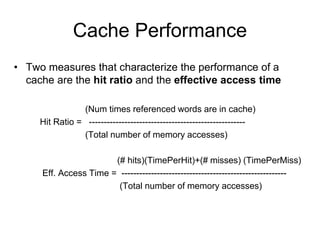

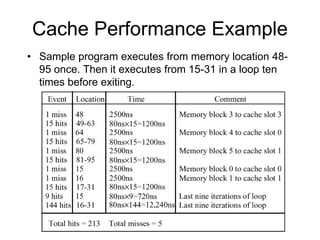

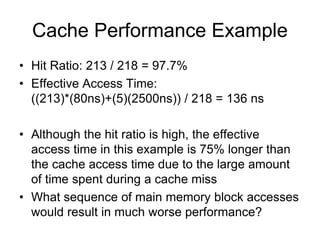

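3. A cache hit is served at cache speed, whereas a miss requires reading the block from a lower, slower level of the hierarchy. Because the miss penalty is large relative to the hit time, average memory access time is very sensitive to the hit rate: hit rates above 95% give a significantly lower average access time than lower hit rates.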
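
To make the effect of hit rate concrete, here is a small sketch using one standard formulation of average memory access time, AMAT = hit time + miss rate × miss penalty. The document may use a different variant of the formula, and the 1 ns hit time and 100 ns miss penalty are assumed values chosen only for illustration.

```python
def amat(hit_rate, hit_time_ns=1.0, miss_penalty_ns=100.0):
    """Average memory access time in nanoseconds.

    Uses AMAT = hit_time + miss_rate * miss_penalty.
    The default timings are assumed example values, not measurements.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    miss_rate = 1.0 - hit_rate
    return hit_time_ns + miss_rate * miss_penalty_ns


if __name__ == "__main__":
    for rate in (0.80, 0.90, 0.95, 0.99):
        print(f"hit rate {rate:.0%}: AMAT = {amat(rate):.1f} ns")
```

With these assumed timings, raising the hit rate from 80% to 95% cuts the average access time from about 21 ns to about 6 ns, which is why small gains in hit rate matter so much.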