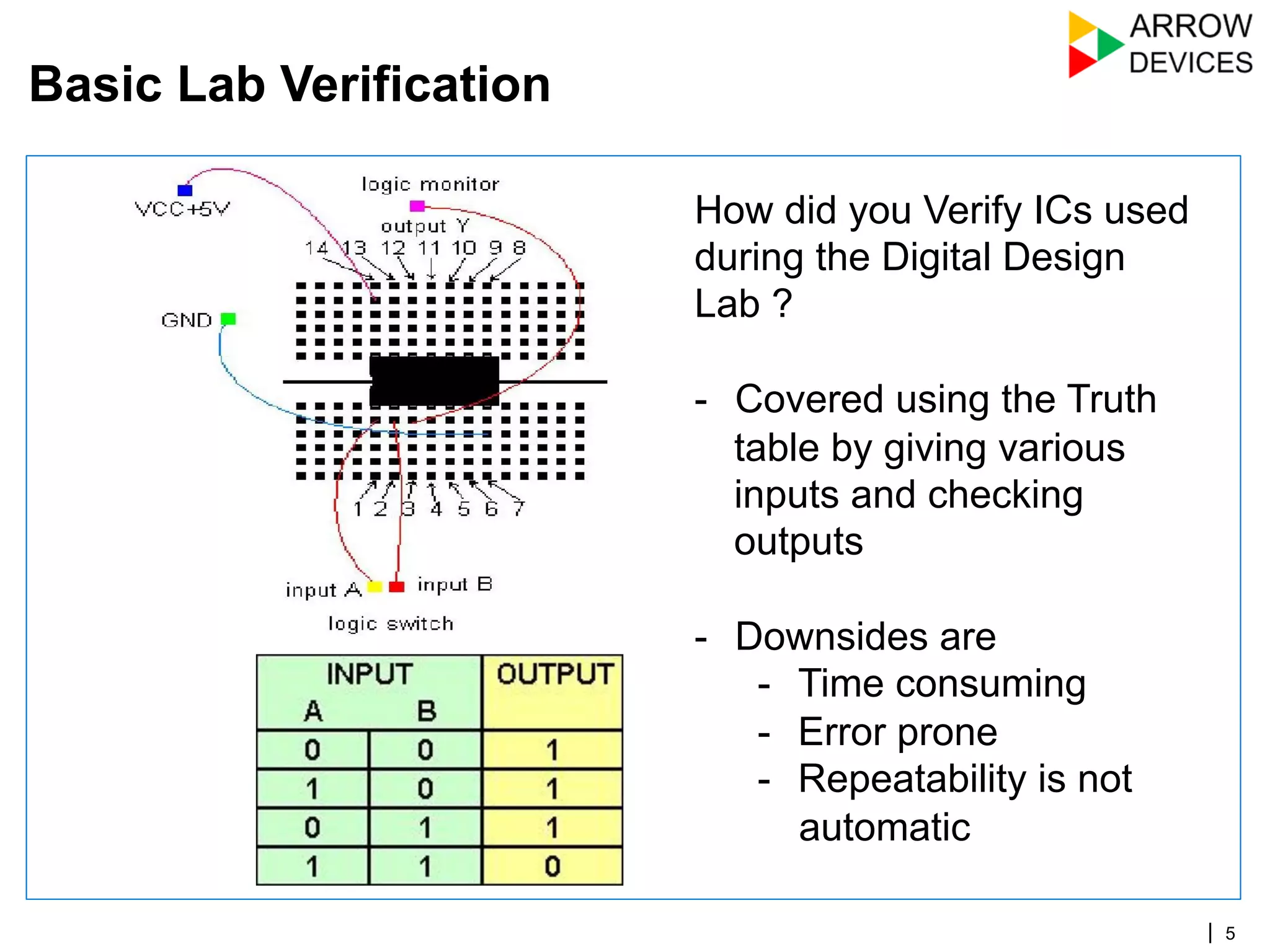

The document discusses the fundamentals of functional verification, emphasizing its critical role in ensuring correct logic performance in integrated circuits. It outlines the verification process, including planning, execution, and debugging, while highlighting various verification methodologies and strategies. Additionally, it covers practical examples and key considerations for effective verification practices in the context of evolving design technologies.

![| 34

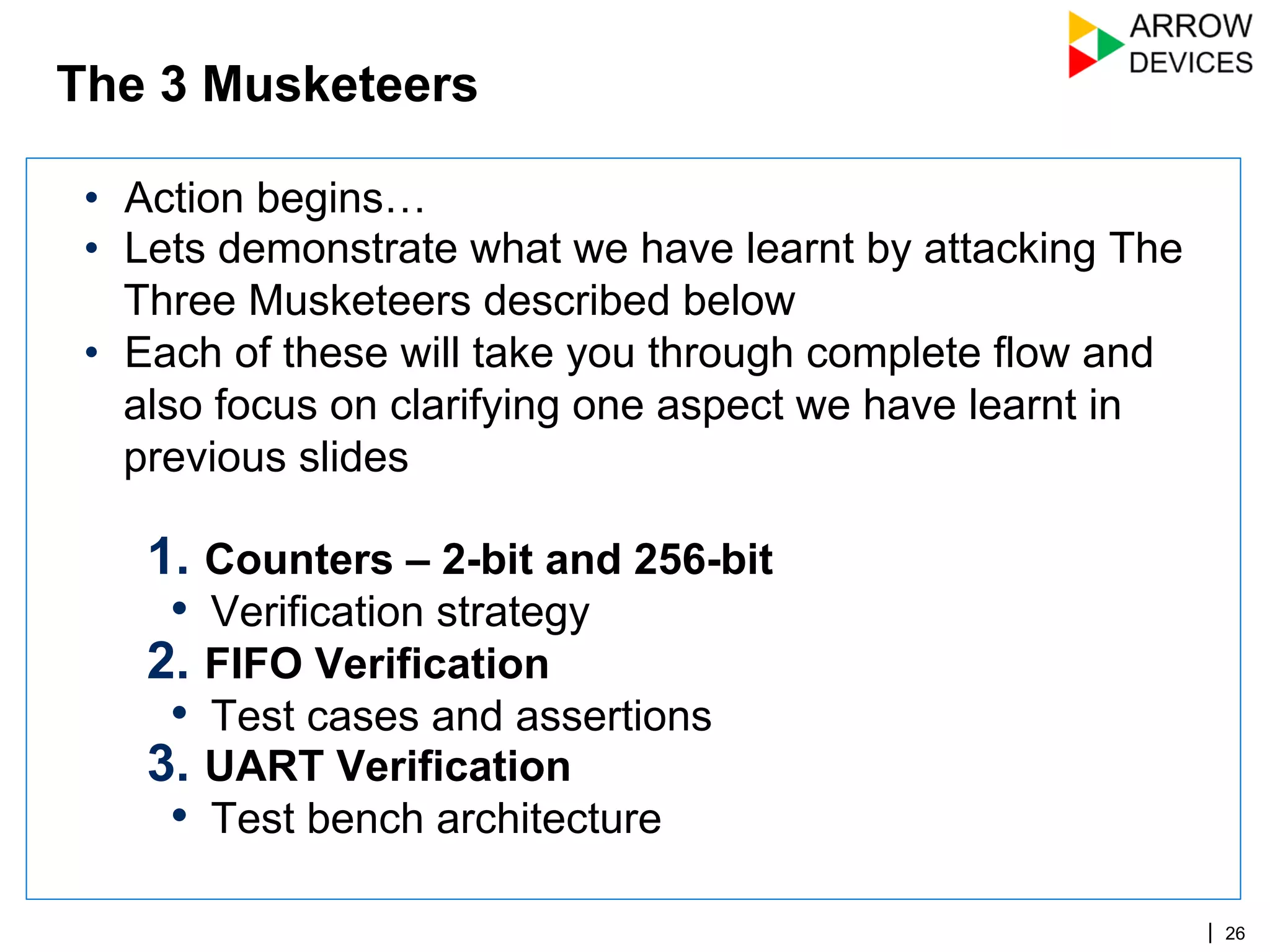

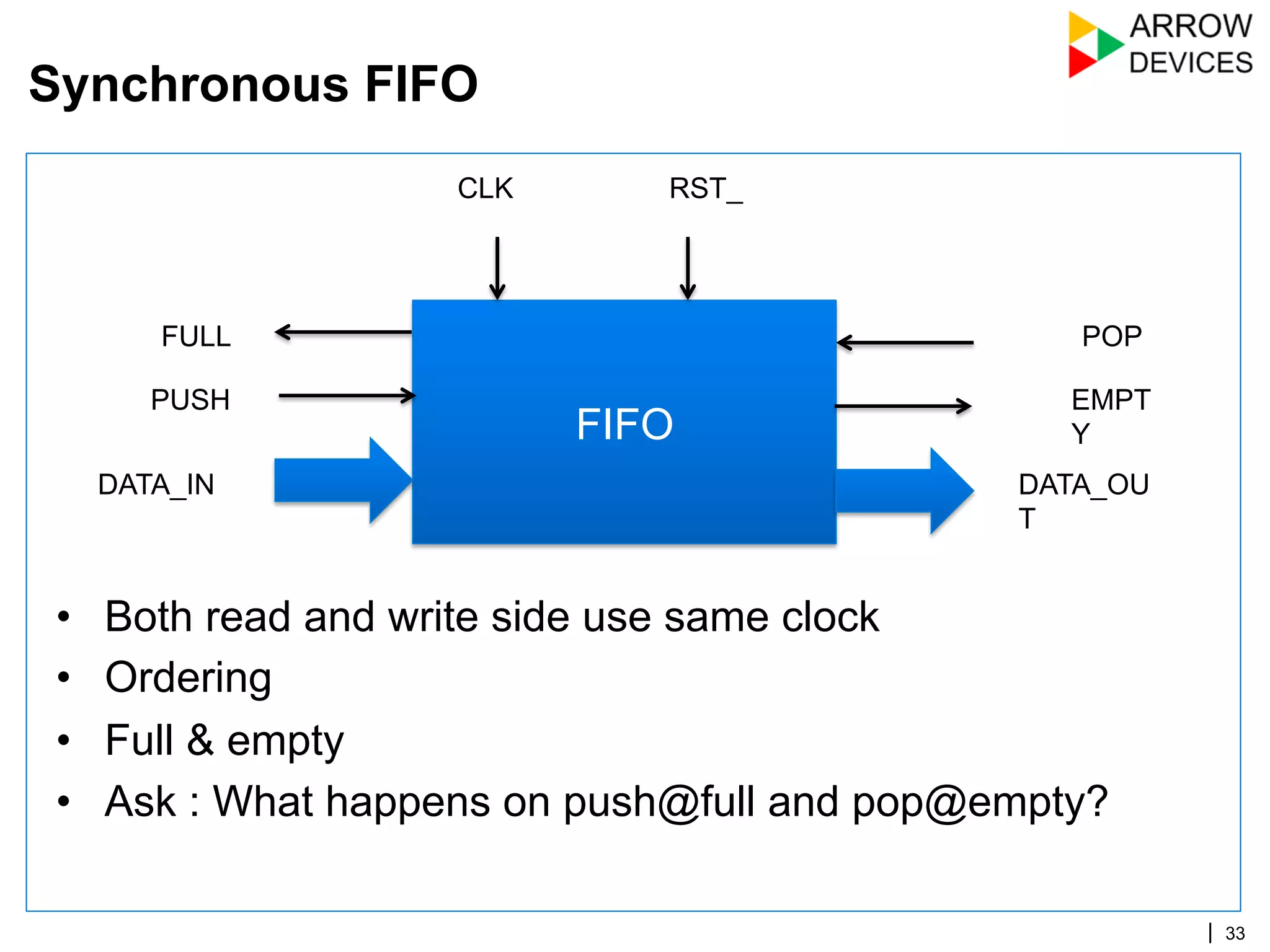

Normal operation

▪ Order of data pushed should match POP

▪ After N PUSH and 0 POP, FIFO FULL assertion

▪ Num of PUSH == Num of POP EMPTY assertion

▪ Stress : PUSH and POP at same time

▪ Reset intermediately

Negative case

▪ PUSH on FULL and POP on EMPTY

Implementation specific

▪ Internal counter alignments for FULL/EMPTY generation

Always true conditions [Assertion]

▪ Both FULL and EMPTY should never get asserted together

▪ Number of clocks between the assertion of Write on input to de-assertion of

empty on output - This is also indicative of performance of the FIFO.

Synchronous FIFO - Test Plan](https://image.slidesharecdn.com/arrowdevicesverificationbasics-140704023656-phpapp01/75/Basics-of-Functional-Verification-Arrow-Devices-34-2048.jpg)
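The test plan above can be sketched against a small behavioural reference model. This is only an illustration, not the slide author's testbench: the class `SyncFifoModel`, the function `run_test_plan`, and the chosen policies (a PUSH on FULL is dropped, a POP on EMPTY returns nothing, flags are sampled at the start of each clock, N is taken to be the FIFO depth) are all assumptions made for the example.

```python
from collections import deque


class SyncFifoModel:
    """Hypothetical cycle-level model of a synchronous FIFO."""

    def __init__(self, depth):
        if depth < 1:
            raise ValueError("depth must be at least 1")
        self.depth = depth
        self.items = deque()

    @property
    def full(self):
        return len(self.items) == self.depth

    @property
    def empty(self):
        return len(self.items) == 0

    def reset(self):
        self.items.clear()

    def clock(self, push=False, pop=False, data=None):
        """Advance one clock; return the popped value, or None if nothing popped."""
        # Assumption: FULL/EMPTY are sampled before this cycle's updates.
        can_push = push and not self.full
        can_pop = pop and not self.empty
        popped = self.items.popleft() if can_pop else None
        if can_push:
            self.items.append(data)
        # Always-true condition from the plan.
        assert not (self.full and self.empty), "FULL and EMPTY asserted together"
        return popped


def run_test_plan(depth=4):
    """Directed checks mirroring the plan; depth >= 2 is assumed for the stress step."""
    fifo = SyncFifoModel(depth)

    # Normal: N pushes and 0 pops -> FULL.
    for i in range(depth):
        fifo.clock(push=True, data=i)
    assert fifo.full and not fifo.empty

    # Negative: PUSH on FULL must not change the contents (assumed policy).
    fifo.clock(push=True, data=99)
    assert len(fifo.items) == depth

    # Normal: pop order matches push order; pushes == pops -> EMPTY.
    out = [fifo.clock(pop=True) for _ in range(depth)]
    assert out == list(range(depth))
    assert fifo.empty

    # Negative: POP on EMPTY yields nothing (assumed policy).
    assert fifo.clock(pop=True) is None

    # Stress: PUSH and POP in the same clock, occupancy stays at one entry.
    fifo.clock(push=True, data=0)
    for i in range(1, 20):
        assert fifo.clock(push=True, pop=True, data=i) == i - 1

    # Reset in the middle of operation.
    fifo.reset()
    assert fifo.empty and not fifo.full
    return True
```

Calling `run_test_plan()` runs the directed checks and raises `AssertionError` on the first violation. The write-to-EMPTY-deassertion latency and the internal counter alignment are implementation specific, so this model does not try to represent them; in a real flow they would be checked against the RTL, for example with assertions in the simulator.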