The document provides an overview of software testing concepts including:

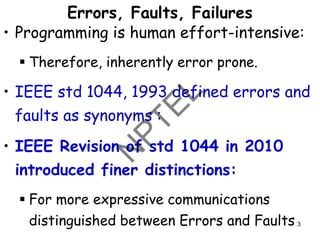

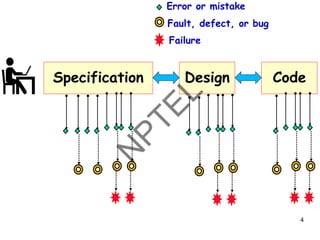

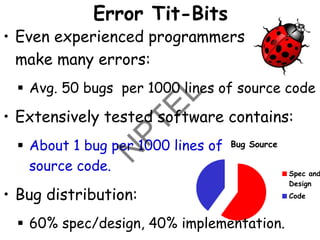

- The differences between errors, faults, failures, and bugs, and where in the development process each originates.
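In one common usage, a human error (mistake) introduces a fault (also called a defect or bug) into the code, and executing that fault may or may not cause a failure, an observable deviation from the expected output. A minimal sketch, using a hypothetical `average` function that contains a deliberate fault, shows that the same faulty line can run without producing a visible failure:

```python
def average(values):
    """Intended to return the arithmetic mean of a non-empty list."""
    # Fault (bug): the programmer's error was dividing by len(values) - 1
    # instead of len(values). The faulty line executes on every call.
    return sum(values) / (len(values) - 1)


def check(values, expected):
    """Execute the code and report whether a failure is observed."""
    actual = average(values)
    status = "pass" if actual == expected else "FAILURE"
    print(f"average({values}) = {actual}, expected {expected}: {status}")
    return actual == expected


# The fault is executed in both calls, but only one exposes a failure.
check([0, 0, 0], 0.0)   # 0 / 2 = 0.0, looks correct, so the fault stays hidden
check([2, 4, 6], 4.0)   # 12 / 2 = 6.0, a failure: output differs from expected
```

This is why passing tests show only that no failure was observed for the chosen inputs, not that the code is fault-free.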

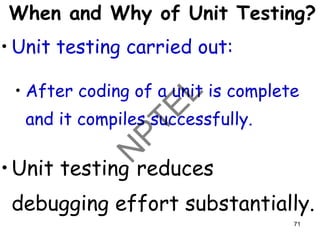

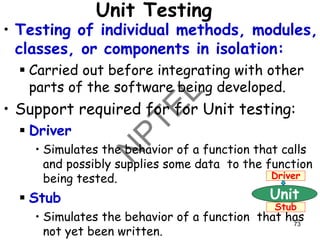

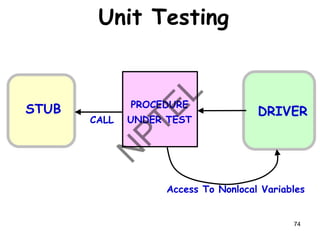

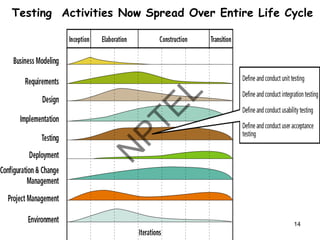

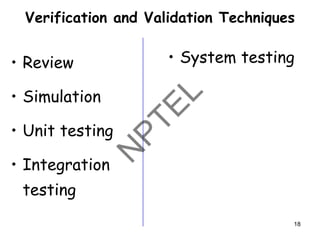

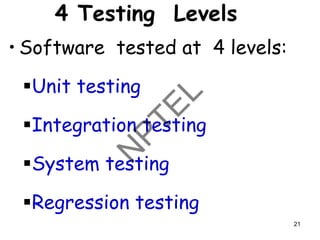

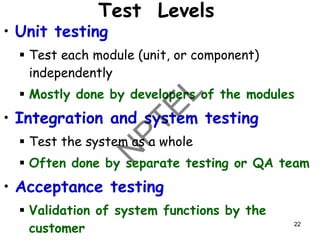

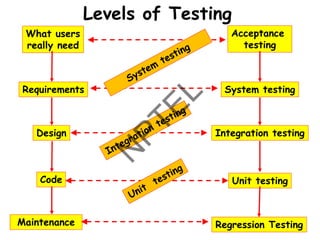

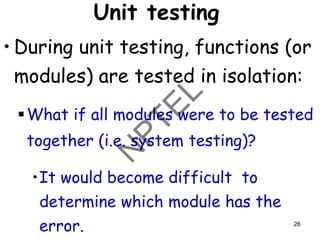

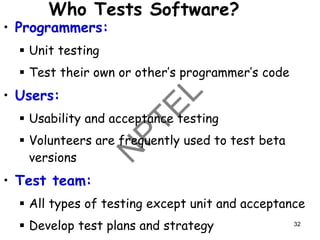

- The four main levels of testing: unit testing, integration testing, system testing, and regression testing.
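The difference between unit and integration testing, and how regression testing reuses them, can be sketched in a few lines. The two components (`parse_price`, `apply_discount`) and their composition `checkout` are hypothetical; system testing, which exercises the complete product against its requirements, is not shown:

```python
# Hypothetical components, for illustration only.
def parse_price(text):
    """Unit 1: convert a string like '$12.50' to integer cents."""
    dollars, _, cents = text.strip().lstrip("$").partition(".")
    return int(dollars) * 100 + int(cents.ljust(2, "0")[:2])


def apply_discount(cents, percent):
    """Unit 2: reduce a price in cents by a whole-number percentage."""
    return cents * (100 - percent) // 100


def checkout(price_text, percent):
    """The two units combined through their interface."""
    return apply_discount(parse_price(price_text), percent)


# Unit tests: each component checked in isolation.
def test_parse_price():
    assert parse_price("$12.50") == 1250
    assert parse_price("7") == 700


def test_apply_discount():
    assert apply_discount(1000, 10) == 900


# Integration test: the components checked working together.
def test_checkout():
    assert checkout("$20.00", 25) == 1500


# Regression testing: re-run the existing suite after every change.
if __name__ == "__main__":
    for test in (test_parse_price, test_apply_discount, test_checkout):
        test()
        print(f"{test.__name__}: passed")
```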

- The importance of testing and the high cost of inadequate testing, illustrated by the Ariane 5 rocket failure.
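The Ariane 5 inquiry attributed the 1996 launch failure to an unprotected conversion of a 64-bit floating-point value to a 16-bit signed integer in inertial reference software reused from Ariane 4; on Ariane 5's trajectory the value exceeded the 16-bit range. The sketch below is only a loose Python model of such a conversion (the function `to_int16` and all test values are hypothetical, not flight data), paired with the kind of boundary-value tests that probe exactly this range:

```python
INT16_MIN, INT16_MAX = -32768, 32767


def to_int16(value: float) -> int:
    """Convert a float to a 16-bit signed integer, raising on overflow.

    Loosely models an unprotected conversion: any value outside the
    16-bit range is treated as an unrecoverable error.
    """
    result = int(value)  # truncates toward zero
    if not INT16_MIN <= result <= INT16_MAX:
        raise OverflowError(f"{value} does not fit in a 16-bit signed integer")
    return result


# Boundary-value tests: values at the limits and just outside them.
# The numbers are illustrative only.
assert to_int16(32767.0) == 32767
assert to_int16(-32768.0) == -32768
for out_of_range in (32768.0, 1.0e5, -40000.0):
    try:
        to_int16(out_of_range)
    except OverflowError:
        pass
    else:
        raise AssertionError(f"{out_of_range} should have overflowed")
print("all boundary tests passed")
```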

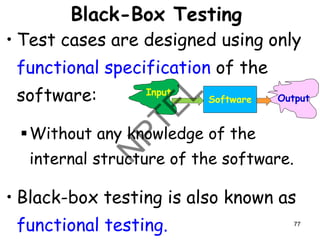

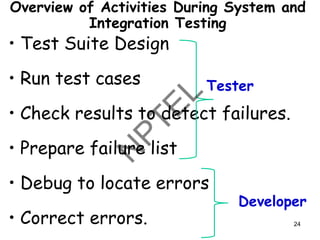

- Key testing activities such as test case design, test execution, and result analysis.
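The three activities can be separated even in a tiny, table-driven test. A minimal sketch, assuming a hypothetical unit under test `is_leap_year` (the Gregorian leap-year rule):

```python
def is_leap_year(year: int) -> bool:
    """Hypothetical unit under test: the Gregorian leap-year rule."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# 1. Test case design: choose inputs and the expected output for each.
test_cases = [
    (2024, True),   # divisible by 4
    (2023, False),  # not divisible by 4
    (1900, False),  # divisible by 100 but not by 400
    (2000, True),   # divisible by 400
]

# 2. Test execution: run the unit under test on every designed input.
results = [(year, expected, is_leap_year(year)) for year, expected in test_cases]

# 3. Result analysis: compare actual with expected output and summarize.
failures = [(y, e, a) for y, e, a in results if e != a]
for year, expected, actual in failures:
    print(f"FAIL: is_leap_year({year}) returned {actual}, expected {expected}")
print(f"{len(results) - len(failures)} of {len(results)} test cases passed")
```

Each case targets a distinct rule of the specification, which is the point of deliberate test case design rather than random input selection.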

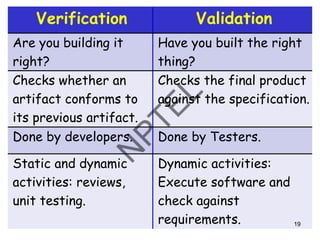

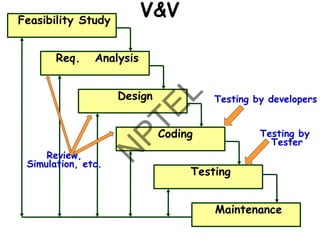

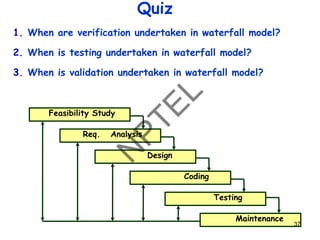

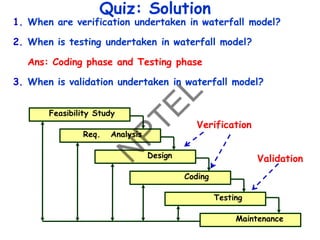

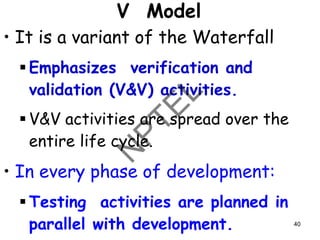

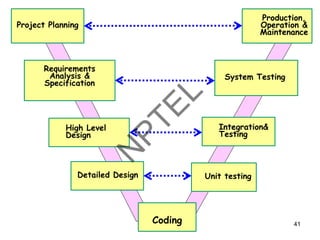

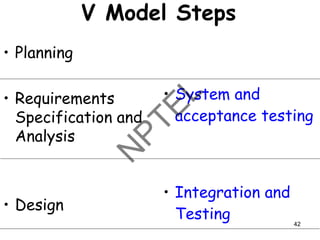

- When verification and validation occur in the software development lifecycle.

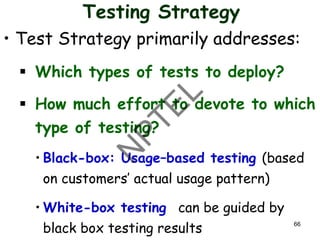

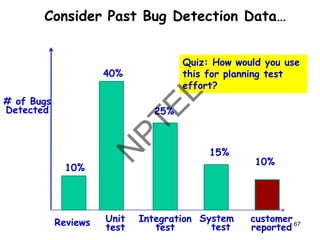

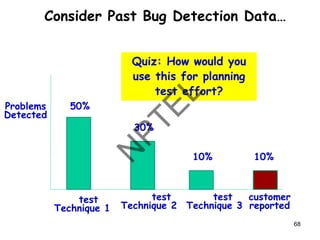

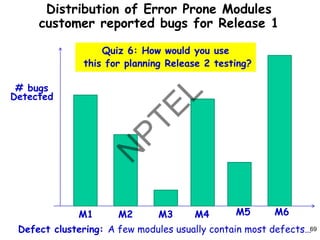

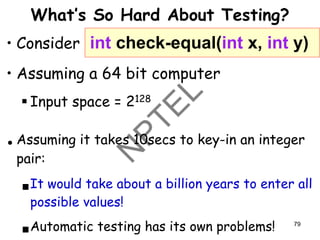

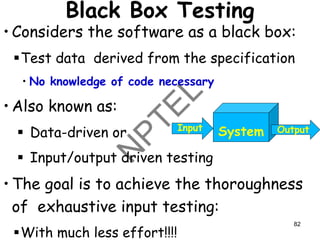

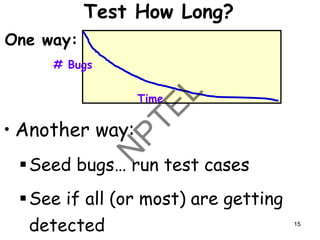

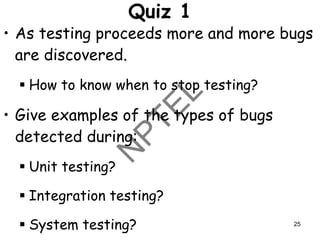

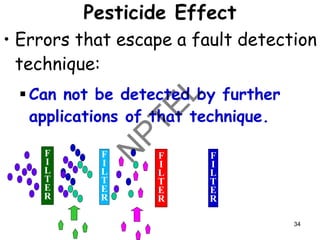

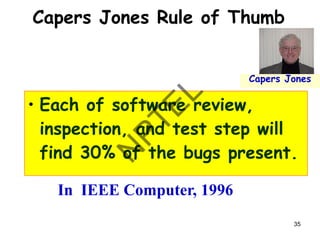

- Common testing strategies, metrics, and challenges.

![How Many Latent Errors? Several independent studies ([Jones], [Schroeder], etc.) conclude that 85% of errors are removed by the end of a typical testing process. Why not more? All practical test techniques are basically heuristics: they help to reduce bugs but do not guarantee complete bug removal.](https://image.slidesharecdn.com/softwaretestingw1watermark-231228185357-de7d6c2e/85/SOFTWARE-TESTING-W1_watermark-pdf-47-320.jpg)
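The 85% figure can be turned into a quick estimate of what remains after testing. A minimal sketch, assuming the figure is read as a defect removal efficiency (errors found before release divided by all errors eventually found); the function names and project numbers are hypothetical:

```python
def removal_efficiency(found_before_release: int, found_after_release: int) -> float:
    """Fraction of all known errors that testing removed before release."""
    total = found_before_release + found_after_release
    return found_before_release / total


def latent_errors(total_errors: int, removal_percent: int) -> int:
    """Errors expected to remain after testing with the given removal rate."""
    return total_errors * (100 - removal_percent) // 100


# Hypothetical project figures, for illustration only.
print(removal_efficiency(850, 150))  # 0.85
print(latent_errors(1000, 85))       # 150
```

Integer percentages are used in `latent_errors` to avoid floating-point rounding in the estimate.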

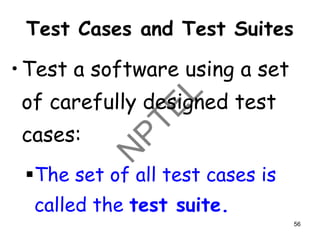

![Test Cases and Test Suites: A test case is a triplet [I, S, O], where I is the data to be input to the system, S is the state of the system at which the data will be input, and O is the expected output of the system.](https://image.slidesharecdn.com/softwaretestingw1watermark-231228185357-de7d6c2e/85/SOFTWARE-TESTING-W1_watermark-pdf-54-320.jpg)
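The triplet maps naturally onto a small record type. A minimal sketch, assuming the system under test can be modeled as a function of (state, input); the `TestCase` class, `run` helper, and stack-based `stack_system` are hypothetical:

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class TestCase:
    """A test case as the triplet [I, S, O]."""
    input: Any      # I: data to be input to the system
    state: Any      # S: state of the system when I is input
    expected: Any   # O: expected output


def run(case: TestCase, system: Callable[[Any, Any], Any]) -> bool:
    """Execute one test case against a system modeled as f(state, input)."""
    actual = system(case.state, case.input)
    return actual == case.expected


# Hypothetical system: a stack whose state is a tuple; input "pop" returns the top.
def stack_system(state, command):
    items = list(state)  # copy, so the test case's state is never mutated
    if command == "pop":
        return items.pop() if items else "error: empty stack"
    raise ValueError(f"unknown command {command!r}")


cases = [
    TestCase(input="pop", state=(1, 2, 3), expected=3),
    TestCase(input="pop", state=(), expected="error: empty stack"),
]
print([run(c, stack_system) for c in cases])  # [True, True]
```

Both cases share the same input I but differ in state S, and therefore in expected output O, which is why the state has to be part of the test case.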

![Test Execution Example: Return Book. Test case [I, S, O]: 1. Set the program in the required state: book record created, member record created, book issued. 2. Give the defined input: select the renew book option and request renewal for a further 2-week period. 3. Observe the output and compare it to the expected output.](https://image.slidesharecdn.com/softwaretestingw1watermark-231228185357-de7d6c2e/85/SOFTWARE-TESTING-W1_watermark-pdf-57-320.jpg)
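The three execution steps can be written as an automated test. A minimal sketch, assuming a hypothetical `Library` class with an initial loan period of 2 weeks (the slide states only the 2-week renewal); all identifiers and dates are illustrative:

```python
from datetime import date, timedelta


class Library:
    """Hypothetical library system, just enough to run the renew test."""

    LOAN_PERIOD = timedelta(weeks=2)  # assumed for both issue and renewal

    def __init__(self):
        self.books = {}    # book_id -> title
        self.members = {}  # member_id -> name
        self.loans = {}    # book_id -> (member_id, due_date)

    def add_book(self, book_id, title):
        self.books[book_id] = title

    def add_member(self, member_id, name):
        self.members[member_id] = name

    def issue(self, book_id, member_id, today):
        self.loans[book_id] = (member_id, today + self.LOAN_PERIOD)

    def renew(self, book_id, member_id):
        """Extend an existing loan by one loan period; return the new due date."""
        holder, due = self.loans[book_id]
        if holder != member_id:
            raise PermissionError("book is issued to another member")
        new_due = due + self.LOAN_PERIOD
        self.loans[book_id] = (holder, new_due)
        return new_due


# 1. Set the program in the required state (S):
#    book record created, member record created, book issued.
lib = Library()
lib.add_book("B1", "Software Testing")
lib.add_member("M1", "Test Member")
issued_on = date(2024, 1, 1)
lib.issue("B1", "M1", today=issued_on)

# 2. Give the defined input (I): request renewal for a further 2 weeks.
actual_due = lib.renew("B1", "M1")

# 3. Observe the output and compare it with the expected output (O).
expected_due = issued_on + timedelta(weeks=4)  # 2-week loan + 2-week renewal
assert actual_due == expected_due, f"expected {expected_due}, got {actual_due}"
print("renew test passed, new due date:", actual_due)
```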