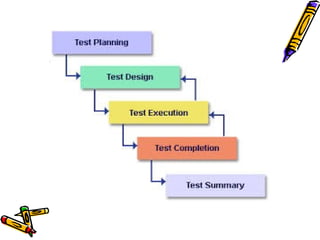

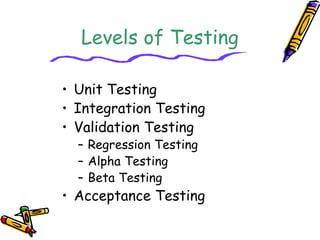

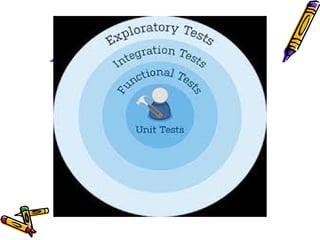

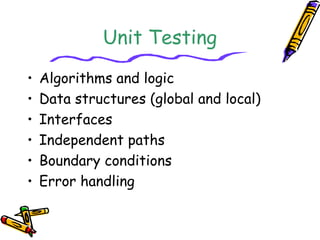

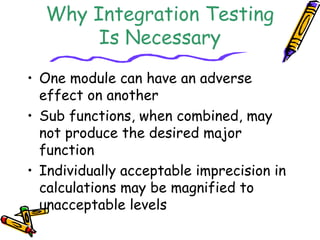

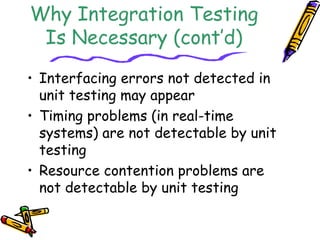

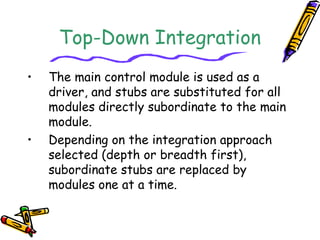

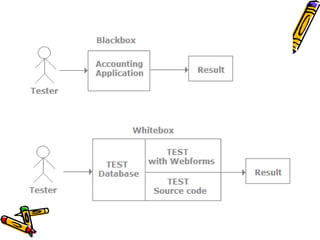

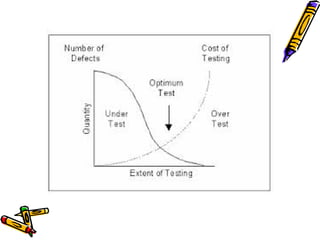

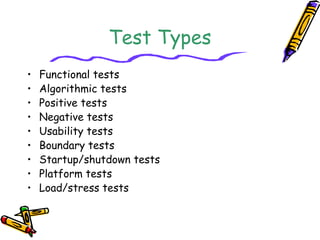

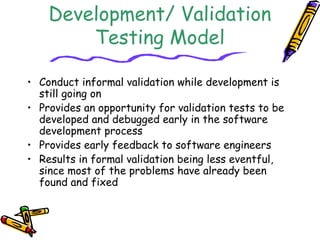

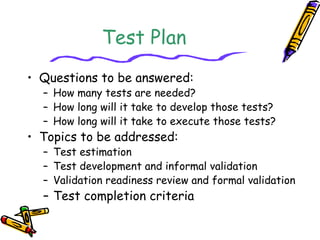

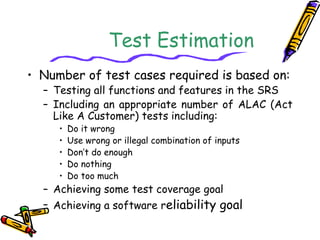

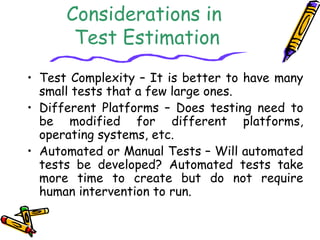

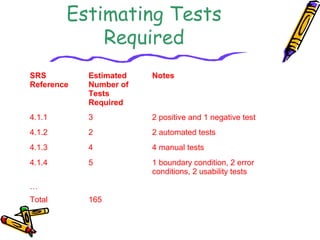

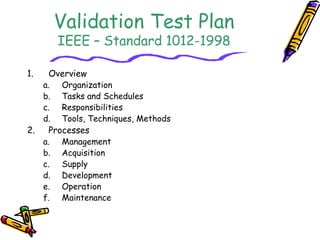

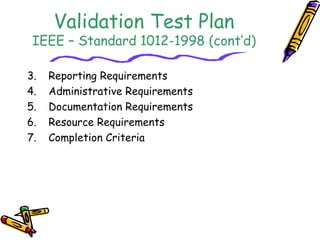

This document discusses software testing practices and processes. It covers topics like unit testing, integration testing, validation testing, test planning, and test types. The key points are that testing aims to find errors, good testing uses both valid and invalid inputs, and testing should have clear objectives and be assigned to experienced people. Testing is done at the unit, integration and system levels using techniques like black box testing.