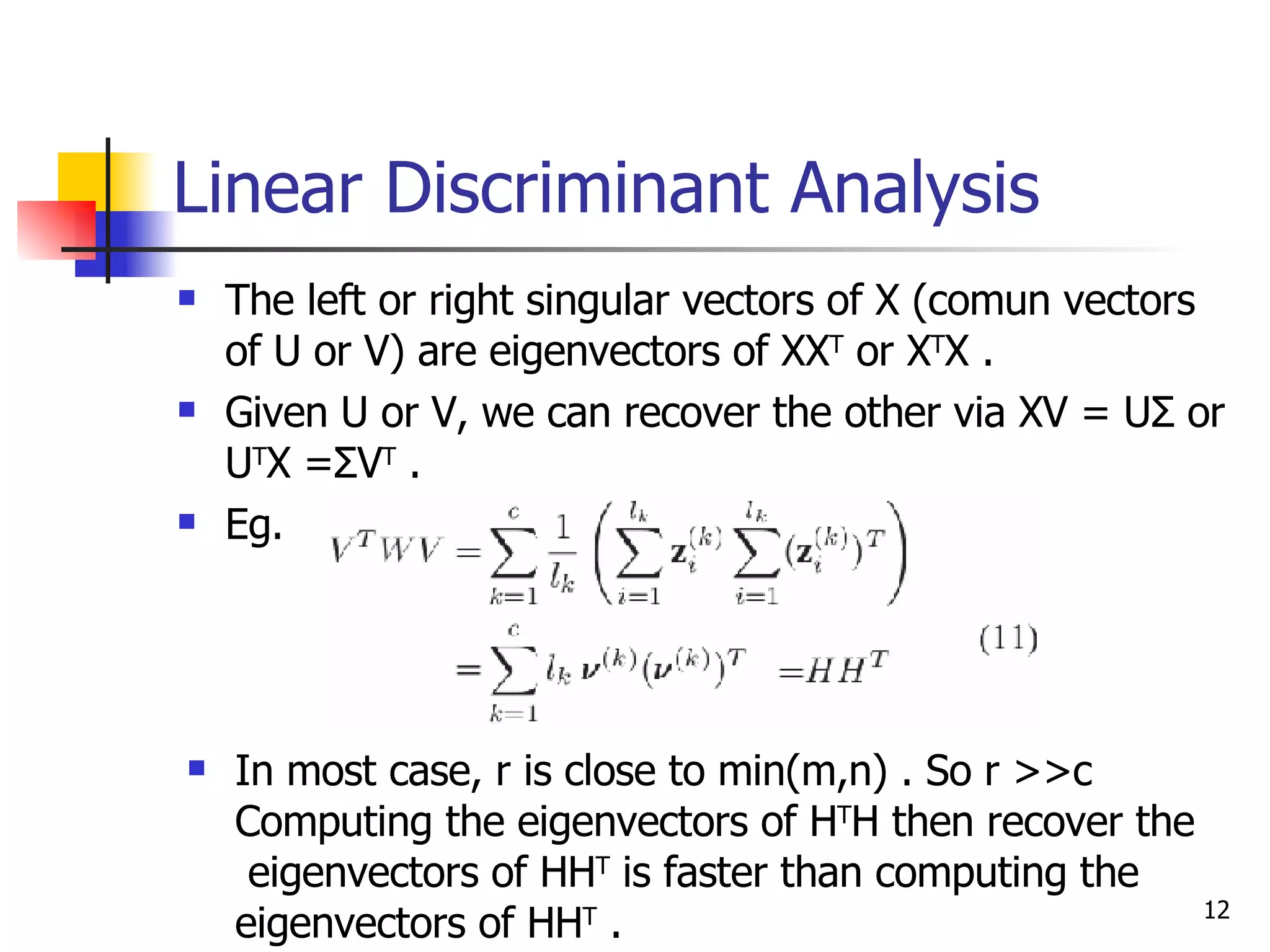

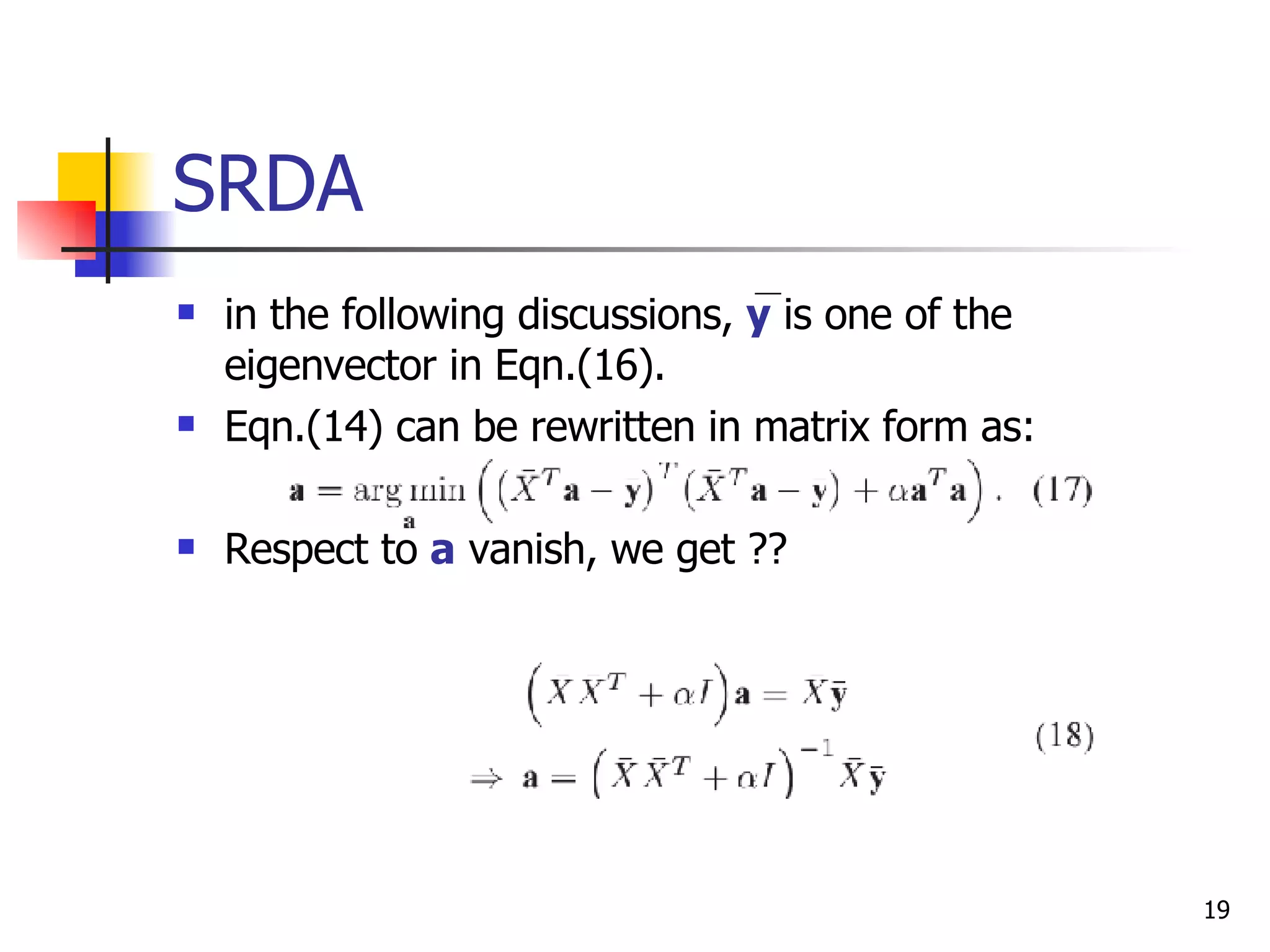

The document proposes a new method called Spectral Regression Discriminant Analysis (SRDA) to address the computational challenges of Linear Discriminant Analysis (LDA) on large, high-dimensional datasets. SRDA combines spectral graph analysis with regression to reduce the time complexity of LDA from quadratic to linear. It works by using the eigenvectors of a graph matrix $W$ built from the class labels (not the within-class scatter matrix) to define the targets of a regression problem. The regression solutions are the projection vectors that maximize class separability. Experiments on four datasets show that SRDA achieves classification accuracy comparable to LDA while scaling to much larger problems.
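The pipeline summarized above can be illustrated with a minimal end-to-end sketch in NumPy and SciPy. The sketch makes several assumptions:

- The data matrix `X` stores samples as columns (n_features × m_samples), matching the slides' notation.
- The regression targets are class-indicator vectors orthogonalized against the all-ones vector. A QR factorization stands in for Gram-Schmidt here.
- Each regularized least-squares problem is solved with SciPy's `lsqr` using `damp = sqrt(alpha)`. This solver only needs matrix-vector products with the data.

`srda_fit`, `alpha`, and the synthetic data are hypothetical illustrations, not the authors' implementation.

```python
import numpy as np
from scipy.sparse.linalg import lsqr


def srda_fit(X, labels, alpha=1.0):
    """Hypothetical SRDA sketch. X is n_features x m_samples (columns are samples)."""
    mu = X.mean(axis=1)
    Xc = X - mu[:, None]                               # centered data
    classes = np.unique(labels)
    m = X.shape[1]
    # e plus c-1 class indicators span the same space as all c indicators
    cols = [np.ones(m)] + [(labels == k).astype(float) for k in classes[:-1]]
    Q, _ = np.linalg.qr(np.column_stack(cols))
    Y = Q[:, 1:]                                       # c-1 responses orthogonal to e
    # One regularized least-squares problem per response:
    #   min ||Xc^T a - y||^2 + alpha ||a||^2   (lsqr's damp is sqrt(alpha))
    A = np.column_stack([lsqr(Xc.T, Y[:, k], damp=np.sqrt(alpha))[0]
                         for k in range(Y.shape[1])])
    return A, mu


# Synthetic example: 3 well-separated classes, 50 features, 60 samples
rng = np.random.default_rng(0)
labels = np.repeat([0, 1, 2], 20)
means = np.zeros((50, 3))
means[[0, 1, 2], [0, 1, 2]] = 4.0
X = means[:, labels] + rng.standard_normal((50, 60))

A, mu = srda_fit(X, labels, alpha=1.0)
Z = A.T @ (X - mu[:, None])                            # (c-1) x m embedding

# Nearest-centroid check on the training data
centroids = np.stack([Z[:, labels == k].mean(axis=1) for k in range(3)], axis=1)
pred = np.argmin(((Z[:, :, None] - centroids[:, None, :]) ** 2).sum(axis=0), axis=1)
acc = (pred == labels).mean()
print(A.shape, acc)
```

The nearest-centroid step is only a convenient sanity check. Training accuracy says nothing about how well the projections generalize.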

![Computational Analysis of LDA](https://image.slidesharecdn.com/200708232079/75/20070823-8-2048.jpg)

Let $\bar{x}_i = x_i - \mu$ denote the centered data point and $\bar{X}^{(k)} = [\bar{x}_1^{(k)}, \dots, \bar{x}_{m_k}^{(k)}]$ denote the centered data matrix of the $k$-th class. $W^{(k)}$ is an $m_k \times m_k$ matrix with all elements equal to $1/m_k$.
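A standard consequence of this definition is that, with samples ordered by class, the block-diagonal matrix $W = \mathrm{diag}(W^{(1)}, \dots, W^{(c)})$ expresses the between-class scatter as $\bar{X} W \bar{X}^T$. This holds because $\bar{X}^{(k)} W^{(k)} \bar{X}^{(k)T} = m_k(\mu_k - \mu)(\mu_k - \mu)^T$, where $\mu_k$ is the mean of class $k$. The NumPy sketch below checks this numerically. The class sizes, dimensions, and random data are arbitrary choices for illustration.

```python
import numpy as np
from scipy.linalg import block_diag

rng = np.random.default_rng(0)
sizes = [3, 5, 4]                          # hypothetical class sizes m_k, so c = 3
n, m = 6, sum(sizes)
X = rng.standard_normal((n, m))            # columns are samples, ordered by class
labels = np.repeat(np.arange(len(sizes)), sizes)

mu = X.mean(axis=1, keepdims=True)         # overall mean, n x 1
Xc = X - mu                                # centered data X̄

# W = diag(W^(1), ..., W^(c)), each block m_k x m_k filled with 1/m_k
W = block_diag(*[np.full((mk, mk), 1.0 / mk) for mk in sizes])

# Between-class scatter computed directly from the class means
Sb_direct = np.zeros((n, n))
for k, mk in enumerate(sizes):
    d = X[:, labels == k].mean(axis=1, keepdims=True) - mu   # mu_k - mu
    Sb_direct += mk * d @ d.T

Sb_graph = Xc @ W @ Xc.T                   # same matrix via the graph W
print(np.allclose(Sb_direct, Sb_graph))    # True
```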

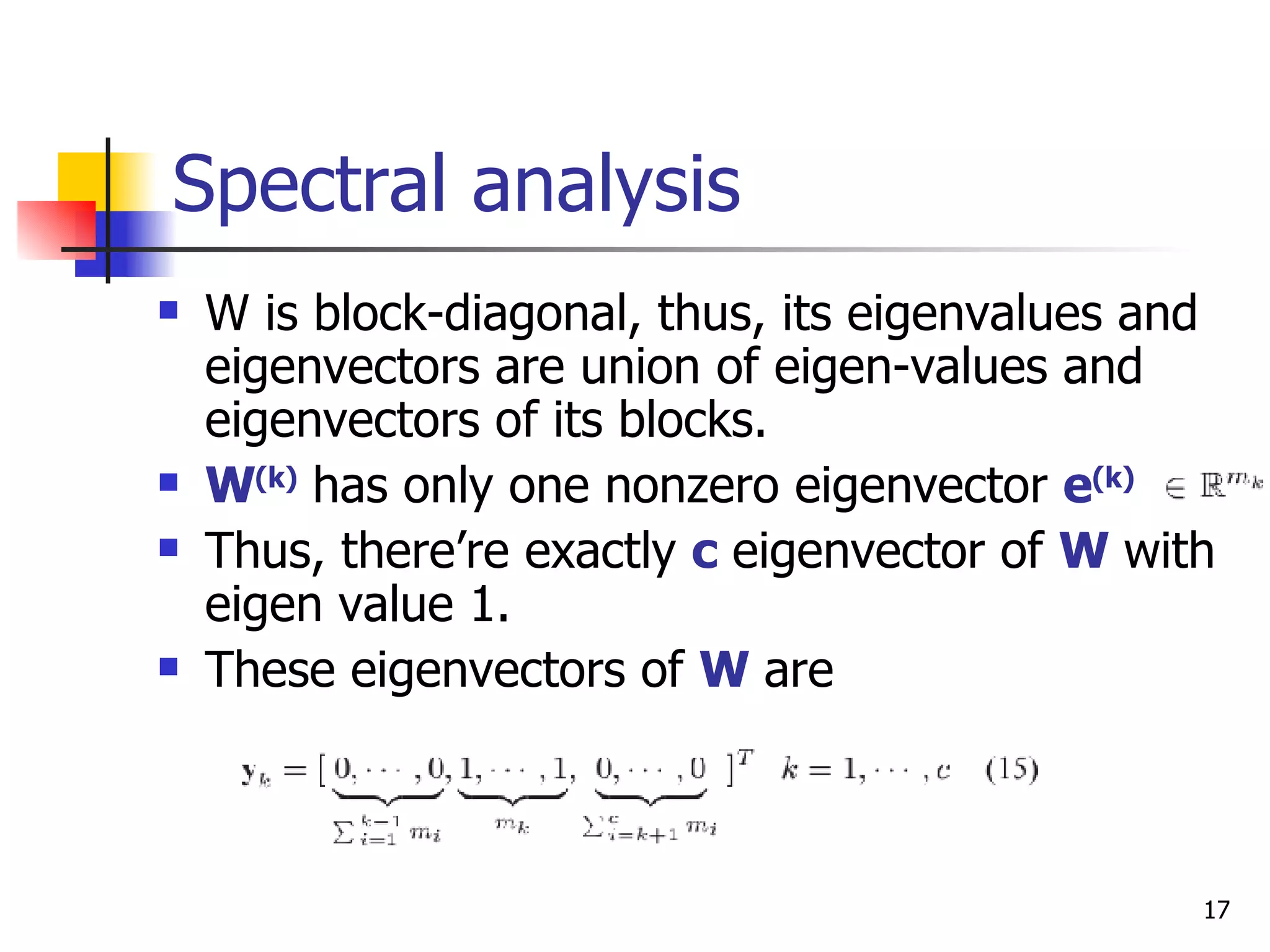

![Spectral analysis](https://image.slidesharecdn.com/200708232079/75/20070823-18-2048.jpg)

To guarantee that there exists a vector $a$ satisfying the linear system $\bar{X}^T a = y$, $y$ must lie in the space spanned by the row vectors of $\bar{X}$. Since $\bar{X}e = 0$, the vector $e = [1, \dots, 1]^T$ is orthogonal to this space. At the same time, $e$ lies in the space spanned by the class-indicator eigenvectors $\{y_k\}$ of $W$ (it is their sum). We therefore pick $e$ as the first eigenvector of $W$ and use the Gram-Schmidt process to orthogonalize the remaining eigenvectors. Removing $e$ leaves exactly $c-1$ eigenvectors of $W$, each orthogonal to $e$ and to one another.
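The construction on this slide can be sketched with an explicit (modified) Gram-Schmidt pass:

1. Start from $e$ and append the class-indicator vectors.
2. Orthonormalize them in order, dropping whichever vector becomes linearly dependent.
3. Remove $e$.

The helper `gram_schmidt`, its tolerance, and the class sizes are hypothetical choices for illustration. The final prints check that the remaining $c-1$ vectors are orthonormal, orthogonal to $e$, and still eigenvectors of $W$ with eigenvalue 1.

```python
import numpy as np
from scipy.linalg import block_diag


def gram_schmidt(vectors, tol=1e-10):
    """Orthonormalize vectors in order (modified Gram-Schmidt), skipping dependent ones."""
    basis = []
    for v in vectors:
        w = np.asarray(v, dtype=float).copy()
        for b in basis:
            w -= (b @ w) * b          # remove the component along each earlier basis vector
        norm = np.linalg.norm(w)
        if norm > tol:                # a (numerically) zero residual means v was dependent
            basis.append(w / norm)
    return basis


sizes = [3, 5, 4]                      # hypothetical class sizes m_k, so c = 3
m = sum(sizes)
labels = np.repeat(np.arange(len(sizes)), sizes)
W = block_diag(*[np.full((mk, mk), 1.0 / mk) for mk in sizes])

e = np.ones(m)
# Class-indicator vectors: eigenvectors of W with eigenvalue 1; e is their sum
indicators = [(labels == k).astype(float) for k in range(len(sizes))]

basis = gram_schmidt([e] + indicators)  # e first; the last indicator is dependent and dropped
Y = np.column_stack(basis[1:])          # remove e, leaving c - 1 response vectors

print(Y.shape)                          # (12, 2)
print(np.allclose(Y.T @ e, 0))          # True: orthogonal to e
print(np.allclose(Y.T @ Y, np.eye(2)))  # True: orthonormal
print(np.allclose(W @ Y, Y))            # True: still eigenvectors of W (eigenvalue 1)
```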

![Theoretical Analysis](https://image.slidesharecdn.com/200708232079/75/20070823-21-2048.jpg)

The $i$-th and $j$-th entries of any vector $y$ in the space spanned by $\{y_k\}$ in Eqn. (15) are equal whenever $x_i$ and $x_j$ belong to the same class. Thus the $i$-th and $j$-th rows of $Y$ are identical, where $Y = [y_1, \dots, y_{c-1}]$. Corollary 3 shows that when the training samples are linearly independent, the $c-1$ projective functions of LDA are exactly the solutions of the $c-1$ linear systems $\bar{X}^T a_k = y_k$.
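The solvability condition can be illustrated numerically. In the sketch below, n = 20 random features and m = 12 samples make the samples linearly independent (almost surely). Each system $\bar{X}^T a_k = y_k$ then has an exact solution, and NumPy's `lstsq` returns the minimum-norm one. The responses come from a QR factorization of $[e, y_1, \dots, y_{c-1}]$, used here in place of explicit Gram-Schmidt (same span, possibly different signs). The sizes and data are arbitrary. The sketch demonstrates solvability and the resulting class collapse only, not the equivalence to LDA itself.

```python
import numpy as np

rng = np.random.default_rng(1)
sizes = [3, 5, 4]                          # hypothetical class sizes, c = 3
m, n = sum(sizes), 20                      # n > m: samples are (generically) linearly independent
labels = np.repeat(np.arange(len(sizes)), sizes)
X = rng.standard_normal((n, m))            # columns are samples
Xc = X - X.mean(axis=1, keepdims=True)     # centered data X̄

# Responses: e and c-1 indicators, orthonormalized by QR, then drop e
cols = [np.ones(m)] + [(labels == k).astype(float) for k in range(len(sizes) - 1)]
Q, _ = np.linalg.qr(np.column_stack(cols))
Y = Q[:, 1:]

# Solve Xc^T a_k = y_k for every k at once (minimum-norm least-squares solution)
A, *_ = np.linalg.lstsq(Xc.T, Y, rcond=None)
print(np.allclose(Xc.T @ A, Y))            # True: every system is solved exactly

Z = A.T @ Xc                               # embedded training samples, (c-1) x m
for k in range(len(sizes)):
    block = Z[:, labels == k]
    print(k, np.allclose(block, block[:, :1]))   # True for every class: it collapses to one point
```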

![Theoretical Analysis](https://image.slidesharecdn.com/200708232079/75/20070823-22-2048.jpg)

Let $A = [a_1, \dots, a_{c-1}]$ be the LDA transformation matrix, which embeds the data points into the LDA subspace as

$$A^T X = A^T(\bar{X} + \mu e^T) = Y^T + A^T \mu e^T.$$

Because the rows of $Y$ are identical within a class and $A^T \mu e^T$ adds the same vector to every column, all training samples of a class are mapped to a single point. The projective functions therefore usually overfit the training set and may not perform well on test samples, so regularization is necessary.
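The embedding identity and a regularized alternative can both be checked in a few lines. The sketch assumes ridge (Tikhonov) regularization, minimizing $\|\bar{X}^T a - y\|^2 + \alpha\|a\|^2$, whose closed-form solution is $a = (\bar{X}\bar{X}^T + \alpha I)^{-1}\bar{X}y$. The value $\alpha = 1$, the sizes, and the data are arbitrary illustrative choices, and QR again stands in for Gram-Schmidt.

```python
import numpy as np

rng = np.random.default_rng(2)
sizes = [3, 5, 4]                           # hypothetical class sizes, c = 3
m, n = sum(sizes), 20                       # more features than samples
labels = np.repeat(np.arange(len(sizes)), sizes)
X = rng.standard_normal((n, m))             # columns are samples
mu = X.mean(axis=1, keepdims=True)          # n x 1 sample mean
Xc = X - mu                                 # centered data X̄
e = np.ones((m, 1))

# c - 1 responses orthogonal to e (QR in place of Gram-Schmidt)
cols = [np.ones(m)] + [(labels == k).astype(float) for k in range(len(sizes) - 1)]
Q, _ = np.linalg.qr(np.column_stack(cols))
Y = Q[:, 1:]

# Unregularized solution of Xc^T a_k = y_k, and the identity A^T X = Y^T + A^T mu e^T
A, *_ = np.linalg.lstsq(Xc.T, Y, rcond=None)
print(np.allclose(A.T @ X, Y.T + A.T @ mu @ e.T))   # True

# Ridge solution: a_k = (Xc Xc^T + alpha I)^{-1} Xc y_k, for all k at once
alpha = 1.0
A_reg = np.linalg.solve(Xc @ Xc.T + alpha * np.eye(n), Xc @ Y)

print(np.linalg.norm(Xc.T @ A - Y))      # close to 0: training targets are fit exactly
print(np.linalg.norm(Xc.T @ A_reg - Y))  # > 0: regularization trades fit for a smaller ||a||
```

With $\alpha > 0$ the training residual is nonzero, so training samples no longer land exactly on one point per class. How much this helps on test samples depends on the data and on the choice of $\alpha$.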